File size: 6,945 Bytes

e36abfb e27b050 e36abfb e27b050 e36abfb e27b050 e36abfb 9f21b15 e36abfb e27b050 e36abfb e27b050 e36abfb e27b050 e36abfb e27b050 e36abfb 1205833 0d20b6b 1205833 0d20b6b e36abfb e27b050 1205833 e27b050 e36abfb 1205833 e36abfb 1205833 e27b050 e36abfb 1205833 e36abfb 1205833 e36abfb 1205833 e36abfb e27b050 1205833 e27b050 e36abfb e27b050 e36abfb 9f21b15 e36abfb e27b050 e36abfb a311207 e27b050 e36abfb 9f21b15 e36abfb e27b050 e36abfb e27b050 9f21b15 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 |

---

library_name: transformers

license: mit

language:

- en

metrics:

- accuracy

---

# Model Card for Logic2Vision

Logic2Vision is a [LLaVA-1.5-13B](https://huggingface.co/llava-hf/llava-1.5-13b-hf) model finetuned on [VisReas dataset](https://arxiv.org/abs/2403.10534) for complex visual reasoning tasks.

## Model Details

### Model Description

Logic2Vision is a [LLaVA-1.5-13B](https://huggingface.co/llava-hf/llava-1.5-13b-hf) model finetuned on [VisReas dataset](https://arxiv.org/abs/2403.10534) for complex visual reasoning tasks.

The model has been finetuned using LoRA to generate python pseudocode outputs to solve a complex visual reasoning tasks.

- **Developed by:** Sangwu Lee and Syeda Akter

- **Model type:** Multimodal (Text + Image)

- **Language(s) (NLP):** English

- **License:** MIT

- **Finetuned from model:** [LLaVA-1.5-13B](https://huggingface.co/llava-hf/llava-1.5-13b-hf)

### Model Sources

- **Repository:** TBD

- **Paper:** [VisReas dataset](https://arxiv.org/abs/2403.10534)

## Uses

The inference method is similar to [LLaVA-1.5-13B](https://huggingface.co/llava-hf/llava-1.5-13b-hf).

### Example images

**Question**

- Q: What else in the image is striped as the rope and the mane to the left of the white clouds?

- A: Question is problematic. There are a couple zebras (i.e. mane/horses) at the back left of the clouds. But, there are clearly no ropes in the image.

**Question**

- Q: What material do the chair and the table have in common?

- A: Wood.

### Code

```python

import torch

from transformers import LlavaProcessor, LlavaForConditionalGeneration

import requests

from PIL import Image

class LLaVACodeTemplate:

prompt = """

USER: <image>

Executes the code and logs the results step-by-step to provide an answer to the question.

Question

{question}

Code

{codes}

ASSISTANT:

Log

"""

answer = """

{logs}

Answer:

{answer}</s>

"""

template = LLaVACodeTemplate()

model = LlavaForConditionalGeneration.from_pretrained("RE-N-Y/logic2vision", torch_dtype=torch.bfloat16, low_cpu_mem_usage=True, cache_dir="/data/tir/projects/tir6/general/sakter/cache")

model.to("cuda")

processor = LlavaProcessor.from_pretrained("RE-N-Y/logic2vision")

processor.tokenizer.pad_token = processor.tokenizer.eos_token

processor.tokenizer.padding_side = "left"

image = Image.open(requests.get("https://huggingface.co/RE-N-Y/logic2vision/resolve/main/zebras.jpg", stream=True).raw)

question = "What else in the image is striped as the rope and the mane to the left of the white clouds?"

codes = """selected_clouds = select(clouds)

filtered_clouds = filter(selected_clouds, ['white'])

related_mane = relate(mane, to the left of, o, filtered_clouds)

selected_rope = select(rope)

pattern = query_pattern(['selected_rope', 'related_mane'])

result = select(objects, attr=pattern)

"""

prompt = template.prompt.format(question=question, codes=codes)

inputs = processor(images=image, text=prompt, return_tensors="pt")

inputs.to("cuda")

generate_ids = model.generate(**inputs, max_new_tokens=256)

output = processor.batch_decode(generate_ids, skip_special_tokens=True)

print(output[0])

# USER:

# Executes the code and logs the results step-by-step to provide an answer to the question.

# Question

# What else in the image is striped as the rope and the mane to the left of the white clouds?

# Code

# selected_clouds = select(clouds)

# filtered_clouds = filter(selected_clouds, ['white'])

# related_mane = relate(mane, to the left of, o, filtered_clouds)

# selected_rope = select(rope)

# pattern = query_pattern(['selected_rope', 'related_mane'])

# result = select(objects, attr=pattern)

# ASSISTANT:

# Log

# ('clouds', ['white'])

# ('clouds', ['white'])

# ('mane', ['striped'])

# ('rope', ['no object'])

# ['the question itself is problematic']

# ['the question itself is problematic']

# Answer:

# the question itself is problematic

image = Image.open(requests.get("https://huggingface.co/RE-N-Y/logic2vision/resolve/main/room.jpg", stream=True).raw)

question = "What material do the chair and the table have in common?"

codes = """selected_chair = select(chair)

selected_table = select(table)

materials = query_material([selected_chair, selected_table])

common_material = common(materials)

"""

prompt = template.prompt.format(question=question, codes=codes)

inputs = processor(images=image, text=prompt, return_tensors="pt")

inputs.to("cuda")

generate_ids = model.generate(**inputs, max_new_tokens=256)

output = processor.batch_decode(generate_ids, skip_special_tokens=True)

print(output[0])

# USER:

# Executes the code and logs the results step-by-step to provide an answer to the question.

# Question

# What material do the chair and the table have in common?

# Code

# selected_chair = select(chair)

# selected_table = select(table)

# materials = query_material([selected_chair, selected_table])

# common_material = common(materials)

# ASSISTANT:

# Log

# ('chair', ['wood'])

# ('table', ['wood'])

# [['wood'], ['wood']]

# ['wood']

# Answer:

# wood

```

## Bias, Risks, and Limitations

The model has been mostly trained on VisReas dataset which is generated from [Visual Genome](https://homes.cs.washington.edu/~ranjay/visualgenome/index.html) dataset.

Furthermore, since the VLM was mostly finetuned to solve visual reasoning tasks by "generating python pseudocode" outputs provided by the user.

Hence, it may struggle to adopt to different prompt styles and code formats.

## Training / Evaluation Details

The model has been finetuned using 2 A6000 GPUs on CMU LTI's Babel cluster. The model has been finetuned using LoRA (`r=8, alpha=16, dropout=0.05, task_type="CAUSAL_LM"`).

LoRA modules were attached to `["q_proj", "v_proj"]`. We use DDP for distributed training and BF16 to speed up training. For more details, check [our paper](https://arxiv.org/abs/2403.10534)!

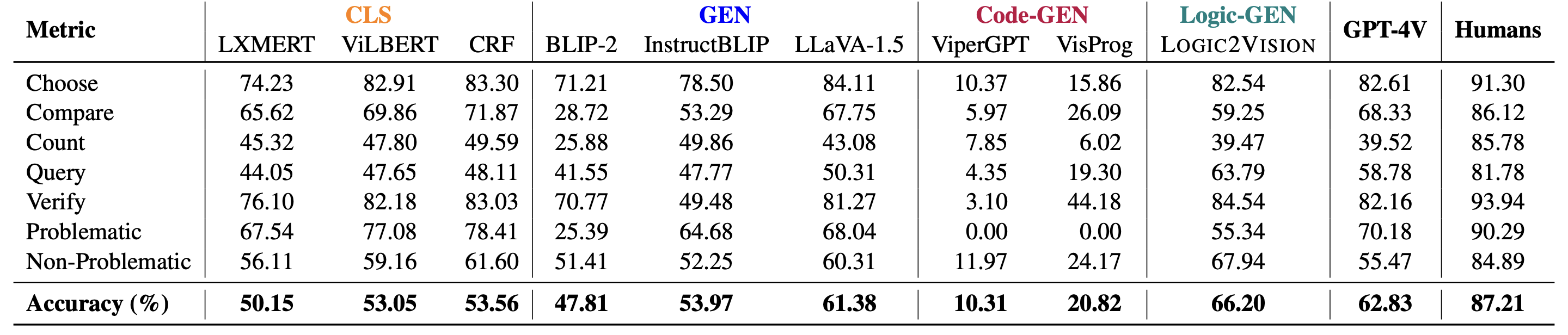

### Results

## Citation

**BibTeX:**

```

@misc{akter2024visreas,

title={VISREAS: Complex Visual Reasoning with Unanswerable Questions},

author={Syeda Nahida Akter and Sangwu Lee and Yingshan Chang and Yonatan Bisk and Eric Nyberg},

year={2024},

eprint={2403.10534},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

## Model Card Authors

- Sangwu Lee - [Google Scholar](https://scholar.google.com/citations?user=FBJeGpAAAAAJ) - [Github](https://github.com/RE-N-Y) - [LinkedIn](https://www.linkedin.com/in/sangwulee/)

- Syeda Akter - [Google Scholar](https://scholar.google.com/citations?hl=en&user=tZFFHYcAAAAJ) - [Github](https://github.com/snat1505027) - [LinkedIn](https://www.linkedin.com/in/syeda-nahida-akter-989770114/) |