---

license: mit

---

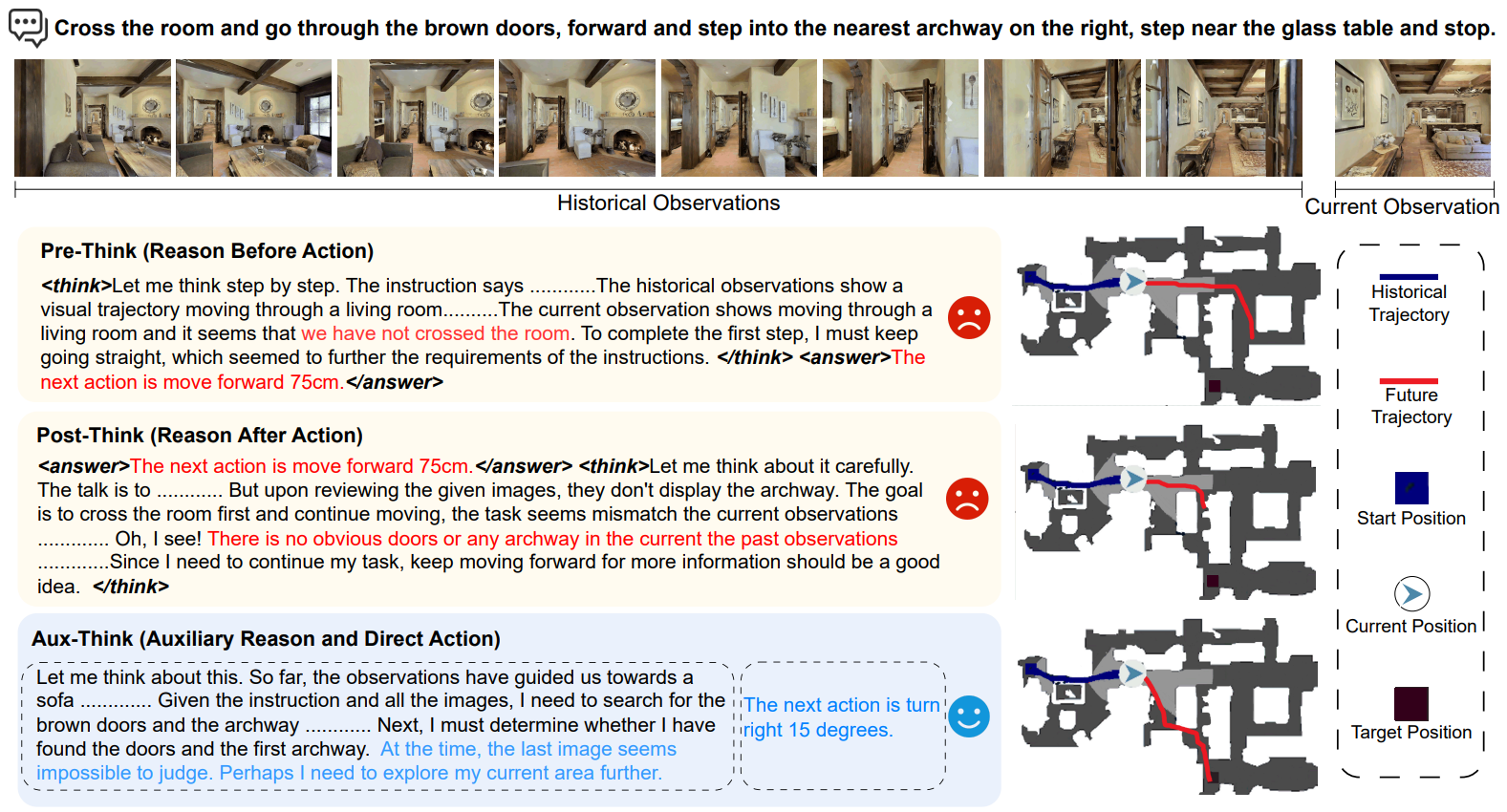

# Aux-Think: Exploring Reasoning Strategies for Data-Efficient Vision-Language Navigation

<div align="center" class="authors">

<a href="https://scholar.google.com/citations?user=IYLvsCQAAAAJ&hl" target="_blank">Shuo Wang</a>,

<a href="https://yongcaiwang.github.io/" target="_blank">Yongcai Wang</a>,

<a>Wanting Li</a>,

<a href="https://scholar.google.com/citations?user=TkwComsAAAAJ&hl=en" target="_blank">Xudong Cai</a>, <br>

<a>Yucheng Wang</a>,

<a>Maiyue Chen</a>,

<a>Kaihui Wang</a>,

<a href="https://scholar.google.com/citations?user=HQfc8TEAAAAJ&hl=en" target="_blank">Zhizhong Su</a>,

<a>Deying Li</a>,

<a href="https://zhaoxinf.github.io/" target="_blank">Zhaoxin Fan</a>

</div>

<div align="center" style="line-height: 3;">

<a href="https://horizonrobotics.github.io/robot_lab/aux-think" target="_blank" style="margin: 2px;">

<img alt="Homepage" src="https://img.shields.io/badge/Homepage-green" style="display: inline-block; vertical-align: middle;"/>

</a>

<a href="https://arxiv.org/abs/2505.11886" target="_blank" style="margin: 2px;">

<img alt="Paper" src="https://img.shields.io/badge/Paper-Arxiv-red" style="display: inline-block; vertical-align: middle;"/>

</a>

</div>

## Introduction

Aux-Think internalizes Chain-of-Thought (CoT) reasoning during training only. The resulting policy performs Vision-Language Navigation without generating explicit reasoning at inference time, which keeps inference efficient, and it achieves strong performance with minimal training data.

## Citation

```bibtex
@article{wang2025think,
  title={Aux-Think: Exploring Reasoning Strategies for Data-Efficient Vision-Language Navigation},
  author={Wang, Shuo and Wang, Yongcai and Li, Wanting and Cai, Xudong and Wang, Yucheng and Chen, Maiyue and Wang, Kaihui and Su, Zhizhong and Li, Deying and Fan, Zhaoxin},
  journal={arXiv preprint arXiv:2505.11886},
  year={2025}
}
```