Mehfil-e-Sukhan: Har Lafz Ek Mehfil

Roman Urdu Poetry Generation Model

A bidirectional LSTM neural network for generating Roman Urdu poetry, trained on a curated dataset of Urdu poetry in Latin script.

Table of Contents

- Overview

- Repository Structure

- Model Architecture

- Dataset

- Data Processing

- Training Methodology

- Text Generation Process

- Results and Performance

- Usage

- Interactive Demo

- Installation

- Future Improvements

- License

- Contact

Overview

Mehfil-e-Sukhan (meaning "Poetry Gathering" in Urdu) is a natural language generation model specifically designed for Roman Urdu poetry creation. This repository contains the complete model implementation, including data preprocessing, tokenization, model architecture, training code, and inference utilities.

The model uses a Bidirectional LSTM architecture trained on a dataset of approximately 1,300 lines of Roman Urdu poetry to learn patterns, rhythms, and stylistic elements of Urdu poetry written in Latin script.

Repository Structure

The repository contains the following key files:

- poetry_generation.ipynb: Complete notebook with data preparation, model definition, training code, and generation utilities

- model_weights.pth: Trained model weights (243 MB)

- urdu_sp.model: SentencePiece tokenizer model (429 KB)

- urdu_sp.vocab: SentencePiece vocabulary file (181 KB)

- all_texts.txt: Preprocessed dataset used for training (869 KB)

- requirements.txt: Required Python packages

- .gitattributes: Git LFS tracking for large files

Model Architecture

The poetry generation model uses a Bidirectional LSTM architecture:

- Embedding Layer: 512-dimensional embeddings

- BiLSTM Layers: 3 stacked bidirectional LSTM layers with 768 hidden units in each direction

- Dropout: 0.2 dropout rate for regularization

- Output Layer: Linear projection to vocabulary size (12,000 tokens)

This architecture was chosen to capture both preceding and following context in poetry lines, which is essential for maintaining coherence and style in the generated text.
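As a rough sanity check on the layer sizes listed above, the parameter count can be worked out by hand. The sketch below is not taken from the notebook. It assumes the PyTorch `nn.LSTM` parameter layout (four gates, two bias vectors per direction), an output layer that reads the concatenated forward and backward states, and float32 weights. The helper names `lstm_layer_params` and `count_parameters` are hypothetical.

```python
# Layer sizes as stated in the Model Architecture section.
VOCAB_SIZE = 12_000
EMBED_DIM = 512
HIDDEN_DIM = 768
NUM_LAYERS = 3


def lstm_layer_params(input_size, hidden_size, bidirectional=True):
    """Parameters in one LSTM layer, assuming the PyTorch nn.LSTM layout."""
    # Four gates, each with input weights, recurrent weights, and two bias vectors.
    per_direction = 4 * (hidden_size * (input_size + hidden_size) + 2 * hidden_size)
    return per_direction * (2 if bidirectional else 1)


def count_parameters(vocab_size=VOCAB_SIZE, embed_dim=EMBED_DIM,
                     hidden_dim=HIDDEN_DIM, num_layers=NUM_LAYERS):
    embedding = vocab_size * embed_dim
    lstm = 0
    for layer in range(num_layers):
        # Layers after the first read the concatenated forward/backward states.
        input_size = embed_dim if layer == 0 else 2 * hidden_dim
        lstm += lstm_layer_params(input_size, hidden_dim)
    # Assumed: a linear projection from 2 * hidden_dim to the vocabulary, with bias.
    output = 2 * hidden_dim * vocab_size + vocab_size
    return embedding + lstm + output


total = count_parameters()
print(f"{total:,} parameters, about {total * 4 / 1e6:.0f} MB as float32")
```

Under these assumptions the total comes to roughly 60.8 million parameters, or about 243 MB at four bytes each, which is consistent with the size listed for model_weights.pth.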

Dataset

The model is trained on the Roman Urdu Poetry dataset, which contains approximately 1,300 lines of Urdu poetry written in Latin script (Roman Urdu). The dataset includes works from various poets and covers a range of poetic styles and themes.

Dataset Source: Roman Urdu Poetry Dataset on Kaggle

Data Processing

Raw poetry lines undergo several preprocessing steps:

- Diacritic Removal: Unicode diacritics are normalized and removed

- Text Cleaning: Excessive punctuation, symbols, and repeated spaces are eliminated

- Tokenization: SentencePiece BPE (Byte Pair Encoding) tokenization with a vocabulary size of 12,000

The tokenization approach allows the model to handle out-of-vocabulary words by breaking them into subword units, which is particularly important for Roman Urdu where spelling variations are common.
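The first two preprocessing steps can be sketched with the standard library alone. This is an illustrative approximation, not the notebook's code: the function names `strip_diacritics` and `clean_line` are hypothetical, and lowercasing and the exact set of characters kept (letters, digits, apostrophes, hyphens) are assumptions.

```python
import re
import unicodedata


def strip_diacritics(text: str) -> str:
    """Remove combining marks after Unicode decomposition."""
    # NFKD splits a character such as "ā" into "a" plus a combining macron.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def clean_line(text: str) -> str:
    """Normalize one poetry line before tokenization (assumed rules)."""
    text = strip_diacritics(text).lower()
    # Replace anything that is not a letter, digit, apostrophe, hyphen, or space.
    text = re.sub(r"[^a-z0-9'\-\s]", " ", text)
    # Collapse runs of whitespace into a single space.
    text = re.sub(r"\s+", " ", text)
    return text.strip()


print(clean_line("Dil-e-nādāñ   tujhe huā kyā hai!!"))
```

The cleaned lines would then be passed to the SentencePiece tokenizer described above.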

Training Methodology

The model was trained with the following parameters:

- Train/Validation/Test Split: 80% / 10% / 10%

- Loss Function: Cross-Entropy with ignore_index for padding tokens

- Optimizer: Adam with learning rate 1e-3 and weight decay 1e-5

- Learning Rate Schedule: StepLR with step size 2 and gamma 0.5

- Gradient Clipping: Maximum norm of 5.0

- Epochs: 10 (sufficient for convergence on this dataset size)

- Batch Size: 64

Training was performed on both CPU and GPU environments, with automatic device detection.
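To make the learning rate schedule above concrete, the following sketch reproduces the StepLR arithmetic in plain Python. It assumes the scheduler is stepped once per epoch, so the rate is halved after every second epoch. The helper `step_lr` is a hypothetical name, not a function from the notebook.

```python
def step_lr(base_lr, epoch, step_size=2, gamma=0.5):
    """Learning rate for a zero-based epoch under a StepLR-style decay."""
    return base_lr * gamma ** (epoch // step_size)


# Ten epochs starting from the stated base rate of 1e-3.
schedule = [step_lr(1e-3, epoch) for epoch in range(10)]

for epoch, lr in enumerate(schedule, start=1):
    print(f"epoch {epoch:2d}: lr = {lr:.2e}")
```

With these settings the rate falls from 1e-3 in the first two epochs to 6.25e-5 in the last two.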

Text Generation Process

Poetry generation uses nucleus sampling (top-p) with adjustable parameters:

- Temperature: Controls randomness in word selection (default: 1.2)

- Top-p (nucleus) sampling: Limits token selection to the smallest set whose cumulative probability exceeds the threshold (default: 0.85)

- Formatting: Automatically formats output with 6 words per line for aesthetic presentation

This sampling approach balances creativity and coherence in the generated text, allowing for controlled variation in the output.
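The sampling steps described above can be illustrated in plain Python on a list of raw scores (logits). This is a minimal sketch under stated assumptions, not the notebook's `generate_poetry_nucleus`: all function names here are hypothetical, and the nucleus is cut off as soon as the cumulative probability reaches or exceeds `top_p`.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.2):
    """Turn logits into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nucleus_indices(probs, top_p=0.85):
    """Indices of the smallest high-probability set whose mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept


def sample_token(logits, temperature=1.2, top_p=0.85, rng=random):
    """Sample one token index from the nucleus of the distribution."""
    probs = softmax_with_temperature(logits, temperature)
    kept = nucleus_indices(probs, top_p)
    # random.choices renormalizes the weights of the kept tokens.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


def format_lines(words, words_per_line=6):
    """Break a list of words into lines of a fixed number of words."""
    chunks = [words[i:i + words_per_line]
              for i in range(0, len(words), words_per_line)]
    return "\n".join(" ".join(chunk) for chunk in chunks)
```

Lowering `top_p` shrinks the candidate set and makes the output more conservative, while raising `temperature` spreads probability toward less likely tokens.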

Results and Performance

The final model achieves a test loss of approximately 3.17, which is reasonable considering the dataset size. The model demonstrates the ability to:

- Generate contextually relevant continuations from a seed word

- Maintain some aspects of Urdu poetic style in Roman script

- Produce text with thematic consistency

The limited dataset size (1,300 lines) does result in some repetitiveness in longer generations, which could be improved with additional training data.
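One way to put the test loss in perspective is to convert it to perplexity and compare it with a model that guesses uniformly over the vocabulary. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which is the PyTorch default. The variable names are illustrative only.

```python
import math

test_loss = 3.17      # reported test loss (assumed mean cross-entropy in nats)
vocab_size = 12_000   # SentencePiece vocabulary size

# Perplexity is the exponential of the mean cross-entropy.
perplexity = math.exp(test_loss)

# A uniform guess over the vocabulary has loss ln(vocab_size).
uniform_loss = math.log(vocab_size)

print(f"perplexity: {perplexity:.1f}")
print(f"uniform baseline loss: {uniform_loss:.2f}")
```

Under that assumption the perplexity is about 23.8, against a uniform baseline loss of about 9.39 (a perplexity of 12,000).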

Usage

To use the model for generating poetry:

# Import required libraries (BiLSTMLanguageModel and generate_poetry_nucleus are defined in poetry_generation.ipynb)
import torch
import sentencepiece as spm

# Select the GPU when available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Load the SentencePiece model
sp = spm.SentencePieceProcessor()
sp.load("urdu_sp.model")

# Load the BiLSTM model
model = BiLSTMLanguageModel(vocab_size=sp.get_piece_size(),
                            embed_dim=512,
                            hidden_dim=768,
                            num_layers=3,
                            dropout=0.2)
model.load_state_dict(torch.load("model_weights.pth", map_location=device))
model.eval()

# Generate poetry
start_word = "ishq"  # Example: "love"
generated_poetry = generate_poetry_nucleus(model, sp, start_word,
                                           num_words=12,
                                           temperature=1.2,
                                           top_p=0.85)
print(generated_poetry)

Interactive Demo

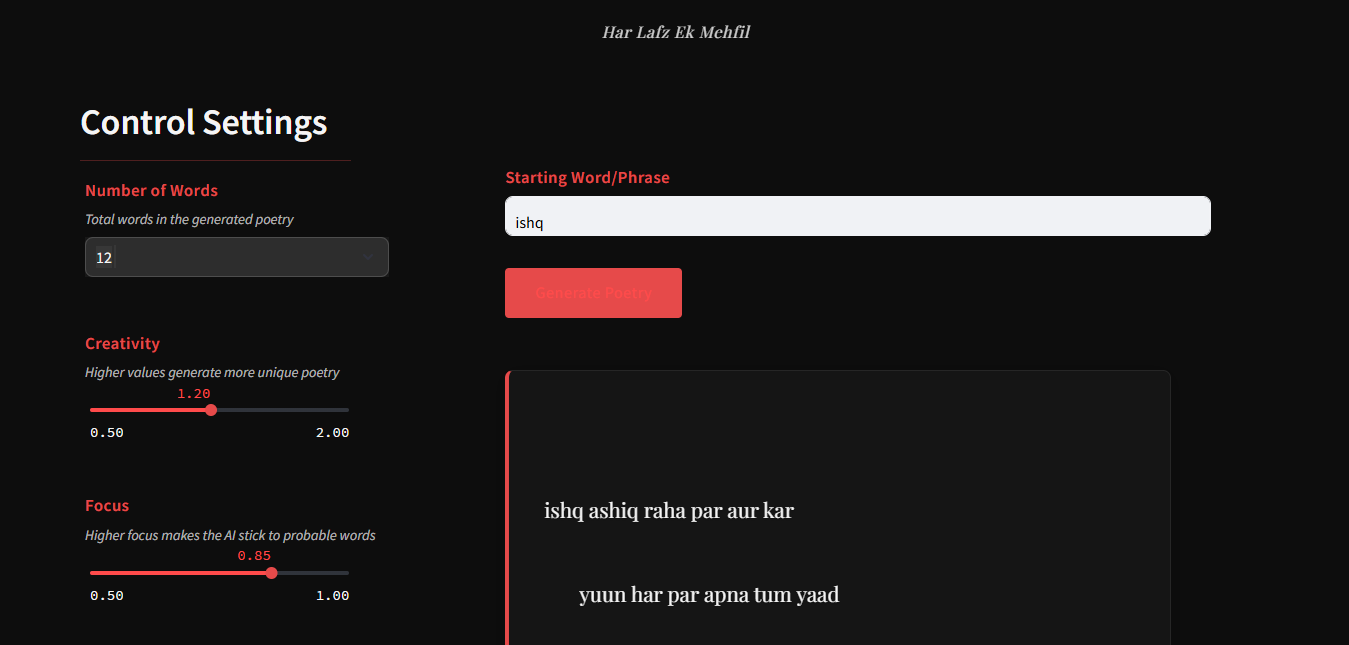

An interactive demo of this model is available as a Streamlit application, which provides a user-friendly interface to generate Roman Urdu poetry with adjustable parameters:

Mehfil-e-Sukhan Demo on HuggingFace Spaces

The Streamlit app allows users to:

- Enter a starting word or phrase

- Adjust the number of words to generate

- Control the creativity (temperature) and focus (top-p) parameters

- View the formatted poetry output in an elegant interface

Installation

To set up this model locally:

- Clone the repository

- Install the required dependencies:

pip install -r requirements.txt

- Open and run poetry_generation.ipynb to explore the complete implementation

The required packages include:

- torch

- sentencepiece

- pandas

- scikit-learn

- numpy

Future Improvements

Potential enhancements for the model include:

- Expanded Dataset: Increasing the training data well beyond the current 1,300 lines for improved diversity and coherence

- Transformer Architecture: Replacing BiLSTM with a Transformer-based model for better long-range dependencies

- Style Control: Adding mechanisms to control specific poetic styles or meters

- Multi-Script Support: Extending the model to handle Urdu in both Roman and Nastaliq script

- Fine-Tuning Options: Adding more parameters to control the generation style and themes

License

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.

Contact

- LinkedIn: Muhammad Huzaifa Saqib

- GitHub: zaiffishiekh01

- Email: [email protected]