Please find the slides for the presentation at this link.

How to use

Caution: a prefix must be added to the prompt

Note that, because of the dataset we fine-tuned on, the style only transfers when the prompt starts with a suitable prefix.

Example prefixes:

Still from the Anime, ...

Still from Anime Series, ...

Anime Series still of ...

We encourage you to try different prompts with different prefixes.
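Because the style only transfers with a prefix, it can help to make sure every prompt starts with one before calling the pipeline. The following is a minimal sketch under that assumption: `STYLE_PREFIXES` and `add_style_prefix` are hypothetical names, and the prefixes are simply the examples listed above.

```python
# Example prefixes taken from this card; the helper itself is hypothetical.
STYLE_PREFIXES = (
    "Still from the Anime, ",
    "Still from Anime Series, ",
    "Anime Series still of ",
)


def add_style_prefix(subject: str, prefix: str = STYLE_PREFIXES[0]) -> str:
    """Prepend a style prefix unless the prompt already starts with a known one."""
    subject = subject.strip()
    # Compare case-insensitively and ignore the prefix's trailing ", " or " ",
    # so variants such as "Still from the Anime Character, ..." are left alone.
    already_prefixed = any(
        subject.lower().startswith(p.rstrip(", ").lower()) for p in STYLE_PREFIXES
    )
    return subject if already_prefixed else prefix + subject


print(add_style_prefix("Elon Musk"))  # Still from the Anime, Elon Musk
```

Prompts that already carry a prefix pass through unchanged, so the helper can be applied to any prompt list without double-prefixing.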

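```python
import torch
from diffusers import DiffusionPipeline

# Load the SDXL base model in half precision (assumes a CUDA GPU is available)
pipe = DiffusionPipeline.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16
).to("cuda")

# Apply the Conan-style LoRA weights on top of the base model
pipe.load_lora_weights("yifei28/sdxl-base-1.0-Conan-lora")

# The prompt must start with a style prefix for the style to transfer
prompt = "Still from the Anime, Elon Musk"
image = pipe(prompt).images[0]
image.save("elon_musk_conan.png")
```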

Background

- Focus: Adapt Stable Diffusion XL (SDXL) to generate images in Conan (Anime) style

- Dataset: high-resolution Conan images with GPT-4-generated descriptions (Hugging Face)

- Framework: SDXL is a latent diffusion model built on Denoising Diffusion Probabilistic Models (DDPM), which generate an image by iteratively denoising random noise, progressively refining the result
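To make the DDPM idea concrete, here is a minimal NumPy sketch of the closed-form forward noising process and the noise-prediction MSE objective that diffusion models are typically trained with. It assumes the linear β schedule from the original DDPM paper (1e-4 to 0.02 over 1,000 steps; SDXL itself uses a different schedule) and a hypothetical `predict_noise` stand-in for the denoising network, which in SDXL is a UNet operating on VAE latents rather than pixels.

```python
import numpy as np

# Linear beta schedule as in the original DDPM paper (assumption; SDXL uses its own)
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas_cumprod = np.cumprod(1.0 - betas)  # \bar{alpha}_t for t = 0..T-1


def q_sample(x0, t, noise):
    """Forward process: sample x_t ~ q(x_t | x_0) in closed form."""
    a_bar = alphas_cumprod[t]
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise


def predict_noise(x_t, t):
    """Hypothetical stand-in for the denoising network (a UNet in SDXL)."""
    return np.zeros_like(x_t)


def diffusion_loss(x0, rng):
    """MSE between the true noise and the predicted noise at a random timestep."""
    t = rng.integers(0, T)
    noise = rng.standard_normal(x0.shape)
    x_t = q_sample(x0, t, noise)
    return np.mean((noise - predict_noise(x_t, t)) ** 2)


rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 128, 128))  # shape of an SDXL latent for a 1024px image
print(diffusion_loss(x0, rng))
```

With the zero predictor the loss stays near 1 (the variance of the Gaussian noise); training drives it down by teaching the network to recover the noise that was added.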

Dataset Overview

- Shape: 1,790 rows × 2 columns (image, text)

- Images: high-resolution labeled images, ranging from about 890 to 1,300 px

- Text: GPT-4-generated captions, which make the dataset well suited for refining SDXL's text-image alignment in this style

- No extra data transformation was needed

Model Selection & Metrics

- Pretrained Model: Stable Diffusion is a text-to-image generative model based on diffusion processes. SDXL (Stable Diffusion XL), an advanced version of Stable Diffusion, offers improved image quality at a higher native resolution (1024 × 1024) and enhanced text-image alignment.

- Fine-tuning: LoRA (Low-Rank Adaptation) is a parameter-efficient fine-tuning method that adapts SDXL to our specific domain of Conan-style image generation. It freezes the pretrained weights and trains only small low-rank update matrices, which preserves pretrained knowledge and makes fine-tuning faster and more cost-effective.
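The LoRA idea can be sketched in a few lines of NumPy: the pretrained weight W stays frozen, and only two small matrices A (r × d_in) and B (d_out × r) are trained, so the effective weight is W + (α/r)·BA. This is an illustrative sketch, not the diffusers/PEFT implementation; the 1280 × 1280 layer size and α = r are assumptions. It also shows how raising the rank from 4 to 16 changes the number of trainable parameters.

```python
import numpy as np


class LoRALinear:
    """Linear layer with a frozen weight W plus a trainable low-rank update B @ A."""

    def __init__(self, W, rank, alpha=None, rng=None):
        rng = np.random.default_rng(0) if rng is None else rng
        d_out, d_in = W.shape
        self.W = W  # frozen pretrained weight
        self.A = rng.standard_normal((rank, d_in)) * 0.01  # trainable, small random init
        self.B = np.zeros((d_out, rank))  # trainable, zero init so the update starts at 0
        self.scale = (alpha if alpha is not None else rank) / rank

    def __call__(self, x):
        # y = W x + (alpha / r) * B (A x); the full d_out x d_in update is never formed
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def num_trainable(self):
        return self.A.size + self.B.size


d = 1280  # hypothetical layer width
W = np.random.default_rng(1).standard_normal((d, d))
for r in (4, 16):
    layer = LoRALinear(W, rank=r)
    print(f"rank {r}: {layer.num_trainable():,} trainable vs {W.size:,} frozen")
```

Because B starts at zero, the adapted layer initially behaves exactly like the pretrained one, and each rank step adds only 2·d parameters per layer.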

- Metrics: FID (Fréchet Inception Distance) measures the similarity between two sets of images; lower is better. It is commonly used to evaluate the sample quality of generative models such as Generative Adversarial Networks. FID is computed as the Fréchet distance between two Gaussians fitted to Inception-network feature representations of each set.
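The Fréchet distance between two Gaussians N(μ₁, Σ₁) and N(μ₂, Σ₂) is ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). The NumPy sketch below computes it from two feature matrices. In real FID the features are Inception-v3 pool activations, which this sketch does not compute; the random features in the example are placeholders. For the matrix square root it uses the identity Tr((Σ₁Σ₂)^{1/2}) = Tr((Σ₁^{1/2}Σ₂Σ₁^{1/2})^{1/2}), which holds for symmetric positive semi-definite covariances.

```python
import numpy as np


def _sqrtm_psd(M):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(M)
    vals = np.clip(vals, 0.0, None)  # remove tiny negative values from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(feats1, feats2):
    """Fréchet distance between Gaussians fitted to two (n_samples, dim) feature sets."""
    mu1, mu2 = feats1.mean(axis=0), feats2.mean(axis=0)
    s1 = np.atleast_2d(np.cov(feats1, rowvar=False))
    s2 = np.atleast_2d(np.cov(feats2, rowvar=False))
    root1 = _sqrtm_psd(s1)
    # Tr((S1 S2)^{1/2}) computed through a symmetric matrix for numerical stability
    covmean_trace = np.trace(_sqrtm_psd(root1 @ s2 @ root1))
    return float(np.sum((mu1 - mu2) ** 2) + np.trace(s1) + np.trace(s2) - 2.0 * covmean_trace)


rng = np.random.default_rng(0)
real = rng.standard_normal((500, 8))        # placeholder "real" features
fake = rng.standard_normal((500, 8)) + 0.5  # placeholder "generated" features
print(frechet_distance(real, fake))
```

Identical feature sets give a distance of 0, and any shift in mean or covariance increases it, which is why a lower FID indicates generated images closer to the reference set.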

Model Engineering and Fine-tuning Logic

- Baseline setup with default parameters:

Learning Rate = 1e-4

LoRA Rank = 4

Batch Size = 1

Mixed Precision = fp16

LR Warm-up Steps = 0

Results:

- Style transfer succeeded

- However, we encountered issues such as twisted faces and low-quality facial features.

Adjustments for More Stable Gradients

- Lower the learning rate to 1e-5

- Switch mixed precision to bf16: offers a larger numerical range than fp16 with the same memory usage

- Increase the batch size to 2: allows more data per training iteration

- Increase the LoRA rank to 16: increases the number of learnable parameters

- Add 500 warm-up steps: helps stabilize gradients by starting with a smaller learning rate and ramping it up
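The claim that bf16 offers a larger numerical range at the same memory cost follows from the bit layouts: both formats use 16 bits, but fp16 splits them into 5 exponent and 10 mantissa bits, while bf16 uses 8 exponent and 7 mantissa bits (the same exponent width as fp32). The short pure-Python calculation below derives each format's largest finite value and machine epsilon from those layouts; it illustrates the trade-off and is not part of the training code.

```python
def float_format_stats(exp_bits: int, mantissa_bits: int):
    """Largest finite value and machine epsilon of an IEEE-754-style binary format."""
    bias = 2 ** (exp_bits - 1) - 1
    max_exponent = (2 ** exp_bits - 2) - bias  # all-ones exponent is reserved for inf/NaN
    max_finite = (2 - 2.0 ** -mantissa_bits) * 2.0 ** max_exponent
    epsilon = 2.0 ** -mantissa_bits  # gap between 1.0 and the next representable value
    return max_finite, epsilon


for name, e, m in [("fp16", 5, 10), ("bf16", 8, 7)]:
    max_finite, eps = float_format_stats(e, m)
    print(f"{name}: 1 sign + {e} exponent + {m} mantissa = {1 + e + m} bits, "
          f"max ≈ {max_finite:.4g}, epsilon = {eps:.3g}")
```

The cost is precision: bf16's epsilon (2⁻⁷) is 8× coarser than fp16's (2⁻¹⁰), but large or tiny gradient values are far less likely to overflow or underflow.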

FID Score Results (lower is better)

- LoRA fine-tuned: 99.7 (-15% relative to the base model)

- Base model: 117.4
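The -15% figure is the relative change of the fine-tuned model's FID with respect to the base model's:

```python
base_fid = 117.4
lora_fid = 99.7

# FID is lower-is-better, so a negative relative change is an improvement
relative_change = (lora_fid - base_fid) / base_fid
print(f"{relative_change:.1%}")  # -15.1%
```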

Current Model Problems and Solutions

Current Problems:

- Abnormal human features persist: twisted faces and low-quality body and facial features.

- The current text captions were generated from the images by GPT models, so their quality cannot be guaranteed

- Most of them start with “Still from animates”, “Animated characters”, etc.

- In our experiments, simple prompts yield better results than prompts with additional predicates

- i.e., prompts containing only nouns and prepositions

- The current CLIP tokenizer accepts at most 77 tokens, so longer prompts are truncated
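The 77-token limit counts CLIP's start and end tokens, so only 75 positions remain for the prompt itself, and anything longer is cut off. The sketch below illustrates that truncation with a hypothetical whitespace "tokenizer" (`toy_tokenize`). The real CLIP tokenizer uses byte-pair encoding, so a single word can become several tokens and the cutoff arrives sooner than a word count suggests.

```python
MAX_LEN = 77  # CLIP context length, including the start and end tokens
BOS, EOS = "<|startoftext|>", "<|endoftext|>"


def toy_tokenize(prompt: str, max_len: int = MAX_LEN) -> list[str]:
    """Hypothetical word-level stand-in for CLIP tokenization with truncation."""
    words = prompt.split()
    kept = words[: max_len - 2]  # reserve two slots for the start/end tokens
    return [BOS, *kept, EOS]


long_prompt = "Still from the Anime, " + " ".join(f"detail{i}" for i in range(100))
tokens = toy_tokenize(long_prompt)
dropped = len(long_prompt.split()) - (len(tokens) - 2)
print(len(tokens), "tokens kept;", dropped, "words silently dropped")
```

Everything past the limit has no influence on the generated image, which is one reason short prompts behave more predictably with this model.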

Solutions for Future Improvement:

- Change loss function to align more with human visual perception

- Currently using MSE loss (the standard for diffusion models)

- Incorporate a perceptual loss, which captures more abstract information such as texture, structure, and style

- Increase the hardware and resource budget to handle more complex prompts

- Upgrade to a better GPU, use a larger batch size, and increase the LoRA rank to 64

- Write higher-quality text captions

- Improve linguistic diversity by using a wider variety of syntactic patterns and structures

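A perceptual loss compares images in the feature space of a fixed network instead of pixel by pixel. The NumPy sketch below adds such a term to the standard MSE. The feature extractor here (horizontal and vertical finite differences) is a hypothetical stand-in for the pretrained VGG-style features typically used (for example in LPIPS), and the weight `lam` is an arbitrary assumption. In a latent diffusion model the term would also have to be applied to decoded predictions of the clean image, since the default loss is computed on predicted noise in latent space.

```python
import numpy as np


def toy_features(img):
    """Hypothetical feature extractor: horizontal and vertical finite differences.

    A real perceptual loss would use activations of a pretrained network (e.g. VGG).
    """
    dx = img[:, 1:] - img[:, :-1]
    dy = img[1:, :] - img[:-1, :]
    return dx, dy


def perceptual_term(pred, target):
    """Mean squared distance between the feature maps of two images."""
    return sum(
        np.mean((fp - ft) ** 2)
        for fp, ft in zip(toy_features(pred), toy_features(target))
    )


def combined_loss(pred, target, lam=0.1):
    """Pixel-wise MSE plus a weighted feature-space (perceptual-style) term."""
    return np.mean((pred - target) ** 2) + lam * perceptual_term(pred, target)


target = np.zeros((8, 8))
shifted = target + 1.0  # uniform brightness change, no structural change
dotted = target.copy()
dotted[::2, ::2] = 1.0  # high-frequency dot pattern, a structural change
for name, img in [("uniform shift", shifted), ("dot pattern", dotted)]:
    print(name, "MSE:", np.mean((img - target) ** 2), "feature term:", perceptual_term(img, target))
```

Pixel MSE rates the dot pattern as closer to the target than the uniform shift, while the feature term reacts only to the change in structure; `lam` controls how much that structural signal shapes training.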

Future Opportunities

- Anime & Game Industry

- AI-assisted character design → faster concept art creation.

- Style transfer for anime production & comics.

- Personalized Content Creation

- Custom AI-generated avatars, wallpapers, and artworks.

- AI-powered manga generation based on text input.

- Enhancing Model Performance

- Diffusion Transformers (DiT) for better generation quality.

- 3D model adaptation for AI-generated anime in gaming/metaverse.

Limitations and bias

The model still produces abnormal human features, such as twisted faces and low-quality body and facial features. It works best with simple prompts and cannot handle long prompts well.

Training details

--validation_epochs=1

--resolution=1024

--train_text_encoder

--train_batch_size=1

--num_train_epochs=10

--checkpointing_steps=1000

--gradient_accumulation_steps=8

--learning_rate=1e-05

--lr_warmup_steps=500

--dataloader_num_workers=8

--allow_tf32

--mixed_precision="bf16"

--rank=16
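The flags above determine the training length and learning-rate ramp. The sketch below works through that arithmetic under stated assumptions: the 1,790 training examples from the dataset overview, a single GPU, and optimizer steps per epoch computed as ceil(batches / gradient_accumulation_steps). The linear warm-up to a constant rate in `lr_at` is also an assumption, since the scheduler type is not listed in the flags.

```python
import math

# Values from the training flags; dataset size from the dataset overview.
num_examples = 1790
train_batch_size = 1
gradient_accumulation_steps = 8
num_train_epochs = 10
learning_rate = 1e-5
lr_warmup_steps = 500
checkpointing_steps = 1000
num_gpus = 1  # assumption: single-GPU training

effective_batch = train_batch_size * gradient_accumulation_steps * num_gpus
batches_per_epoch = math.ceil(num_examples / (train_batch_size * num_gpus))
steps_per_epoch = math.ceil(batches_per_epoch / gradient_accumulation_steps)
total_steps = steps_per_epoch * num_train_epochs


def lr_at(step: int) -> float:
    """Assumed schedule: linear warm-up to learning_rate, then constant."""
    if step < lr_warmup_steps:
        return learning_rate * step / lr_warmup_steps
    return learning_rate


print("effective batch size:", effective_batch)
print("optimizer steps per epoch:", steps_per_epoch, "| total:", total_steps)
print("checkpoint steps:", list(range(checkpointing_steps, total_steps + 1, checkpointing_steps)))
print("LR at step 250:", lr_at(250))
```

Under these assumptions, gradient accumulation turns a per-device batch of 1 into an effective batch of 8, and the 500 warm-up steps cover roughly the first fifth of training.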

LoRA text2image fine-tuning - yifei28/sdxl-base-1.0-Conan-lora

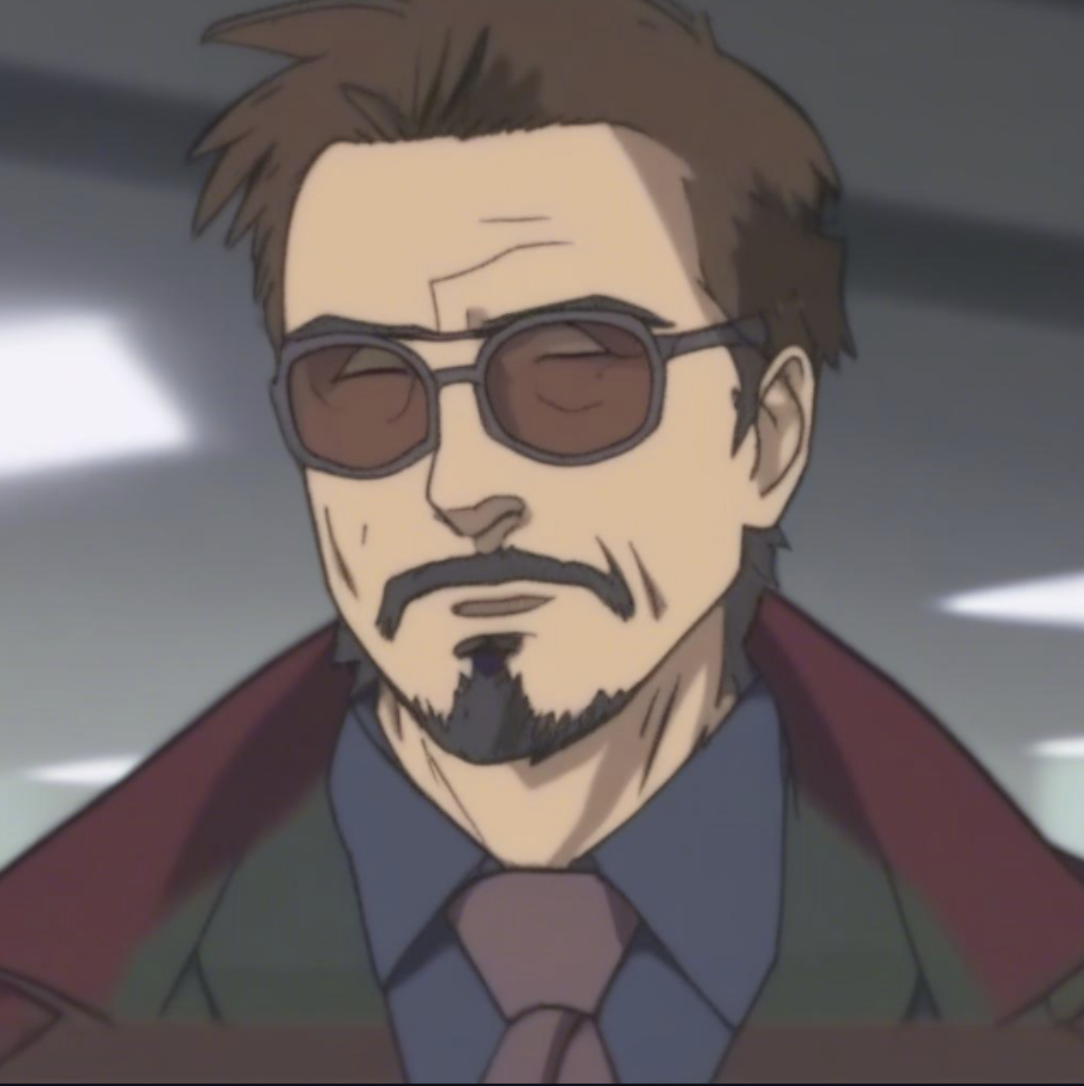

These are LoRA adaptation weights for stabilityai/stable-diffusion-xl-base-1.0. The weights were fine-tuned on the jugg1024/conan-gpt4-captions dataset. You can find some example images below.

Still from the Anime Character, Mark Zuckerberg

Still from the Anime Character, Robert Downey Jr.

Still from the Anime, Draco Malfoy

Still from the Anime, Severus Snape

LoRA for the text encoder was enabled: True.

VAE used for training: madebyollin/sdxl-vae-fp16-fix.

