Model Descriptions:

This repo contains OpenVINO model files for SimianLuo's LCM_Dreamshaper_v7, quantized to int8.

This 8-bit model is 1.4x faster than the float32 model.
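Speedups like this depend on the CPU, so you may want to measure on your own hardware. The sketch below times any zero-argument callable and compares two median latencies. The helper names `median_latency` and `speedup`, and the idea of timing against a separately loaded float32 pipeline, are illustrative assumptions. They are not part of this repo or of any library.

```python
import statistics
import time
from typing import Callable


def median_latency(run: Callable[[], object], runs: int = 5, warmup: int = 1) -> float:
    """Return the median wall-clock time in seconds of `run()` over `runs` timed calls."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    # Warm-up calls absorb one-time costs (e.g. model compilation) and are not timed.
    for _ in range(warmup):
        run()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        run()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is than the baseline (> 1 means faster)."""
    return baseline_s / candidate_s


# Hypothetical usage: `fp32_pipe` and `int8_pipe` are two already-loaded pipelines.
# generate = lambda p: p(prompt="sailing ship in storm by Leonardo da Vinci",
#                        width=512, height=512, num_inference_steps=4, guidance_scale=8.0)
# print(speedup(median_latency(lambda: generate(fp32_pipe)),
#               median_latency(lambda: generate(int8_pipe))))
```

The median is used instead of the mean so that a single slow run does not skew the comparison.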

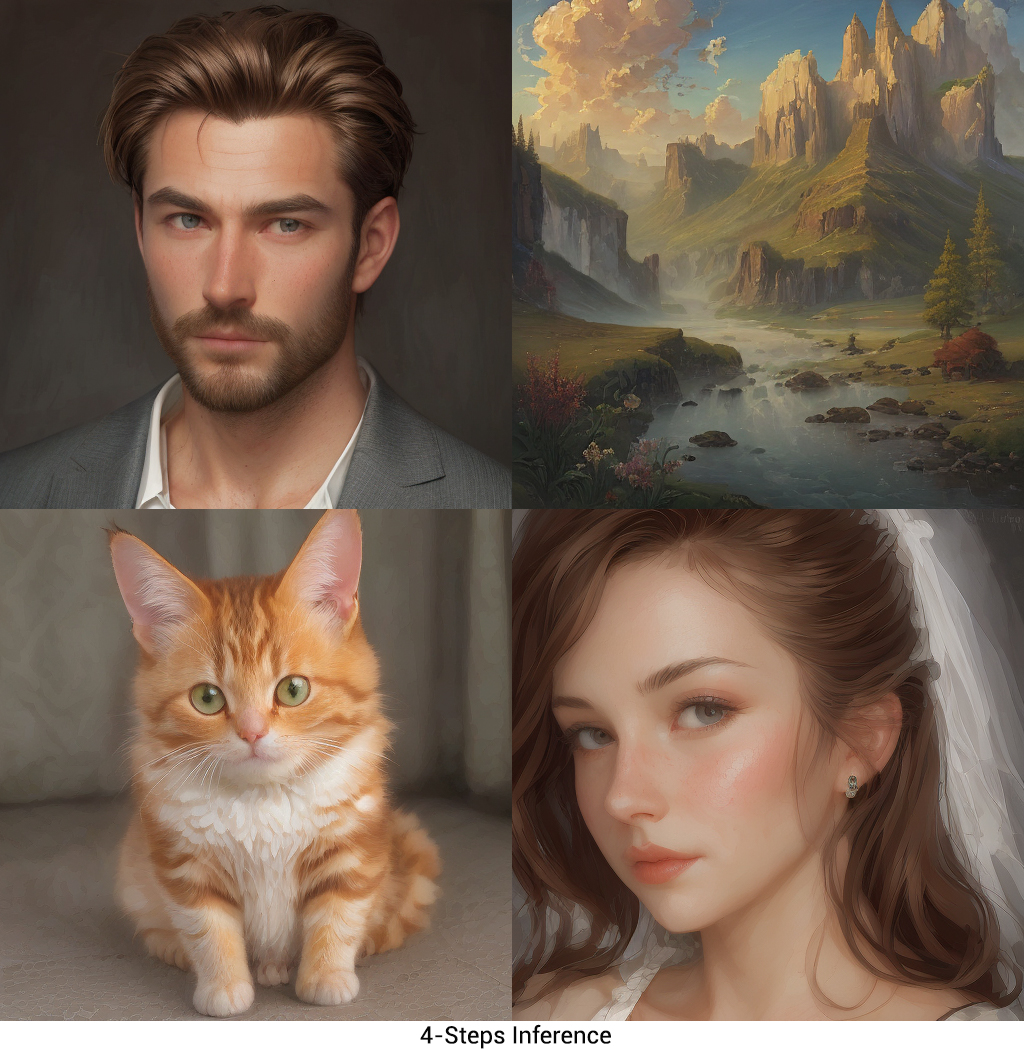

Generation Results:

Usage

You can try out the model using Fast SD CPU.

To run the model yourself, you can leverage the 🧨 Diffusers library:

- Install the dependencies:

```bash
pip install optimum-intel openvino diffusers onnx
```

- Run the model:

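```python
from optimum.intel import OVLatentConsistencyModelPipeline

# Load the int8 OpenVINO model from the Hugging Face Hub.
# An empty CACHE_DIR disables OpenVINO's model cache.
pipe = OVLatentConsistencyModelPipeline.from_pretrained(
    "rupeshs/LCM-dreamshaper-v7-openvino-int8",
    ov_config={"CACHE_DIR": ""},
)

prompt = "sailing ship in storm by Leonardo da Vinci"

# Latent consistency models typically need only a few denoising steps.
images = pipe(
    prompt=prompt,
    width=512,
    height=512,
    num_inference_steps=4,
    guidance_scale=8.0,
).images

images[0].save("out_image.png")
```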
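If you always generate at one resolution, fixing the input shapes before compiling can help OpenVINO. optimum-intel's Stable Diffusion pipelines provide `reshape()` and `compile()` for this. The sketch below assumes `OVLatentConsistencyModelPipeline` inherits those methods. The wrapper name `compile_static` is hypothetical, and the `compile=False` loading argument in the usage comment is also an assumption to verify against your optimum-intel version.

```python
from typing import Any


def compile_static(pipe: Any, width: int = 512, height: int = 512,
                   batch_size: int = 1, num_images_per_prompt: int = 1) -> Any:
    """Fix the pipeline's input shapes, then compile it for the target device.

    Assumes `pipe` exposes optimum-intel's `reshape(...)` and `compile()` methods.
    Later generation calls should use the same width, height and batch settings.
    """
    pipe.reshape(batch_size=batch_size, height=height, width=width,
                 num_images_per_prompt=num_images_per_prompt)
    pipe.compile()
    return pipe


# Hypothetical usage (loading uncompiled avoids compiling twice):
# pipe = OVLatentConsistencyModelPipeline.from_pretrained(
#     "rupeshs/LCM-dreamshaper-v7-openvino-int8", ov_config={"CACHE_DIR": ""}, compile=False)
# pipe = compile_static(pipe, width=512, height=512)
```

Static shapes trade flexibility for predictability. Generating at a different size afterwards would require reshaping and recompiling.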
