Image Search

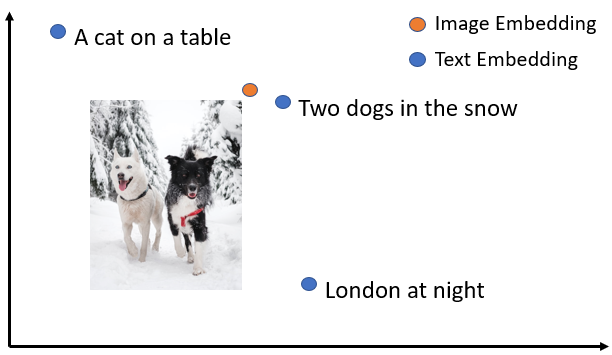

SentenceTransformers provides models that allow to embed images and text into the same vector space. This allows to find similar images as well as to implement image search.

Installation

Ensure that you have torchvision installed to use the image-text-models and use a recent PyTorch version (tested with PyTorch 1.7.0). Image-Text-Models have been added with SentenceTransformers version 1.0.0. Image-Text-Models are still in an experimental phase.

Usage

SentenceTransformers provides a wrapper for the OpenAI CLIP Model, which was trained on a variety of (image, text)-pairs.

from sentence_transformers import SentenceTransformer, util

from PIL import Image

#Load CLIP model

model = SentenceTransformer('clip-ViT-B-32')

#Encode an image:

img_emb = model.encode(Image.open('two_dogs_in_snow.jpg'))

#Encode text descriptions

text_emb = model.encode(['Two dogs in the snow', 'A cat on a table', 'A picture of London at night'])

#Compute cosine similarities

cos_scores = util.cos_sim(img_emb, text_emb)

print(cos_scores)

You can use the CLIP model for:

- Text-to-Image / Image-To-Text / Image-to-Image / Text-to-Text Search

- You can fine-tune it on your own image&text data with the regular SentenceTransformers training code.

Examples

- Image_Search.ipynb (Colab Version) depicts a larger example for text-to-image and image-to-image search using 25,000 free pictures from Unsplash.

- Image_Search-multilingual.ipynb (Colab Version) example of multilingual text2image search for 50+ languages.

- Image_Clustering.ipynb (Colab Version) shows how to perform image clustering. Given 25,000 free pictures from Unsplash, we find clusters of similar images. You can control how sensitive the clustering should be.

- Image_Duplicates.ipynb (Colab Version) shows an example how to find duplicate and near duplicate images in a large collection of photos.

- Image_Classification.ipynb (Colab Version) example for (multi-lingual) zero-shot image classifcation.