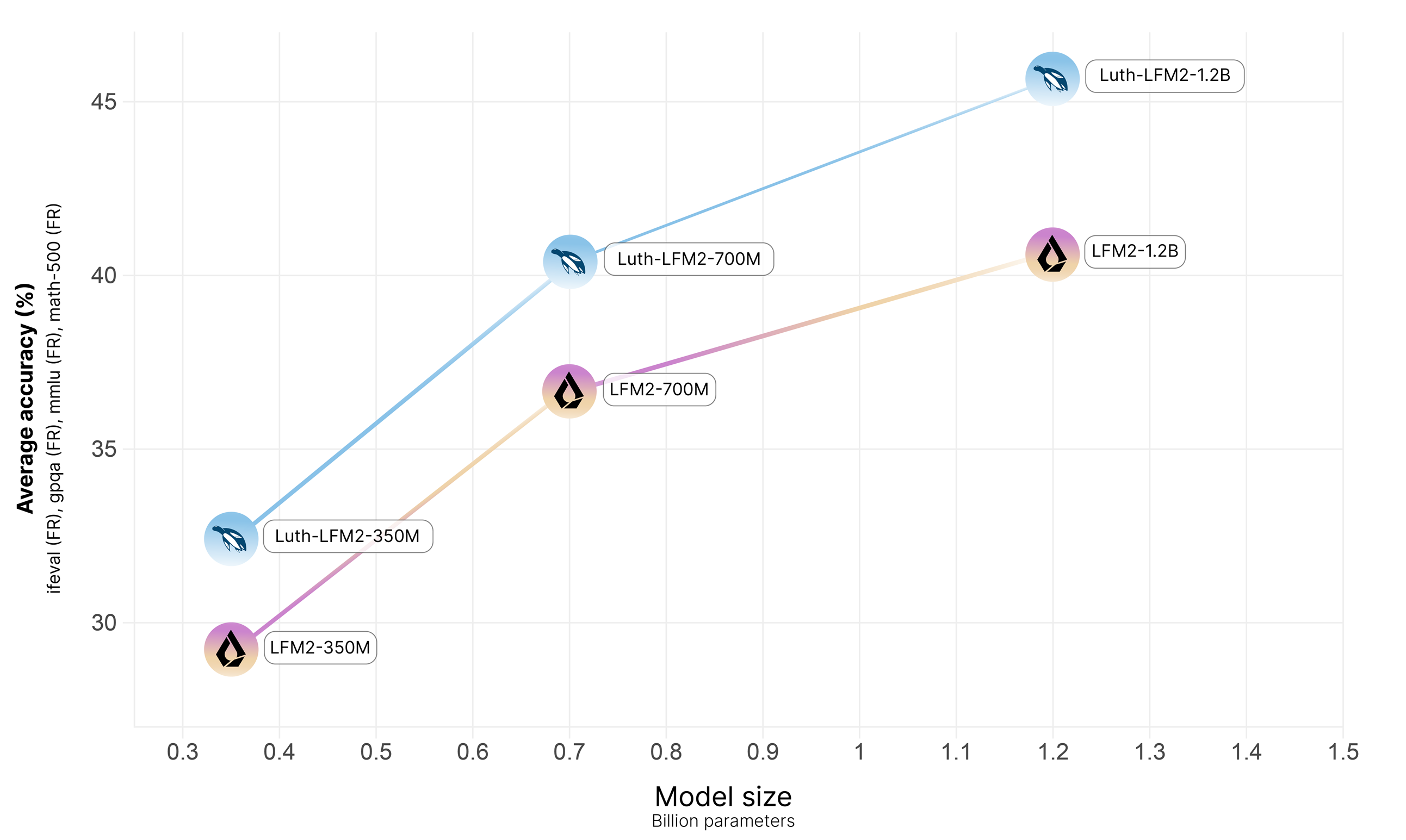

Luth-LFM2-1.2B

Luth-LFM2-1.2B is a French fine-tuned version of LFM2-1.2B, developed in collaboration with Liquid AI and trained on the Luth-SFT dataset. The model shows improved French capabilities in instruction following, math, and general knowledge. Its English capabilities have remained stable or improved, most notably in math.

Our evaluation, training, and data scripts are available on GitHub, along with a blog post that describes our recipe in more detail.

Model Details

The model was fully fine-tuned on the Luth-SFT dataset using Axolotl. The resulting model was then merged back with the base LFM2-1.2B model. This process retained the model's English capabilities while improving its performance in French.
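The card does not state which merge method was used. Purely as an illustration, the sketch below assumes a simple linear interpolation of parameters, where each merged weight is `alpha * fine_tuned + (1 - alpha) * base`. The function name `linear_merge` and the `alpha` value are hypothetical; the same arithmetic applies element-wise if the values are PyTorch tensors from two `state_dict()` mappings.

```python
from typing import Any, Mapping


def linear_merge(
    base: Mapping[str, Any], fine_tuned: Mapping[str, Any], alpha: float = 0.5
) -> dict[str, Any]:
    """Hypothetical linear merge: alpha weights the fine-tuned model, (1 - alpha) the base.

    Values can be floats or tensors; any type supporting * and + works.
    """
    if base.keys() != fine_tuned.keys():
        raise ValueError("both models must have the same parameter names")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return {name: alpha * fine_tuned[name] + (1 - alpha) * base[name] for name in base}


# Toy "models" with two scalar parameters each
base = {"w": 1.0, "b": 0.0}
fine_tuned = {"w": 3.0, "b": 2.0}
print(linear_merge(base, fine_tuned, alpha=0.5))  # {'w': 2.0, 'b': 1.0}
```

With `alpha=1.0` the result is the fine-tuned model and with `alpha=0.0` it is the base model; intermediate values trade French gains against the base model's original behavior.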

Benchmark Results

We used LightEval for evaluation, with custom tasks for the French benchmarks. All models were evaluated with temperature 0 (greedy decoding).
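Temperature divides the logits before the softmax, so lower values sharpen the distribution. At temperature 0 the division is undefined, so it is conventionally treated as greedy decoding: always picking the highest-scoring token. The pure-Python sketch below (function names and logits are made up for illustration) shows the distribution collapsing onto the argmax as the temperature shrinks. With Hugging Face `transformers`, greedy decoding is typically requested with `do_sample=False` in `generate`.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Softmax of logits / temperature; temperature must be strictly positive."""
    if temperature <= 0:
        raise ValueError("temperature must be positive; use greedy_pick for temperature 0")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits: list[float]) -> int:
    """Temperature-0 limit: index of the highest logit."""
    return max(range(len(logits)), key=logits.__getitem__)


logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.5, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
print("greedy:", greedy_pick(logits))  # greedy: 0
```

Because greedy decoding is deterministic, evaluating at temperature 0 makes benchmark scores reproducible across runs.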

French Benchmark Scores

| Benchmark | LFM2-1.2B | Luth-LFM2-1.2B |
|---|---|---|
| IFEval-fr (strict prompt) | 53.60 | 60.44 |
| GPQA-fr | 25.77 | 27.02 |
| MMLU-fr | 47.59 | 47.98 |
| MATH-500-fr | 35.80 | 47.20 |
| Arc-Chall-fr | 39.44 | 39.01 |
| Hellaswag-fr | 33.05 | 36.76 |

English Benchmark Scores

| Benchmark | LFM2-1.2B | Luth-LFM2-1.2B |
|---|---|---|
| IFEval-en (strict prompt) | 70.43 | 70.61 |
| GPQA-en | 26.68 | 28.21 |
| MMLU-en | 55.18 | 54.59 |
| MATH-500-en | 44.60 | 50.20 |
| Arc-Chall-en | 43.09 | 43.26 |
| Hellaswag-en | 57.64 | 58.46 |
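To make the comparison explicit, the short script below recomputes the per-benchmark difference (Luth-LFM2-1.2B minus LFM2-1.2B, in points) from the French and English tables. The scores are copied from the tables; the `deltas` helper is only an illustration.

```python
# Scores copied from the tables: (LFM2-1.2B, Luth-LFM2-1.2B)
FRENCH = {
    "IFEval-fr (strict prompt)": (53.60, 60.44),
    "GPQA-fr": (25.77, 27.02),
    "MMLU-fr": (47.59, 47.98),
    "MATH-500-fr": (35.80, 47.20),
    "Arc-Chall-fr": (39.44, 39.01),
    "Hellaswag-fr": (33.05, 36.76),
}
ENGLISH = {
    "IFEval-en (strict prompt)": (70.43, 70.61),
    "GPQA-en": (26.68, 28.21),
    "MMLU-en": (55.18, 54.59),
    "MATH-500-en": (44.60, 50.20),
    "Arc-Chall-en": (43.09, 43.26),
    "Hellaswag-en": (57.64, 58.46),
}


def deltas(scores: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Fine-tuned minus base score for each benchmark, rounded to 2 decimals."""
    return {name: round(tuned - base, 2) for name, (base, tuned) in scores.items()}


for label, table in (("French", FRENCH), ("English", ENGLISH)):
    print(label)
    for name, d in deltas(table).items():
        print(f"  {name:<28}{d:+.2f}")
```

The output shows the largest gain on MATH-500-fr (+11.40), a +5.60 gain on MATH-500-en, and small regressions on Arc-Chall-fr (-0.43) and MMLU-en (-0.59).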

Code Example

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("kurakurai/Luth-LFM2-1.2B")
model = AutoModelForCausalLM.from_pretrained("kurakurai/Luth-LFM2-1.2B")

messages = [
    # "What is the capital of France?"
    {"role": "user", "content": "Quelle est la capitale de la France?"},
]

# Format the conversation with the model's chat template and tokenize it
inputs = tokenizer.apply_chat_template(
    messages,
    add_generation_prompt=True,
    tokenize=True,
    return_dict=True,
    return_tensors="pt",
).to(model.device)

outputs = model.generate(**inputs, max_new_tokens=100)

# Decode only the newly generated tokens, skipping the prompt
print(
    tokenizer.decode(
        outputs[0][inputs["input_ids"].shape[-1] :], skip_special_tokens=True
    )
)
```

Citation

```bibtex
@misc{luthlfm2kurakurai,
  title = {Luth-LFM2-1.2B},
  author = {Kurakura AI Team},
  year = {2025},
  howpublished = {\url{https://huggingface.co/kurakurai/Luth-LFM2-1.2B}},
  note = {LFM2-1.2B fine-tuned on French datasets}
}
```
