Real-Time Iteration Scheme for Diffusion Policy (RTI-DP)

This repository contains the official model weights and code for the paper: "Real-Time Iteration Scheme for Diffusion Policy".

- 📚 Paper: https://arxiv.org/abs/2508.05396

- 🌐 Project Page

- 💻 Code

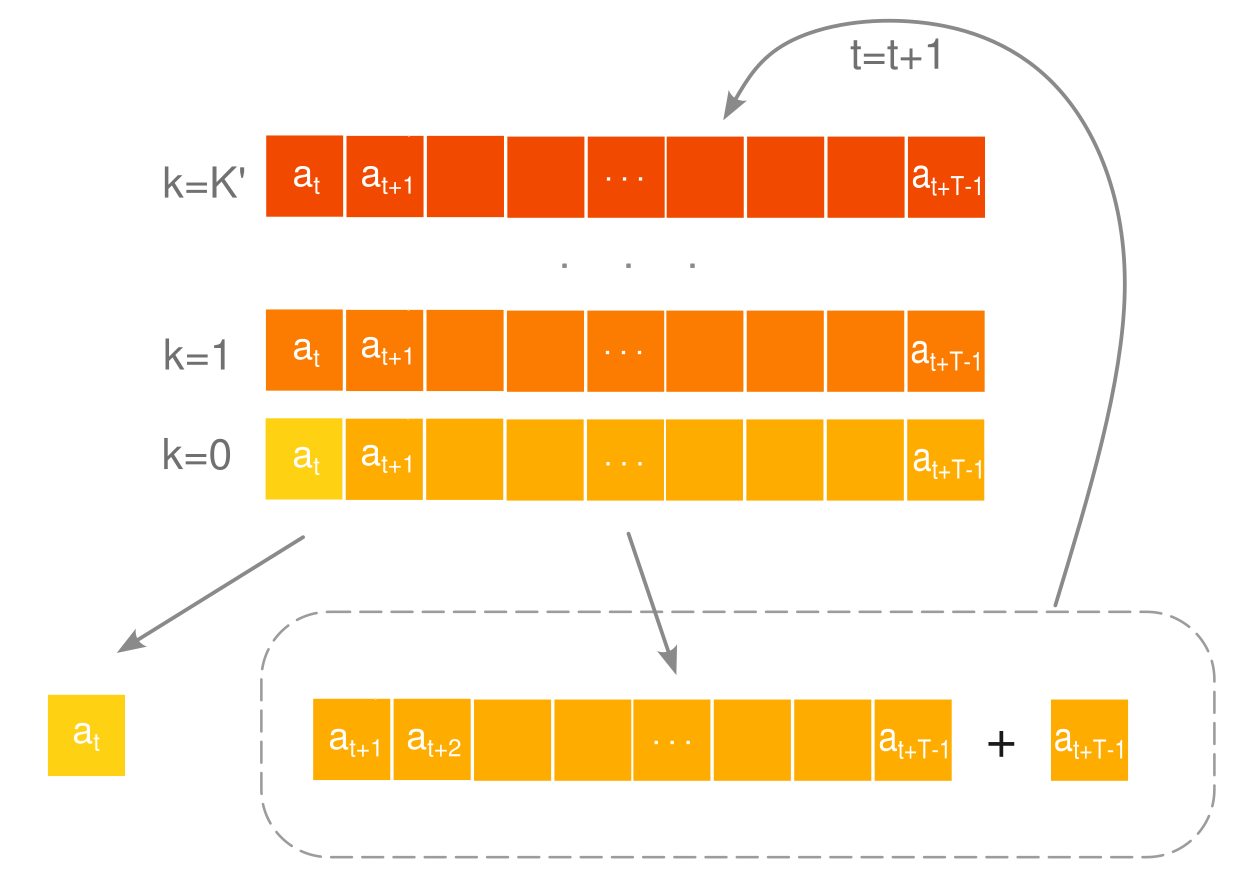

RTI-DP speeds up inference in diffusion-based robotic policies by initializing the denoising process at each control step with the prediction from the previous step, with no retraining and no distillation.
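The warm-start idea can be illustrated with a minimal Python sketch. It assumes NumPy and a hypothetical `denoise_step(actions, k)` callable standing in for the trained network. The helper names (`shift_chunk`, `sample_actions`, `toy_step`), the rule of padding the shifted chunk by repeating its last action, and the number of warm-start updates are all illustrative assumptions, not the RTI-DP code. The sketch also does not model how RTI-DP places the warm start on the diffusion noise schedule; see the paper for that.

```python
from typing import Callable, Optional

import numpy as np

# Hypothetical interface: one reverse-diffusion update, (actions, step index) -> actions.
DenoiseStep = Callable[[np.ndarray, int], np.ndarray]


def shift_chunk(prev: np.ndarray, n_executed: int) -> np.ndarray:
    """Drop the first n_executed actions (already sent to the robot) and pad
    the end by repeating the last remaining action (an assumed padding rule)."""
    rest = prev[n_executed:]
    pad = np.repeat(rest[-1:], n_executed, axis=0)
    return np.concatenate([rest, pad], axis=0)


def sample_actions(denoise_step: DenoiseStep, horizon: int, action_dim: int,
                   num_steps: int, rng: np.random.Generator,
                   prev: Optional[np.ndarray] = None, n_executed: int = 0,
                   warm_steps: int = 1) -> tuple[np.ndarray, int]:
    """Return (action chunk, number of denoising updates performed)."""
    if prev is None:
        # Cold start: begin from Gaussian noise and run the full chain.
        x = rng.standard_normal((horizon, action_dim))
        steps = list(reversed(range(num_steps)))
    else:
        # Warm start: begin from the previous prediction, shifted in time,
        # and run only a few updates.
        x = shift_chunk(prev, n_executed)
        steps = list(reversed(range(warm_steps)))
    for k in steps:
        x = denoise_step(x, k)
    return x, len(steps)


# Toy stand-in for a trained policy: each update halves the distance to a fixed
# target chunk. A real denoiser would also condition on observations and on k.
target = np.linspace(0.0, 1.0, 8)[:, None] * np.ones((1, 2))


def toy_step(x: np.ndarray, k: int) -> np.ndarray:
    return x + 0.5 * (target - x)


rng = np.random.default_rng(0)
first, n_first = sample_actions(toy_step, horizon=8, action_dim=2,
                                num_steps=100, rng=rng)
second, n_second = sample_actions(toy_step, horizon=8, action_dim=2,
                                  num_steps=100, rng=rng, prev=first,
                                  n_executed=2, warm_steps=5)
print(n_first, n_second)  # 100 5
```

In this toy setting, the cold start pays for the full chain of 100 updates, while the warm start reuses the previous solution and runs only 5; the printed counts make that difference in per-step cost explicit.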

Usage

This model is designed to be used with its official codebase. For detailed installation instructions, environment setup, and further information, please refer to the official GitHub repository, which is based on Diffusion Policy.

Evaluation

To evaluate RTI-DP policies with DDPM, you can use the provided script from the repository:

```bash
python ../eval_rti.py --config-name=eval_diffusion_rti_lowdim_workspace.yaml
```

For RTI-DP-scale checkpoints, refer to the duandaxia/rti-dp-scale repository on Hugging Face.

Citation

If you find our work useful, please consider citing our paper:

```bibtex
@misc{duan2025realtimeiterationschemediffusion,
      title={Real-Time Iteration Scheme for Diffusion Policy},
      author={Yufei Duan and Hang Yin and Danica Kragic},
      year={2025},
      eprint={2508.05396},
      archivePrefix={arXiv},
      primaryClass={cs.RO},
      url={https://arxiv.org/abs/2508.05396},
}
```

Acknowledgements

We thank the authors of Diffusion Policy, Consistency Policy, and Streaming Diffusion Policy for sharing their codebases.
