Overview

Official implementation of the paper "DeFTAN-II: Efficient multichannel speech enhancement with subgroup processing," accepted to IEEE/ACM Transactions on Audio, Speech, and Language Processing (IEEE/ACM TASLP), 2024.

The abstract of the paper is as follows:

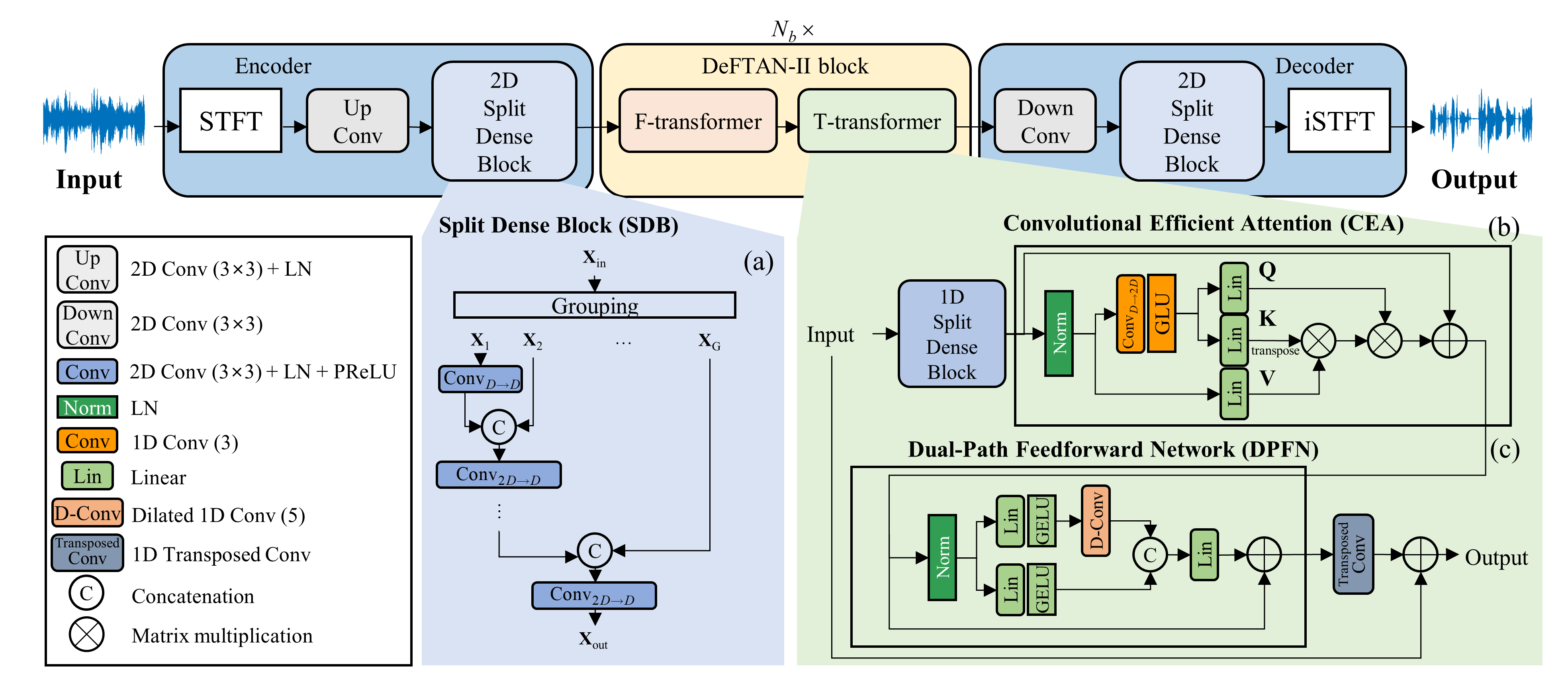

In this work, we present DeFTAN-II, an efficient multichannel speech enhancement model based on transformer architecture and subgroup processing. Despite the success of transformers in speech enhancement, they face challenges in capturing local relations, reducing the high computational complexity, and lowering memory usage. To address these limitations, we introduce subgroup processing in our model, combining subgroups of locally emphasized features with other subgroups containing original features. The subgroup processing is implemented in several blocks of the proposed network. In the proposed split dense blocks extracting spatial features, a pair of subgroups is sequentially concatenated and processed by convolution layers to effectively reduce the computational complexity and memory usage. For the F- and T-transformers extracting temporal and spectral relations, we introduce cross-attention between subgroups to identify relationships between locally emphasized and non-emphasized features. The dual-path feedforward network then aggregates attended features in terms of the gating of local features processed by dilated convolutions. Through extensive comparisons with state-of-the-art multichannel speech enhancement models, we demonstrate that DeFTAN-II with subgroup processing outperforms existing methods at significantly lower computational complexity. Moreover, we evaluate the model’s generalization capability on real-world data without fine-tuning, which further demonstrates its effectiveness in practical scenarios.
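The subgroup idea in the abstract can be illustrated with a minimal PyTorch sketch. This is not the authors' implementation: the class names `SubgroupCrossAttention` and `GatedDualPathFFN` are hypothetical, and the layer choices are assumptions made for illustration. These choices are an even two-way channel split, a depthwise convolution for local emphasis, queries taken from the emphasized subgroup with keys/values from the original one, and a sigmoid gate computed by a dilated depthwise convolution. See `deftan-II.py` for the actual network.

```python
import torch
import torch.nn as nn


class SubgroupCrossAttention(nn.Module):
    """Hypothetical sketch: cross-attention between two channel subgroups."""

    def __init__(self, channels: int, num_heads: int = 2, kernel_size: int = 3):
        super().__init__()
        assert channels % 2 == 0, "channels must split into two equal subgroups"
        half = channels // 2
        # Assumed local emphasis: depthwise conv along the sequence axis.
        self.local = nn.Conv1d(half, half, kernel_size,
                               padding=kernel_size // 2, groups=half)
        # half must be divisible by num_heads.
        self.attn = nn.MultiheadAttention(half, num_heads, batch_first=True)
        self.proj = nn.Linear(channels, channels)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, channels); seq_len is the frequency (F) or time (T) axis.
        emphasized, original = x.chunk(2, dim=-1)
        # Conv1d expects (batch, channels, seq_len).
        emphasized = self.local(emphasized.transpose(1, 2)).transpose(1, 2)
        # Locally emphasized features query the non-emphasized subgroup.
        attended, _ = self.attn(emphasized, original, original)
        # Recombine with the untouched original subgroup, project, add residual.
        return x + self.proj(torch.cat([attended, original], dim=-1))


class GatedDualPathFFN(nn.Module):
    """Hypothetical sketch: feedforward gated by dilated-conv local features."""

    def __init__(self, channels: int, hidden: int = 128,
                 dilation: int = 2, kernel_size: int = 3):
        super().__init__()
        self.value = nn.Linear(channels, hidden)    # path 1: pointwise features
        self.gate_in = nn.Linear(channels, hidden)  # path 2: local features
        # Length-preserving padding for an odd kernel: dilation * (kernel_size - 1) // 2.
        self.gate_conv = nn.Conv1d(hidden, hidden, kernel_size, dilation=dilation,
                                   padding=dilation * (kernel_size - 1) // 2,
                                   groups=hidden)
        self.out = nn.Linear(hidden, channels)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, seq_len, channels)
        value = self.value(x)
        gate = self.gate_conv(self.gate_in(x).transpose(1, 2)).transpose(1, 2)
        # The dilated-conv path gates the pointwise path element-wise.
        return x + self.out(value * torch.sigmoid(gate))


x = torch.randn(2, 100, 64)  # (batch, seq_len, channels), random placeholder features
block = nn.Sequential(SubgroupCrossAttention(64), GatedDualPathFFN(64))
print(block(x).shape)  # torch.Size([2, 100, 64])
```

In this sketch, attention runs on half the channel width, which is one plausible way subgroup processing lowers cost. The exact split ratio and layer types used in DeFTAN-II are not stated in this card.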

A pre-trained model, trained on the spatialized DNS Challenge dataset, is available on Hugging Face.

Using the pre-trained model (spatialized DNS Challenge dataset)

Download deftan-II.py and max.pt into the same directory (your\path\).

You can import the DeFTAN-II model class (Network) and load the state_dict with the code below, run from that directory.

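```python
import importlib.util

import torch

# "deftan-II.py" contains a hyphen, so it is not a valid module name for a
# plain `import` statement; load it from its file path instead.
spec = importlib.util.spec_from_file_location("deftan_ii", "deftan-II.py")
deftan_ii = importlib.util.module_from_spec(spec)
spec.loader.exec_module(deftan_ii)

DeFTAN2model = deftan_ii.Network()

# map_location="cpu" lets the checkpoint load on machines without a GPU.
state_dict = torch.load("max.pt", map_location="cpu")
# Remove the "module." prefix, which typically comes from saving a model
# wrapped in nn.DataParallel / DistributedDataParallel.
state_dict = {k.replace("module.", ""): v for k, v in state_dict.items()}

DeFTAN2model.load_state_dict(state_dict)
DeFTAN2model.eval()  # inference mode (disables dropout, etc.)
```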

You can now use DeFTAN-II with the pre-trained parameters to enhance speech.
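The loading code strips the "module." prefix from checkpoint keys with `str.replace`, which removes every occurrence of "module.", including one inside a name such as a submodule called `submodule`. A slightly stricter variant removes the prefix only at the start of each key using `str.removeprefix` (Python 3.9+). The helper name `strip_module_prefix` and the toy keys below are hypothetical; this card does not say whether any DeFTAN-II parameter name contains "module." in the middle.

```python
def strip_module_prefix(state_dict: dict) -> dict:
    """Drop a leading "module." from each key (hypothetical helper)."""
    return {k.removeprefix("module."): v for k, v in state_dict.items()}


# Toy checkpoint with placeholder values instead of tensors (hypothetical keys).
ckpt = {"module.encoder.weight": 1, "module.encoder.submodule.bias": 2}
print(strip_module_prefix(ckpt))
# {'encoder.weight': 1, 'encoder.submodule.bias': 2}
print({k.replace("module.", ""): v for k, v in ckpt.items()})
# {'encoder.weight': 1, 'encoder.subbias': 2}
```

With the real checkpoint, the result of `torch.load("max.pt", map_location="cpu")` would be passed through `strip_module_prefix` before `load_state_dict`.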

License: MIT
