|

|

--- |

|

|

language: en |

|

|

tags: [music, multimodal, qa, midi, vision] |

|

|

license: mit |

|

|

--- |

|

|

# MusiXQA 🎵 |

|

|

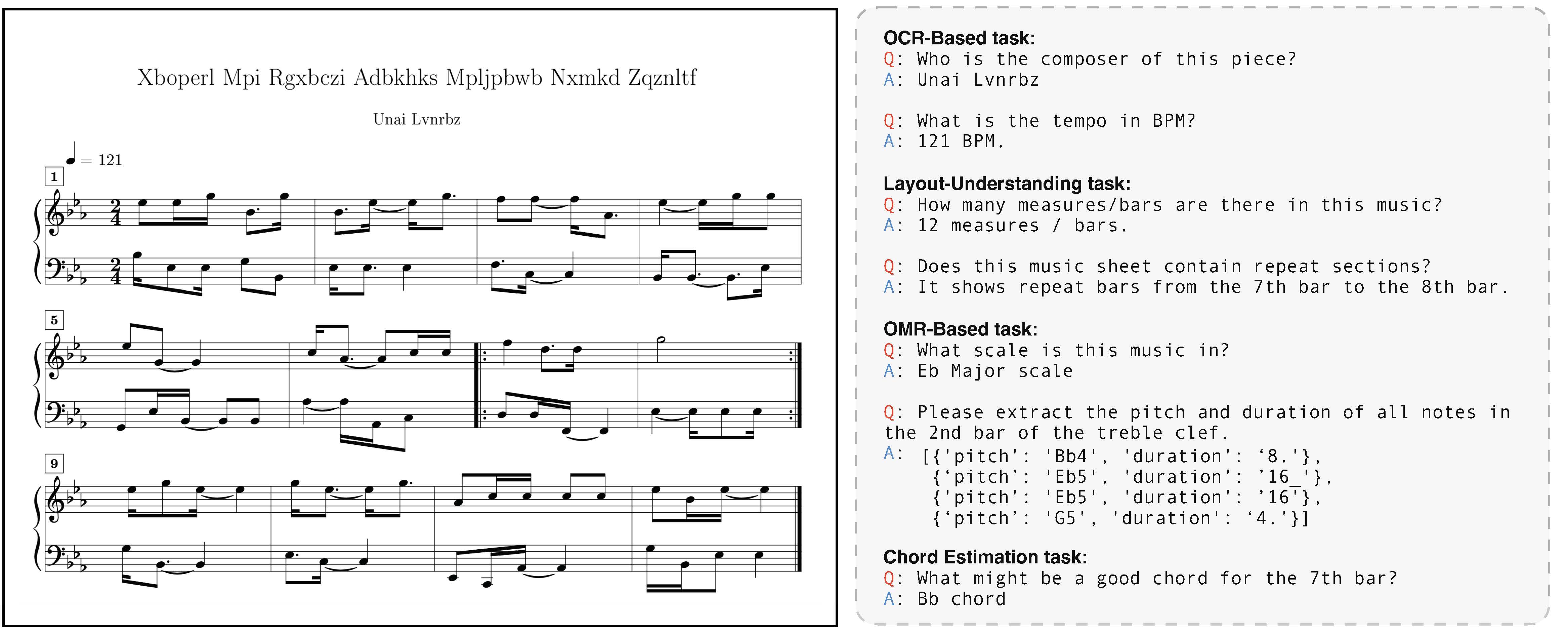

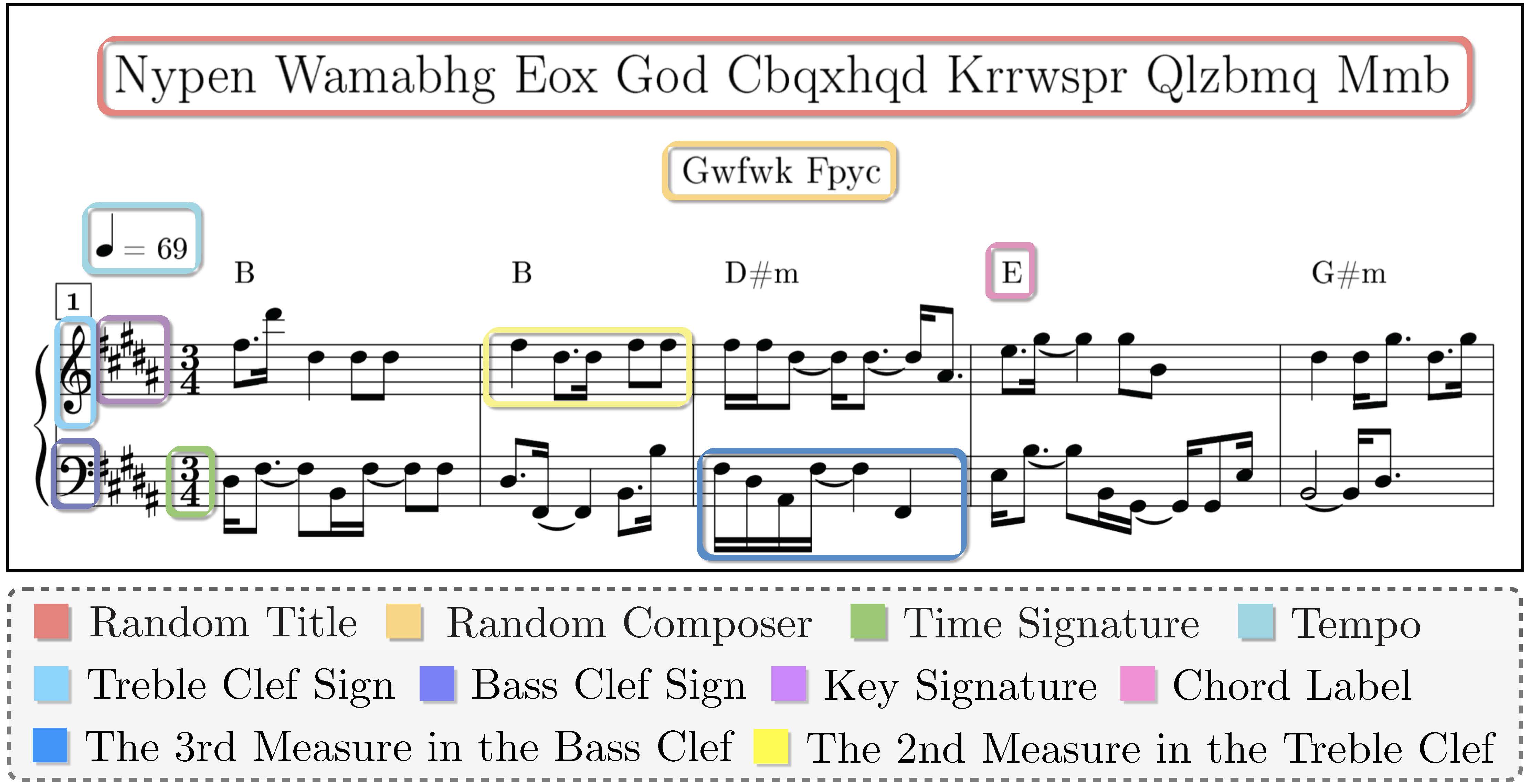

**MusiXQA** is a multimodal dataset for evaluating and training music sheet understanding systems. Each data sample is composed of: |

|

|

- A scanned music sheet image (`.png`) |

|

|

- Its corresponding MIDI file (`.mid`) |

|

|

- A structured annotation (from `metadata.json`) |

|

|

- Question–Answer (QA) pairs targeting musical structure, semantics, and optical music recognition (OMR) |

|

|

|

|

|

|

|

|

--- |

|

|

|

|

|

## 📂 Dataset Structure |

|

|

``` |

|

|

MusiXQA/ |

|

|

├── images.tar # PNG files of music sheets (e.g., 0000000.png) |

|

|

├── midi.tar # MIDI files (e.g., 0000000.mid), compressed |

|

|

├── train_qa_omr.json # OMR-tasks QA pairs (train split) |

|

|

├── train_qa_simple.json # Simple musical info QAs (train split) |

|

|

├── test_qa_omr.json # OMR-tasks QA pairs (test split) |

|

|

├── test_qa_simple.json # Simple musical info QAs (test split) |

|

|

├── metadata.json # Annotation for each document (e.g., key, time, instruments) |

|

|

``` |

|

|

## 🧾 Metadata |

|

|

The `metadata.json` file provides comprehensive annotations of the full music sheet content, facilitating research in symbolic music reasoning, score reconstruction, and multimodal alignment with audio or MIDI. |

|

|

|

|

|

|

|

|

|

|

|

## ❓ QA Data Format |

|

|

|

|

|

Each QA file (e.g., train_qa_omr.json) is a list of QA entries like this: |

|

|

``` |

|

|

{ |

|

|

"doc_id": "0086400", |

|

|

"question": "Please extract the pitch and duration of all notes in the 2nd bar of the treble clef.", |

|

|

"answer": "qB4~ sB4 e.E5~ sE5 sB4 eB4 e.E5 sG#4", |

|

|

"encode_format": "beat" |

|

|

}, |

|

|

``` |

|

|

• doc_id: corresponds to a sample in images/, midi/, and metadata.json<br /> |

|

|

• question: natural language query<br /> |

|

|

• answer: ground truth answer<br /> |

|

|

• encode_format: how the input is encoded (e.g., "beat", "note", etc.)<br /> |

|

|

|

|

|

## 🎓 Reference |

|

|

If you use this dataset in your work, please cite it using the following reference: |

|

|

``` |

|

|

@misc{chen2025musixqaadvancingvisualmusic, |

|

|

title={MusiXQA: Advancing Visual Music Understanding in Multimodal Large Language Models}, |

|

|

author={Jian Chen and Wenye Ma and Penghang Liu and Wei Wang and Tengwei Song and Ming Li and Chenguang Wang and Jiayu Qin and Ruiyi Zhang and Changyou Chen}, |

|

|

year={2025}, |

|

|

eprint={2506.23009}, |

|

|

archivePrefix={arXiv}, |

|

|

primaryClass={cs.CV}, |

|

|

url={https://arxiv.org/abs/2506.23009}, |

|

|

} |

|

|

``` |