| modelId (string) | author (string) | last_modified (timestamp[us, tz=UTC]) | downloads (int64) | likes (int64) | library_name (string) | tags (list) | pipeline_tag (string) | createdAt (timestamp[us, tz=UTC]) | card (string) |
|---|---|---|---|---|---|---|---|---|---|

| KrafterDen/copy | KrafterDen | 2024-02-06T07:02:07Z | 3 | 0 | transformers | ["transformers", "pytorch", "gpt2", "text-generation", "gpt3", "en", "ru", "license:mit", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text-generation | 2024-02-06T06:44:09Z |

---

license: mit

language:

- en

- ru

tags:

- gpt3

- transformers

---

# 🗿 ruGPT-3.5 13B

A language model for Russian. As the name suggests, the model has 13B parameters. It is our largest model so far, and it was used for training GigaChat (read more about it in the [article](https://habr.com/ru/companies/sberbank/articles/730108/)).

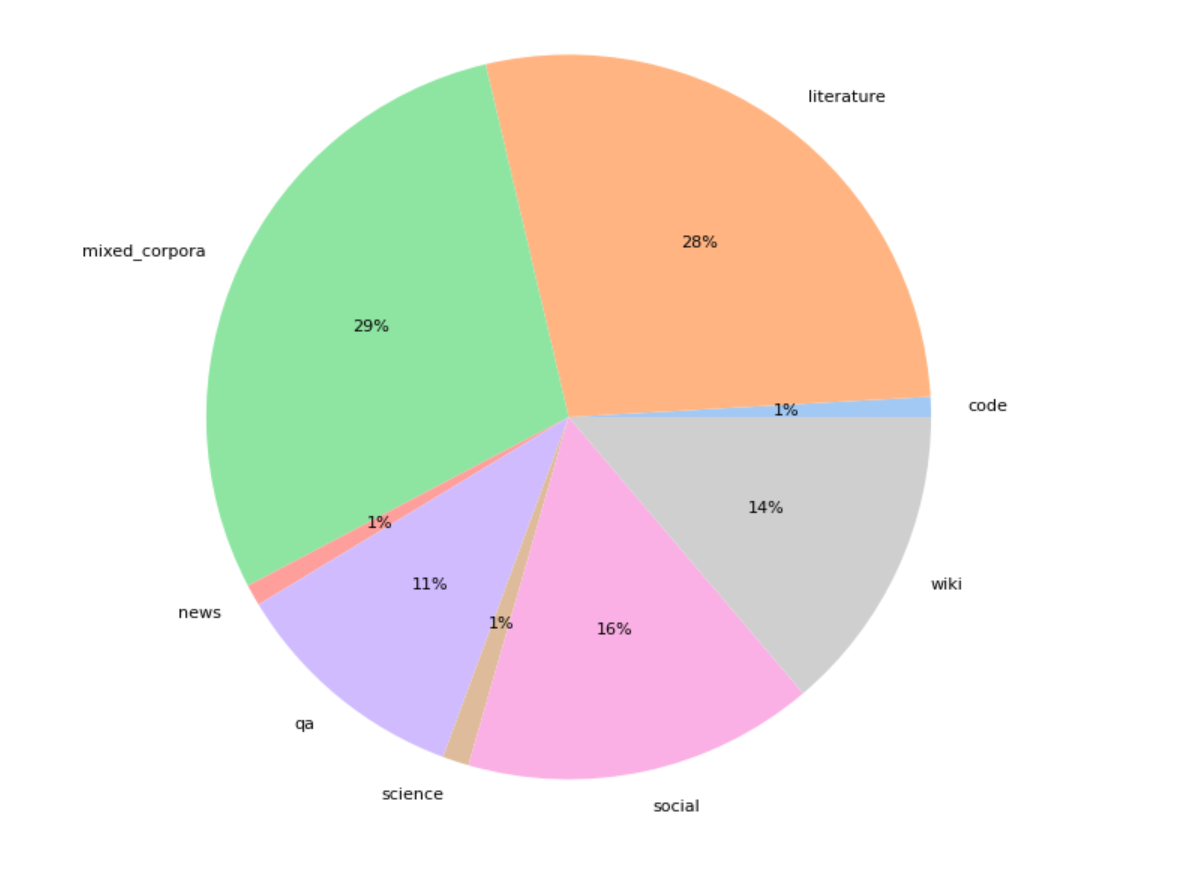

## Dataset

The model was pretrained on 300 GB of text from various domains, then additionally trained on 100 GB of code and legal documents.

The training data was deduplicated: each text in the corpus was hashed with a 64-bit hash, and only texts with a unique hash were kept. Documents were also filtered by their text compression rate under zlib; the most strongly and the most weakly compressing deduplicated texts were discarded.
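The two filtering steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the hash function (BLAKE2b truncated to 8 bytes), the thresholds `min_ratio` and `max_ratio`, and all function names are hypothetical, since the card does not specify them.

```python
import hashlib
import zlib


def hash64(text: str) -> int:
    """64-bit content hash (assumption: BLAKE2b truncated to 8 bytes)."""
    digest = hashlib.blake2b(text.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def compression_ratio(text: str) -> float:
    """Raw size divided by zlib-compressed size; higher means more redundant."""
    raw = text.encode("utf-8")
    return len(raw) / len(zlib.compress(raw))


def filter_corpus(texts, min_ratio=1.2, max_ratio=8.0):
    """Keep the first text per hash, then drop compression-ratio outliers.

    The default thresholds are hypothetical; the card does not state the real ones.
    """
    seen = set()
    kept = []
    for text in texts:
        if not text:
            continue
        h = hash64(text)
        if h in seen:
            continue  # exact duplicate of an earlier text
        seen.add(h)
        if min_ratio <= compression_ratio(text) <= max_ratio:
            kept.append(text)
    return kept
```

Texts that compress extremely well tend to be repetitive boilerplate, while texts that barely compress tend to be noise, which is presumably why both tails are discarded.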

## Technical details

The model was trained with the DeepSpeed and Megatron libraries on a 300B-token dataset for 3 epochs, which took around 45 days on 512 V100 GPUs. It was then fine-tuned for 1 epoch with a sequence length of 2048 on additional data (see above), which took around 20 days on 200 A100 GPUs.

After the final training stage, the model's perplexity was around 8.8 for Russian.
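For readers who want to relate the perplexity figure to a training loss, here is a small sketch. It assumes the usual definition (the exponential of the mean per-token negative log-likelihood in nats); the card does not state the tokenization or the evaluation set, so this shows only the arithmetic.

```python
import math


def perplexity(token_nlls):
    """Perplexity = exp(mean negative log-likelihood per token, in nats)."""
    return math.exp(sum(token_nlls) / len(token_nlls))


# A perplexity of 8.8 corresponds to a mean loss of ln(8.8) nats per token.
mean_loss = math.log(8.8)
print(round(mean_loss, 3))                     # 2.175
print(round(perplexity([mean_loss] * 4), 1))   # 8.8
```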

## Examples of usage

Try different generation strategies to reach better results.

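```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Assumption: the checkpoint is loaded from this repository in float16; adjust as needed.
model_name = "KrafterDen/copy"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.float16).to("cuda:0")

# Prompt: "A poem about a programmer could go like this:"
request = "Стих про программиста может быть таким:"
encoded_input = tokenizer(
    request, return_tensors="pt", add_special_tokens=False
).to("cuda:0")
output = model.generate(
    **encoded_input,
    num_beams=2,
    do_sample=True,
    max_new_tokens=100,
)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```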

```

>>> Стих про программиста может быть таким:

Программист сидит в кресле,

Стих сочиняет он про любовь,

Он пишет, пишет, пишет, пишет...

И не выходит ни черта!

```

```python
# Assumes `tokenizer` and `model` are already loaded.
# Prompt: "A neural network is"
request = "Нейронная сеть — это"
encoded_input = tokenizer(
    request, return_tensors="pt", add_special_tokens=False
).to("cuda:0")
output = model.generate(
    **encoded_input,
    num_beams=4,
    do_sample=True,
    max_new_tokens=100,
)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

```

>>> Нейронная сеть — это математическая модель, состоящая из большого

количества нейронов, соединенных между собой электрическими связями.

Нейронная сеть может быть смоделирована на компьютере, и с ее помощью

можно решать задачи, которые не поддаются решению с помощью традиционных

математических методов.

```

```python
# Assumes `tokenizer` and `model` are already loaded.
# Prompt: "Gagarin flew into space in"
request = "Гагарин полетел в космос в"
encoded_input = tokenizer(
    request, return_tensors="pt", add_special_tokens=False
).to("cuda:0")
output = model.generate(
    **encoded_input,
    num_beams=2,
    do_sample=True,
    max_new_tokens=100,
)
print(tokenizer.decode(output[0], skip_special_tokens=True))
```

```

>>> Гагарин полетел в космос в 1961 году. Это было первое в истории

человечества космическое путешествие. Юрий Гагарин совершил его

на космическом корабле Восток-1. Корабль был запущен с космодрома

Байконур.

```

|

| DarqueDante/Codellama_Roblox | DarqueDante | 2024-02-06T06:59:17Z | 0 | 0 | transformers | ["transformers", "safetensors", "arxiv:1910.09700", "endpoints_compatible", "region:us"] | null | 2024-02-05T21:21:36Z |

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

| varun-v-rao/bert-large-cased-lora-1.58M-snli-model1 | varun-v-rao | 2024-02-06T06:57:05Z | 4 | 0 | transformers | ["transformers", "tensorboard", "safetensors", "bert", "text-classification", "generated_from_trainer", "base_model:google-bert/bert-large-cased", "base_model:finetune:google-bert/bert-large-cased", "license:apache-2.0", "autotrain_compatible", "endpoints_compatible", "region:us"] | text-classification | 2024-02-06T03:12:15Z |

---

license: apache-2.0

base_model: bert-large-cased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-large-cased-lora-1.58M-snli-model1

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-large-cased-lora-1.58M-snli-model1

This model is a fine-tuned version of [bert-large-cased](https://huggingface.co/bert-large-cased) on an unspecified dataset (the Trainer recorded it as `None`; the model name suggests SNLI).

It achieves the following results on the evaluation set:

- Loss: 0.8126

- Accuracy: 0.695

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 256

- eval_batch_size: 256

- seed: 57

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
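To make the `lr_scheduler_type: linear` setting concrete, the sketch below computes the learning rate at a given step. It assumes zero warmup steps (the list above mentions none) and takes the total of 6438 steps from the training results table; the function name `linear_lr` is illustrative, not a library API.

```python
def linear_lr(step, total_steps, base_lr=2e-5, warmup_steps=0):
    """Learning rate under linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


total = 6438  # final step reported in the training results table
for step in (0, 3219, 6438):
    # Starts at the base rate, is halved at the midpoint, and reaches zero at the end.
    print(step, linear_lr(step, total))
```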

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.5065 | 1.0 | 2146 | 0.4147 | 0.8480 |
| 0.4613 | 2.0 | 4292 | 0.3828 | 0.8588 |
| 0.4464 | 3.0 | 6438 | 0.3717 | 0.8629 |
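The step counts in the table also bound the size of the training set. The sketch below assumes a single device, no gradient accumulation, and that the last partial batch is kept (none of which the card confirms), so that steps per epoch equals `ceil(n / batch_size)`.

```python
def dataset_size_bounds(steps_per_epoch, batch_size):
    """Range of dataset sizes n with ceil(n / batch_size) == steps_per_epoch."""
    low = (steps_per_epoch - 1) * batch_size + 1
    high = steps_per_epoch * batch_size
    return low, high


low, high = dataset_size_bounds(steps_per_epoch=2146, batch_size=256)
print(low, high)  # 549121 549376
```

Under these assumptions the training set holds roughly 549 thousand examples, which would be consistent with the SNLI training split that the model name hints at.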

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu121

- Datasets 2.15.0

- Tokenizers 0.15.0

|

| kenilshah35/whisper-med-dictation-50 | kenilshah35 | 2024-02-06T06:51:22Z | 2 | 0 | peft | ["peft", "safetensors", "arxiv:1910.09700", "base_model:openai/whisper-medium.en", "base_model:adapter:openai/whisper-medium.en", "region:us"] | null | 2024-02-06T04:02:33Z |

---

library_name: peft

base_model: openai/whisper-medium.en

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2

|

| thrunlab/Mistral_Sparse_pretraining_80_percent_10000 | thrunlab | 2024-02-06T06:20:58Z | 3 | 0 | transformers | ["transformers", "safetensors", "mistral", "generated_from_trainer", "dataset:openwebtext", "base_model:mistralai/Mistral-7B-Instruct-v0.1", "base_model:finetune:mistralai/Mistral-7B-Instruct-v0.1", "license:apache-2.0", "text-generation-inference", "endpoints_compatible", "region:us"] | null | 2024-02-05T15:03:09Z |

---

license: apache-2.0

base_model: mistralai/Mistral-7B-Instruct-v0.1

tags:

- generated_from_trainer

datasets:

- openwebtext

model-index:

- name: Mistral_Sparse_pretraining_80_percent_10000

  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Mistral_Sparse_pretraining_80_percent_10000

This model is a fine-tuned version of [mistralai/Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) on the openwebtext dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6872

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 32

- seed: 0

- distributed_type: multi-GPU

- num_devices: 6

- gradient_accumulation_steps: 2

- total_train_batch_size: 96

- total_eval_batch_size: 192

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000
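The total batch sizes in this list follow from the per-device values. A short sketch of the arithmetic, with an illustrative helper name:

```python
def effective_batch_size(per_device, num_devices, grad_accum_steps=1):
    """Examples consumed per optimizer step (or per eval step without accumulation)."""
    return per_device * num_devices * grad_accum_steps


train_total = effective_batch_size(8, 6, 2)  # train_batch_size x num_devices x accumulation
eval_total = effective_batch_size(32, 6)     # evaluation does not accumulate gradients
print(train_total, eval_total)  # 96 192
```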

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:-----:|:---------------:|

| 1.7461 | 0.05 | 50 | 1.7009 |

| 1.4034 | 0.1 | 100 | 1.3910 |

| 1.2302 | 0.15 | 150 | 1.2330 |

| 1.1363 | 0.19 | 200 | 1.1354 |

| 1.0699 | 0.24 | 250 | 1.0723 |

| 1.0316 | 0.29 | 300 | 1.0284 |

| 1.0044 | 0.34 | 350 | 0.9943 |

| 0.9719 | 0.39 | 400 | 0.9668 |

| 0.9391 | 0.44 | 450 | 0.9430 |

| 0.9194 | 0.48 | 500 | 0.9249 |

| 0.9131 | 0.53 | 550 | 0.9092 |

| 0.877 | 0.58 | 600 | 0.8953 |

| 0.8757 | 0.63 | 650 | 0.8852 |

| 0.8644 | 0.68 | 700 | 0.8749 |

| 0.8625 | 0.73 | 750 | 0.8679 |

| 0.867 | 0.78 | 800 | 0.8594 |

| 0.852 | 0.82 | 850 | 0.8529 |

| 0.8482 | 0.87 | 900 | 0.8473 |

| 0.8372 | 0.92 | 950 | 0.8421 |

| 0.8391 | 0.97 | 1000 | 0.8366 |

| 0.8209 | 1.02 | 1050 | 0.8327 |

| 0.8172 | 1.07 | 1100 | 0.8275 |

| 0.8094 | 1.11 | 1150 | 0.8247 |

| 0.8107 | 1.16 | 1200 | 0.8210 |

| 0.8137 | 1.21 | 1250 | 0.8168 |

| 0.8122 | 1.26 | 1300 | 0.8143 |

| 0.8047 | 1.31 | 1350 | 0.8115 |

| 0.804 | 1.36 | 1400 | 0.8083 |

| 0.7955 | 1.41 | 1450 | 0.8062 |

| 0.7939 | 1.45 | 1500 | 0.8040 |

| 0.7835 | 1.5 | 1550 | 0.8019 |

| 0.7983 | 1.55 | 1600 | 0.8001 |

| 0.7953 | 1.6 | 1650 | 0.7975 |

| 0.7903 | 1.65 | 1700 | 0.7945 |

| 0.7864 | 1.7 | 1750 | 0.7938 |

| 0.7972 | 1.75 | 1800 | 0.7914 |

| 0.7855 | 1.79 | 1850 | 0.7905 |

| 0.7834 | 1.84 | 1900 | 0.7878 |

| 0.7812 | 1.89 | 1950 | 0.7854 |

| 0.7865 | 1.94 | 2000 | 0.7847 |

| 0.7875 | 1.99 | 2050 | 0.7837 |

| 0.7764 | 2.04 | 2100 | 0.7815 |

| 0.7676 | 2.08 | 2150 | 0.7807 |

| 0.7716 | 2.13 | 2200 | 0.7796 |

| 0.777 | 2.18 | 2250 | 0.7781 |

| 0.7706 | 2.23 | 2300 | 0.7769 |

| 0.7669 | 2.28 | 2350 | 0.7748 |

| 0.771 | 2.33 | 2400 | 0.7742 |

| 0.7501 | 2.38 | 2450 | 0.7728 |

| 0.7653 | 2.42 | 2500 | 0.7713 |

| 0.7715 | 2.47 | 2550 | 0.7699 |

| 0.7588 | 2.52 | 2600 | 0.7694 |

| 0.7665 | 2.57 | 2650 | 0.7676 |

| 0.7616 | 2.62 | 2700 | 0.7658 |

| 0.7597 | 2.67 | 2750 | 0.7654 |

| 0.756 | 2.71 | 2800 | 0.7644 |

| 0.7517 | 2.76 | 2850 | 0.7628 |

| 0.7561 | 2.81 | 2900 | 0.7628 |

| 0.7413 | 2.86 | 2950 | 0.7620 |

| 0.7545 | 2.91 | 3000 | 0.7603 |

| 0.7442 | 2.96 | 3050 | 0.7592 |

| 0.7454 | 3.01 | 3100 | 0.7589 |

| 0.7575 | 3.05 | 3150 | 0.7583 |

| 0.739 | 3.1 | 3200 | 0.7571 |

| 0.7446 | 3.15 | 3250 | 0.7558 |

| 0.7428 | 3.2 | 3300 | 0.7557 |

| 0.737 | 3.25 | 3350 | 0.7553 |

| 0.7512 | 3.3 | 3400 | 0.7536 |

| 0.7447 | 3.34 | 3450 | 0.7525 |

| 0.7417 | 3.39 | 3500 | 0.7525 |

| 0.7403 | 3.44 | 3550 | 0.7512 |

| 0.761 | 3.49 | 3600 | 0.7502 |

| 0.7475 | 3.54 | 3650 | 0.7498 |

| 0.7535 | 3.59 | 3700 | 0.7486 |

| 0.733 | 3.64 | 3750 | 0.7483 |

| 0.7347 | 3.68 | 3800 | 0.7470 |

| 0.7439 | 3.73 | 3850 | 0.7470 |

| 0.7417 | 3.78 | 3900 | 0.7460 |

| 0.7383 | 3.83 | 3950 | 0.7460 |

| 0.7316 | 3.88 | 4000 | 0.7450 |

| 0.7273 | 3.93 | 4050 | 0.7442 |

| 0.7376 | 3.97 | 4100 | 0.7440 |

| 0.73 | 4.02 | 4150 | 0.7424 |

| 0.732 | 4.07 | 4200 | 0.7429 |

| 0.7278 | 4.12 | 4250 | 0.7419 |

| 0.721 | 4.17 | 4300 | 0.7416 |

| 0.7309 | 4.22 | 4350 | 0.7410 |

| 0.7273 | 4.27 | 4400 | 0.7400 |

| 0.7297 | 4.31 | 4450 | 0.7395 |

| 0.7321 | 4.36 | 4500 | 0.7385 |

| 0.7348 | 4.41 | 4550 | 0.7381 |

| 0.7251 | 4.46 | 4600 | 0.7371 |

| 0.7175 | 4.51 | 4650 | 0.7372 |

| 0.7356 | 4.56 | 4700 | 0.7368 |

| 0.7306 | 4.6 | 4750 | 0.7363 |

| 0.7248 | 4.65 | 4800 | 0.7359 |

| 0.7266 | 4.7 | 4850 | 0.7343 |

| 0.7243 | 4.75 | 4900 | 0.7349 |

| 0.7256 | 4.8 | 4950 | 0.7338 |

| 0.7301 | 4.85 | 5000 | 0.7335 |

| 0.7266 | 4.9 | 5050 | 0.7327 |

| 0.7229 | 4.94 | 5100 | 0.7321 |

| 0.7355 | 4.99 | 5150 | 0.7315 |

| 0.7207 | 5.04 | 5200 | 0.7317 |

| 0.7157 | 5.09 | 5250 | 0.7314 |

| 0.7214 | 5.14 | 5300 | 0.7299 |

| 0.7104 | 5.19 | 5350 | 0.7304 |

| 0.7059 | 5.24 | 5400 | 0.7296 |

| 0.7181 | 5.28 | 5450 | 0.7295 |

| 0.7226 | 5.33 | 5500 | 0.7286 |

| 0.7077 | 5.38 | 5550 | 0.7282 |

| 0.7239 | 5.43 | 5600 | 0.7276 |

| 0.7159 | 5.48 | 5650 | 0.7277 |

| 0.7169 | 5.53 | 5700 | 0.7271 |

| 0.7101 | 5.57 | 5750 | 0.7269 |

| 0.7146 | 5.62 | 5800 | 0.7262 |

| 0.7191 | 5.67 | 5850 | 0.7265 |

| 0.7124 | 5.72 | 5900 | 0.7248 |

| 0.7085 | 5.77 | 5950 | 0.7238 |

| 0.7052 | 5.82 | 6000 | 0.7235 |

| 0.7222 | 5.87 | 6050 | 0.7222 |

| 0.7089 | 5.91 | 6100 | 0.7221 |

| 0.7088 | 5.96 | 6150 | 0.7222 |

| 0.7017 | 6.01 | 6200 | 0.7218 |

| 0.7079 | 6.06 | 6250 | 0.7218 |

| 0.7209 | 6.11 | 6300 | 0.7211 |

| 0.691 | 6.16 | 6350 | 0.7210 |

| 0.7035 | 6.2 | 6400 | 0.7203 |

| 0.7075 | 6.25 | 6450 | 0.7207 |

| 0.7036 | 6.3 | 6500 | 0.7200 |

| 0.7023 | 6.35 | 6550 | 0.7189 |

| 0.7201 | 6.4 | 6600 | 0.7192 |

| 0.7021 | 6.45 | 6650 | 0.7188 |

| 0.6971 | 6.5 | 6700 | 0.7174 |

| 0.7087 | 6.54 | 6750 | 0.7184 |

| 0.7044 | 6.59 | 6800 | 0.7176 |

| 0.6921 | 6.64 | 6850 | 0.7179 |

| 0.7079 | 6.69 | 6900 | 0.7166 |

| 0.6908 | 6.74 | 6950 | 0.7158 |

| 0.687 | 6.79 | 7000 | 0.7158 |

| 0.696 | 6.83 | 7050 | 0.7148 |

| 0.6954 | 6.88 | 7100 | 0.7152 |

| 0.7103 | 6.93 | 7150 | 0.7143 |

| 0.6999 | 6.98 | 7200 | 0.7140 |

| 0.699 | 7.03 | 7250 | 0.7138 |

| 0.6959 | 7.08 | 7300 | 0.7138 |

| 0.6871 | 7.13 | 7350 | 0.7122 |

| 0.6941 | 7.17 | 7400 | 0.7131 |

| 0.6931 | 7.22 | 7450 | 0.7132 |

| 0.707 | 7.27 | 7500 | 0.7110 |

| 0.6911 | 7.32 | 7550 | 0.7122 |

| 0.7036 | 7.37 | 7600 | 0.7113 |

| 0.7105 | 7.42 | 7650 | 0.7107 |

| 0.7035 | 7.46 | 7700 | 0.7108 |

| 0.6901 | 7.51 | 7750 | 0.7113 |

| 0.6944 | 7.56 | 7800 | 0.7096 |

| 0.6927 | 7.61 | 7850 | 0.7093 |

| 0.7052 | 7.66 | 7900 | 0.7090 |

| 0.7046 | 7.71 | 7950 | 0.7082 |

| 0.6949 | 7.76 | 8000 | 0.7082 |

| 0.6888 | 7.8 | 8050 | 0.7071 |

| 0.6916 | 7.85 | 8100 | 0.7071 |

| 0.6937 | 7.9 | 8150 | 0.7067 |

| 0.7077 | 7.95 | 8200 | 0.7066 |

| 0.6847 | 8.0 | 8250 | 0.7057 |

| 0.6908 | 8.05 | 8300 | 0.7056 |

| 0.6813 | 8.1 | 8350 | 0.7060 |

| 0.6756 | 8.14 | 8400 | 0.7055 |

| 0.7006 | 8.19 | 8450 | 0.7052 |

| 0.6842 | 8.24 | 8500 | 0.7035 |

| 0.6851 | 8.29 | 8550 | 0.7044 |

| 0.6944 | 8.34 | 8600 | 0.7042 |

| 0.6929 | 8.39 | 8650 | 0.7040 |

| 0.6924 | 8.43 | 8700 | 0.7037 |

| 0.6843 | 8.48 | 8750 | 0.7037 |

| 0.7005 | 8.53 | 8800 | 0.7028 |

| 0.6795 | 8.58 | 8850 | 0.7022 |

| 0.6946 | 8.63 | 8900 | 0.7019 |

| 0.6761 | 8.68 | 8950 | 0.7016 |

| 0.6817 | 8.73 | 9000 | 0.7012 |

| 0.6838 | 8.77 | 9050 | 0.7012 |

| 0.6877 | 8.82 | 9100 | 0.7006 |

| 0.6812 | 8.87 | 9150 | 0.7004 |

| 0.6966 | 8.92 | 9200 | 0.7005 |

| 0.6778 | 8.97 | 9250 | 0.6993 |

| 0.6844 | 9.02 | 9300 | 0.6991 |

| 0.6853 | 9.06 | 9350 | 0.7000 |

| 0.6839 | 9.11 | 9400 | 0.6998 |

| 0.6813 | 9.16 | 9450 | 0.6984 |

| 0.6903 | 9.21 | 9500 | 0.6985 |

| 0.6819 | 9.26 | 9550 | 0.6987 |

| 0.6749 | 9.31 | 9600 | 0.6980 |

| 0.6782 | 9.36 | 9650 | 0.6979 |

| 0.6805 | 9.4 | 9700 | 0.6975 |

| 0.6907 | 9.45 | 9750 | 0.6974 |

| 0.6854 | 9.5 | 9800 | 0.6967 |

| 0.6803 | 9.55 | 9850 | 0.6969 |

| 0.6854 | 9.6 | 9900 | 0.6964 |

| 0.6761 | 9.65 | 9950 | 0.6966 |

| 0.6939 | 9.69 | 10000 | 0.6959 |
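The Epoch and Step columns together imply how many optimizer steps make up one pass over the data. The sketch below assumes the epoch values are rounded to two decimals, as the table suggests; the helper name is hypothetical.

```python
def steps_per_epoch_range(step, epoch, decimals=2):
    """Steps-per-epoch interval implied by an epoch value rounded to `decimals` places."""
    half = 0.5 * 10 ** (-decimals)
    return step / (epoch + half), step / (epoch - half)


low, high = steps_per_epoch_range(step=10000, epoch=9.69)
examples_per_step = 96  # total_train_batch_size from the hyperparameter list
print(f"steps per epoch: between {low:.1f} and {high:.1f}")
print(f"examples per epoch: about {10000 / 9.69 * examples_per_step:,.0f}")
```

With about 1032 steps per epoch and 96 examples per step, each epoch covers on the order of 99 thousand examples, so the run appears to use a subset of openwebtext rather than the full corpus.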

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu121

- Datasets 2.15.0

- Tokenizers 0.15.0

|

| sujitvasanth/YouLiXiya-tinyllava-v1.0-1.1b-hf | sujitvasanth | 2024-02-06T06:14:35Z | 7 | 0 | transformers | ["transformers", "safetensors", "llava", "image-text-to-text", "image-to-text", "en", "license:apache-2.0", "region:us"] | image-to-text | 2024-02-06T05:46:03Z |

---

language:

- en

pipeline_tag: image-to-text

inference: false

arxiv: 2304.08485

license: apache-2.0

---

# LLaVA Model Card: This is a fork of https://huggingface.co/YouLiXiya/tinyllava-v1.0-1.1b-hf from January 2024

Below is the model card of TinyLlava model 1.1b.

Also check out the Google Colab demo to run Llava on a free-tier Google Colab instance: [Open in Colab](https://colab.research.google.com/drive/1XtdA_UoyNzqiEYVR-iWA-xmit8Y2tKV2#scrollTo=DFVZgElEQk3x)

## Model details

**Model type:**

TinyLLaVA is an open-source chatbot trained by fine-tuning TinyLlama on GPT-generated multimodal instruction-following data.

It is an auto-regressive language model, based on the transformer architecture.

**Paper or resources for more information:**

https://llava-vl.github.io/

## How to use the model

First, make sure to have `transformers >= 4.35.3`.

The model supports multi-image and multi-prompt generation, meaning that you can pass multiple images in your prompt. Make sure to follow the correct prompt template (`USER: xxx\nASSISTANT:`) and add the token `<image>` at each location where you want to query an image.
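A small helper can keep prompts aligned with the template. This is a sketch only: `build_llava_prompt` is a hypothetical name, and placing all `<image>` tokens directly after `USER:` is one possible choice, since the card says the token may go wherever the image should be queried.

```python
def build_llava_prompt(question: str, num_images: int = 1) -> str:
    """Format a single-turn prompt using the USER/ASSISTANT template."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    # Assumption: all image placeholders are placed up front, before the question.
    image_tokens = "<image>" * num_images
    return f"USER: {image_tokens}\n{question}\nASSISTANT:"


print(repr(build_llava_prompt("What are these?")))
```

The string returned for a single image matches the prompt format used in the usage examples in this card.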

### Using `pipeline`:

The example below uses the [`"YouLiXiya/tinyllava-v1.0-1.1b-hf"`](https://huggingface.co/YouLiXiya/tinyllava-v1.0-1.1b-hf) checkpoint.

```python
from transformers import pipeline
from PIL import Image
import requests

model_id = "YouLiXiya/tinyllava-v1.0-1.1b-hf"
pipe = pipeline("image-to-text", model=model_id)

# Download the demo image.
url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/transformers/tasks/ai2d-demo.jpg"
image = Image.open(requests.get(url, stream=True).raw)

prompt = "USER: <image>\nWhat does the label 15 represent? (1) lava (2) core (3) tunnel (4) ash cloud\nASSISTANT:"
outputs = pipe(image, prompt=prompt, generate_kwargs={"max_new_tokens": 200})
print(outputs)
# Example output:
# {'generated_text': 'USER: \nWhat does the label 15 represent? (1) lava (2) core (3) tunnel (4) ash cloud\nASSISTANT: The label 15 represents lava, which is the type of rock that is formed from molten magma. '}
```

### Using pure `transformers`:

Below is an example script to run generation in `float16` precision on a GPU device:

```python
import requests
from PIL import Image

import torch
from transformers import AutoProcessor, LlavaForConditionalGeneration

model_id = "YouLiXiya/tinyllava-v1.0-1.1b-hf"

prompt = "USER: <image>\nWhat are these?\nASSISTANT:"
image_file = "http://images.cocodataset.org/val2017/000000039769.jpg"

# Load the model in float16 and move it to GPU 0.
model = LlavaForConditionalGeneration.from_pretrained(
    model_id,
    torch_dtype=torch.float16,
    low_cpu_mem_usage=True,
).to(0)

processor = AutoProcessor.from_pretrained(model_id)

raw_image = Image.open(requests.get(image_file, stream=True).raw)
inputs = processor(prompt, raw_image, return_tensors='pt').to(0, torch.float16)

output = model.generate(**inputs, max_new_tokens=200, do_sample=False)
print(processor.decode(output[0][2:], skip_special_tokens=True))
```

### Model optimization

#### 4-bit quantization through `bitsandbytes` library

First, install `bitsandbytes` (`pip install bitsandbytes`) and make sure you have access to a CUDA-compatible GPU device. Then change the snippet above as follows:

```diff
model = LlavaForConditionalGeneration.from_pretrained(
    model_id,
    torch_dtype=torch.float16,
    low_cpu_mem_usage=True,
+   load_in_4bit=True
)
```

#### Use Flash-Attention 2 to further speed up generation

First, install `flash-attn`; refer to the [original repository of Flash Attention](https://github.com/Dao-AILab/flash-attention) for installation instructions. Then change the snippet above as follows:

```diff
model = LlavaForConditionalGeneration.from_pretrained(
    model_id,
    torch_dtype=torch.float16,
    low_cpu_mem_usage=True,
+   use_flash_attention_2=True
).to(0)
```

## License

Llama 2 is licensed under the LLAMA 2 Community License,

Copyright (c) Meta Platforms, Inc. All Rights Reserved.

|

| NK2306/FineTunedModelSD | NK2306 | 2024-02-06T06:10:25Z | 0 | 1 | diffusers | ["diffusers", "tensorboard", "safetensors", "stable-diffusion", "stable-diffusion-diffusers", "text-to-image", "dreambooth", "base_model:CompVis/stable-diffusion-v1-4", "base_model:finetune:CompVis/stable-diffusion-v1-4", "license:creativeml-openrail-m", "autotrain_compatible", "endpoints_compatible", "diffusers:StableDiffusionPipeline", "region:us"] | text-to-image | 2024-02-06T04:43:23Z |

---

license: creativeml-openrail-m

base_model: CompVis/stable-diffusion-v1-4

instance_prompt: ku Tenh

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- dreambooth

inference: true

---

# DreamBooth - NK2306/FineTunedModelSD

This is a dreambooth model derived from CompVis/stable-diffusion-v1-4. The weights were trained on ku Tenh using [DreamBooth](https://dreambooth.github.io/).

You can find some example images in the following.

DreamBooth for the text encoder was enabled: False.

|

| SudiptoPramanik/Mistral_RL_RL_ExtractiveSummary | SudiptoPramanik | 2024-02-06T06:06:45Z | 0 | 0 | peft | ["peft", "safetensors", "arxiv:1910.09700", "base_model:alexsherstinsky/Mistral-7B-v0.1-sharded", "base_model:adapter:alexsherstinsky/Mistral-7B-v0.1-sharded", "region:us"] | null | 2024-02-06T06:06:37Z |

---

library_name: peft

base_model: alexsherstinsky/Mistral-7B-v0.1-sharded

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2

|

popwenh/essayWriter

|

popwenh

| 2024-02-06T05:57:24Z

| 0

| 0

| null |

[

"license:bigscience-bloom-rail-1.0",

"region:us"

] | null | 2024-02-06T05:57:24Z

|

---

license: bigscience-bloom-rail-1.0

---

|

TinyPixel/qwen-1

|

TinyPixel

| 2024-02-06T05:55:29Z

| 0

| 0

|

transformers

|

[

"transformers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-02-06T05:35:22Z

|

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

macadeliccc/MarcoroCapy-7B

|

macadeliccc

| 2024-02-06T05:36:19Z

| 6

| 1

|

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-02-06T05:26:34Z

|

---

library_name: transformers

tags: []

---

# MarcoroCapy-7B

This model is a DPO fine-tune of [mlabonne/Marcoro14-7B-slerp](https://huggingface.co/mlabonne/Marcoro14-7B-slerp) on [argilla/distilabel-capybara-dpo-7k-binarized](https://huggingface.co/datasets/argilla/distilabel-capybara-dpo-7k-binarized).

<div align="center">

[<img src="https://raw.githubusercontent.com/argilla-io/distilabel/main/docs/assets/distilabel-badge-dark.png" alt="Built with Distilabel" width="200" height="32"/>](https://github.com/argilla-io/distilabel)

</div>

## Process

+ Realigned the chat template to ChatML

+ Completed 1 Epoch

+ 5e-5 learning rate

+ Training time was about 4.5 hours on 1 H100

+ Cost was ~$20
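The card does not show the exact chat template, so the following is only a sketch of the commonly used ChatML layout that the first step refers to. The function name `to_chatml` and the `add_generation_prompt` flag are hypothetical; the template shipped with the model's tokenizer remains the authoritative version.

```python
def to_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts in the usual ChatML layout."""
    parts = []
    for message in messages:
        # Each turn is wrapped in <|im_start|>role ... <|im_end|> markers.
        parts.append(
            f"<|im_start|>{message['role']}\n{message['content']}<|im_end|>\n"
        )
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize DPO in one sentence."},
])
print(prompt)
```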

## GGUF

TODO

## Evaluations

TODO

|

manibt1993/huner_ncbi_disease_dslim

|

manibt1993

| 2024-02-06T05:23:00Z

| 5

| 0

|

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"distilbert",

"token-classification",

"generated_from_trainer",

"dataset:transformer_dataset_ner",

"base_model:dslim/distilbert-NER",

"base_model:finetune:dslim/distilbert-NER",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2024-02-06T05:05:05Z

|

---

license: apache-2.0

base_model: dslim/distilbert-NER

tags:

- generated_from_trainer

datasets:

- transformer_dataset_ner

metrics:

- precision

- recall

- f1

- accuracy

model-index:
- name: huner_ncbi_disease_dslim
  results:
  - task:
      name: Token Classification
      type: token-classification
    dataset:
      name: transformer_dataset_ner
      type: transformer_dataset_ner
      config: ncbi_disease
      split: validation
      args: ncbi_disease
    metrics:
    - name: Precision
      type: precision
      value: 0.8325183374083129
    - name: Recall
      type: recall
      value: 0.8653113087674714
    - name: F1
      type: f1
      value: 0.8485981308411215
    - name: Accuracy
      type: accuracy
      value: 0.9849891909996041

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You should probably proofread and complete it, then remove this comment. -->

# huner_ncbi_disease_dslim

This model is a fine-tuned version of [dslim/distilbert-NER](https://huggingface.co/dslim/distilbert-NER) on the transformer_dataset_ner dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1484

- Precision: 0.8325

- Recall: 0.8653

- F1: 0.8486

- Accuracy: 0.9850
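As a quick consistency check, the reported F1 can be recomputed from the reported precision and recall, since F1 is their harmonic mean. The sketch below uses the unrounded values from the card metadata; the function name is chosen here for illustration.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


precision = 0.8325183374083129  # from the card metadata
recall = 0.8653113087674714     # from the card metadata
f1 = f1_from_precision_recall(precision, recall)
print(round(f1, 4))  # 0.8486, matching the reported F1
```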

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20
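To make the `linear` scheduler setting concrete, here is a small sketch of a linear decay schedule. It assumes no warmup (the card lists none) and takes the total of 13340 optimizer steps from the training results table (20 epochs of 667 steps); the function name and the `warmup_steps` parameter are illustrative, not the Trainer's internals.

```python
def linear_lr(step: int, base_lr: float = 2e-5,
              total_steps: int = 13340, warmup_steps: int = 0) -> float:
    """Learning rate at a given step under linear warmup and linear decay."""
    if warmup_steps and step < warmup_steps:
        # Ramp up from 0 to base_lr during warmup (assumed unused here).
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    # Decay linearly from base_lr to 0 over the non-warmup steps.
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for epoch in (0, 5, 10, 15, 20):
    print(epoch, linear_lr(epoch * 667))
```

Under these assumptions the learning rate is halved by the end of epoch 10 and reaches zero at the final step.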

### Training results

| Training Loss | Epoch | Step  | Validation Loss | Precision | Recall | F1     | Accuracy |
|:-------------:|:-----:|:-----:|:---------------:|:---------:|:------:|:------:|:--------:|
| 0.1243        | 1.0   | 667   | 0.0669          | 0.7013    | 0.8412 | 0.7649 | 0.9787   |
| 0.0512        | 2.0   | 1334  | 0.0656          | 0.7825    | 0.8412 | 0.8108 | 0.9818   |
| 0.0221        | 3.0   | 2001  | 0.0744          | 0.7908    | 0.8501 | 0.8194 | 0.9822   |
| 0.0107        | 4.0   | 2668  | 0.1022          | 0.7940    | 0.8475 | 0.8199 | 0.9808   |
| 0.008         | 5.0   | 3335  | 0.1055          | 0.7818    | 0.8602 | 0.8191 | 0.9816   |
| 0.0057        | 6.0   | 4002  | 0.1173          | 0.8067    | 0.8590 | 0.832  | 0.9830   |
| 0.0027        | 7.0   | 4669  | 0.1188          | 0.8188    | 0.8501 | 0.8342 | 0.9834   |
| 0.0022        | 8.0   | 5336  | 0.1229          | 0.8080    | 0.8450 | 0.8261 | 0.9826   |
| 0.0019        | 9.0   | 6003  | 0.1341          | 0.8007    | 0.8526 | 0.8258 | 0.9834   |
| 0.0019        | 10.0  | 6670  | 0.1360          | 0.8045    | 0.8628 | 0.8326 | 0.9822   |
| 0.0011        | 11.0  | 7337  | 0.1376          | 0.8163    | 0.8640 | 0.8395 | 0.9838   |
| 0.0008        | 12.0  | 8004  | 0.1447          | 0.8007    | 0.8577 | 0.8282 | 0.9833   |
| 0.0006        | 13.0  | 8671  | 0.1381          | 0.8139    | 0.8615 | 0.8370 | 0.9839   |
| 0.0005        | 14.0  | 9338  | 0.1398          | 0.8297    | 0.8666 | 0.8477 | 0.9843   |
| 0.0004        | 15.0  | 10005 | 0.1404          | 0.8232    | 0.8640 | 0.8431 | 0.9842   |
| 0.0003        | 16.0  | 10672 | 0.1486          | 0.8329    | 0.8551 | 0.8439 | 0.9838   |
| 0.0           | 17.0  | 11339 | 0.1469          | 0.8114    | 0.8691 | 0.8393 | 0.9837   |
| 0.0002        | 18.0  | 12006 | 0.1500          | 0.8297    | 0.8602 | 0.8447 | 0.9843   |
| 0.0001        | 19.0  | 12673 | 0.1489          | 0.8315    | 0.8653 | 0.8481 | 0.9849   |
| 0.0           | 20.0  | 13340 | 0.1484          | 0.8325    | 0.8653 | 0.8486 | 0.9850   |
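The table can also be read programmatically to compare checkpoint-selection criteria. The sketch below copies the epoch, validation loss, and F1 columns from the table and finds the best epoch under each criterion; the variable names are illustrative.

```python
# (epoch, validation_loss, f1) copied from the training results table
results = [
    (1, 0.0669, 0.7649), (2, 0.0656, 0.8108), (3, 0.0744, 0.8194),
    (4, 0.1022, 0.8199), (5, 0.1055, 0.8191), (6, 0.1173, 0.832),
    (7, 0.1188, 0.8342), (8, 0.1229, 0.8261), (9, 0.1341, 0.8258),
    (10, 0.1360, 0.8326), (11, 0.1376, 0.8395), (12, 0.1447, 0.8282),
    (13, 0.1381, 0.8370), (14, 0.1398, 0.8477), (15, 0.1404, 0.8431),
    (16, 0.1486, 0.8439), (17, 0.1469, 0.8393), (18, 0.1500, 0.8447),
    (19, 0.1489, 0.8481), (20, 0.1484, 0.8486),
]

best_by_loss = min(results, key=lambda row: row[1])  # lowest validation loss
best_by_f1 = max(results, key=lambda row: row[2])    # highest F1

print("lowest validation loss:", best_by_loss)
print("highest F1:", best_by_f1)
```

Validation loss is lowest at epoch 2 and rises afterwards, while F1 peaks at the final epoch, so the two criteria would select different checkpoints. Which one to prefer depends on whether the downstream use cares more about calibrated token probabilities or entity-level F1.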

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.0+cu121

- Datasets 2.16.1

- Tokenizers 0.15.1

|

simonycl/llama-2-7b-hf-cohere-KMeansDynamic-0.05-Llama-2-7b-hf-2e-5

|

simonycl

| 2024-02-06T05:20:37Z

| 1

| 0

|

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:meta-llama/Llama-2-7b-hf",

"base_model:adapter:meta-llama/Llama-2-7b-hf",

"region:us"

] | null | 2024-02-06T01:53:38Z

|

---

library_name: peft

base_model: meta-llama/Llama-2-7b-hf

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.7.1

|

simonycl/llama-2-7b-hf-cohere-KMenasRandomDeita-0.05-Llama-2-7b-hf-2e-5

|

simonycl

| 2024-02-06T05:16:23Z

| 0

| 0

|

peft

|

[

"peft",

"safetensors",

"arxiv:1910.09700",

"base_model:meta-llama/Llama-2-7b-hf",

"base_model:adapter:meta-llama/Llama-2-7b-hf",

"region:us"

] | null | 2024-02-06T05:15:55Z

|

---

library_name: peft

base_model: meta-llama/Llama-2-7b-hf

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

- **Developed by:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Data Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Data Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

### Framework versions

- PEFT 0.8.2

|

shidowake/cyber2-7B-base-bnb-4bit

|

shidowake

| 2024-02-06T05:12:42Z

| 4

| 0

|

transformers

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"4-bit",

"bitsandbytes",

"region:us"

] |

text-generation

| 2024-02-06T05:11:07Z

|

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

xtuner/Qwen-7B-qlora-moss-003-sft

|

xtuner

| 2024-02-06T04:58:49Z

| 4

| 0

|

peft

|

[

"peft",

"conversational",

"dataset:fnlp/moss-003-sft-data",

"region:us"

] |

text-generation

| 2023-08-16T02:07:20Z

|

---

library_name: peft

pipeline_tag: conversational

datasets:

- fnlp/moss-003-sft-data

---

<div align="center">

<img src="https://github.com/InternLM/lmdeploy/assets/36994684/0cf8d00f-e86b-40ba-9b54-dc8f1bc6c8d8" width="600"/>

[](https://github.com/InternLM/xtuner)

</div>

## Model

Qwen-7B-qlora-moss-003-sft is fine-tuned from [Qwen-7B](https://huggingface.co/Qwen/Qwen-7B) with [moss-003-sft](https://huggingface.co/datasets/fnlp/moss-003-sft-data) dataset by [XTuner](https://github.com/InternLM/xtuner).

## Quickstart

### Usage with HuggingFace libraries

```python

import torch

from peft import PeftModel

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, StoppingCriteria

from transformers.generation import GenerationConfig

class StopWordStoppingCriteria(StoppingCriteria):
    """Stop generation once the decoded text ends with `stop_word`."""

    def __init__(self, tokenizer, stop_word):
        self.tokenizer = tokenizer
        self.stop_word = stop_word
        self.length = len(self.stop_word)

    def __call__(self, input_ids, *args, **kwargs) -> bool:
        # Decode the first sequence in the batch and ignore line breaks.
        cur_text = self.tokenizer.decode(input_ids[0])
        cur_text = cur_text.replace('\r', '').replace('\n', '')
        return cur_text[-self.length:] == self.stop_word

tokenizer = AutoTokenizer.from_pretrained('Qwen/Qwen-7B', trust_remote_code=True)

quantization_config = BitsAndBytesConfig(load_in_4bit=True, load_in_8bit=False, llm_int8_threshold=6.0, llm_int8_has_fp16_weight=False, bnb_4bit_compute_dtype=torch.float16, bnb_4bit_use_double_quant=True, bnb_4bit_quant_type='nf4')

model = AutoModelForCausalLM.from_pretrained('Qwen/Qwen-7B', quantization_config=quantization_config, device_map='auto', trust_remote_code=True).eval()

model = PeftModel.from_pretrained(model, 'xtuner/Qwen-7B-qlora-moss-003-sft')

gen_config = GenerationConfig(max_new_tokens=512, do_sample=True, temperature=0.1, top_p=0.75, top_k=40)

# Note: plugins are disabled in this example because the plugin API depends on additional implementations.
# To try plugins, use the XTuner CLI instead.

prompt_template = (

'You are an AI assistant whose name is Qwen.\n'

'Capabilities and tools that Qwen can possess.\n'

'- Inner thoughts: disabled.\n'

'- Web search: disabled.\n'

'- Calculator: disabled.\n'

'- Equation solver: disabled.\n'

'- Text-to-image: disabled.\n'

'- Image edition: disabled.\n'

'- Text-to-speech: disabled.\n'

'<|Human|>: {input}<eoh>\n'

'<|Inner Thoughts|>: None<eot>\n'

'<|Commands|>: None<eoc>\n'

'<|Results|>: None<eor>\n')

text = '请给我介绍五个上海的景点'

inputs = tokenizer(prompt_template.format(input=text), return_tensors='pt')

inputs = inputs.to(model.device)

pred = model.generate(**inputs, generation_config=gen_config, stopping_criteria=[StopWordStoppingCriteria(tokenizer, '<eom>')])

print(tokenizer.decode(pred.cpu()[0], skip_special_tokens=True))

"""

好的,以下是五个上海的景点介绍:

1. 上海博物馆:上海博物馆是中国最大的综合性博物馆之一,收藏了大量的历史文物和艺术品,包括青铜器、陶瓷、书画、玉器等。

2. 上海城隍庙:上海城隍庙是上海最古老的庙宇之一,建于明朝,是上海的标志性建筑之一。庙内有各种神像和文物,是了解上海历史文化的好去处。

3. 上海科技馆:上海科技馆是一座集科技、文化、教育为一体的综合性博物馆,展示了各种科技展品和互动体验项目,适合全家人一起参观。

4. 上海东方明珠塔:上海东方明珠塔是上海的标志性建筑之一,高468米。游客可以乘坐高速电梯到达观景台,欣赏上海的美景。

5. 上海迪士尼乐园:上海迪士尼乐园是中国第一个迪士尼主题公园,拥有各种游乐设施和表演节目,适合全家人一起游玩。

"""

```
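
The stop-word check used by `StopWordStoppingCriteria` can be tried without loading a model. The following is a minimal sketch, assuming a hypothetical helper named `ends_with_stop_word` (it is not part of XTuner or transformers). It mirrors the logic above: remove line breaks from the decoded text, then compare its tail with the stop word.

```python
def ends_with_stop_word(decoded_text: str, stop_word: str) -> bool:
    """Return True if the text, with line breaks removed, ends with stop_word.

    Hypothetical helper for illustration only; it repeats the string logic
    of StopWordStoppingCriteria.__call__ without needing a tokenizer.
    """
    cleaned = decoded_text.replace('\r', '').replace('\n', '')
    return cleaned[-len(stop_word):] == stop_word


if __name__ == '__main__':
    # A trailing newline after the stop word does not prevent a match.
    print(ends_with_stop_word('Hello!<eom>\n', '<eom>'))
    # No stop word yet, so generation would continue.
    print(ends_with_stop_word('Hello', '<eom>'))
```

Because line breaks are stripped before the comparison, a stop word that the tokenizer decodes with an embedded newline is still detected.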

### Usage with XTuner CLI

#### Installation

```shell

pip install -U xtuner

```

#### Chat

```shell

export SERPER_API_KEY="xxx" # Get a key from https://serper.dev to enable Google search.

xtuner chat Qwen/Qwen-7B --adapter xtuner/Qwen-7B-qlora-moss-003-sft --bot-name Qwen --prompt-template moss_sft --system-template moss_sft --with-plugins calculate solve search

```

#### Fine-tune

Use the following command to quickly reproduce the fine-tuning results.

```shell

NPROC_PER_NODE=8 xtuner train qwen_7b_qlora_moss_sft_all_e2_gpu8

```

|

varun-v-rao/bert-base-cased-lora-592K-snli-model1

|

varun-v-rao

| 2024-02-06T04:58:02Z

| 8

| 0

|

transformers

|

[

"transformers",

"tensorboard",

"safetensors",

"bert",

"text-classification",

"generated_from_trainer",

"base_model:google-bert/bert-base-cased",

"base_model:finetune:google-bert/bert-base-cased",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2024-02-06T03:07:32Z

|

---

license: apache-2.0

base_model: bert-base-cased

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: bert-base-cased-lora-592K-snli-model1

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-cased-lora-592K-snli-model1

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unspecified dataset (the Trainer recorded it as `None`; the model name suggests SNLI).

It achieves the following results on the evaluation set:

- Loss: 0.9110

- Accuracy: 0.6425

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 256

- eval_batch_size: 256

- seed: 95

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3
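
To make the `linear` scheduler setting concrete, here is a minimal sketch of how the learning rate would decay over training. It assumes zero warmup steps (the card does not list any) and takes the total of 6438 optimizer steps from the training results reported in this card. The function name `linear_lr` is hypothetical, not a library API.

```python
def linear_lr(step: int, base_lr: float = 2e-5, total_steps: int = 6438,
              warmup_steps: int = 0) -> float:
    """Learning rate under linear warmup followed by linear decay to zero.

    warmup_steps=0 is an assumption; the card does not report a warmup value.
    """
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    # Linear decay from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == '__main__':
    # Print the learning rate at the start and at each epoch boundary.
    for step in (0, 2146, 4292, 6438):
        print(step, linear_lr(step))
```

Under these assumptions the rate starts at the configured 2e-05 and reaches zero at the final step, so each later epoch trains with a smaller rate than the one before it.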

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.6263 | 1.0 | 2146 | 0.5487 | 0.7913 |

| 0.5726 | 2.0 | 4292 | 0.4945 | 0.8125 |

| 0.5543 | 3.0 | 6438 | 0.4860 | 0.8153 |
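
The step counts in the table can be used to estimate the size of the training set. This is a rough sketch, assuming a single device, no gradient accumulation, and that the last partial batch of each epoch is kept; none of these is stated in the card, and `estimate_train_size` is a hypothetical helper.

```python
def estimate_train_size(steps_per_epoch: int, batch_size: int) -> tuple[int, int]:
    """Return the (min, max) number of training examples implied by the step count.

    Assumes one optimizer step per batch and a final batch that may be partial.
    """
    max_examples = steps_per_epoch * batch_size
    min_examples = (steps_per_epoch - 1) * batch_size + 1
    return min_examples, max_examples


if __name__ == '__main__':
    # 2146 steps per epoch and train_batch_size 256 are taken from the card.
    low, high = estimate_train_size(steps_per_epoch=2146, batch_size=256)
    print(f'between {low} and {high} training examples')
```

With the reported values this gives between 549121 and 549376 examples per epoch. The step column is also internally consistent: 4292 and 6438 are exactly two and three times 2146.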

### Framework versions

- Transformers 4.35.2

- Pytorch 2.1.1+cu121

- Datasets 2.15.0

- Tokenizers 0.15.0

|

dvilasuero/DistilabelOpenHermes-2.5-mistral-7b-mix2

|

dvilasuero

| 2024-02-06T04:45:03Z

| 6

| 0

|

transformers

|

[

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"arxiv:1910.09700",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2024-02-06T04:42:30Z

|

---

library_name: transformers

tags: []

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]