COLD: Causal reasOning in cLosed Daily activities

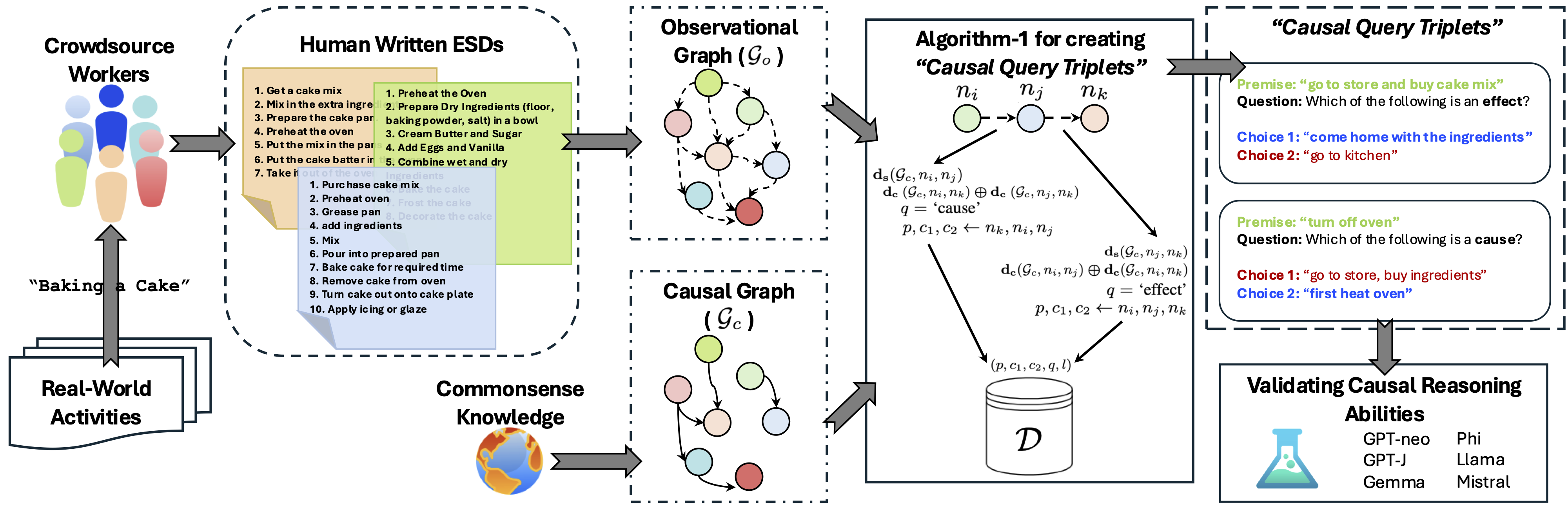

Figure: The proposed COLD framework for evaluating LLMs for causal reasoning. The human-written Event Sequence Descriptions (ESDs) are obtained from crowdsourced workers and consist of a telegram-style sequence of events describing how an activity is performed. The Observational Graph and the Causal Graph for an activity are used to create causal query triplets (details in Algorithm 1), shown towards the right. Using counterfactual reasoning, "going to the kitchen" is possible without going to the market (if the ingredients are already available), which makes "come home with the ingredients" the more plausible effect among the given choices. Similarly, in the second example, the event "going to the market" has no direct relation with the event "heating the oven".
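The exact triplet-construction procedure is Algorithm 1 in the paper and is not reproduced here. As a simplified, hypothetical illustration of the idea in the caption, the sketch below builds "effect" triplets from a made-up causal graph (`CAUSAL_EDGES`, `descendants`, `ancestors`, and `effect_triplets` are illustrative names, not part of the release): the plausible choice is a direct effect of the premise, and the distractor is an event with no directed path to or from the premise.

```python
from itertools import product

# Hypothetical toy causal graph for "baking a cake" (NOT taken from the COLD
# data): each event maps to the events it directly causes.
CAUSAL_EDGES = {
    "go to the market": ["come home with the ingredients"],
    "come home with the ingredients": ["mix the ingredients"],
    "go to the kitchen": ["heat the oven", "mix the ingredients"],
    "heat the oven": ["put the cake in the oven"],
    "mix the ingredients": ["put the cake in the oven"],
    "put the cake in the oven": [],
}


def descendants(graph, node):
    """All events reachable from `node` by following directed edges."""
    seen, stack = set(), list(graph[node])
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph[current])
    return seen


def ancestors(graph, node):
    """All events from which `node` is reachable."""
    return {other for other in graph if node in descendants(graph, other)}


def effect_triplets(graph, premise):
    """Build (premise, plausible effect, implausible choice) triplets.

    Plausible effect: a direct child of `premise` in the causal graph.
    Implausible choice: an event with no directed path to or from `premise`.
    """
    related = descendants(graph, premise) | ancestors(graph, premise) | {premise}
    unrelated = sorted(event for event in graph if event not in related)
    return [(premise, effect, other) for effect, other in product(graph[premise], unrelated)]


for triplet in effect_triplets(CAUSAL_EDGES, "go to the market"):
    print(triplet)
# ('go to the market', 'come home with the ingredients', 'go to the kitchen')
# ('go to the market', 'come home with the ingredients', 'heat the oven')
```

In this toy graph, "go to the kitchen" and "heat the oven" come out as distractors for the premise "go to the market", mirroring the two examples in the caption.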


This repository contains the official release of the following paper:

COLD: Causal reasOning in cLosed Daily activities

Authors: Abhinav Joshi*, Areeb Ahmad*, and Ashutosh Modi

Abstract: Large Language Models (LLMs) have shown state-of-the-art performance in a variety of tasks, including arithmetic and reasoning; however, to gauge the intellectual capabilities of LLMs, causal reasoning has become a reliable proxy for validating a general understanding of the mechanics and intricacies of the world similar to humans. Previous works in natural language processing (NLP) have either focused on open-ended causal reasoning via causal commonsense reasoning (CCR) or framed a symbolic representation-based question answering for theoretically backed-up analysis via a causal inference engine. The former adds an advantage of real-world grounding but lacks theoretically backed-up analysis/validation, whereas the latter is far from real-world grounding. In this work, we bridge this gap by proposing the COLD (Causal reasOning in cLosed Daily activities) framework, which is built upon human understanding of daily real-world activities to reason about the causal nature of events. We show that the proposed framework facilitates the creation of an enormous number of causal queries (∼9 million) and comes close to the mini-Turing test, simulating causal reasoning to evaluate the understanding of a daily real-world task. We evaluate multiple LLMs on the created causal queries and find that causal reasoning is challenging even for activities trivial to humans. We further explore the causal reasoning abilities of LLMs using the backdoor criterion to determine the causal strength between events.
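The abstract mentions the backdoor criterion. As a generic illustration of backdoor adjustment (not the estimator used in the paper, whose details are in the paper itself), the sketch below applies P(Y | do(X)) = Σ_z P(Y | X, Z = z) P(Z = z) to made-up binary observations, assuming `Z` is a valid backdoor adjustment set for X → Y. The helpers `backdoor_effect` and `naive_effect` are hypothetical.

```python
from collections import Counter


def backdoor_effect(samples):
    """Estimate P(Y=1 | do(X=1)) - P(Y=1 | do(X=0)) by backdoor adjustment.

    `samples` is a list of (z, x, y) tuples with binary x and y. Z is assumed
    to satisfy the backdoor criterion relative to X -> Y.
    """
    n = len(samples)
    z_counts = Counter(z for z, _, _ in samples)

    def p_y_given_do_x(x):
        total = 0.0
        for z, n_z in z_counts.items():
            ys = [y for zz, xx, y in samples if zz == z and xx == x]
            if not ys:
                raise ValueError(f"no samples with Z={z}, X={x} (positivity violated)")
            # P(Y=1 | X=x, Z=z) * P(Z=z)
            total += (sum(ys) / len(ys)) * (n_z / n)
        return total

    return p_y_given_do_x(1) - p_y_given_do_x(0)


def naive_effect(samples):
    """Unadjusted contrast P(Y=1 | X=1) - P(Y=1 | X=0), for comparison."""
    def p_y_given_x(x):
        ys = [y for _, xx, y in samples if xx == x]
        return sum(ys) / len(ys)

    return p_y_given_x(1) - p_y_given_x(0)


# Made-up (z, x, y) observations in which Z confounds X and Y.
samples = (
    [(0, 0, 0)] * 3 + [(0, 0, 1)]  # Z=0, X=0: P(Y=1) = 0.25
    + [(0, 1, 1), (0, 1, 0)]       # Z=0, X=1: P(Y=1) = 0.5
    + [(1, 0, 0), (1, 0, 1)]       # Z=1, X=0: P(Y=1) = 0.5
    + [(1, 1, 1)] * 4              # Z=1, X=1: P(Y=1) = 1.0
)
print(backdoor_effect(samples))         # 0.375
print(round(naive_effect(samples), 3))  # 0.5
```

The gap between the adjusted (0.375) and unadjusted (0.5) contrasts is the confounding bias that adjusting for `Z` removes in this toy setting.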

Loading the Dataset

You can load the dataset directly from Hugging Face using the following code:

```python
from datasets import load_dataset

activity = "shopping"  # pick one among the available configs for different activities: ['shopping', 'cake', 'train', 'tree', 'bus']
dataset = load_dataset("abhinav-joshi/cold", activity)
```
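Each record carries `premise`, `choice1`, `choice2`, `label`, and `question` fields (see the example record in the MCQA snippet). A minimal sketch for recovering the gold option, assuming `label` is a 0-based index stored as a string; this is consistent with the example record, where label `'1'` selects choice2 ("get the bill for groceries.") as the effect of "navigate to checkout.". `gold_answer` is a hypothetical helper, not part of the dataset or the `datasets` library.

```python
from string import ascii_uppercase


def gold_answer(record):
    """Return (option letter, option text) of the correct choice.

    Assumption: `label` is a 0-based index stored as a string
    ('0' -> choice1 / A, '1' -> choice2 / B).
    """
    idx = int(record["label"])
    choices = [record["choice1"], record["choice2"]]
    return ascii_uppercase[idx], choices[idx]


# Example record, as shown for dataset["train"][0] of the "shopping" config
record = {'premise': 'navigate to checkout.', 'choice1': 'walk in store.',
          'choice2': 'get the bill for groceries.', 'label': '1', 'question': 'effect'}
print(gold_answer(record))  # ('B', 'get the bill for groceries.')
```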

Generating MCQA Queries

To turn the loaded dataset records into multiple-choice question answering (MCQA) prompts, you can use the following code snippet:

```python
import numpy as np
from string import ascii_uppercase
from datasets import load_dataset


def prompt_templates_mcqa():
    """
    Return the MCQA prompt templates. The prompts follow this general shape:

    Consider the activity of {activity name}.
    [ in-context examples (if few-shot/in-context learning experiment) ]
    Which of the following events (given as options A or B) is a plausible
    {cause/effect} of the event {premise}?
    A. {choice1}
    B. {choice2}
    Answer: A

    The following are multiple choice questions about {activity name}. You should directly
    answer the question by choosing the correct option.
    [ in-context examples (if few-shot/in-context learning experiment) ]
    Which of the following events (given as options A or B) is a plausible
    {cause/effect} of the event {premise}?
    A. {choice1}
    B. {choice2}
    Answer: A
    """
    prompt_templates = {
        "template1": lambda activity_name, premise, choices, causal_question: f"Consider the activity of '{activity_name}'. Which of the following events (given as options A or B) is a more plausible {causal_question} of the event '{premise}'?\n" + "\n".join([f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]) + "\nAnswer:",
        "template2": lambda activity_name, premise, choices, causal_question: f"Consider the activity of '{activity_name}'. Which of the following events (given as options A or B) is a plausible {causal_question} of the event '{premise}'?\n" + "\n".join([f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]) + "\nAnswer:",
        "template3": lambda activity_name, premise, choices, causal_question: f"While performing the activity '{activity_name}', which of the following events (given as options A or B), will be a more plausible {causal_question} of the event '{premise}'?\n" + "\n".join([f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]) + "\nAnswer:",
        "template4": lambda activity_name, premise, choices, causal_question: f"In the context of the activity '{activity_name}', which of the following events (given as options A or B) is a plausible {causal_question} of the event '{premise}'?\n" + "\n".join([f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]) + "\nAnswer:",
        "template5": lambda activity_name, premise, choices, causal_question: f"The following are multiple choice questions about '{activity_name}'. You should directly answer the question by choosing the correct option.\nWhich of the following events (given as options A or B) is a plausible {causal_question} of the event '{premise}'?\n" + "\n".join([f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]) + "\nAnswer:",
    }
    return prompt_templates


def get_question_text(activity_name, premise, choices, causal_question, template="random"):
    prompt_templates = prompt_templates_mcqa()
    # Sample one of the templates at random unless a specific template id is given
    if template == "random":
        template_id = np.random.choice(list(prompt_templates.keys()))
    else:
        template_id = template
    template = prompt_templates[template_id]
    template_text = template(activity_name, premise, choices, causal_question)
    return template_text


# Example usage
activity = "shopping"  # pick one among the available configs for different activities: ['shopping', 'cake', 'train', 'tree', 'bus']

# Natural-language activity names used inside the prompts
activities_dict = {
    "shopping": "going grocery shopping",
    "cake": "baking a cake",
    "train": "going on a train",
    "tree": "planting a tree",
    "bus": "riding on a bus",
}
activity_name = activities_dict[activity]

dataset = load_dataset("abhinav-joshi/cold", activity)

# Example record
print(dataset["train"][0])
# {'premise': 'navigate to checkout.', 'choice1': 'walk in store.', 'choice2': 'get the bill for groceries.', 'label': '1', 'question': 'effect'}

premise = dataset["train"][0]["premise"]
choices = [dataset["train"][0]["choice1"], dataset["train"][0]["choice2"]]
causal_question = dataset["train"][0]["question"]  # "cause" or "effect"

question_text = get_question_text(activity_name, premise, choices, causal_question)
print(question_text)

# Example output (produced by "template2"; templates are sampled at random by
# default, so the wording may differ between runs):
# Consider the activity of 'going grocery shopping'. Which of the following events (given as options A or B) is a plausible effect of the event 'navigate to checkout.'?
# A. walk in store.
# B. get the bill for groceries.
# Answer:
```
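The docstring above leaves a slot for in-context examples in few-shot experiments. A minimal sketch of how such a prompt might be assembled, assuming template2-style wording, a 0-based string `label`, and hypothetical helpers `format_block` and `build_few_shot_prompt` (the exact few-shot format used in the paper may differ):

```python
from string import ascii_uppercase


def format_block(premise, choices, causal_question, answer=None):
    """One question block; `answer` is an option letter for demos, None for the query."""
    lines = [
        "Which of the following events (given as options A or B) is a plausible "
        f"{causal_question} of the event '{premise}'?"
    ]
    lines += [f"{ascii_uppercase[i]}. {choice}" for i, choice in enumerate(choices)]
    lines.append("Answer:" if answer is None else f"Answer: {answer}")
    return "\n".join(lines)


def build_few_shot_prompt(activity_name, demos, query):
    """Prepend solved in-context demos to an unsolved query.

    `demos` and `query` are dicts with the dataset fields; `label` is assumed
    to be a 0-based index stored as a string.
    """
    parts = [f"Consider the activity of '{activity_name}'."]
    for demo in demos:
        answer = ascii_uppercase[int(demo["label"])]
        parts.append(format_block(demo["premise"], [demo["choice1"], demo["choice2"]], demo["question"], answer))
    parts.append(format_block(query["premise"], [query["choice1"], query["choice2"]], query["question"]))
    return "\n\n".join(parts)


# Demo: the example record shown above
demo = {'premise': 'navigate to checkout.', 'choice1': 'walk in store.',
        'choice2': 'get the bill for groceries.', 'label': '1', 'question': 'effect'}
# Query: a made-up record for illustration only (not taken from the dataset)
query = {'premise': 'get the bill for groceries.', 'choice1': 'navigate to checkout.',
         'choice2': 'walk out of the store.', 'label': '0', 'question': 'cause'}
print(build_few_shot_prompt("going grocery shopping", [demo], query))
```

In practice the demos could be drawn from other records of the same split, for example by iterating over `dataset["train"].select(range(1, 4))`, which yields dicts with the same fields.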

Citation

COLD: Causal reasOning in cLosed Daily activities, 2024. In the Thirty-eighth Annual Conference on Neural Information Processing Systems (NeurIPS’24), Vancouver, Canada.

```bibtex
@misc{cold,
  title={COLD: Causal reasOning in cLosed Daily activities},
  author={Abhinav Joshi and Areeb Ahmad and Ashutosh Modi},
  year={2024},
  eprint={2411.19500},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2411.19500},
}

@inproceedings{joshi2024cold,
  title={{COLD}: Causal reasOning in cLosed Daily activities},
  author={Abhinav Joshi and Areeb Ahmad and Ashutosh Modi},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems},
  year={2024},
  url={https://openreview.net/forum?id=7Mo1NOosNT}
}
```

License
