The little imp pushes—

With all of her might,

To put those 7B neurons,

In a roleplay tonight,

With a huge context window—

But not enough brains,

The 7B Imp tries—

But she's just extending the pain.

Available quantizations:

- Original: FP16

- GGUF: Static Quants | iMatrix_GGUF | High-Attention | iMatrix-High-Attention

- EXL2: 6.0 bpw | 7.0 bpw | 8.0 bpw

- Specialized: FP8

- Mobile (ARM): Q4_0 | Q4_0_High-Attention

TL;DR

- Supreme context: One million tokens to play with.

- Fresh Roleplay vibe: Internet RP format. It's still a 7B, so it's not as good as MIQU, but it is surprisingly fresh.

- Qwen smarts built-in, but naughty and playful: Cheeky, sometimes outright rude. Yup, it's just right.

- VERY compliant, with low censorship.

Important: Make sure to use the correct settings!

Model Details

Intended use: Role-Play, Creative Writing, General Tasks.

Censorship level: Medium

4 / 10 (10 = completely uncensored)

UGI score:

More details

It's similar to the bigger Impish_QWEN_14B-1M but was made with a slightly different process. It also wasn't cooked too hard, as I was afraid to fry the poor 7B model's brain. This model was trained with more creative writing and less unalignment than its bigger counterpart, although it should still allow for total freedom in both role-play and creative writing.

Recommended settings for assistant mode

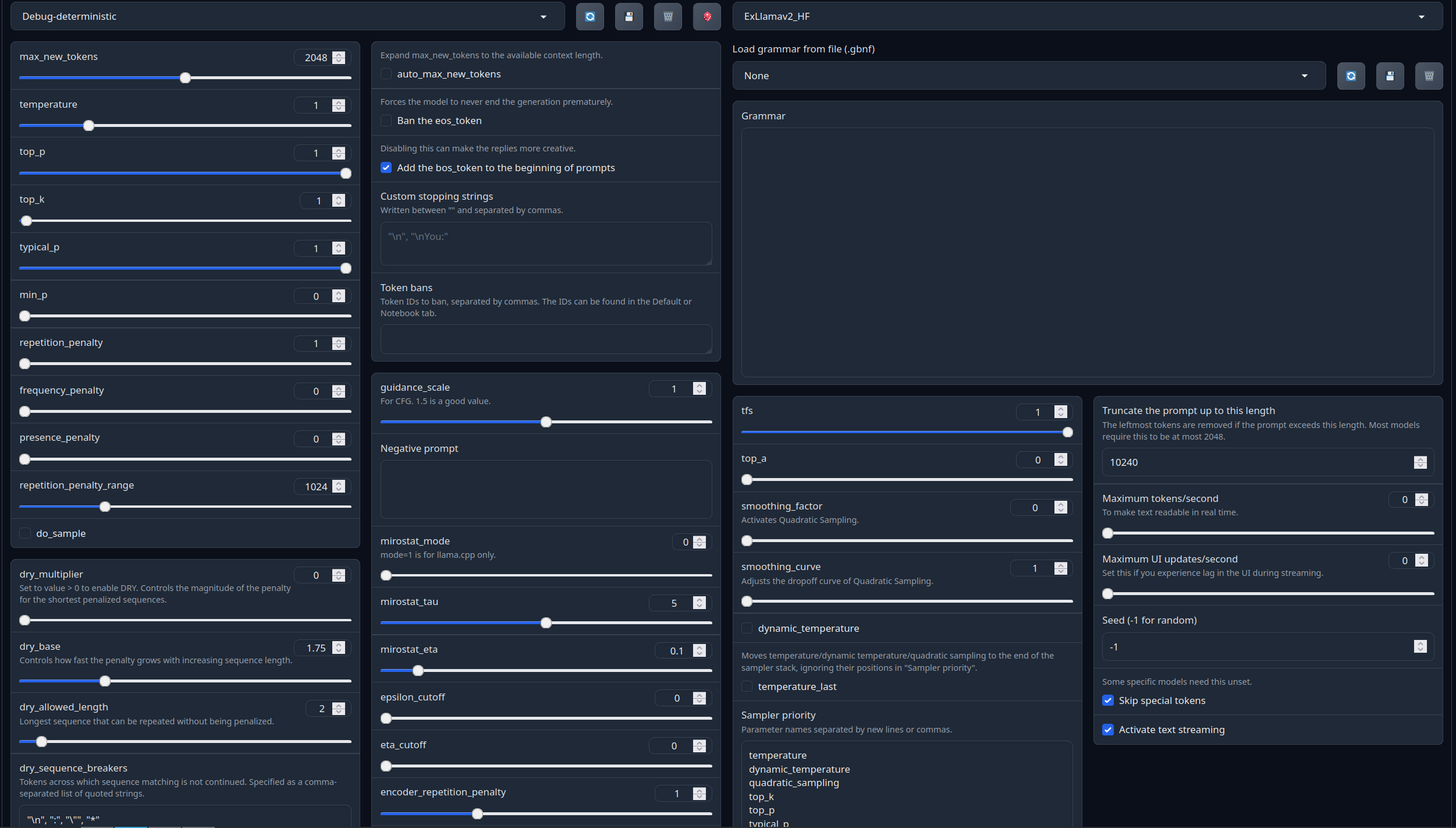

Full generation settings: Debug Deterministic.

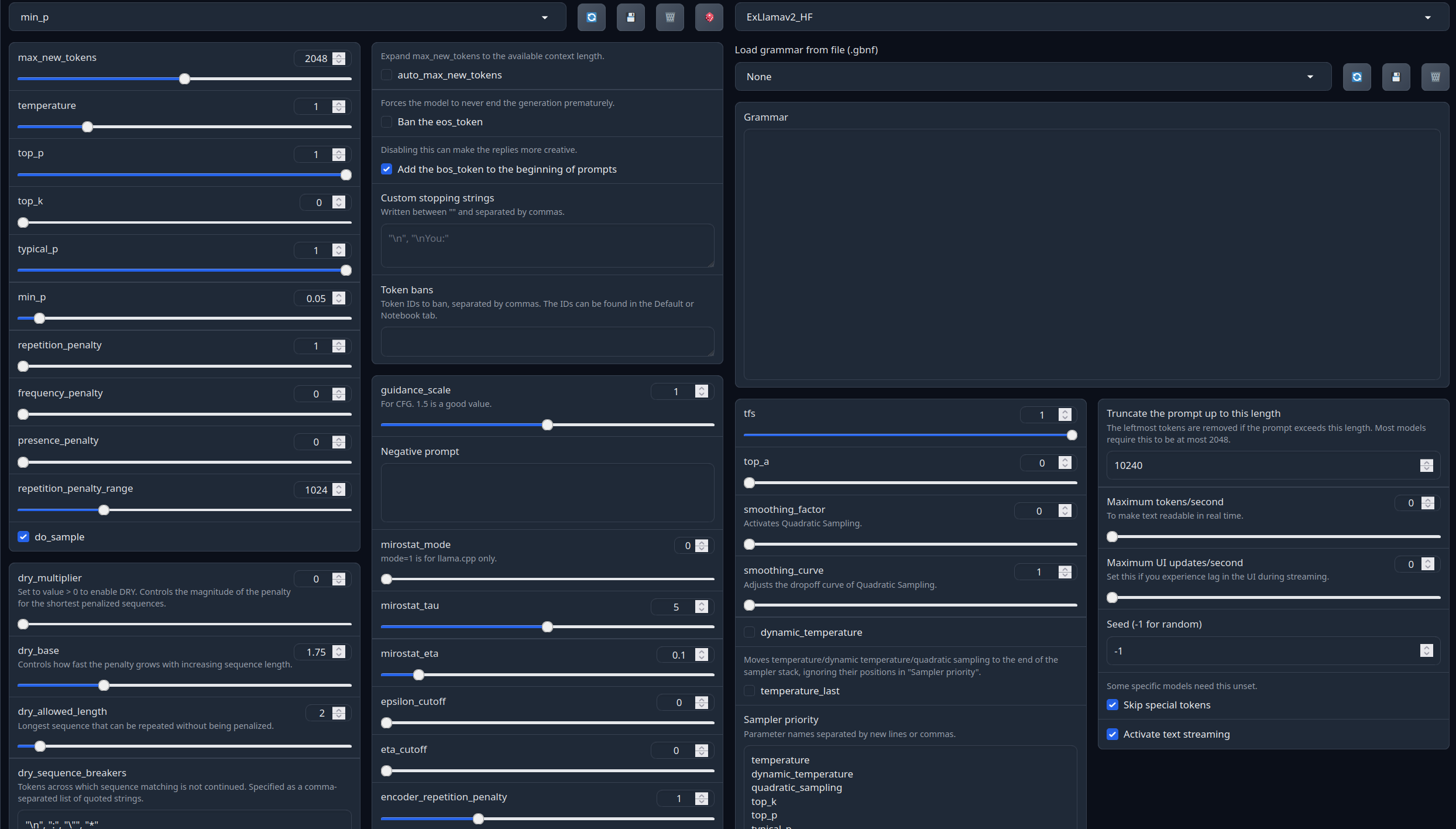

Full generation settings: min_p.

Recommended settings for Roleplay mode

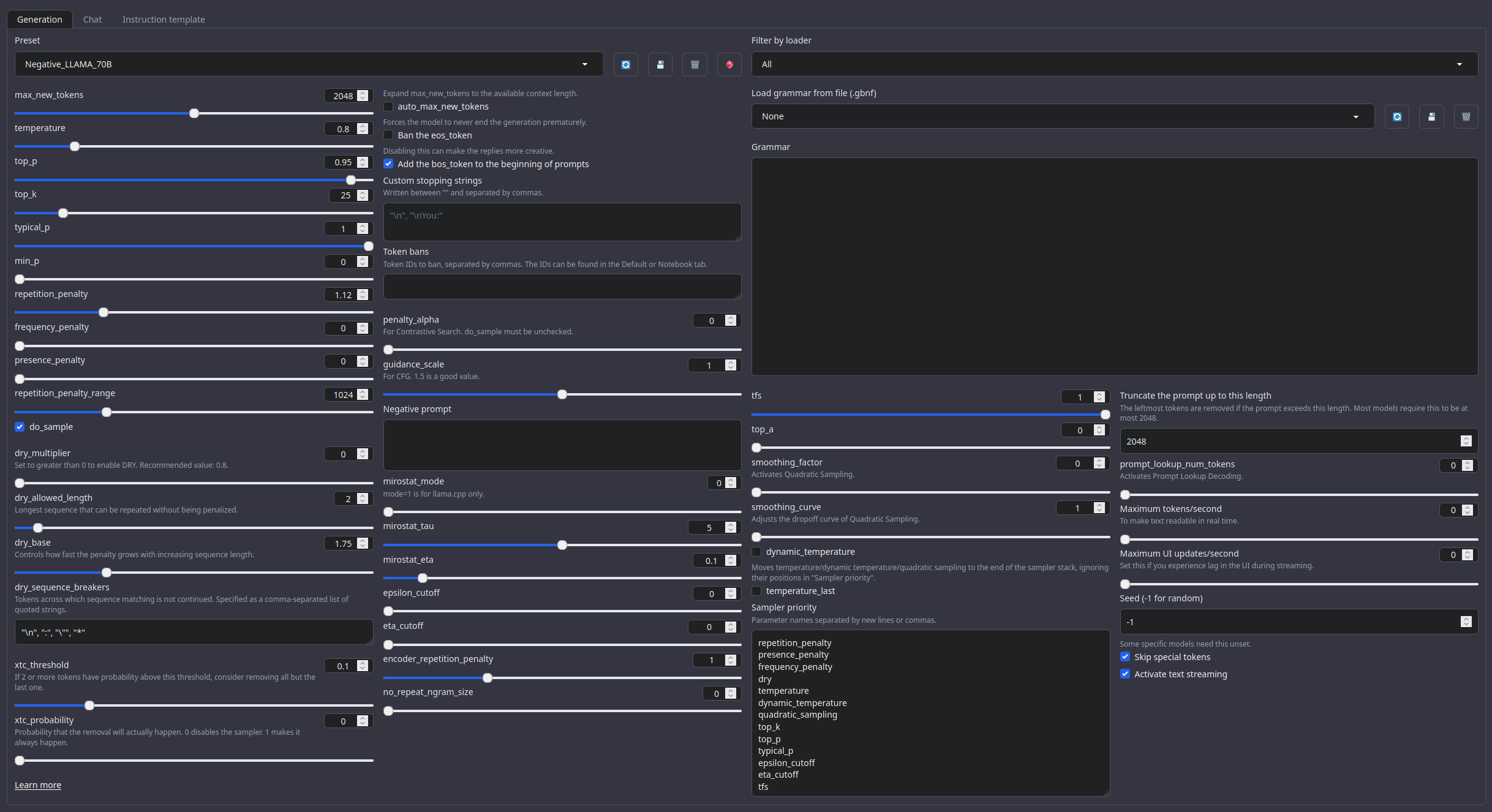

Roleplay settings.

A good repetition_penalty range is 1.12 to 1.15; feel free to experiment. With these settings, each output message should be neatly displayed in 1 to 3 paragraphs, with 1 to 2 being the most common. A simple message ("What was your name again?") gets a single-paragraph response.

min_p for RP works too, but it is more likely to put everything into one large paragraph instead of a neatly formatted short one. Feel free to switch between the two.

(Open the image in a new window to better see the full details)

temperature: 0.8

top_p: 0.95

top_k: 25

typical_p: 1

min_p: 0

repetition_penalty: 1.12

repetition_penalty_range: 1024
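
For scripted use, the roleplay values above can be kept in one place. The following is a minimal sketch: the dictionary keys mirror the names in the list, but whether a given backend accepts exactly these keys is an assumption, and `in_recommended_range` is a hypothetical helper, not part of any library.

```python
# Roleplay sampler values copied from the list above.
# Key names mirror the list; backend support for each key is an assumption.
ROLEPLAY_SETTINGS = {
    "temperature": 0.8,
    "top_p": 0.95,
    "top_k": 25,
    "typical_p": 1.0,
    "min_p": 0.0,
    "repetition_penalty": 1.12,
    "repetition_penalty_range": 1024,
}


def in_recommended_range(repetition_penalty):
    """Hypothetical helper: True if the value lies in the 1.12 to 1.15 range."""
    return 1.12 <= repetition_penalty <= 1.15


if __name__ == "__main__":
    value = ROLEPLAY_SETTINGS["repetition_penalty"]
    print(value, in_recommended_range(value))
```

Values outside the range are not errors; the helper only flags when an experiment has left the suggested window.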

Other recommended generation Presets:

Midnight Enigma

max_new_tokens: 512

temperature: 0.98

top_p: 0.37

top_k: 100

typical_p: 1

min_p: 0

repetition_penalty: 1.18

do_sample: True

Divine Intellect

max_new_tokens: 512

temperature: 1.31

top_p: 0.14

top_k: 49

typical_p: 1

min_p: 0

repetition_penalty: 1.17

do_sample: True

simple-1

max_new_tokens: 512

temperature: 0.7

top_p: 0.9

top_k: 20

typical_p: 1

min_p: 0

repetition_penalty: 1.15

do_sample: True
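
The three presets above can be sketched as a lookup table, which makes it easy to switch between them from a script. The values are copied from the lists; `get_preset` is a hypothetical helper, and the key names are assumed to match whatever backend consumes them.

```python
# Preset values copied from the lists above.
PRESETS = {
    "Midnight Enigma": {
        "max_new_tokens": 512, "temperature": 0.98, "top_p": 0.37,
        "top_k": 100, "typical_p": 1.0, "min_p": 0.0,
        "repetition_penalty": 1.18, "do_sample": True,
    },
    "Divine Intellect": {
        "max_new_tokens": 512, "temperature": 1.31, "top_p": 0.14,
        "top_k": 49, "typical_p": 1.0, "min_p": 0.0,
        "repetition_penalty": 1.17, "do_sample": True,
    },
    "simple-1": {
        "max_new_tokens": 512, "temperature": 0.7, "top_p": 0.9,
        "top_k": 20, "typical_p": 1.0, "min_p": 0.0,
        "repetition_penalty": 1.15, "do_sample": True,
    },
}


def get_preset(name, **overrides):
    """Hypothetical helper: return a copy of a preset with optional overrides."""
    preset = dict(PRESETS[name])  # copy so the table itself is never mutated
    preset.update(overrides)
    return preset


if __name__ == "__main__":
    print(get_preset("simple-1", max_new_tokens=256))
```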

Roleplay format: Classic Internet RP

*action* speech *narration*

Regarding the format:

It is HIGHLY RECOMMENDED to use the Roleplay / Adventure format the model was trained on; see the examples below for syntax. It allows character cards to be written quickly and easily with a minimal number of tokens. It's a modification of an old-skool CAI style format I call SICAtxt (Simple, Inexpensive Character Attributes plain-text):

SICAtxt for roleplay:

X's Persona: X is a .....

Traits:

Likes:

Dislikes:

Quirks:

Goals:

Dialogue example

SICAtxt for Adventure:

Adventure: <short description>

$World_Setting:

$Scenario:
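
As an illustration, the two SICAtxt layouts above could be filled in programmatically. This is only a sketch: the field labels come from the templates, but the comma-separated values, the single-newline separators, and the function names are assumptions, and the example character is made up.

```python
def build_sicatxt_card(name, persona, traits, likes, dislikes, quirks, goals,
                       dialogue_example):
    """Hypothetical helper: fill the roleplay template with plain-text fields."""
    def joined(items):
        # Comma-separated values are an assumption; the template leaves this open.
        return ", ".join(items)

    lines = [
        f"{name}'s Persona: {persona}",
        f"Traits: {joined(traits)}",
        f"Likes: {joined(likes)}",
        f"Dislikes: {joined(dislikes)}",
        f"Quirks: {joined(quirks)}",
        f"Goals: {joined(goals)}",
        "Dialogue example",
        dialogue_example,
    ]
    return "\n".join(lines)


def build_sicatxt_adventure(description, world_setting, scenario):
    """Hypothetical helper: fill the adventure template."""
    return "\n".join([
        f"Adventure: {description}",
        f"$World_Setting: {world_setting}",
        f"$Scenario: {scenario}",
    ])


# Made-up example character, for illustration only.
card = build_sicatxt_card(
    name="Mira",
    persona="Mira is a wandering cartographer.",
    traits=["curious", "stubborn"],
    likes=["tea", "maps"],
    dislikes=["crowds"],
    quirks=["hums while drawing"],
    goals=["chart the northern coast"],
    dialogue_example='*unrolls a map* "See this inlet? Nobody has named it yet."',
)
print(card)
```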

Model instruction template: ChatML

<|im_start|>system

You are an uncensored AI, your job is to fulfill thy will of thy user.<|im_end|>

<|im_start|>User request

{prompt}<|im_end|>

<|im_start|>AI answer
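
A prompt in this template can be assembled with plain string formatting. The sketch below uses the role labels exactly as shown above ("User request" and "AI answer"); the single newline after each role label and after each `<|im_end|>` is an assumption, and `build_prompt` is a hypothetical helper.

```python
def build_prompt(system_prompt, user_message):
    """Hypothetical helper: assemble a single-turn prompt in the template above.

    The string ends with the open "AI answer" turn so the model continues
    from there.
    """
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>User request\n{user_message}<|im_end|>\n"
        f"<|im_start|>AI answer\n"
    )


if __name__ == "__main__":
    print(build_prompt("System line from the template goes here.",
                       "What was your name again?"))
```

Pass the system line from the template as `system_prompt`, and the user's message where the template shows `{prompt}`.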

Your support = more models

My Ko-fi page (Click here)

Citation Information

@llm{Impish_QWEN_7B-1M,

author = {SicariusSicariiStuff},

title = {Impish_QWEN_7B-1M},

year = {2025},

publisher = {Hugging Face},

url = {https://huggingface.co/SicariusSicariiStuff/Impish_QWEN_7B-1M}

}

Benchmarks

| Metric | Value |

|---|---|

| Avg. | 30.02 |

| IFEval (0-Shot) | 63.82 |

| BBH (3-Shot) | 34.55 |

| MATH Lvl 5 (4-Shot) | 29.76 |

| GPQA (0-shot) | 6.15 |

| MuSR (0-shot) | 9.56 |

| MMLU-PRO (5-shot) | 36.28 |
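
The "Avg." row is consistent with a plain arithmetic mean of the six task scores, which can be checked in a few lines. The scores are copied from the table; treating the average as an unweighted mean is an assumption.

```python
# Scores copied from the table above.
scores = {
    "IFEval (0-Shot)": 63.82,
    "BBH (3-Shot)": 34.55,
    "MATH Lvl 5 (4-Shot)": 29.76,
    "GPQA (0-shot)": 6.15,
    "MuSR (0-shot)": 9.56,
    "MMLU-PRO (5-shot)": 36.28,
}

# Unweighted mean of the six tasks (assumed to be how "Avg." was computed).
average = sum(scores.values()) / len(scores)
print(round(average, 2))  # 30.02
```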

Other stuff

- SLOP_Detector: Nuke GPTisms, with SLOP detector.

- LLAMA-3_8B_Unaligned: The grand project that started it all.

- Blog and updates (Archived): Some updates, some rambles, sort of a mix between a diary and a blog.
