Theta-35

Introduction

Theta-35 is the advanced reasoning model in SVECTOR's Theta series. It specializes in complex thinking and reasoning and, compared with conventional instruction-tuned models, achieves significantly better performance on downstream tasks, particularly on challenging problems that require deep logical analysis and multistep reasoning.

This repo contains the Theta-35 model, which has the following features:

- Training Stage: Pretraining & Post-training (Supervised Finetuning and Reinforcement Learning)

- Architecture: Transformer with RoPE, SwiGLU, RMSNorm, and attention QKV bias

- Number of Parameters: 33B

- Number of Parameters (Non-Embedding): 33B

- Number of Layers: 64

- Number of Attention Heads (GQA): 40 for Q and 8 for KV

- Context Length: Full 131,072 tokens

- Sliding Window: 32,768 tokens
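The GQA layout above determines how much key/value state must be cached during generation. The sketch below turns the listed figures (64 layers, 40 query heads, 8 KV heads, 131,072-token context) into a rough per-sequence KV-cache estimate. The head dimension of 128 and the 2-byte (bf16) element size are assumptions not stated in this card; substitute the values from the model's `config.json`.

```python
# Rough KV-cache sizing for a GQA transformer, using the figures listed above.
# ASSUMPTIONS (not stated in this card): head_dim=128, 2 bytes per element (bf16).

NUM_LAYERS = 64
NUM_Q_HEADS = 40
NUM_KV_HEADS = 8
CONTEXT_LENGTH = 131_072


def query_heads_per_kv_head(num_q_heads: int, num_kv_heads: int) -> int:
    """Number of query heads that share one key/value head under GQA."""
    if num_q_heads % num_kv_heads:
        raise ValueError("query heads must be divisible by KV heads in GQA")
    return num_q_heads // num_kv_heads


def kv_cache_bytes(num_tokens: int, num_layers: int = NUM_LAYERS,
                   num_kv_heads: int = NUM_KV_HEADS, head_dim: int = 128,
                   bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence of num_tokens."""
    # Leading factor 2: both a key and a value vector are stored per head, per layer
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * num_tokens


if __name__ == "__main__":
    group = query_heads_per_kv_head(NUM_Q_HEADS, NUM_KV_HEADS)
    per_token = kv_cache_bytes(1)
    full = kv_cache_bytes(CONTEXT_LENGTH)
    # Same model if every query head had its own KV head (plain multi-head attention)
    mha_full = kv_cache_bytes(CONTEXT_LENGTH, num_kv_heads=NUM_Q_HEADS)
    print(f"{group} query heads share each KV head")
    print(f"KV cache per token: {per_token / 1024:.0f} KiB")
    print(f"KV cache at {CONTEXT_LENGTH:,} tokens: {full / 2**30:.0f} GiB "
          f"(vs {mha_full / 2**30:.0f} GiB without GQA)")
```

Under those assumptions the script reports 5 query heads per KV head, 256 KiB of cache per token, and 32 GiB per sequence at the full context, versus 160 GiB if each query head had its own KV head.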

Note: For the best experience, please review the usage guidelines before deploying Theta models.

For more details, please refer to our documentation.

Requirements

Theta-35 requires a recent version of Hugging Face `transformers`; we recommend version 4.43.1 or newer.

With older versions of `transformers`, you may encounter the following error:

```
KeyError: 'theta'
```

Quickstart

Here is a code snippet showing how to load the tokenizer and model, and how to generate content:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Load the model and tokenizer from the Hugging Face Hub
model_name = "SVECTOR-CORPORATION/Theta-35"

model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",  # use the dtype stored in the checkpoint
    device_map="auto",   # place layers on available devices (requires accelerate)
)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Prepare the prompt with the chat template
prompt = "How many planets are in our solar system? Explain your reasoning."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,  # This will automatically add the "<reasoning>" tag
)

# Generate a response with the recommended sampling settings
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=32768,
    do_sample=True,  # needed for temperature/top_p/top_k to take effect
    temperature=0.6,
    top_p=0.95,
    top_k=30,
)

# Drop the prompt tokens so only the newly generated tokens remain
generated_ids = [
    output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)
]

# Decode and print the response
response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]
print(response)
```
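The quickstart relies on the chat template to open the `<reasoning>` block. If you build prompt strings by hand, or want to separate the reasoning trace from the final answer, small helpers like the ones below may help. They are hypothetical utilities, not part of the model or `transformers`, and the closing tag `</reasoning>` is an assumption this card does not document: verify it against the tokenizer's chat template and real outputs. If the tags are registered as special tokens, decode with `skip_special_tokens=False` before splitting, otherwise they are removed.

```python
from typing import Optional, Tuple

REASONING_OPEN = "<reasoning>"
# ASSUMPTION: hypothetical closing tag; confirm it in the chat template / outputs.
REASONING_CLOSE = "</reasoning>"


def ensure_reasoning_prefix(prompt_text: str) -> str:
    """Make a manually built prompt end with "<reasoning>\\n", as the usage guidelines recommend."""
    if prompt_text.endswith(REASONING_OPEN + "\n"):
        return prompt_text
    if prompt_text.endswith(REASONING_OPEN):
        return prompt_text + "\n"
    return prompt_text + REASONING_OPEN + "\n"


def split_reasoning(response: str, close_tag: str = REASONING_CLOSE) -> Tuple[str, Optional[str]]:
    """Split a decoded response into (reasoning, final_answer).

    final_answer is None when the closing tag is missing, e.g. because generation
    stopped mid-reasoning or the tag was stripped during decoding.
    """
    body = response.removeprefix(REASONING_OPEN)  # Python 3.9+
    reasoning, sep, answer = body.partition(close_tag)
    if not sep:
        return body.strip(), None
    return reasoning.strip(), answer.strip()
```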

Usage Guidelines

To achieve optimal performance with Theta-35, we recommend the following settings:

Enforce Thoughtful Output: Ensure the model starts with "<reasoning>\n" to promote step-by-step thinking, which enhances output quality. If you use `apply_chat_template` and set `add_generation_prompt=True`, this is handled automatically.

Sampling Parameters:

- Use Temperature=0.6 and TopP=0.95 instead of greedy decoding to avoid repetition.

- Use TopK between 20 and 40 to filter out rare token occurrences while maintaining diversity.
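To make these settings concrete, here is a simplified pure-Python sketch of temperature scaling followed by top-k and top-p (nucleus) filtering over one vector of logits. It follows the common order (temperature, then top-k, then top-p) but is an illustration, not the `transformers` implementation.

```python
import math
import random
from typing import Dict, List


def filter_probs(logits: List[float], temperature: float = 0.6, top_k: int = 30,
                 top_p: float = 0.95) -> Dict[int, float]:
    """Return {token_id: probability} after temperature, top-k, and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0 (temperature 0 corresponds to greedy decoding)")
    # 1. Temperature: divide logits, then softmax (subtract the max for numerical stability)
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # 2. Top-k: keep the k most likely tokens and renormalize
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    z = sum(probs[i] for i in top)
    ranked = [(i, probs[i] / z) for i in top]

    # 3. Top-p: keep the smallest prefix whose cumulative probability reaches top_p
    kept, cumulative = [], 0.0
    for i, p in ranked:
        kept.append((i, p))
        cumulative += p
        if cumulative >= top_p:
            break

    z = sum(p for _, p in kept)
    return {i: p / z for i, p in kept}


def sample_token(logits: List[float], rng: random.Random, **settings) -> int:
    """Draw one token id from the filtered distribution."""
    dist = filter_probs(logits, **settings)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


if __name__ == "__main__":
    logits = [5.0, 4.0, 1.0, 0.0, -3.0]
    print(filter_probs(logits))
    print(sample_token(logits, random.Random(0)))
```

Lower temperature sharpens the distribution, top-k caps how many candidates survive, and top-p trims the low-probability tail that remains; together they keep some diversity without the repetition loops greedy decoding can fall into.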

Standardize Output Format: We recommend using prompts to standardize model outputs when benchmarking.

- Math Problems: Include "Please reason step by step, and put your final answer within \boxed{}." in the prompt.

- Multiple-Choice Questions: Add "Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`." to the prompt.
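When benchmarking, it can help to attach these instructions programmatically and parse the answers back out. The sketch below is a hypothetical helper set: the suffix strings come from the guidelines above, while the parsing logic (last `\boxed{...}` with nested braces, last `"answer": "X"` field) is an assumption about how you might score outputs, not an official evaluation script.

```python
import re
from typing import Optional

# Prompt suffixes recommended in the usage guidelines above
MATH_SUFFIX = "Please reason step by step, and put your final answer within \\boxed{}."
MCQ_SUFFIX = 'Please show your choice in the `answer` field with only the choice letter, e.g., `"answer": "C"`.'


def build_benchmark_prompt(question: str, kind: str) -> str:
    """Append the recommended instruction for 'math' or 'mcq' questions."""
    suffixes = {"math": MATH_SUFFIX, "mcq": MCQ_SUFFIX}
    if kind not in suffixes:
        raise ValueError(f"kind must be one of {sorted(suffixes)}")
    return f"{question}\n{suffixes[kind]}"


def extract_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a response, handling nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    begin = start + len("\\boxed{")
    depth = 1
    for j in range(begin, len(text)):
        if text[j] == "{":
            depth += 1
        elif text[j] == "}":
            depth -= 1
            if depth == 0:
                return text[begin:j]
    return None  # unbalanced braces, e.g. a truncated generation


_ANSWER_RE = re.compile(r'"answer"\s*:\s*"([A-Z])"')


def extract_choice(text: str) -> Optional[str]:
    """Return the letter from the last answer field in a response, if any."""
    matches = _ANSWER_RE.findall(text)
    return matches[-1] if matches else None
```

Apply the extractors to the model's response only, not the full transcript, since the math instruction itself contains an empty `\boxed{}`.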

Handle Long Inputs: For inputs exceeding 32,768 tokens, enable sliding window attention to improve the model's ability to process long sequences efficiently.

For supported frameworks, you can add the following to `config.json` to enable extended context handling:

```json
{
  ...,
  "use_sliding_window": true,
  "sliding_window": 32768
}
```
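If you keep a local copy of the model files, the same two keys can be set with a short script instead of editing `config.json` by hand. This is a minimal sketch using the standard `json` module; the function name is hypothetical, and whether a given framework honors these keys depends on that framework, as noted above.

```python
import json
import tempfile
from pathlib import Path
from typing import Any, Dict, Union


def enable_sliding_window(config_path: Union[str, Path], window: int = 32768) -> Dict[str, Any]:
    """Set use_sliding_window/sliding_window in a local config.json and return the updated dict."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["use_sliding_window"] = True
    config["sliding_window"] = window
    # Rewrites the file in place; keep a backup if the original formatting matters
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


if __name__ == "__main__":
    # Demo on a throwaway file rather than a real model directory
    with tempfile.TemporaryDirectory() as tmp:
        demo = Path(tmp) / "config.json"
        demo.write_text(json.dumps({"num_hidden_layers": 64}), encoding="utf-8")
        print(enable_sliding_window(demo))
```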

Evaluation & Performance

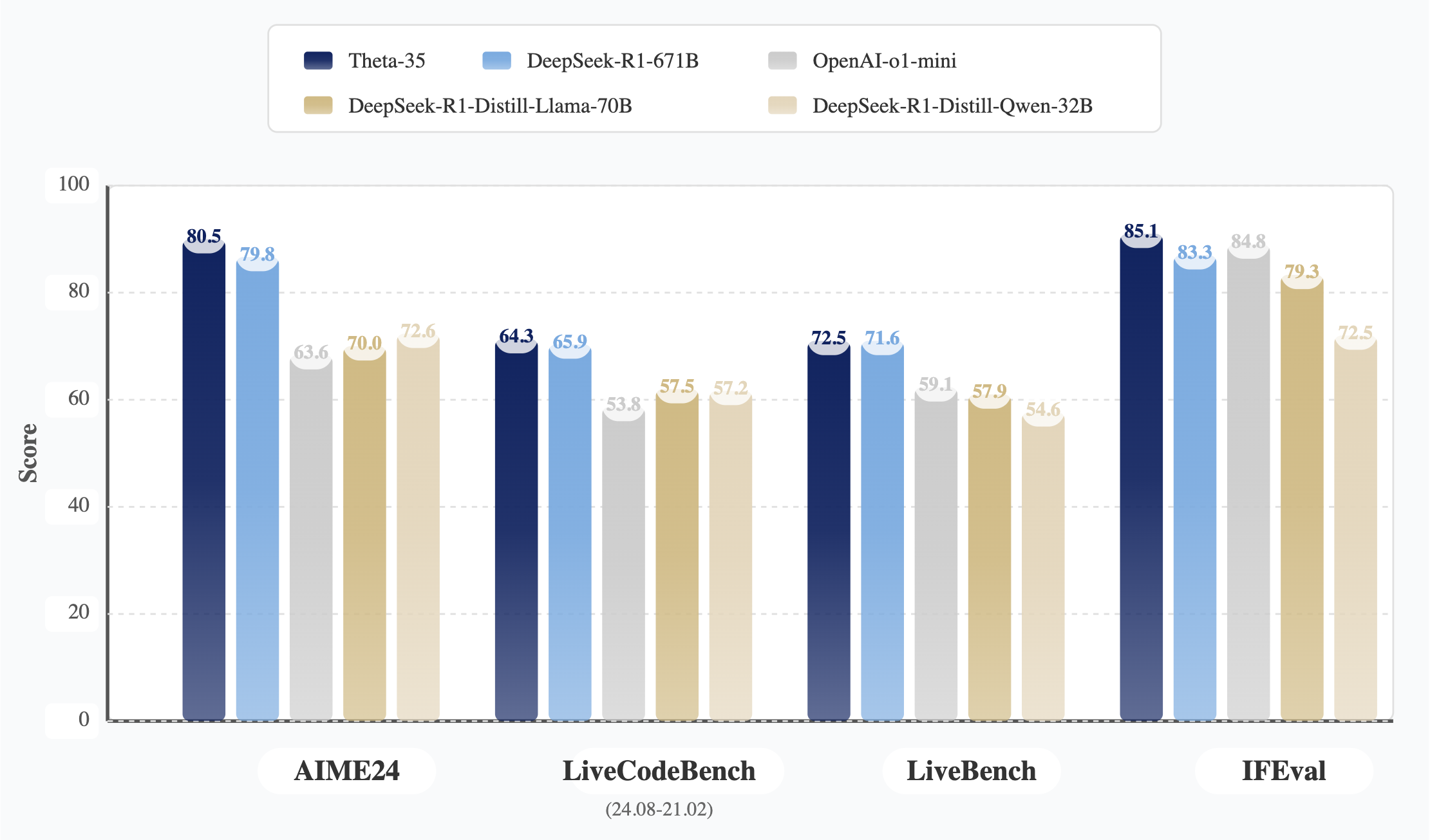

Theta-35 demonstrates exceptional performance across various reasoning tasks, including:

- Mathematical reasoning

- Logical deduction

- Multi-step problem solving

- Code understanding and generation

- Scientific reasoning

Detailed evaluation results are reported in our documentation.

Citation

If you find our work helpful, please cite it:

```bibtex
@misc{theta35,
    title = {Theta-35: Advanced Reasoning in Large Language Models},
    url = {https://www.svector.co.in/models/theta-35},
    author = {SVECTOR Team},
    month = {March},
    year = {2025}
}

@article{theta,
    title = {Theta Technical Report},
    author = {SVECTOR Research Team},
    year = {2025}
}
```
