Quantization made by Richard Erkhov.

Llama-3-8B - GGUF

- Model creator: https://huggingface.co/AI-Sweden-Models/

- Original model: https://huggingface.co/AI-Sweden-Models/Llama-3-8B/

| Name | Quant method | Size |
|---|---|---|
| Llama-3-8B.Q2_K.gguf | Q2_K | 2.96GB |
| Llama-3-8B.IQ3_XS.gguf | IQ3_XS | 3.28GB |
| Llama-3-8B.IQ3_S.gguf | IQ3_S | 3.43GB |
| Llama-3-8B.Q3_K_S.gguf | Q3_K_S | 3.41GB |
| Llama-3-8B.IQ3_M.gguf | IQ3_M | 3.52GB |
| Llama-3-8B.Q3_K.gguf | Q3_K | 3.74GB |
| Llama-3-8B.Q3_K_M.gguf | Q3_K_M | 3.74GB |
| Llama-3-8B.Q3_K_L.gguf | Q3_K_L | 4.03GB |
| Llama-3-8B.IQ4_XS.gguf | IQ4_XS | 4.18GB |
| Llama-3-8B.Q4_0.gguf | Q4_0 | 4.34GB |
| Llama-3-8B.IQ4_NL.gguf | IQ4_NL | 4.38GB |
| Llama-3-8B.Q4_K_S.gguf | Q4_K_S | 4.37GB |
| Llama-3-8B.Q4_K.gguf | Q4_K | 4.58GB |
| Llama-3-8B.Q4_K_M.gguf | Q4_K_M | 4.58GB |
| Llama-3-8B.Q4_1.gguf | Q4_1 | 4.78GB |
| Llama-3-8B.Q5_0.gguf | Q5_0 | 5.21GB |
| Llama-3-8B.Q5_K_S.gguf | Q5_K_S | 5.21GB |
| Llama-3-8B.Q5_K.gguf | Q5_K | 5.34GB |
| Llama-3-8B.Q5_K_M.gguf | Q5_K_M | 5.34GB |
| Llama-3-8B.Q5_1.gguf | Q5_1 | 5.65GB |
| Llama-3-8B.Q6_K.gguf | Q6_K | 6.14GB |
| Llama-3-8B.Q8_0.gguf | Q8_0 | 7.95GB |
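As a rough aid for choosing among the files above, the following sketch picks the largest quantization whose file fits within a given size budget. The sizes are copied from the table. The `pick_quant` helper is hypothetical, and using file size as the budget is a simplifying assumption: memory use at runtime will be higher than the file size because of the context cache and other overhead.

```python
# File sizes in GB, copied from the table above.
QUANT_SIZES_GB = {
    "Q2_K": 2.96, "IQ3_XS": 3.28, "IQ3_S": 3.43, "Q3_K_S": 3.41,
    "IQ3_M": 3.52, "Q3_K": 3.74, "Q3_K_M": 3.74, "Q3_K_L": 4.03,
    "IQ4_XS": 4.18, "Q4_0": 4.34, "IQ4_NL": 4.38, "Q4_K_S": 4.37,
    "Q4_K": 4.58, "Q4_K_M": 4.58, "Q4_1": 4.78, "Q5_0": 5.21,
    "Q5_K_S": 5.21, "Q5_K": 5.34, "Q5_K_M": 5.34, "Q5_1": 5.65,
    "Q6_K": 6.14, "Q8_0": 7.95,
}


def pick_quant(budget_gb):
    """Return (method, size_gb) for the largest file not exceeding budget_gb, or None."""
    fitting = [(size, name) for name, size in QUANT_SIZES_GB.items() if size <= budget_gb]
    if not fitting:
        return None
    # max() compares by size first; equal sizes are broken by method name.
    size, name = max(fitting)
    return name, size


print(pick_quant(5.0))  # ('Q4_1', 4.78)
```

A budget smaller than the smallest file (for example `pick_quant(2.0)`) returns `None`.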

Original model description:

- language: sv, da, no
- license: llama3
- tags: pytorch, llama, llama-3, ai-sweden
- base_model: meta-llama/Meta-Llama-3-8B
- pipeline_tag: text-generation
- inference parameters: temperature 0.6

AI-Sweden-Models/Llama-3-8B

Intended usage:

This is a base model; it can be fine-tuned for a particular use case.

-----> instruct version here <-----

Use with transformers

See the snippet below for usage with Transformers:

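    import transformers
    import torch

    model_id = "AI-Sweden-Models/Llama-3-8B"

    # Load the model in bfloat16; device_map="auto" places it on the available devices.
    pipeline = transformers.pipeline(
        task="text-generation",
        model=model_id,
        model_kwargs={"torch_dtype": torch.bfloat16},
        device_map="auto",
    )

    # Continue a Swedish prompt; max_length counts prompt plus generated tokens.
    pipeline(
        text_inputs="Sommar och sol är det bästa jag vet",
        max_length=128,
        repetition_penalty=1.03,
    )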

>>> "Sommar och sol är det bästa jag vet!

Och nu när jag har fått lite extra semester så ska jag njuta till max av allt som våren och sommaren har att erbjuda.

Jag har redan börjat med att sitta ute på min altan och ta en kopp kaffe och läsa i tidningen, det är så skönt att bara sitta där och njuta av livet.

Ikväll blir det grillat och det ser jag fram emot!"

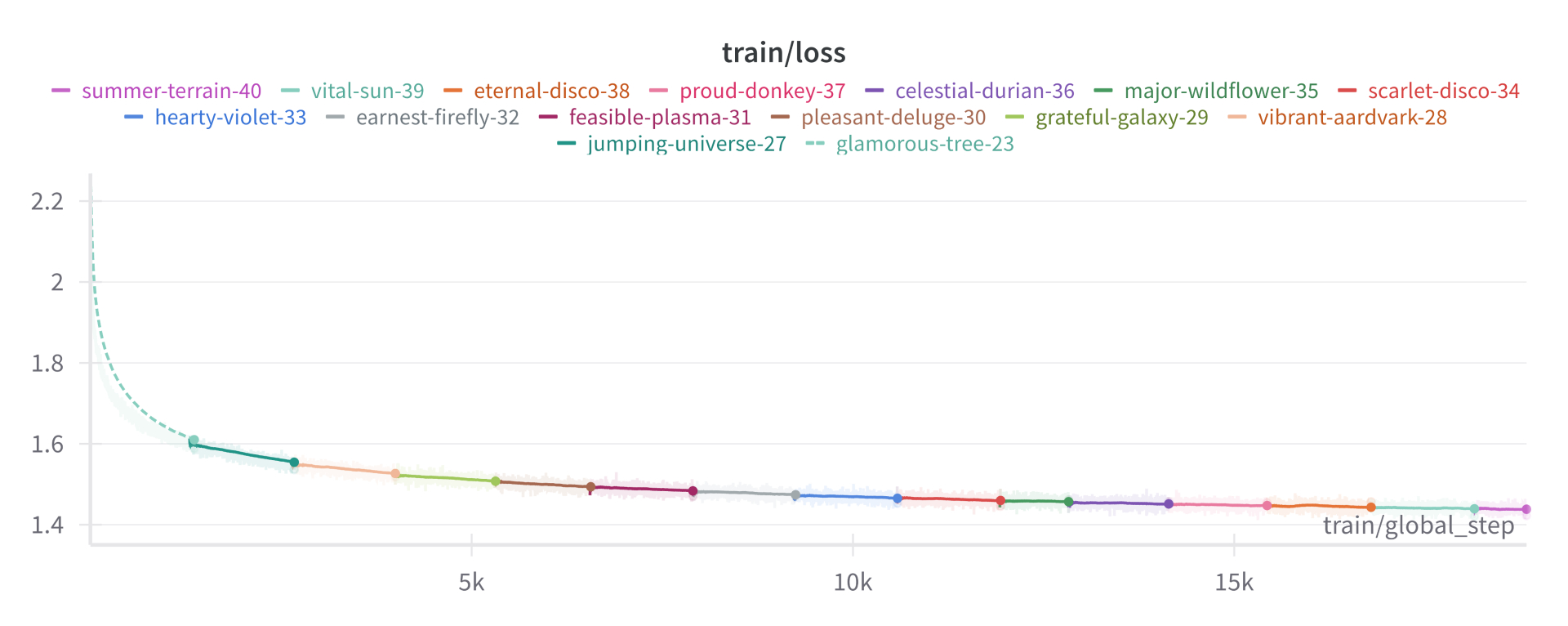

Training information

AI-Sweden-Models/Llama-3-8B is a continuation of the pretraining process from meta-llama/Meta-Llama-3-8B.

It was trained on a subset of The Nordic Pile containing Swedish, Norwegian, and Danish text. All model parameters were trained; it is a full fine-tune.

The training dataset consists of 227 105 079 296 tokens. The model was trained on the Rattler supercomputer at the Dell Technologies Edge Innovation Center in Austin, Texas. Training ran for 30 days on 23 nodes, each with 4 Nvidia A100 GPUs, for a total of 92 GPUs.

trainer.yaml:

    learning_rate: 2e-5
    warmup_steps: 100
    lr_scheduler: cosine
    optimizer: adamw_torch_fused
    max_grad_norm: 1.0
    gradient_accumulation_steps: 16
    micro_batch_size: 1
    num_epochs: 1
    sequence_len: 8192
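To make the schedule settings concrete, here is a minimal sketch of a cosine schedule with linear warmup using the values above. It follows the commonly used formulation (a linear ramp from 0 to the peak rate, then a half-cosine decay to 0). The source does not state the minimum learning rate or the exact scheduler implementation, so both are assumptions. `TOTAL_STEPS` is taken from the final checkpoint step (18833) listed under Checkpoints, and `lr_at` is a hypothetical helper name.

```python
import math

PEAK_LR = 2e-5        # learning_rate from trainer.yaml
WARMUP_STEPS = 100    # warmup_steps from trainer.yaml
TOTAL_STEPS = 18833   # assumption: step of the final checkpoint


def lr_at(step):
    """Learning rate at an optimizer step: linear warmup, then cosine decay to 0."""
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / (TOTAL_STEPS - WARMUP_STEPS)
    return PEAK_LR * 0.5 * (1.0 + math.cos(math.pi * progress))


for step in (0, 50, 100, 9000, 18833):
    print(step, lr_at(step))
```

Under these assumptions the rate peaks at step 100 and reaches zero at the last step.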

deepspeed_zero2.json:

    {
      "zero_optimization": {
        "stage": 2,
        "offload_optimizer": {
          "device": "cpu"
        },
        "contiguous_gradients": true,
        "overlap_comm": true
      },
      "bf16": {
        "enabled": "auto"
      },
      "fp16": {
        "enabled": "auto",
        "auto_cast": false,
        "loss_scale": 0,
        "initial_scale_power": 32,
        "loss_scale_window": 1000,
        "hysteresis": 2,
        "min_loss_scale": 1
      },
      "gradient_accumulation_steps": "auto",
      "gradient_clipping": "auto",
      "train_batch_size": "auto",
      "train_micro_batch_size_per_gpu": "auto",
      "wall_clock_breakdown": false
    }
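In setups that use the Hugging Face Trainer's DeepSpeed integration, `"auto"` entries are placeholders filled in from the trainer arguments. Assuming this config was consumed that way, the sketch below resolves the batch-related fields from the trainer.yaml values and the 92-GPU setup described above. It relies on DeepSpeed's requirement that `train_batch_size` equal the micro-batch size times the gradient accumulation steps times the number of GPUs. The `resolve_auto` helper is hypothetical and not part of either library, and treating all 92 GPUs as data-parallel ranks is an assumption.

```python
def resolve_auto(config, micro_batch_size, grad_accum_steps, world_size, max_grad_norm):
    """Return a copy of config with batch-related "auto" values filled in."""
    values = {
        "train_micro_batch_size_per_gpu": micro_batch_size,
        "gradient_accumulation_steps": grad_accum_steps,
        "train_batch_size": micro_batch_size * grad_accum_steps * world_size,
        "gradient_clipping": max_grad_norm,
    }
    resolved = dict(config)
    for key, value in values.items():
        # Only overwrite fields that were left as "auto".
        if resolved.get(key) == "auto":
            resolved[key] = value
    return resolved


ds_config = {
    "gradient_accumulation_steps": "auto",
    "gradient_clipping": "auto",
    "train_batch_size": "auto",
    "train_micro_batch_size_per_gpu": "auto",
}

resolved = resolve_auto(
    ds_config,
    micro_batch_size=1,
    grad_accum_steps=16,
    world_size=23 * 4,  # 23 nodes with 4 GPUs each
    max_grad_norm=1.0,
)
print(resolved["train_batch_size"])  # 1472
```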

Checkpoints

- 15/6/2024 (18833) => 1 epoch

- 11/6/2024 (16000)

- 07/6/2024 (14375)

- 03/6/2024 (11525)

- 29/5/2024 (8200)

- 26/5/2024 (6550)

- 24/5/2024 (5325)

- 22/5/2024 (3900)

- 20/5/2024 (2700)

- 13/5/2024 (1500)
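The step count of the final checkpoint is consistent with the token count and trainer settings reported above. As a back-of-the-envelope check, the sketch below computes the tokens consumed per optimizer step and the number of full steps in one pass over the dataset. It assumes every sequence is packed to the full 8192 tokens and that all 92 GPUs are data-parallel replicas; neither is stated explicitly in the source.

```python
DATASET_TOKENS = 227_105_079_296  # training dataset size in tokens
NUM_GPUS = 23 * 4                 # 23 nodes with 4 GPUs each
MICRO_BATCH_SIZE = 1              # micro_batch_size from trainer.yaml
GRAD_ACCUM_STEPS = 16             # gradient_accumulation_steps from trainer.yaml
SEQUENCE_LEN = 8192               # sequence_len from trainer.yaml

# Tokens processed across all GPUs for one optimizer step.
tokens_per_step = NUM_GPUS * MICRO_BATCH_SIZE * GRAD_ACCUM_STEPS * SEQUENCE_LEN

# Number of full optimizer steps in one epoch.
steps_per_epoch = DATASET_TOKENS // tokens_per_step

print(tokens_per_step)  # 12058624
print(steps_per_epoch)  # 18833
```

The result matches the step number of the 15/6/2024 checkpoint, which is marked as 1 epoch.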
