MoG: Motion-Aware Generative Frame Interpolation

MoG is a generative video frame interpolation (VFI) model, designed to synthesize intermediate frames between two input frames.

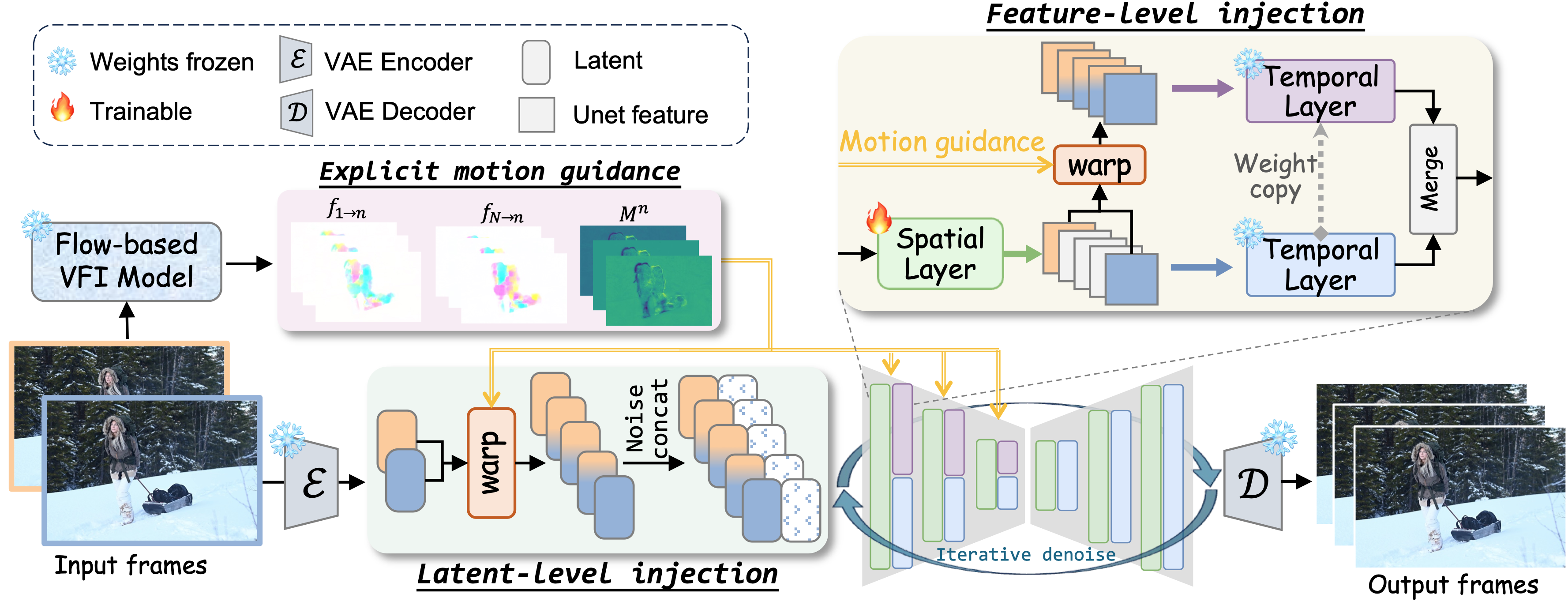

MoG is the first VFI framework to bridge the gap between flow-based stability and generative flexibility. We introduce a dual-level guidance injection design to constrain generated motion using motion trajectories derived from optical flow. To enhance the generative model's ability to dynamically correct flow errors, we implement encoder-only guidance injection and selective parameter fine-tuning. As a result, MoG achieves significant improvements over existing open-source generative VFI methods, delivering superior performance in both real-world and animated scenarios.
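To make the idea of flow-derived motion guidance concrete, here is a minimal sketch of generic flow-based warping. It is not the MoG implementation. It assumes grayscale frames stored as nested lists and intermediate flow fields (from the target time t back to each input frame) that are already available. The names `backward_warp`, `coarse_intermediate`, `flow_t0`, and `flow_t1` are hypothetical and chosen only for illustration.

```python
def backward_warp(frame, flow):
    """Warp a grayscale frame (list of rows) with a backward flow field.

    flow[y][x] = (dx, dy) means output pixel (x, y) is sampled from input
    location (x + dx, y + dy). Sampling is nearest-neighbour and source
    coordinates are clamped to the image border.
    """
    h, w = len(frame), len(frame[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            dx, dy = flow[y][x]
            sx = min(max(int(round(x + dx)), 0), w - 1)
            sy = min(max(int(round(y + dy)), 0), h - 1)
            row.append(frame[sy][sx])
        out.append(row)
    return out


def coarse_intermediate(frame0, frame1, flow_t0, flow_t1, t=0.5):
    """Blend both warped inputs into a coarse estimate of the frame at time t.

    flow_t0 and flow_t1 are assumed inputs: flows from time t to frame 0
    and from time t to frame 1, respectively.
    """
    warped0 = backward_warp(frame0, flow_t0)
    warped1 = backward_warp(frame1, flow_t1)
    return [
        [(1.0 - t) * a + t * b for a, b in zip(row0, row1)]
        for row0, row1 in zip(warped0, warped1)
    ]
```

A coarse estimate of this kind inherits any error in the flow field, which is the limitation that motivates letting a generative model correct the guidance rather than trusting it outright.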

Source code is available at https://github.com/MCG-NJU/MoG-VFI.

Network Architecture

Model Description

- Developed by: Nanjing University, Tencent PCG

- Model type: Generative video frame interpolation model that takes two still video frames as input.

- Arxiv paper: https://arxiv.org/pdf/2501.03699

- Project page: https://mcg-nju.github.io/MoG_Web/

- Repository: https://github.com/MCG-NJU/MoG-VFI

- License: Apache 2.0

Usage

We provide two model checkpoints: real.ckpt for real-world scenes and ani.ckpt for animation scenes. For detailed instructions on loading the checkpoints and performing inference, please refer to our official repository.
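As a small illustration of choosing between the two checkpoints, the sketch below maps a scene type to the corresponding file name. The helper `select_checkpoint`, the scene-type keys, and the default `checkpoints` directory are hypothetical; the actual loading and inference code lives in the official repository.

```python
from pathlib import Path

# Checkpoint file names as listed in this model card.
CHECKPOINTS = {
    "real": "real.ckpt",       # real-world scenes
    "animation": "ani.ckpt",   # animation scenes
}


def select_checkpoint(scene_type, ckpt_dir="checkpoints"):
    """Return the checkpoint path for a scene type (hypothetical helper).

    ckpt_dir is an assumed download location, not one prescribed by MoG.
    """
    if scene_type not in CHECKPOINTS:
        valid = ", ".join(sorted(CHECKPOINTS))
        raise ValueError(f"unknown scene type {scene_type!r}; expected one of: {valid}")
    return Path(ckpt_dir) / CHECKPOINTS[scene_type]
```

For example, `select_checkpoint("animation")` resolves to `ani.ckpt` inside the assumed `checkpoints` directory.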

Citation

If you find our code useful or our work relevant, please consider citing:

@article{zhang2025motion,
  title={Motion-Aware Generative Frame Interpolation},
  author={Zhang, Guozhen and Zhu, Yuhan and Cui, Yutao and Zhao, Xiaotong and Ma, Kai and Wang, Limin},
  journal={arXiv preprint arXiv:2501.03699},
  year={2025}
}
