CAMOUFLaGE: Controllable AnoniMizatiOn throUgh diFfusion-based image coLlection GEneration


Official implementations of "Latent Diffusion Models for Attribute-Preserving Image Anonymization" and "Harnessing Foundation Models for Image Anonymization".

Latent Diffusion Models for Attribute-Preserving Image Anonymization

This paper presents, to the best of our knowledge, the first approach to image anonymization based on Latent Diffusion Models (LDMs). Every element of the scene is preserved so that it conveys the same meaning, yet it is altered in a way that makes re-identification difficult. We propose two LDMs for this purpose:

- CAMOUFLaGE-Base

- CAMOUFLaGE-Light

The former solution achieves superior performance on most metrics and benchmarks, while the latter cuts the inference time in half at the cost of fine-tuning a lightweight module.

Compared to the state of the art, our approach anonymizes complex scenes by introducing variations in faces, bodies, and background elements.

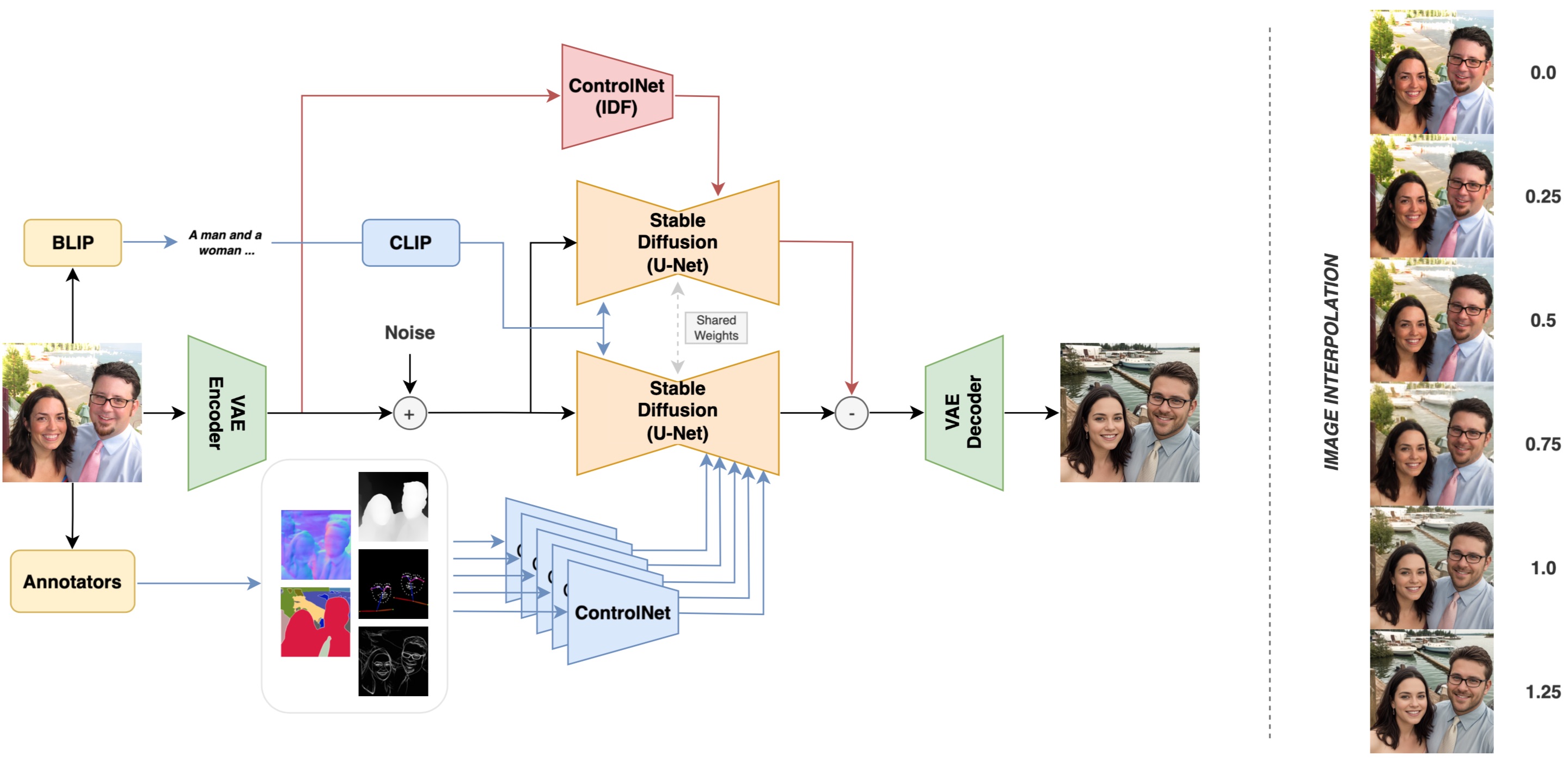

CAMOUFLaGE-Base

CAMOUFLaGE-Base combines several pre-trained ControlNets and introduces anonymization guidance based on the original image.

More details on its usage can be found here.
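The NumPy sketch below illustrates two generic ingredients of such a pipeline, under stated assumptions; it is not the authors' code. First, `combine_controlnet_residuals` (a hypothetical helper) merges several ControlNets by summing their per-block residuals, each weighted by a conditioning scale, similar in spirit to how multi-ControlNet pipelines in diffusers combine their outputs. Second, `noise_original_latent` (also hypothetical) shows one common way an original image can steer generation: denoising starts from a noised copy of its latent via the DDPM forward process (SDEdit/img2img style). Whether CAMOUFLaGE-Base's anonymization guidance works this way is an assumption.

```python
import numpy as np


def combine_controlnet_residuals(residuals_per_net, scales):
    """Sum per-ControlNet residuals, each weighted by its conditioning scale.

    residuals_per_net: one list per ControlNet, each holding one array per
    UNet block that receives a residual (shapes must match across nets).
    Hypothetical helper for illustration only.
    """
    if len(residuals_per_net) != len(scales):
        raise ValueError("need exactly one conditioning scale per ControlNet")
    combined = [np.zeros_like(r, dtype=float) for r in residuals_per_net[0]]
    for residuals, scale in zip(residuals_per_net, scales):
        for i, r in enumerate(residuals):
            combined[i] = combined[i] + scale * r
    return combined


def noise_original_latent(x0, alpha_bar_t, rng):
    """DDPM forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps.

    A larger alpha_bar_t keeps more of the original image's latent, so the
    generated image stays closer to the input. Hypothetical helper.
    """
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bar_t) * x0 + np.sqrt(1.0 - alpha_bar_t) * eps


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pose = [np.ones((2, 2)), np.ones(3)]          # residuals from net 1
    depth = [2 * np.ones((2, 2)), np.zeros(3)]    # residuals from net 2
    merged = combine_controlnet_residuals([pose, depth], scales=[0.5, 1.0])
    print([m.tolist() for m in merged])
    print(noise_original_latent(np.arange(4.0), alpha_bar_t=0.7, rng=rng))
```

Weighting each ControlNet separately lets one condition (e.g., pose) dominate while another only loosely constrains the result.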

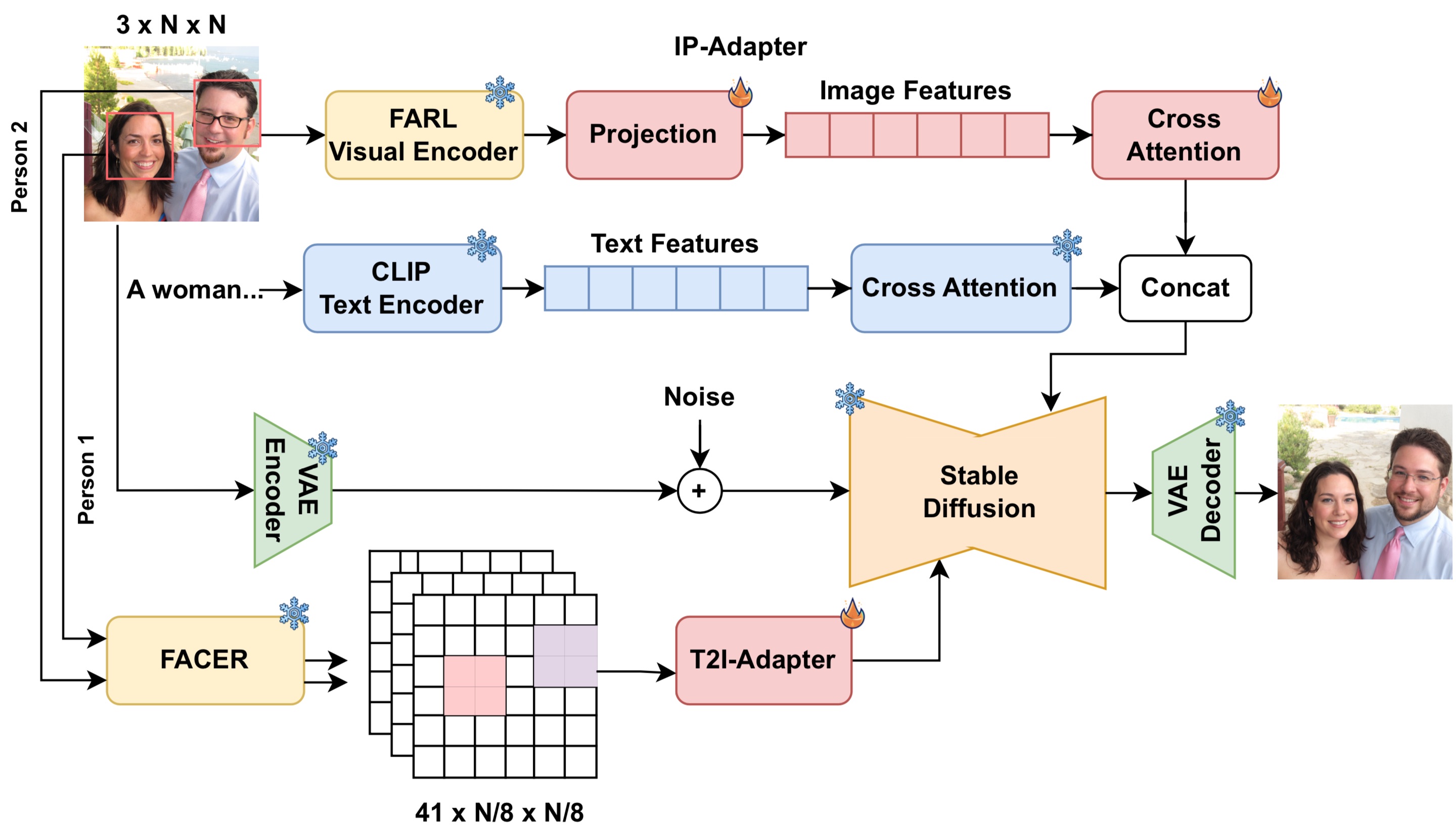

CAMOUFLaGE-Light

CAMOUFLaGE-Light trains a lightweight IP-Adapter to encode the key elements of the scene and the facial attributes of each person.

More details on its usage can be found here.
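For context, IP-Adapter conditions a diffusion model through decoupled cross-attention: the UNet query attends separately to text tokens and to image tokens, and the image branch's output is added with a tunable weight. The minimal NumPy sketch below shows only that combination step. The key/value arrays are assumed to be already projected (in IP-Adapter, the image-side key/value projections are the trainable part), and how CAMOUFLaGE-Light builds its scene and face embeddings is not modeled here. All function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = q.shape[-1]
    return softmax(q @ k.T / np.sqrt(d)) @ v


def decoupled_cross_attention(q, text_kv, image_kv, image_scale=1.0):
    """IP-Adapter-style output: Attn(Q, K_txt, V_txt) + s * Attn(Q, K_img, V_img)."""
    k_txt, v_txt = text_kv
    k_img, v_img = image_kv
    return attention(q, k_txt, v_txt) + image_scale * attention(q, k_img, v_img)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q = rng.standard_normal((4, 8))                 # 4 latent-pixel queries
    text_kv = (rng.standard_normal((6, 8)), rng.standard_normal((6, 16)))
    image_kv = (rng.standard_normal((3, 8)), rng.standard_normal((3, 16)))
    out = decoupled_cross_attention(q, text_kv, image_kv, image_scale=0.6)
    print(out.shape)
```

Because the two attention branches are summed rather than concatenated, setting `image_scale` to 0 recovers the text-only model, which keeps the base LDM frozen.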

Harnessing Foundation Models for Image Anonymization

We explore how foundation models can be leveraged to solve tasks, specifically anonymization, without any training or fine-tuning. By bypassing traditional pipelines, we demonstrate that anonymization can be achieved efficiently and effectively directly from the foundation model’s inherent knowledge.

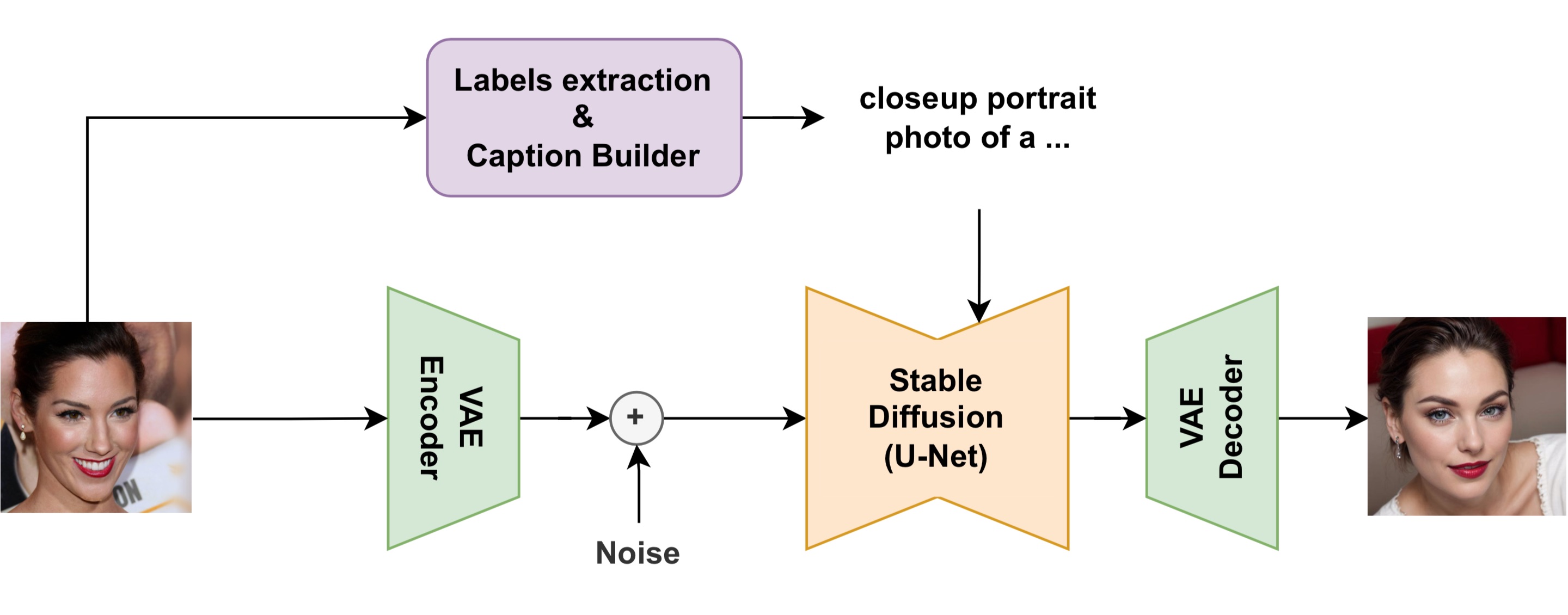

CAMOUFLaGE-Face

We examine how foundation models can generate anonymized images directly from textual descriptions. Two models are used for information extraction: FACER, which identifies the 40 CelebA-HQ attributes, and DeepFace, which estimates ethnicity and age. From this information, we craft captions that guide the generation process. Classifier-free guidance pushes the image content toward the positive prompt P and away from the negative prompt ¬P.

More details on its usage can be found here.
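The sketch below illustrates the two steps described for CAMOUFLaGE-Face, under stated assumptions. `build_caption` is a hypothetical template that turns binary CelebA-style attributes plus age and ethnicity estimates into a caption; the authors' actual prompt wording is not reproduced here. `guided_noise` applies the standard classifier-free guidance update with a negative prompt, eps = eps(x_t, ¬P) + w · (eps(x_t, P) − eps(x_t, ¬P)), which is also how the `negative_prompt` argument of diffusers' Stable Diffusion pipelines is typically realized. The noise predictions are plain arrays here, standing in for the UNet outputs.

```python
import numpy as np


def build_caption(attributes, age, ethnicity):
    """Hypothetical caption template from CelebA-style binary attributes.

    attributes: dict mapping attribute names (e.g. "Wavy_Hair") to bools,
    as a face-attribute model might output; age/ethnicity as a face-analysis
    model might estimate them. Not the authors' template.
    """
    present = [name.replace("_", " ").lower() for name, on in attributes.items() if on]
    caption = f"a photo of a {age}-year-old {ethnicity} person"
    if present:
        caption += " with " + ", ".join(present)
    return caption


def guided_noise(eps_pos, eps_neg, guidance_scale):
    """Classifier-free guidance with a negative prompt.

    Moves the prediction from the negative-prompt estimate toward (and, for
    guidance_scale > 1, beyond) the positive-prompt estimate.
    """
    return eps_neg + guidance_scale * (eps_pos - eps_neg)


if __name__ == "__main__":
    attrs = {"Eyeglasses": True, "Wavy_Hair": True, "Smiling": False}
    print(build_caption(attrs, age=30, ethnicity="asian"))
    # a photo of a 30-year-old asian person with eyeglasses, wavy hair
    rng = np.random.default_rng(0)
    eps_p, eps_n = rng.standard_normal((2, 4, 8, 8))
    print(guided_noise(eps_p, eps_n, guidance_scale=7.5).shape)
```

With `guidance_scale = 1` the update returns the positive-prompt prediction unchanged; larger values extrapolate away from ¬P, trading diversity for prompt adherence.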

Citation

If you find CAMOUFLaGE-Base and/or CAMOUFLaGE-Light useful, please cite:

@misc{camouflage,
  title={Latent Diffusion Models for Attribute-Preserving Image Anonymization},
  author={Luca Piano and Pietro Basci and Fabrizio Lamberti and Lia Morra},
  year={2024},
  eprint={2403.14790},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

If you find CAMOUFLaGE-Face useful, please cite:

@inproceedings{pianoGEM24,
  title={Harnessing Foundation Models for Image Anonymization},
  author={Piano, Luca and Basci, Pietro and Lamberti, Fabrizio and Morra, Lia},
  booktitle={2024 IEEE CTSoc Gaming, Entertainment and Media},
  year={2024},
  organization={IEEE}
}