fixed READMEs and added IDR Prediction benchmark

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- fuson_plm/benchmarking/caid/README.md +44 -42

- fuson_plm/benchmarking/idr_prediction/README.md +211 -0

- fuson_plm/benchmarking/idr_prediction/__init__.py +0 -0

- fuson_plm/benchmarking/idr_prediction/clean.py +289 -0

- fuson_plm/benchmarking/idr_prediction/cluster.py +94 -0

- fuson_plm/benchmarking/idr_prediction/clustering/input.fasta +3 -0

- fuson_plm/benchmarking/idr_prediction/clustering/mmseqs_full_results.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/config.py +41 -0

- fuson_plm/benchmarking/idr_prediction/model.py +154 -0

- fuson_plm/benchmarking/idr_prediction/plot.py +204 -0

- fuson_plm/benchmarking/idr_prediction/processed_data/all_albatross_seqs_and_properties.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/processed_data/train_test_value_histograms.png +0 -0

- fuson_plm/benchmarking/idr_prediction/processed_data/value_histograms.png +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/asph_bio_synth_training_data_cleaned_05_09_2023.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/asph_nat_meth_test.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaled_re_bio_synth_training_data_cleaned_05_09_2023.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaled_re_nat_meth_test.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaled_rg_bio_synth_training_data_cleaned_05_09_2023.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaled_rg_nat_meth_test.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaling_exp_bio_synth_training_data_cleaned_05_09_2023.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/raw_data/scaling_exp_nat_meth_test.tsv +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/asph_best_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/asph_hyperparam_screen_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/asph/esm2_t33_650M_UR50D_asph_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/asph/esm2_t33_650M_UR50D_asph_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/asph/fuson_plm_asph_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/asph/fuson_plm_asph_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_re/esm2_t33_650M_UR50D_scaled_re_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_re/esm2_t33_650M_UR50D_scaled_re_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_re/fuson_plm_scaled_re_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_re/fuson_plm_scaled_re_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_rg/esm2_t33_650M_UR50D_scaled_rg_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_rg/esm2_t33_650M_UR50D_scaled_rg_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_rg/fuson_plm_scaled_rg_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaled_rg/fuson_plm_scaled_rg_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaling_exp/esm2_t33_650M_UR50D_scaling_exp_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaling_exp/esm2_t33_650M_UR50D_scaling_exp_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaling_exp/fuson_plm_scaling_exp_R2.png +0 -0

- fuson_plm/benchmarking/idr_prediction/results/final/r2_plots/scaling_exp/fuson_plm_scaling_exp_R2_source_data.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaled_re_best_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaled_re_hyperparam_screen_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaled_rg_best_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaled_rg_hyperparam_screen_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaling_exp_best_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/results/final/scaling_exp_hyperparam_screen_test_r2.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/split.py +135 -0

- fuson_plm/benchmarking/idr_prediction/splits/asph/test_df.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/splits/asph/train_df.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/splits/asph/val_df.csv +3 -0

- fuson_plm/benchmarking/idr_prediction/splits/scaled_re/test_df.csv +3 -0

fuson_plm/benchmarking/caid/README.md

CHANGED

|

@@ -120,47 +120,46 @@ benchmarking/

|

|

| 120 |

|

| 121 |

Here we describe what each script does and which files each script creates.

|

| 122 |

1. 🐍 **`scrape_fusionpdb.py`**

|

| 123 |

-

|

| 124 |

-

|

| 125 |

-

|

| 126 |

-

|

| 127 |

-

|

| 128 |

-

|

| 129 |

-

|

| 130 |

-

|

| 131 |

-

|

| 132 |

-

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

|

| 136 |

-

|

| 137 |

-

|

| 138 |

-

|

| 139 |

-

|

| 140 |

-

|

| 141 |

-

|

| 142 |

-

|

| 143 |

-

|

| 144 |

-

|

| 145 |

-

|

| 146 |

-

|

| 147 |

-

<br>

|

| 148 |

|

| 149 |

2. 🐍 **`process_fusion_structures.py`**

|

| 150 |

-

|

| 151 |

-

|

| 152 |

-

|

| 153 |

-

|

| 154 |

-

|

| 155 |

-

|

| 156 |

-

|

| 157 |

-

|

| 158 |

-

|

| 159 |

-

|

| 160 |

-

|

| 161 |

-

|

| 162 |

-

|

| 163 |

-

|

| 164 |

|

| 165 |

|

| 166 |

### Training

|

|

@@ -184,9 +183,12 @@ PERMISSION_TO_OVERWRITE_EMBEDDINGS = False # if False, scrip

|

|

| 184 |

PERMISSION_TO_OVERWRITE_MODELS = False # if False, script will halt if it believes these embeddings have already been made.

|

| 185 |

```

|

| 186 |

|

| 187 |

-

|

|

|

|

|

|

|

|

|

|

| 188 |

|

| 189 |

-

|

| 190 |

|

| 191 |

```

|

| 192 |

benchmarking/

|

|

@@ -209,7 +211,7 @@ benchmarking/

|

|

| 209 |

- **`caid_train_losses.csv`**: train losses over the 2 training epochs for top-performing model

|

| 210 |

- **`params.txt`**: hyperparameters of top performing model

|

| 211 |

|

| 212 |

-

|

| 213 |

|

| 214 |

```

|

| 215 |

benchmarking/

|

|

|

|

| 120 |

|

| 121 |

Here we describe what each script does and which files each script creates.

|

| 122 |

1. 🐍 **`scrape_fusionpdb.py`**

|

| 123 |

+

i. Scrapes metadata for FusionPDB Level 2 and Level 3

|

| 124 |

+

a. Pulls the online tables for [Level 2](https://compbio.uth.edu/FusionPDB/gene_search_result_0.cgi?type=chooseLevel&chooseLevel=level2) and [Level 3](https://compbio.uth.edu/FusionPDB/gene_search_result_0.cgi?type=chooseLevel&chooseLevel=level3), saving results to `raw_data/FusionPDB_level2_curated_09_05_2024.csv` and `raw_data/FusionPDB_level3_curated_09_05_2024.csv` respectively.

|

| 125 |

+

ii. Retrieves structure links

|

| 126 |

+

a. Using the tables collected in step (i), visits the page for each fusion oncoprotein (FO) in FusionPDB Level 2 and 3, and downloads all AlphaFold2 structure links for each FO.

|

| 127 |

+

b. Saves results directly to `raw_data/FusionPDB_level2_fusion_structure_links.csv` and `raw_data/FusionPDB_level3_fusion_structure_links.csv`, respectively

|

| 128 |

+

iii. Retrieves FO head gene and tail gene info

|

| 129 |

+

a. Using the tables collected in step (i), visits the page for each fusion oncoprotein (FO) in FusionPDB Level 2 and 3 to download head/tail info. Collects HGID and TGID (GeneIDs for head and tail) and UniProt accessions for each.

|

| 130 |

+

b. Saves results directly to `raw_data/level2_head_tail_info.txt` and `raw_data/level3_head_tail_info.txt`, respectively.

|

| 131 |

+

iv. Combines Level 2 and 3 head/tail data

|

| 132 |

+

a. Merges `raw_data/level2_head_tail_info.txt` and `raw_data/level3_head_tail_info.txt` into a dataframe.

|

| 133 |

+

b. Saves result at `processed_data/fusionpdb/fusion_heads_and_tails.csv` (columns="FusionGID","HGID","TGID","HGUniProtAcc","TGUniProtAcc")

|

| 134 |

+

v. Combines Level 2 and 3 structure link data

|

| 135 |

+

a. Joins structure link data with metadata for each of levels 2 and 3, then combines the result.

|

| 136 |

+

b. Saves result at `processed_data/fusionpdb/intermediates/giant_level2-3_fusion_protein_structure_links.csv`

|

| 137 |

+

vi. Combines structure link data and metadata (result of step (v)) with head and tail data (result of step (iv)), and resolves any missing head/tail UniProt IDs.

|

| 138 |

+

a. Merges the data

|

| 139 |

+

b. Checks how many rows have either missing or wrong UniProt accessions for the head or tail gene, and compiles the gene symbols for online quering in the UniProt ID Mapping tool (`processed_data/fusionpdb/intermediates/unmapped_parts.txt`)

|

| 140 |

+

c. Reads the UniProt ID Mapping result. Combines this data with FusionPDB-scraped data by matching FusionPDB's HGID (GeneID for head) and TGID (GeneID for tail) with the GeneID returned by UniProt.

|

| 141 |

+

d. For any FO where FusionPDB lacked a UniProt ID for the head/tail, this ID is filled in from the UniProt ID Mapping result.

|

| 142 |

+

e. Saves result to `processed_data/fusionpdb/intermediates/giant_level2-3_fusion_protein_head_tail_info.csv`. Columns: "FusionGID","FusionGene","Hgene","Tgene","URL","HGID","TGID","HGUniProtAcc","TGUniProtAcc","HGUniProtAcc_Source","TGUniProtAcc_Source", where the "_Source" columns indicate whether the UniProt ID came from FusionPDB, or from the ID Map.

|

| 143 |

+

vii. Downloads AlphaFold2 structures of FOs from FusionPDB.

|

| 144 |

+

a. Using structure links from `processed_data/fusionpdb/intermediates/giant_level2-3_fusion_protein_structure_links.csv` (step (v)), directly downloads `.pdb` and `.cif` files.

|

| 145 |

+

b. Saves results in 📁`raw_data/fusionpdb/structures`

|

| 146 |

+

|

|

|

|

| 147 |

|

| 148 |

2. 🐍 **`process_fusion_structures.py`**

|

| 149 |

+

i. Determines pLDDT(s) for each FO structure.

|

| 150 |

+

a. For each structure in 📁`raw_data/fusionpdb_structures/`, determines amino acid sequence, per-residue pLDDT, and average pLDDT from the AlphaFold2 structure.

|

| 151 |

+

b. Saves results in `processed_data/fusionpdb/intermediates/giant_level2-3_fusion_protein_structures_processed.csv`.

|

| 152 |

+

ii. Downloads AlphaFold2 structures for all head and tail proteins

|

| 153 |

+

a. Reads `processed_data/fusionpdb/intermediates/giant_level2-3_fusion_protein_head_tail_info.csv` and collects all unique UniProt IDs for all head/tail proteins.

|

| 154 |

+

b. For each UniProt ID, queries the AlphaFoldDB, downloads the AlphaFold2 structure (if available), and saves it to 📁`raw_data/fusionpdb/head_tail_af2db_structures/`. Saves files converted from PDB to CIF format in `mmcif_converted_files`. Then, extracts the sequence, per-residue pLDDT, and average pLDDT from the file.

|

| 155 |

+

c. Saves any UniProt IDs that did not have structures in the AlphaFoldDB to: `processed_data/fusionpdb/intermediates/uniprotids_not_in_afdb.txt`. Most of these were very long, but the shorter ones were folded and their average pLDDTs were manually inputted. These were put back into the AlphaFold ID map to look for alternative UniProt IDs, and their results are in `not_in_afdb_idmap.txt`.

|

| 156 |

+

d. Saves results to `processed_data/fusionpdb/heads_tails_structural_data.csv`

|

| 157 |

+

iii. Cleans the dataase of level 2&3 structural info

|

| 158 |

+

a. Drops rows where no structure was successfully downloaded

|

| 159 |

+

b. Drops rows where the FO sequence from FusionPDB does not match the FO sequence from its own AlphaFold2 structure file

|

| 160 |

+

c. ⭐️Saves **two final, cleaned databases**⭐️:

|

| 161 |

+

a. ⭐️ **`FusionPDB_level2-3_cleaned_FusionGID_info.csv`**: includes ful IDs and structural information for the Hgene and Tgene of each FO. Columns="FusionGID","FusionGene","Hgene","Tgene","URL","HGID","TGID","HGUniProtAcc","TGUniProtAcc","HGUniProtAcc_Source","TGUniProtAcc_Source","HG_pLDDT","HG_AA_pLDDTs","HG_Seq","TG_pLDDT","TG_AA_pLDDTs","TG_Seq".

|

| 162 |

+

b. ⭐️ **`FusionPDB_level2-3_cleaned_structure_info.csv`**: includes full structural information for each FO. Columns= "FusionGID","FusionGene","Fusion_Seq","Fusion_Length","Hgene","Hchr","Hbp","Hstrand","Tgene","Tchr","Tbp","Tstrand","Level","Fusion_Structure_Link","Fusion_Structure_Type","Fusion_pLDDT","Fusion_AA_pLDDTs","Fusion_Seq_Source"

|

| 163 |

|

| 164 |

|

| 165 |

### Training

|

|

|

|

| 183 |

PERMISSION_TO_OVERWRITE_MODELS = False # if False, script will halt if it believes these embeddings have already been made.

|

| 184 |

```

|

| 185 |

|

| 186 |

+

`train.py` trains the models using embeddings indicated in `config.py`. It also performs a hyperparameter screen.

|

| 187 |

+

- All **results** are stored in `caid/results/<timestamp>`, where `timestamp` is a unique string encoding the date and time when you started training.

|

| 188 |

+

- All **raw outputs from models** are stored in `caid/trained_models/<embedding_path>`, where `embedding_path` represents the embeddings used to build the disorder predictor.

|

| 189 |

+

- All **embeddings** made for training will be stored in a new folder called `caid/embeddings/` with subfolders for each model. This allows you to use the same model multiple times without regenerating embeddings.

|

| 190 |

|

| 191 |

+

Below is the FusOn-pLM-Diso raw outputs folder, `trained_models/fuson_plm/best/'. (ESM-2-650M-Diso has a folder in the same format, and future trained models will as well):

|

| 192 |

|

| 193 |

```

|

| 194 |

benchmarking/

|

|

|

|

| 211 |

- **`caid_train_losses.csv`**: train losses over the 2 training epochs for top-performing model

|

| 212 |

- **`params.txt`**: hyperparameters of top performing model

|

| 213 |

|

| 214 |

+

Results from the FusOn-pLM manuscript are found in `results/final`. A few extra data files and plots are added by `analyze_fusion_preds.py`

|

| 215 |

|

| 216 |

```

|

| 217 |

benchmarking/

|

fuson_plm/benchmarking/idr_prediction/README.md

ADDED

|

@@ -0,0 +1,211 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## IDR Property Prediction Benchmark

|

| 2 |

+

|

| 3 |

+

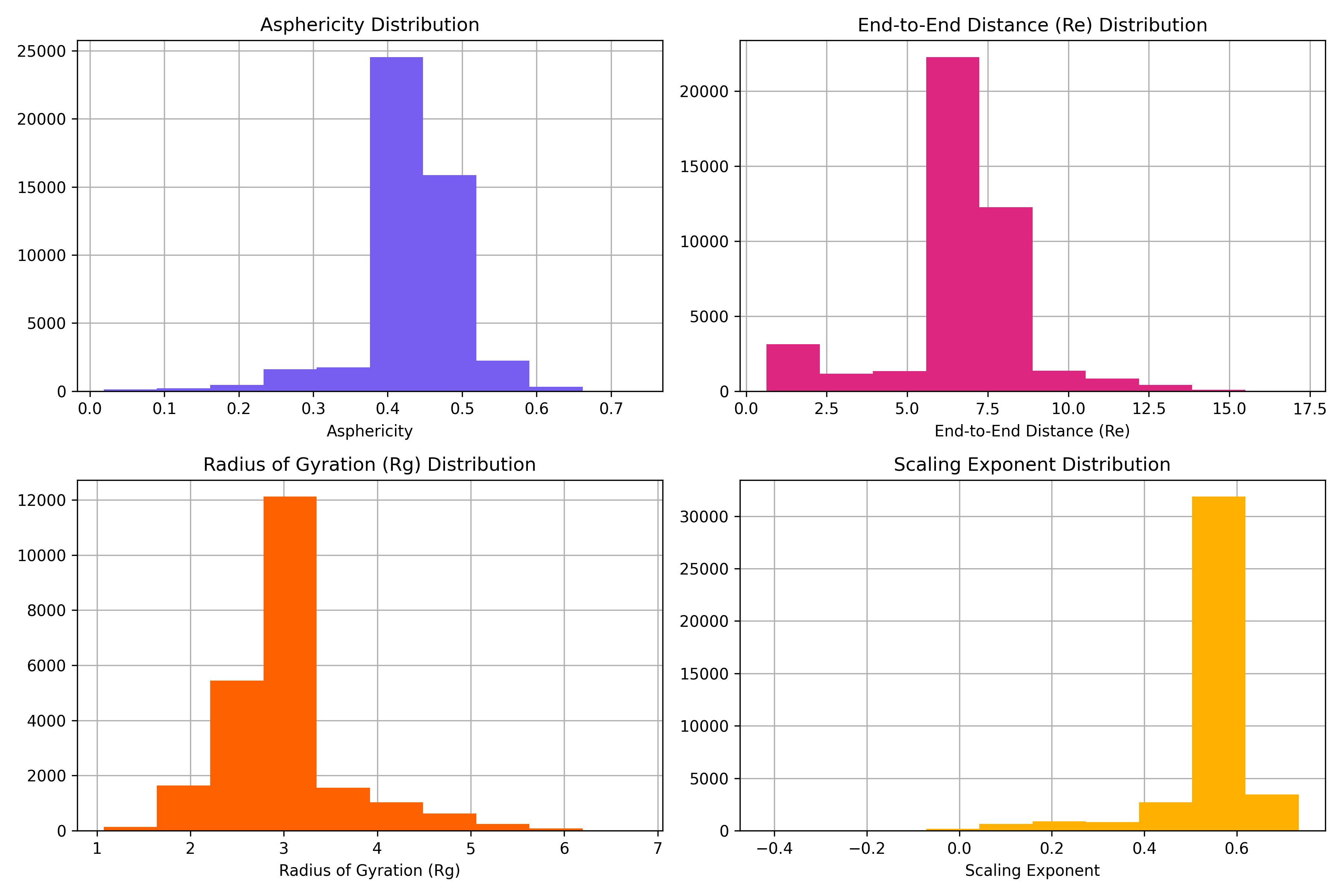

This folder contains all the data and code needed to perform the **IDR property prediction benchmark**, where FusOn-pLM-IDR (a regressor built on FusOn-pLM embeddings) is used to predict aggregate properties of intrinsically disordered regions (IDRs), specifically asphericity, end-to-end radius (R<sub>e</sub>), radius of gyration (R<sub>g</sub>), and polymer scaling exponent (Figure 4A-B).

|

| 4 |

+

|

| 5 |

+

### TL;DR

|

| 6 |

+

The order in which to run the scripts, after downloading data:

|

| 7 |

+

|

| 8 |

+

```

|

| 9 |

+

python clean.py # clean the data

|

| 10 |

+

python cluster.py # MMSeqs2 clustering

|

| 11 |

+

python split.py # make cluster-based train/val/test splits

|

| 12 |

+

python train.py # train the model

|

| 13 |

+

python plot.py # if you want to remake r2 plots

|

| 14 |

+

```

|

| 15 |

+

|

| 16 |

+

### Downloading raw IDR data

|

| 17 |

+

IDR properties from [Lotthammer et al. 2024](https://doi.org/10.1038/s41592-023-02159-5) (ALBATROSS model) were used to train FusOn-pLM-Diso. Sequences were downloaded from [this link](https://github.com/holehouse-lab/supportingdata/blob/master/2023/ALBATROSS_2023/simulations/data/all_sequences.tgz) and deposited in `raw_data`. All files in `raw_data` are from this direct download.

|

| 18 |

+

|

| 19 |

+

```

|

| 20 |

+

benchmarking/

|

| 21 |

+

└── idr_prediction/

|

| 22 |

+

└── raw_data/

|

| 23 |

+

├── asph_bio_synth_training_data_cleaned_05_09_2023.tsv

|

| 24 |

+

├── asph_nat_meth_test.tsv

|

| 25 |

+

├── scaled_re_bio_synth_training_data_cleaned_05_09_2023.tsv

|

| 26 |

+

├── scaled_re_nat_meth_test.tsv

|

| 27 |

+

├── scaled_rg_bio_synth_training_data_cleaned_05_09_2023.tsv

|

| 28 |

+

├── scaled_rg_nat_meth_test.tsv

|

| 29 |

+

├── scaling_exp_bio_synth_training_data_cleaned_05_09_2023.tsv

|

| 30 |

+

├── scaling_exp_nat_meth_test.tsv

|

| 31 |

+

```

|

| 32 |

+

- **`asph`**=asphericity, **`scaled_re`**=scaled R<sub>e</sub>, **`scaled_rg`**=scaled R<sub>g</sub>, **`scaling_exp`**=polymer scaling exponent

|

| 33 |

+

- **`<property>_bio_synth_training_data_cleaned_05_09_2023.tsv`** are ALBATROSS **training data** for the four properties, downloaded directly their GitHub

|

| 34 |

+

- **`<property>_nat_meth_test.tsv`** are ALBATROSS **testing data** for the four proeprties, downloaded directly from their GitHub

|

| 35 |

+

|

| 36 |

+

### Cleaning raw IDR data

|

| 37 |

+

`clean.py` cleans the raw training and testing data separately for each property. Any duplicates (in both train and test) are removed from train and kept in test. Finally, the four are combined into one file:

|

| 38 |

+

|

| 39 |

+

```

|

| 40 |

+

benchmarking/

|

| 41 |

+

└── idr_prediction/

|

| 42 |

+

└── processed_data/

|

| 43 |

+

├── all_albatross_seqs_and_properties.csv

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

- **`all_albatross_seqs_and_properties.csv`**: Columns = "Sequence","IDs","UniProt_IDs","UniProt_Names","Split","asph","scaled_re","scaled_rg","scaling_exp". All splits are either "Train" or "Test", indicating ALBATROSS model's usage of them

|

| 47 |

+

|

| 48 |

+

To perform cleaning, run

|

| 49 |

+

|

| 50 |

+

```

|

| 51 |

+

python clean.py

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

### Using config.py for clustering, splitting, training

|

| 55 |

+

|

| 56 |

+

This file has configurations for clustering, splitting, training.

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

# Clustering Parameters

|

| 60 |

+

CLUSTER = CustomParams(

|

| 61 |

+

# MMSeqs2 parameters: see GitHub or MMSeqs2 Wiki for guidance

|

| 62 |

+

MIN_SEQ_ID = 0.3, # % identity

|

| 63 |

+

C = 0.5, # % sequence length overlap

|

| 64 |

+

COV_MODE = 1, # cov-mode: 0 = bidirectional, 1 = target coverage, 2 = query coverage, 3 = target-in-query length coverage.

|

| 65 |

+

CLUSTER_MODE = 2,

|

| 66 |

+

# File paths

|

| 67 |

+

INPUT_PATH = 'processed_data/all_albatross_seqs_and_properties.csv',

|

| 68 |

+

PATH_TO_MMSEQS = '../../mmseqs' # path to where you installed MMSeqs2

|

| 69 |

+

)

|

| 70 |

+

|

| 71 |

+

# Split config

|

| 72 |

+

SPLIT = CustomParams(

|

| 73 |

+

IDR_DB_PATH = 'processed_data/all_albatross_seqs_and_properties.csv',

|

| 74 |

+

CLUSTER_OUTPUT_PATH = 'clustering/mmseqs_full_results.csv',

|

| 75 |

+

RANDOM_STATE_1 = 2, # random_state_1 = state for splitting all data into train & other

|

| 76 |

+

TEST_SIZE_1 = 0.21, # test size for data -> train/test split. e.g. 20 means 80% clusters in train, 20% clusters in other

|

| 77 |

+

RANDOM_STATE_2 = 6, # random_state_2 = state for splitting other from ^ into val and test

|

| 78 |

+

TEST_SIZE_2 = 0.50 # test size for train -> train/val split. e.g. 0.50 means 50% clusters in train, 50% clusters in test

|

| 79 |

+

|

| 80 |

+

)

|

| 81 |

+

|

| 82 |

+

# Which models to benchmark

|

| 83 |

+

TRAIN = CustomParams(

|

| 84 |

+

BENCHMARK_FUSONPLM = True,

|

| 85 |

+

FUSONPLM_CKPTS= "FusOn-pLM", # Dictionary: key = run name, values = epochs, or string "FusOn-pLM"

|

| 86 |

+

BENCHMARK_ESM = True,

|

| 87 |

+

|

| 88 |

+

# GPU configs

|

| 89 |

+

CUDA_VISIBLE_DEVICES="0",

|

| 90 |

+

|

| 91 |

+

# Overwriting configs

|

| 92 |

+

PERMISSION_TO_OVERWRITE_EMBEDDINGS = False, # if False, script will halt if it believes these embeddings have already been made.

|

| 93 |

+

PERMISSION_TO_OVERWRITE_MODELS = False # if False, script will halt if it believes these embeddings have already been made.

|

| 94 |

+

)

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

### Clustering

|

| 98 |

+

Clustering of all sequences in `all_albatross_seqs_and_properties.csv` is performed by `cluster.py`.

|

| 99 |

+

|

| 100 |

+

The clustering command entered by the script is:

|

| 101 |

+

```

|

| 102 |

+

mmseqs easy-cluster clustering/input.fasta clustering/raw_output/mmseqs clustering/raw_output --min-seq-id 0.3 -c 0.5 --cov-mode 1 --cluster-mode 2 --dbtype 1

|

| 103 |

+

```

|

| 104 |

+

The script will generate the following files:

|

| 105 |

+

```

|

| 106 |

+

benchmarking/

|

| 107 |

+

└── idr_prediction/

|

| 108 |

+

└── clustering/

|

| 109 |

+

├── input.fasta

|

| 110 |

+

├── mmseqs_full_results.csv

|

| 111 |

+

```

|

| 112 |

+

- **`clustering/input.fasta`**: the input file used by MMSeqs2 to cluster the fusion oncoprotein sequences. Headers are our assigned sequence IDs (can be found in the `IDs` column of `processed_data/all_albatross_seqs_and_properties.csv`.)

|

| 113 |

+

- **`clustering/mmseqs_full_results.csv`**: clustering results. Columns:

|

| 114 |

+

- `representative seq_id`: the seq_id of the sequence representing this cluster

|

| 115 |

+

- `member seq_id`: the seq_id of a member of the cluster

|

| 116 |

+

- `representative seq`: the amino acid sequence of the cluster representative (representative seq_id)

|

| 117 |

+

- `member seq`: the amino acid sequence of the cluster member

|

| 118 |

+

|

| 119 |

+

### Splitting

|

| 120 |

+

Cluster-based splitting is performed by `split.py`. Results are formatted as follows:

|

| 121 |

+

|

| 122 |

+

```

|

| 123 |

+

benchmarking/

|

| 124 |

+

└── idr_prediction/

|

| 125 |

+

└── splits/

|

| 126 |

+

└── asph/

|

| 127 |

+

├── test_df.csv

|

| 128 |

+

├── val_df.csv

|

| 129 |

+

├── train_df.csv

|

| 130 |

+

└── scaled_re/... # same format as splits/asph

|

| 131 |

+

└── scaled_rg/... # same format as splits/asph

|

| 132 |

+

└── scaling_exp/... # same format as splits/asph

|

| 133 |

+

├── test_cluster_split.csv

|

| 134 |

+

├── train_cluster_split.csv

|

| 135 |

+

├── val_cluster_split.csv

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

- **`<split>_cluster_split.csv`**: cluster information for the clusters in each split (train, val, test). Columns = "representative seq_id", "member seq_id", "representative seq", "member seq", "member length"

|

| 139 |

+

- 📁 **`asph/`**, **`scaled_re/`**, **`scaled_rg/`**, and **`scaling_exp/`** contain the train, val, and test sets for each property (`train_df.csv`, `val_df.csv`, and `test_df.csv`). The splits follow `<split>_cluster_split.csv`, but not every property has a measurement for each of these sequences. The train-val-test ratio still remains 80-10-10 for each property, despite the sequence losses.

|

| 140 |

+

|

| 141 |

+

### Training

|

| 142 |

+

The model is defined in `model.py` and `utils.py`. The `train.py` script trains FusOn-pLM-IDR and ESM-2-650M-IDR models *separately for each property* (asphericity, R<sub>e</sub>, R<sub>g</sub>, scaling exponent) with a hyperparameter screen, saves all results separated by property, and makes plots. `plot.py` can be used to regenerate the R<sup>2</sup> plots.

|

| 143 |

+

- All **results** are stored in `idr_prediction/results/<timestamp>`, where `timestamp` is a unique string encoding the date and time when you started training.

|

| 144 |

+

- All **raw outputs from models** are stored in `idr_prediction/trained_models/<embedding_path>`, where `embedding_path` represents the embeddings used to build the disorder predictor.

|

| 145 |

+

- All **embeddings** made for training will be stored in a new folder called `idr_prediction/embeddings/` with subfolders for each model. This allows you to use the same model multiple times without regenerating embeddings.

|

| 146 |

+

|

| 147 |

+

Below is the FusOn-pLM-Diso raw outputs folder, `trained_models/fuson_plm/best/`, and the results from the paper, `results/final/`...

|

| 148 |

+

|

| 149 |

+

The outputs are structured as follows:

|

| 150 |

+

|

| 151 |

+

```

|

| 152 |

+

benchmarking/

|

| 153 |

+

└── idr_prediction/

|

| 154 |

+

└── results/final/

|

| 155 |

+

└── r2_plots

|

| 156 |

+

└── asph/

|

| 157 |

+

├── esm2_t33_650M_UR50D_asph_R2.png

|

| 158 |

+

├── esm2_t33_650M_UR50D_asph_R2_source_data.csv

|

| 159 |

+

├── fuson_plm_asph_R2.png

|

| 160 |

+

├── fuson_plm_asph_R2_source_data.csv

|

| 161 |

+

└── scaled_re/ # same format as r2_plots/asph/...

|

| 162 |

+

└── scaled_rg/ # same format as r2_plots/asph/...

|

| 163 |

+

└── scaling_exp/ # same format as r2_plots/asph/...

|

| 164 |

+

├── asph_best_test_r2.csv

|

| 165 |

+

├── asph_hyperparam_screen_test_r2.csv

|

| 166 |

+

├── scaled_re_best_test_r2.csv

|

| 167 |

+

├── scaled_re_hyperparam_screen_test_r2.csv

|

| 168 |

+

├── scaled_rg_best_test_r2.csv

|

| 169 |

+

├── scaled_rg_hyperparam_screen_test_r2.csv

|

| 170 |

+

├── scaling_exp_best_test_r2.csv

|

| 171 |

+

├── scaling_exp_hyperparam_screen_test_r2.csv

|

| 172 |

+

└── trained_models/

|

| 173 |

+

└── asph/

|

| 174 |

+

└── fuson_plm/best/

|

| 175 |

+

└── lr0.0001_bs32/

|

| 176 |

+

├── asph_r2.csv

|

| 177 |

+

├── train_val_losses.csv

|

| 178 |

+

├── test_loss.csv

|

| 179 |

+

├── asph_test_predictions.csv

|

| 180 |

+

└── ... other hyperparameter folders with same format as lr0.001_bs32/

|

| 181 |

+

└── esm2_t33_650M_UR50D # same format as asph/fuson_plm/best/

|

| 182 |

+

└── scaled_re/ # same format as asph/

|

| 183 |

+

└── scaled_rg/ # same format as asph/

|

| 184 |

+

└── scaling_exp/ # same format as asph/

|

| 185 |

+

|

| 186 |

+

```

|

| 187 |

+

|

| 188 |

+

In both directories, results are organized by IDR property and by the type of embedding used to train FusOn-pLM-IDR.

|

| 189 |

+

|

| 190 |

+

In the 📁 `results/final` directory.

|

| 191 |

+

- 📁 **`r2_plots/<property>/`**: holds all R<sup>2</sup> plots and source data (the formatted data used to make the R<sup>2</sup> plots) for these properties.

|

| 192 |

+

- **`<property>_best_test_r2.csv`**: holds the R<sup>2</sup> values for the top-performing models of each embedding type (e.g. ESM-2-650M and a specific checkpoint of FusOn-pLM)

|

| 193 |

+

- **`<property>_hyperparam_screen_test_r2.csv`**: holds the R<sup>2</sup> values for all embedding types, for all screened hyperparaemters

|

| 194 |

+

|

| 195 |

+

In the 📁 `trained_models` directory:

|

| 196 |

+

- 📁 `<property>/`: holds all results for all trained models predicting this property

|

| 197 |

+

- 📁 `asph/fuson_plm/best/`: holds all FusOn-pLM-IDR results on asphericity prediction for each set of hyperparameters screened when embeddings are made from "fuson_plm/best" (FusOn-pLM model). For example, 📁 `lr0.0001_bs32/` holds results for learning rate of 0.001, batch size 32. If you were to retrain your own checkpoint of fuson_plm and run the IDR prediction benchmark, its results would be stored in a new subfolder of `trained_models/fuson_plm`.

|

| 198 |

+

- **`asph/fuson_plm/best/lr0.0001_bs32/asph_r2.csv`**: R<sup>2</sup> value for this set of hyperparameters with "fuson_plm/best" embeddings

|

| 199 |

+

- **`asph/fuson_plm/best/lr0.0001_bs32/asph_test_predictions.csv`**: true asphericity values of the test set proteins, alongside FusOn-pLM-IDR's predictions of them.

|

| 200 |

+

- **`asph/fuson_plm/best/lr0.0001_bs32/test_loss.csv`**: FusOn-pLM-IDR's asphericity test loss value

|

| 201 |

+

- **`asph/fuson_plm/best/lr0.0001_bs32/train_val_losses.csv`**: FusOn-pLM-IDR's tarining and validation loss over each epoch while training on asphericity data

|

| 202 |

+

|

| 203 |

+

To run the training script, enter:

|

| 204 |

+

```

|

| 205 |

+

nohup python train.py > train.out 2> train.err &

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

To run the plotting script, enter:

|

| 209 |

+

```

|

| 210 |

+

python plot.py

|

| 211 |

+

```

|

fuson_plm/benchmarking/idr_prediction/__init__.py

ADDED

|

File without changes

|

fuson_plm/benchmarking/idr_prediction/clean.py

ADDED

|

@@ -0,0 +1,289 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import pandas as pd

|

| 2 |

+

import numpy as np

|

| 3 |

+

import os

|

| 4 |

+

from fuson_plm.utils.logging import open_logfile, log_update

|

| 5 |

+

from fuson_plm.utils.constants import DELIMITERS, VALID_AAS

|

| 6 |

+

from fuson_plm.utils.data_cleaning import check_columns_for_listlike, find_invalid_chars

|

| 7 |

+

from fuson_plm.benchmarking.idr_prediction.plot import plot_all_values_hist_grid, plot_all_train_val_test_values_hist_grid

|

| 8 |

+

|

| 9 |

+

def process_raw_albatross(df):

|

| 10 |

+

# return a version of the df with first column split, duplicates cleaned,columns checked for weird characters and invalids

|

| 11 |

+

|

| 12 |

+

# first, look at the splits

|

| 13 |

+

split_str = df['Split'].value_counts().reset_index().rename(columns={'index': 'Split','Split': 'count'})

|

| 14 |

+

tot_prots = sum(split_str['count'])

|

| 15 |

+

split_str['pcnt'] = round(100*split_str['count']/tot_prots,2)

|

| 16 |

+

split_str = split_str.to_string(index=False)

|

| 17 |

+

split_str = "\t\t" + split_str.replace("\n","\n\t\t")

|

| 18 |

+

log_update(f"\tTotal proteins: {tot_prots}\n\tSplits:\n{split_str}")

|

| 19 |

+

|

| 20 |

+

# format: IDR_19076_tr___A0A8M9PNM5___A0A8M9PNM5_DANRE

|

| 21 |

+

# or: synth_test_sequence0

|

| 22 |

+

df['temp'] = df['ID'].str.split("_")

|

| 23 |

+

df['ID'] = df['temp'].apply(lambda x: f"{x[0]}" if len(x)==1 else f"{x[0]}_{x[1]}" if len(x)<3 else f"{x[0]}_{x[1]}_{x[2]}")

|

| 24 |

+

# Not ever column has UniProt IDs and Names, so we have to allow np.nan if this info is missing.

|

| 25 |

+

df['UniProt_ID'] = df['temp'].apply(lambda x: x[5].strip() if len(x)>=5 else np.nan)

|

| 26 |

+

df['UniProt_Name'] = df['temp'].apply(lambda x: f"{x[8].strip()}_{x[9].strip()}" if len(x)>=8 else np.nan)

|

| 27 |

+

df = df.drop(columns=['temp'])

|

| 28 |

+

|

| 29 |

+

cols_to_check = list(df.columns)

|

| 30 |

+

cols_to_check.remove('Value') # don't check this one because it shouldn't be string

|

| 31 |

+

# Investigate the colimns we just created and make sure they don't have any invalid features.

|

| 32 |

+

# make sure value is float type

|

| 33 |

+

assert df['Value'].dtype == 'float64'

|

| 34 |

+

check_columns_for_listlike(df, cols_of_interest=cols_to_check, delimiters=DELIMITERS)

|

| 35 |

+

|

| 36 |

+

# Check for invalid AAs

|

| 37 |

+

df['invalid_chars'] = df['Sequence'].apply(lambda x: find_invalid_chars(x, VALID_AAS))

|

| 38 |

+

df[df['invalid_chars'].str.len()>0].sort_values(by='Sequence')

|

| 39 |

+

all_invalid_chars = set().union(*df['invalid_chars'])

|

| 40 |

+

log_update(f"\tchecking for invalid characters...\n\t\tset of all invalid characters discovered within train_df: {all_invalid_chars}")

|

| 41 |

+

|

| 42 |

+

# Assert no invalid AAs

|

| 43 |

+

assert (df['invalid_chars'].str.len()==0).all()

|

| 44 |

+

df = df.drop(columns=['invalid_chars'])

|

| 45 |

+

|

| 46 |

+

# Check for duplicates - if we find any, REMOVE them from train and keep them in test

|

| 47 |

+

duplicates = df[df.duplicated('Sequence')]['Sequence'].unique().tolist()

|

| 48 |

+

n_rows_with_duplicates = len(df[df['Sequence'].isin(duplicates)])

|

| 49 |

+

log_update(f"\t{len(duplicates)} duplicated sequences, corresponding to {n_rows_with_duplicates} rows")

|

| 50 |

+

|

| 51 |

+

# Look for distribution of duplicates WITHIN train, WITHIN test, and BETWEEN train and test

|

| 52 |

+

# Train only

|

| 53 |

+

duplicates = df.loc[

|

| 54 |

+

(df['Split']=='Train')

|

| 55 |

+

]

|

| 56 |

+

duplicates = duplicates[duplicates.duplicated('Sequence')]['Sequence'].unique().tolist()

|

| 57 |

+

n_rows_with_duplicates = len(df.loc[

|

| 58 |

+

(df['Sequence'].isin(duplicates)) &

|

| 59 |

+

(df['Split']=='Train')

|

| 60 |

+

])

|

| 61 |

+

log_update(f"\t\twithin TRAIN only: {len(duplicates)} duplicated sequences, corresponding to {n_rows_with_duplicates} Train rows")

|

| 62 |

+

|

| 63 |

+

# Test only

|

| 64 |

+

duplicates = df.loc[

|

| 65 |

+

(df['Split']=='Test')

|

| 66 |

+

]

|

| 67 |

+

duplicates = duplicates[duplicates.duplicated('Sequence')]['Sequence'].unique().tolist()

|

| 68 |

+

n_rows_with_duplicates = len(df.loc[

|

| 69 |

+

(df['Sequence'].isin(duplicates)) &

|

| 70 |

+

(df['Split']=='Test')

|

| 71 |

+

])

|

| 72 |

+

log_update(f"\t\twithin TEST only: {len(duplicates)} duplicated sequences, corresponding to {n_rows_with_duplicates} Test rows")

|

| 73 |

+

|

| 74 |

+

# Between train and test

|

| 75 |

+

duplicates_df = df.groupby('Sequence').agg({

|

| 76 |

+

'Split': lambda x: ','.join(set(x))

|

| 77 |

+

}).reset_index()

|

| 78 |

+

duplicates_df = duplicates_df.loc[duplicates_df['Split'].str.contains(',')].reset_index(drop=True)

|

| 79 |

+

duplicates = duplicates_df['Sequence'].unique().tolist()

|

| 80 |

+

n_rows_with_duplicates = len(df[df['Sequence'].isin(duplicates)])

|

| 81 |

+

log_update(f"\t\tduplicates in BOTH TRAIN AND TEST: {len(duplicates)} duplicated sequences, corresponding to {n_rows_with_duplicates} rows")

|

| 82 |

+

log_update(f"\t\tprinting portion of dataframe with train+test shared seqs:\n{duplicates_df.head(5)}")

|

| 83 |

+

|

| 84 |

+

log_update("\tGrouping by sequence, averaging values, and keeping any Train/Test duplicates in the Test set...")

|

| 85 |

+

df = df.replace(np.nan, '')

|

| 86 |

+

df = df.groupby('Sequence').agg(

|

| 87 |

+

Value=('Value', 'mean'),

|

| 88 |

+

Value_STD=('Value', 'std'),

|

| 89 |

+

IDs=('ID', lambda x: ','.join(x)),

|

| 90 |

+

UniProt_IDs=('UniProt_ID', lambda x: ','.join(x)),

|

| 91 |

+

UniProt_Names=('UniProt_Name', lambda x: ','.join(x)),

|

| 92 |

+

Split=('Split', lambda x: ','.join(x))

|

| 93 |

+

).reset_index()

|

| 94 |

+

for col in ['IDs','UniProt_IDs','UniProt_Names','Split']:

|

| 95 |

+

df[col] = df[col].apply(lambda x: [y for y in x.split(',')])

|

| 96 |

+

df[col] = df[col].apply(lambda x: ','.join(x))

|

| 97 |

+

df[col] = df[col].str.strip(',')

|

| 98 |

+

# make sure there are no commas left

|

| 99 |

+

assert len(df[df[col].str.contains(',,')])==0

|

| 100 |

+

# set Split to Test if test is in it

|

| 101 |

+

df['Split'] = df['Split'].apply(lambda x: 'Test' if 'Test' in x else 'Train')

|

| 102 |

+

|

| 103 |

+

# For anything that wasn't duplicated, Value_STD is nan

|

| 104 |

+

log_update("\tChecking coefficients of variation for averaged rows")

|

| 105 |

+

# calculate coefficient of variation, should be < 10

|

| 106 |

+

df['Value_CV'] = 100*df['Value_STD']/df['Value']

|

| 107 |

+

log_update(f"\t\tTotal rows with coefficient of variation (CV)\n\t\t\t<=10%: {len(df[df['Value_CV']<=10])}\n\t\t\t>10%: {len(df[df['Value_CV']>10])}\n\t\t\t>20%: {len(df[df['Value_CV']>20])}")

|

| 108 |

+

|

| 109 |

+

# Ensure there are no duplicates

|

| 110 |

+

assert len(df[df['Sequence'].duplicated()])==0

|

| 111 |

+

log_update(f"\tNo remaining duplicates: {len(df[df['Sequence'].duplicated()])==0}")

|

| 112 |

+

|

| 113 |

+

# Print the final distribution of train and test values

|

| 114 |

+

split_str = df['Split'].value_counts().reset_index().rename(columns={'index': 'Split','Split': 'count'})

|

| 115 |

+

tot_prots = sum(split_str['count'])

|

| 116 |

+

split_str['pcnt'] = round(100*split_str['count']/tot_prots,2)

|

| 117 |

+

split_str = split_str.to_string(index=False)

|

| 118 |

+

split_str = "\t\t" + split_str.replace("\n","\n\t\t")

|

| 119 |

+

log_update(f"\tTotal proteins: {tot_prots}\n\tSplits:\n{split_str}")

|

| 120 |

+

|

| 121 |

+

return df

|

| 122 |

+

|

| 123 |

+

def combine_albatross_seqs(asph, scaled_re, scaled_rg, scaling_exp):

|

| 124 |

+

log_update("\nCombining all four dataframes into one file of ALBATROSS sequences")

|

| 125 |

+

|

| 126 |

+

asph = asph[['Sequence','Value','IDs','UniProt_IDs','UniProt_Names','Split']].rename(columns={'Value':'asph'})

|

| 127 |

+

scaled_re = scaled_re[['Sequence','Value','IDs','UniProt_IDs','UniProt_Names','Split']].rename(columns={'Value':'scaled_re'})

|

| 128 |

+

scaled_rg = scaled_rg[['Sequence','Value','IDs','UniProt_IDs','UniProt_Names','Split']].rename(columns={'Value':'scaled_rg'})

|

| 129 |

+

scaling_exp = scaling_exp[['Sequence','Value','IDs','UniProt_IDs','UniProt_Names','Split']].rename(columns={'Value':'scaling_exp'})

|

| 130 |

+

|

| 131 |

+

combined = asph.merge(scaled_re, on='Sequence',how='outer',suffixes=('_asph', '_scaledre'))\

|

| 132 |

+

.merge(scaled_rg, on='Sequence',how='outer',suffixes=('_scaledre', '_scaledrg'))\

|

| 133 |

+

.merge(scaling_exp, on='Sequence',how='outer',suffixes=('_scaledrg', '_scalingexp')).fillna('')

|

| 134 |

+

|

| 135 |

+

# Make sure something that's in train for one is in train for all, and not test

|

| 136 |

+

combined['IDs'] = combined['IDs_asph']+','+combined['IDs_scaledre']+','+combined['IDs_scaledrg']+','+combined['IDs_scalingexp']

|

| 137 |

+

combined['UniProt_IDs'] = combined['UniProt_IDs_asph']+','+combined['UniProt_IDs_scaledre']+','+combined['UniProt_IDs_scaledrg']+','+combined['UniProt_IDs_scalingexp']

|

| 138 |

+

combined['UniProt_Names'] = combined['UniProt_Names_asph']+','+combined['UniProt_Names_scaledre']+','+combined['UniProt_Names_scaledrg']+','+combined['UniProt_Names_scalingexp']

|

| 139 |

+

combined['Split'] = combined['Split_asph']+','+combined['Split_scaledre']+','+combined['Split_scaledrg']+','+combined['Split_scalingexp']

|

| 140 |

+

|

| 141 |

+

# Make the lists clean

|

| 142 |

+

for col in ['IDs','UniProt_IDs','UniProt_Names','Split']:

|

| 143 |

+

combined[col] = combined[col].apply(lambda x: [y.strip() for y in x.split(',') if len(y)>0])

|

| 144 |

+

combined[col] = combined[col].apply(lambda x: ','.join(set(x)))

|

| 145 |

+

combined[col] = combined[col].str.strip(',')

|

| 146 |

+

# make sure there are no commas left

|

| 147 |

+

assert len(combined[combined[col].str.contains(',,')])==0

|

| 148 |

+

combined = combined[['Sequence','IDs','UniProt_IDs','UniProt_Names','Split','asph','scaled_re','scaled_rg','scaling_exp']] # drop unneeded merge relics

|

| 149 |

+

combined = combined.replace('',np.nan)

|

| 150 |

+

# Make sure there are no sequences where split is both train and test

|

| 151 |

+

log_update("\tChecking for any cases where a protein is Train for one IDR prediction task and Test for another (should NOT happen!)")

|

| 152 |

+

duplicates_df = combined.groupby('Sequence').agg({

|

| 153 |

+

'Split': lambda x: ','.join(set(x))

|

| 154 |

+

}).reset_index()

|

| 155 |

+

duplicates_df = duplicates_df.loc[duplicates_df['Split'].str.contains(',')].reset_index(drop=True)

|

| 156 |

+

duplicates = duplicates_df['Sequence'].unique().tolist()

|

| 157 |

+

n_rows_with_duplicates = len(combined[combined['Sequence'].isin(duplicates)])

|

| 158 |

+

log_update(f"\t\tsequences in BOTH TRAIN AND TEST: {len(duplicates)} sequences, corresponding to {n_rows_with_duplicates} rows")

|

| 159 |

+

if len(duplicates)>0:

|

| 160 |

+

log_update(f"\t\tprinting portion of assert len(combined[combined['asph'].notna()])==len(asph)dataframe with train+test shared seqs:\n{duplicates_df.head(5)}")

|

| 161 |

+

|

| 162 |

+

# Now, get rid of duplicates

|

| 163 |

+

combined = combined.drop_duplicates().reset_index(drop=True)

|

| 164 |

+

duplicates = combined[combined.duplicated('Sequence')]['Sequence'].unique().tolist()

|

| 165 |

+

log_update(f"\tDropped duplicates.\n\tTotal duplicate sequences: {len(duplicates)}\n\tTotal sequences: {len(combined)}")

|

| 166 |

+

assert len(duplicates)==0

|

| 167 |

+

|

| 168 |

+

# See how many columns have multiple entries for each

|

| 169 |

+

log_update(f"\tChecking how many sequences have multiple of the following: ID, UniProt ID, UniProt Name")

|

| 170 |

+

for col in ['IDs','UniProt_IDs','UniProt_Names','Split']:

|

| 171 |

+

n_multiple = len(combined.loc[(combined[col].notna()) & (combined[col].str.contains(','))])

|

| 172 |

+

log_update(f"\t\t{col}: {n_multiple}")

|

| 173 |

+

|

| 174 |

+

# See how many entries there are of each cproperty (should match length of original database)

|

| 175 |

+

assert len(combined[combined['asph'].notna()])==len(asph)

|

| 176 |

+

assert len(combined[combined['scaled_re'].notna()])==len(scaled_re)

|

| 177 |

+

assert len(combined[combined['scaled_rg'].notna()])==len(scaled_rg)

|

| 178 |

+

assert len(combined[combined['scaling_exp'].notna()])==len(scaling_exp)

|

| 179 |

+

log_update("\tSequences with values for each property:")

|

| 180 |

+

for property in ['asph','scaled_re','scaled_rg','scaling_exp']:

|

| 181 |

+

log_update(f"\t\t{property}: {len(combined[combined[property].notna()])}")

|

| 182 |

+

|

| 183 |

+

log_update(f"\nPreview of combined database with columns: {combined.columns}\n{combined.head(10)}")

|

| 184 |

+

return combined

|

| 185 |

+

|

| 186 |

+

def main():

|

| 187 |

+

with open_logfile("data_cleaning_log.txt"):

|

| 188 |

+

# Read in all of the raw data

|

| 189 |

+

raw_data_folder = 'raw_data'

|

| 190 |

+

dtype_dict = {0:str,1:str,2:float}

|

| 191 |

+

rename_dict = {0:'ID',1:'Sequence',2:'Value'}

|

| 192 |

+

|

| 193 |

+

# Read in the test data

|

| 194 |

+

asph_test = pd.read_csv(f"{raw_data_folder}/asph_nat_meth_test.tsv",sep=" ",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 195 |

+

scaled_re_test = pd.read_csv(f"{raw_data_folder}/scaled_re_nat_meth_test.tsv",sep="\t",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 196 |

+

scaled_rg_test = pd.read_csv(f"{raw_data_folder}/scaled_rg_nat_meth_test.tsv",sep="\t",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 197 |

+

scaling_exp_test = pd.read_csv(f"{raw_data_folder}/scaling_exp_nat_meth_test.tsv",sep=" ",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 198 |

+

|

| 199 |

+

# Read in the train data

|

| 200 |

+

asph_train = pd.read_csv(f"{raw_data_folder}/asph_bio_synth_training_data_cleaned_05_09_2023.tsv",sep=" ",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 201 |

+

scaled_re_train = pd.read_csv(f"{raw_data_folder}/scaled_re_bio_synth_training_data_cleaned_05_09_2023.tsv",sep="\t",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 202 |

+

scaled_rg_train = pd.read_csv(f"{raw_data_folder}/scaled_rg_bio_synth_training_data_cleaned_05_09_2023.tsv",sep="\t",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 203 |

+

scaling_exp_train = pd.read_csv(f"{raw_data_folder}/scaling_exp_bio_synth_training_data_cleaned_05_09_2023.tsv",sep=" ",dtype=dtype_dict,header=None).rename(columns=rename_dict)

|

| 204 |

+

|

| 205 |

+

# Concatenate - include columns for split

|

| 206 |

+

asph_test['Split'] = ['Test']*len(asph_test)

|

| 207 |

+

scaled_re_test['Split'] = ['Test']*len(scaled_re_test)

|

| 208 |

+

scaled_rg_test['Split'] = ['Test']*len(scaled_rg_test)

|

| 209 |

+

scaling_exp_test['Split'] = ['Test']*len(scaling_exp_test)

|

| 210 |

+

|

| 211 |

+

asph_train['Split'] = ['Train']*len(asph_train)

|

| 212 |

+

scaled_re_train['Split'] = ['Train']*len(scaled_re_train)

|

| 213 |

+

scaled_rg_train['Split'] = ['Train']*len(scaled_rg_train)

|

| 214 |

+

scaling_exp_train['Split'] = ['Train']*len(scaling_exp_train)

|

| 215 |

+

|

| 216 |

+

asph = pd.concat([asph_test, asph_train])

|

| 217 |

+

scaled_re = pd.concat([scaled_re_test, scaled_re_train])

|

| 218 |

+

scaled_rg = pd.concat([scaled_rg_test, scaled_rg_train])

|

| 219 |

+

scaling_exp = pd.concat([scaling_exp_test, scaling_exp_train])

|

| 220 |

+

|

| 221 |

+

log_update("Initial counts:")

|

| 222 |

+

log_update(f"\tAsphericity: total entries={len(asph)}, not nan entries={len(asph.loc[asph['Value'].notna()])}")

|

| 223 |

+

log_update(f"\tScaled re: total entries={len(scaled_re)}, not nan entries={len(scaled_re.loc[scaled_re['Value'].notna()])}")

|

| 224 |

+

log_update(f"\tScaled rg: total entries={len(scaled_rg)}, not nan entries={len(scaled_rg.loc[scaled_rg['Value'].notna()])}")

|

| 225 |

+

# change any scaled_rg rows with values less than 1 to np.nan, as done in the paper

|

| 226 |

+

scaled_rg = scaled_rg.loc[

|

| 227 |

+

scaled_rg['Value']>=1].reset_index(drop=True)

|

| 228 |

+

log_update(f"\t\tAfter dropping Rg values < 1: total entries={len(scaled_rg)}")

|

| 229 |

+

log_update(f"\tScaling exp: total entries={len(scaling_exp)}, not nan entries={len(scaling_exp.loc[scaling_exp['Value'].notna()])}")

|

| 230 |

+

|

| 231 |

+

# Process the raw data

|

| 232 |

+

log_update(f"Example raw download: asphericity\n{asph.head()}")

|

| 233 |

+

log_update(f"\nCleaning Asphericity")

|

| 234 |

+

asph = process_raw_albatross(asph)

|

| 235 |

+

log_update(f"\nProcessed data: asphericity\n{asph.head()}")

|

| 236 |

+

|

| 237 |

+

log_update(f"\nCleaning Scaled Re")

|

| 238 |

+

scaled_re = process_raw_albatross(scaled_re)

|

| 239 |

+

log_update(f"\nProcessed data: scaled re\n{scaled_re.head()}")

|

| 240 |

+

|

| 241 |

+

log_update(f"\nCleaning Scaled Rg")

|

| 242 |

+

scaled_rg = process_raw_albatross(scaled_rg)

|

| 243 |

+

log_update(f"\nProcessed data: scaled rg\n{scaled_rg.head()}")

|

| 244 |

+

|

| 245 |

+

log_update(f"\nCleaning Scaling Exp")

|

| 246 |

+

scaling_exp = process_raw_albatross(scaling_exp)

|

| 247 |

+

log_update(f"\nProcessed data: scaling exp\n{scaling_exp.head()}")

|

| 248 |

+

|

| 249 |

+

# Give some stats about each dataset

|

| 250 |

+

log_update("\nStats:")

|

| 251 |

+

log_update(f"# Asphericity sequences: {len(asph)}\n\tRange: {min(asph['Value']):.4f}-{max(asph['Value']):.4f}")

|

| 252 |

+

log_update(f"# Scaled Re sequences: {len(scaled_re)}\n\tRange: {min(scaled_re['Value']):.4f}-{max(scaled_re['Value']):.4f}")

|

| 253 |

+

log_update(f"# Scaled Rg sequences: {len(scaled_rg)}\n\tRange: {min(scaled_rg['Value']):.4f}-{max(scaled_rg['Value']):.4f}")

|

| 254 |

+

log_update(f"# Scaling Exponent sequences: {len(scaling_exp)}\n\tRange: {min(scaling_exp['Value']):.4f}-{max(scaling_exp['Value']):.4f}")

|

| 255 |

+

|

| 256 |

+

# Combine

|

| 257 |

+

combined = combine_albatross_seqs(asph, scaled_re, scaled_rg, scaling_exp)

|

| 258 |

+

|

| 259 |

+

# Save processed data

|

| 260 |

+

proc_folder = "processed_data"

|

| 261 |

+

os.makedirs(proc_folder,exist_ok=True)

|

| 262 |

+

combined.to_csv(f"{proc_folder}/all_albatross_seqs_and_properties.csv",index=False)

|

| 263 |

+

|

| 264 |

+

# Plot the data distribution and save it

|

| 265 |

+

values_dict = {

|

| 266 |

+

'Asphericity': asph['Value'].tolist(),

|

| 267 |

+

'End-to-End Distance (Re)': scaled_re['Value'].tolist(),

|

| 268 |

+

'Radius of Gyration (Rg)': scaled_rg['Value'].tolist(),

|

| 269 |

+

'Scaling Exponent': scaling_exp['Value'].tolist()

|

| 270 |

+

}

|

| 271 |

+

train_test_values_dict = {

|

| 272 |

+

'Asphericity': {

|

| 273 |

+

'train': asph[asph['Split']=='Train']['Value'].tolist(),

|

| 274 |

+

'test': asph[asph['Split']=='Test']['Value'].tolist()},

|

| 275 |

+

'End-to-End Distance (Re)': {

|

| 276 |

+

'train': scaled_re[scaled_re['Split']=='Train']['Value'].tolist(),

|

| 277 |

+

'test': scaled_re[scaled_re['Split']=='Test']['Value'].tolist()},

|

| 278 |

+

'Radius of Gyration (Rg)': {

|

| 279 |

+

'train': scaled_rg[scaled_rg['Split']=='Train']['Value'].tolist(),

|

| 280 |

+

'test': scaled_rg[scaled_rg['Split']=='Test']['Value'].tolist()},

|

| 281 |

+

'Scaling Exponent': {

|

| 282 |

+

'train': scaling_exp[scaling_exp['Split']=='Train']['Value'].tolist(),

|

| 283 |

+

'test': scaling_exp[scaling_exp['Split']=='Test']['Value'].tolist()},

|

| 284 |

+

}

|

| 285 |

+

plot_all_values_hist_grid(values_dict, save_path="processed_data/value_histograms.png")

|

| 286 |

+

plot_all_train_val_test_values_hist_grid(train_test_values_dict, save_path="processed_data/train_test_value_histograms.png")

|

| 287 |

+

|

| 288 |

+

if __name__ == "__main__":

|

| 289 |

+

main()

|

fuson_plm/benchmarking/idr_prediction/cluster.py

ADDED

|

@@ -0,0 +1,94 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from fuson_plm.utils.logging import open_logfile, log_update

|

| 2 |

+

from fuson_plm.benchmarking.idr_prediction.config import CLUSTER

|

| 3 |

+

from fuson_plm.utils.clustering import ensure_mmseqs_in_path, process_fasta, analyze_clustering_result, make_fasta, run_mmseqs_clustering, cluster_summary

|

| 4 |

+

import os

|

| 5 |

+

import pandas as pd

|

| 6 |

+

|

| 7 |

+

def main_old():

|

| 8 |

+

# Read all the input args

|

| 9 |

+

LOG_PATH = "clustering_log.txt"

|

| 10 |

+

INPUT_PATH = CLUSTER.INPUT_PATH

|

| 11 |

+

MIN_SEQ_ID = CLUSTER.MIN_SEQ_ID

|

| 12 |

+

C = CLUSTER.C

|

| 13 |

+

COV_MODE = CLUSTER.COV_MODE

|

| 14 |

+

CLUSTER_MODE = CLUSTER.CLUSTER_MODE

|

| 15 |

+

PATH_TO_MMSEQS = CLUSTER.PATH_TO_MMSEQS

|

| 16 |

+

|

| 17 |

+

with open_logfile(LOG_PATH):

|

| 18 |

+

log_update("Input params from config.py:")

|

| 19 |

+

CLUSTER.print_config(indent='\t')

|

| 20 |

+