Add files using upload-large-folder tool

- LICENSE +21 -0

- config.json +3 -10

- figures/benchmark.jpg +0 -0

- generation_config.json +9 -0

- special_tokens_map.json +17 -0

- tokenizer.json +0 -0

- tokenizer_config.json +0 -0

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2023 DeepSeek
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

config.json

CHANGED

@@ -32,19 +32,11 @@
 "num_hidden_layers": 61,
 "num_key_value_heads": 128,
 "num_nextn_predict_layers": 1,
+"pad_token_id": 128815,
 "pretraining_tp": 1,
 "q_lora_rank": 1536,
 "qk_nope_head_dim": 128,
 "qk_rope_head_dim": 64,
-"quantization_config": {
-"activation_scheme": "dynamic",
-"fmt": "e4m3",
-"quant_method": "fp8",
-"weight_block_size": [
-128,
-128
-]
-},
 "rms_norm_eps": 1e-06,
 "rope_scaling": {
 "beta_fast": 32,
@@ -63,7 +55,8 @@
 "topk_group": 4,
 "topk_method": "noaux_tc",
 "torch_dtype": "bfloat16",
-"transformers_version": "4.
+"transformers_version": "4.48.1",
+"unsloth_fixed": true,
 "use_cache": true,
 "v_head_dim": 128,
 "vocab_size": 129280
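
The config.json hunks remove the FP8 `quantization_config` block and add `pad_token_id`, a complete `transformers_version`, and an `unsloth_fixed` flag. As a rough illustration, the same edit can be sketched on a plain dictionary in Python. `OLD_CONFIG` is an abbreviated stand-in that holds only a few keys visible in the diff, and `apply_commit_changes` is a hypothetical helper name, not part of any library.

```python
import json

# Abbreviated "before" state: only a few keys visible in the diff are included.
OLD_CONFIG = {
    "num_nextn_predict_layers": 1,
    "pretraining_tp": 1,
    "quantization_config": {
        "activation_scheme": "dynamic",
        "fmt": "e4m3",
        "quant_method": "fp8",
        "weight_block_size": [128, 128],
    },
    "torch_dtype": "bfloat16",
}


def apply_commit_changes(config: dict) -> dict:
    """Return a copy of `config` with the edits shown in the diff applied.

    Hypothetical helper for illustration; the input dict is left untouched.
    """
    updated = dict(config)
    updated.pop("quantization_config", None)    # removed lines 39-47 (old file)
    updated["pad_token_id"] = 128815             # added line 35 (new file)
    updated["transformers_version"] = "4.48.1"   # added line 58 (new file)
    updated["unsloth_fixed"] = True              # added line 59 (new file)
    return updated


new_config = apply_commit_changes(OLD_CONFIG)
print(json.dumps(new_config, indent=2, sort_keys=True))
```

Working on a copy keeps the original dictionary available for comparison, which is convenient when checking that only the intended keys changed.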

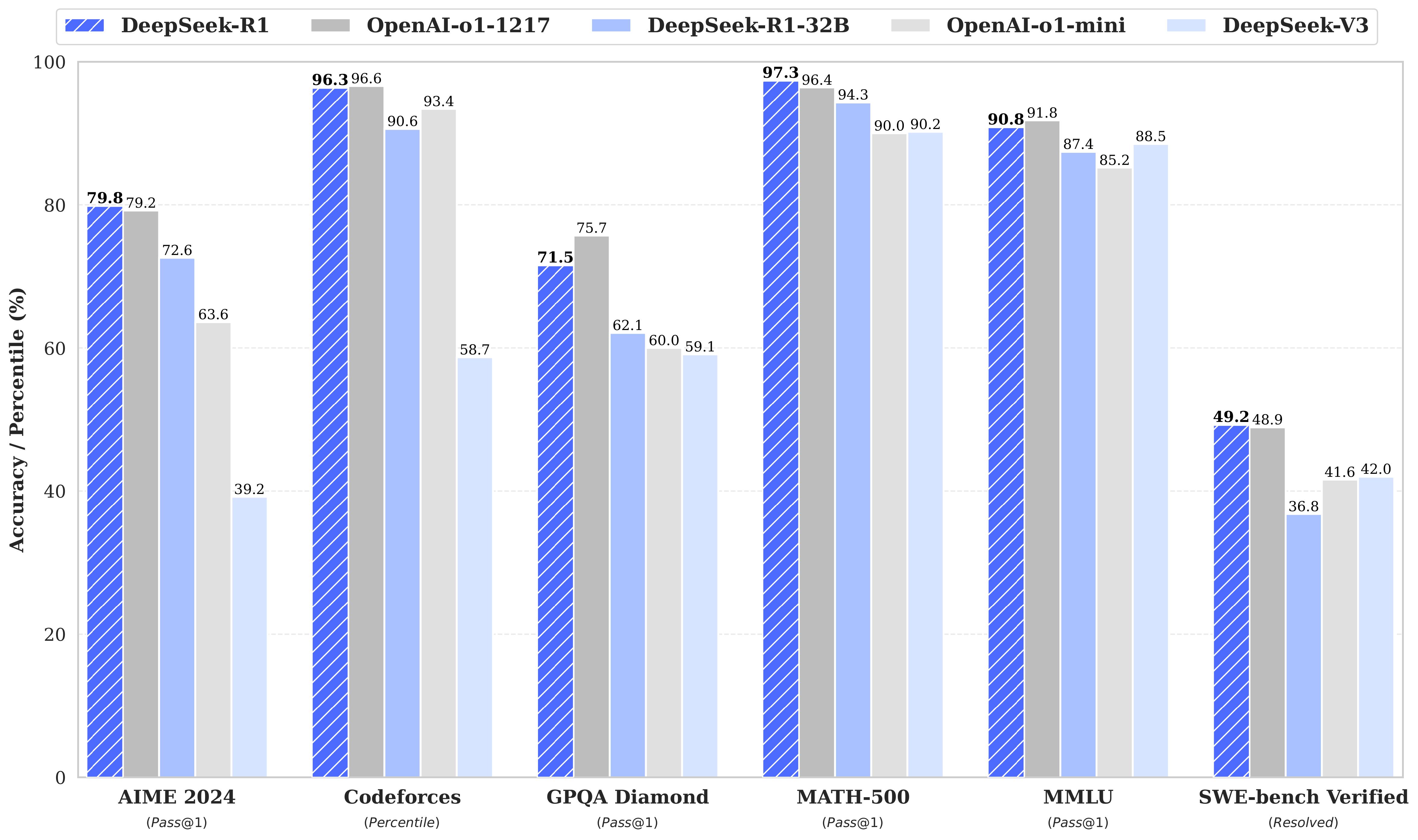

figures/benchmark.jpg

ADDED


generation_config.json

ADDED

@@ -0,0 +1,9 @@
+{
+"_from_model_config": true,
+"bos_token_id": 0,
+"eos_token_id": 1,
+"do_sample": true,
+"temperature": 0.6,
+"top_p": 0.95,
+"transformers_version": "4.39.3"
+}
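
The new generation_config.json enables sampling with `temperature` 0.6 and `top_p` 0.95. To make those two settings concrete, here is a minimal pure-Python sketch of temperature scaling followed by nucleus (top-p) filtering. It is a simplified illustration, not the code path Transformers uses internally; `top_p_filter` and `sample` are hypothetical names, and the logits in the usage lines are made up.

```python
import math
import random


def top_p_filter(logits, temperature=0.6, top_p=0.95):
    """Return {token_index: probability} after temperature scaling and top-p filtering."""
    # Temperature < 1 sharpens the distribution before the softmax.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Keep the most likely tokens until their cumulative mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize over the surviving tokens.
    kept_mass = sum(probs[i] for i in kept)
    return {i: probs[i] / kept_mass for i in kept}


def sample(logits, rng, temperature=0.6, top_p=0.95):
    """Draw one token index from the filtered distribution."""
    dist = top_p_filter(logits, temperature, top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


rng = random.Random(0)
peaked = top_p_filter([5.0, 1.0, 0.0, -3.0])   # one dominant token survives
uniform = top_p_filter([0.0, 0.0, 0.0, 0.0])   # all four tokens survive
choice = sample([0.0, 0.0, 0.0, 0.0], rng)
```

With a strongly peaked set of logits the top token alone already exceeds the 0.95 threshold, so sampling becomes effectively greedy; with flat logits every token is kept.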

special_tokens_map.json

ADDED

@@ -0,0 +1,17 @@
+{
+"bos_token": {
+"content": "<|begin▁of▁sentence|>",
+"lstrip": false,
+"normalized": false,
+"rstrip": false,
+"single_word": false
+},
+"eos_token": {
+"content": "<|end▁of▁sentence|>",
+"lstrip": false,
+"normalized": false,
+"rstrip": false,
+"single_word": false
+},
+"pad_token": "<|PAD▁TOKEN|>"
+}
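
In the added special_tokens_map.json, `bos_token` and `eos_token` are objects with a `content` field, while `pad_token` is a bare string. Code that reads the file directly has to handle both shapes. The following Python sketch shows one way to normalize them; `token_text` is a hypothetical helper, and the JSON literal simply mirrors the file contents from the diff.

```python
import json

# Contents of special_tokens_map.json as added in this commit.
SPECIAL_TOKENS_MAP = json.loads("""
{
  "bos_token": {
    "content": "<|begin▁of▁sentence|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "eos_token": {
    "content": "<|end▁of▁sentence|>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": "<|PAD▁TOKEN|>"
}
""")


def token_text(entry):
    """Return the token string for either a bare string or an object entry."""
    if isinstance(entry, str):
        return entry
    return entry["content"]


# Map each special-token role to its literal text.
tokens = {name: token_text(entry) for name, entry in SPECIAL_TOKENS_MAP.items()}
for name, text in tokens.items():
    print(f"{name}: {text}")
```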

tokenizer.json

CHANGED

The diff for this file is too large to render.

tokenizer_config.json

CHANGED

The diff for this file is too large to render.