charlescxk committed

Commit a38a851 · parent 2c415b6

update

- README.md +0 -13

- Upsample/__init__.py +1 -0

- Upsample/__pycache__/__init__.cpython-38.pyc +0 -0

- Upsample/__pycache__/arch_utils.cpython-38.pyc +0 -0

- Upsample/__pycache__/model.cpython-38.pyc +0 -0

- Upsample/__pycache__/rrdbnet_arch.cpython-38.pyc +0 -0

- Upsample/__pycache__/utils.cpython-38.pyc +0 -0

- Upsample/arch_utils.py +197 -0

- Upsample/model.py +93 -0

- Upsample/rrdbnet_arch.py +121 -0

- Upsample/utils.py +135 -0

- app.py +268 -0

- doge.png +0 -0

- equation.png +0 -0

- janus/__init__.py +31 -0

- janus/__pycache__/__init__.cpython-38.pyc +0 -0

- janus/models/__init__.py +28 -0

- janus/models/__pycache__/__init__.cpython-38.pyc +0 -0

- janus/models/__pycache__/clip_encoder.cpython-38.pyc +0 -0

- janus/models/__pycache__/image_processing_vlm.cpython-38.pyc +0 -0

- janus/models/__pycache__/modeling_vlm.cpython-38.pyc +0 -0

- janus/models/__pycache__/processing_vlm.cpython-38.pyc +0 -0

- janus/models/__pycache__/projector.cpython-38.pyc +0 -0

- janus/models/__pycache__/siglip_vit.cpython-38.pyc +0 -0

- janus/models/__pycache__/vq_model.cpython-38.pyc +0 -0

- janus/models/clip_encoder.py +122 -0

- janus/models/image_processing_vlm.py +208 -0

- janus/models/modeling_vlm.py +272 -0

- janus/models/processing_vlm.py +418 -0

- janus/models/projector.py +100 -0

- janus/models/siglip_vit.py +681 -0

- janus/models/vq_model.py +527 -0

- janus/utils/__init__.py +18 -0

- janus/utils/__pycache__/__init__.cpython-38.pyc +0 -0

- janus/utils/__pycache__/conversation.cpython-38.pyc +0 -0

- janus/utils/__pycache__/io.cpython-38.pyc +0 -0

- janus/utils/conversation.py +365 -0

- janus/utils/io.py +89 -0

- requirements.txt +8 -0

- weights/RealESRGAN_x2.pth +3 -0

README.md

DELETED

@@ -1,13 +0,0 @@
----
-title: Janus Pro 7B
-emoji: 🐢
-colorFrom: purple
-colorTo: gray
-sdk: gradio
-sdk_version: 5.13.1
-app_file: app.py
-pinned: false
-license: mit
----
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

Upsample/__init__.py

ADDED

@@ -0,0 +1 @@
+from .model import RealESRGAN

Upsample/__pycache__/__init__.cpython-38.pyc

ADDED

Binary file (213 Bytes).

Upsample/__pycache__/arch_utils.cpython-38.pyc

ADDED

Binary file (7.14 kB).

Upsample/__pycache__/model.cpython-38.pyc

ADDED

Binary file (3.11 kB).

Upsample/__pycache__/rrdbnet_arch.cpython-38.pyc

ADDED

Binary file (4.47 kB).

Upsample/__pycache__/utils.cpython-38.pyc

ADDED

Binary file (4.05 kB).

Upsample/arch_utils.py

ADDED

@@ -0,0 +1,197 @@
+import math
+import torch
+from torch import nn as nn
+from torch.nn import functional as F
+from torch.nn import init as init
+from torch.nn.modules.batchnorm import _BatchNorm
+
+@torch.no_grad()
+def default_init_weights(module_list, scale=1, bias_fill=0, **kwargs):
+    """Initialize network weights.
+
+    Args:
+        module_list (list[nn.Module] | nn.Module): Modules to be initialized.
+        scale (float): Scale initialized weights, especially for residual
+            blocks. Default: 1.
+        bias_fill (float): The value to fill bias. Default: 0
+        kwargs (dict): Other arguments for initialization function.
+    """
+    if not isinstance(module_list, list):
+        module_list = [module_list]
+    for module in module_list:
+        for m in module.modules():
+            if isinstance(m, nn.Conv2d):
+                init.kaiming_normal_(m.weight, **kwargs)
+                m.weight.data *= scale
+                if m.bias is not None:
+                    m.bias.data.fill_(bias_fill)
+            elif isinstance(m, nn.Linear):
+                init.kaiming_normal_(m.weight, **kwargs)
+                m.weight.data *= scale
+                if m.bias is not None:
+                    m.bias.data.fill_(bias_fill)
+            elif isinstance(m, _BatchNorm):
+                init.constant_(m.weight, 1)
+                if m.bias is not None:
+                    m.bias.data.fill_(bias_fill)
+
+
+def make_layer(basic_block, num_basic_block, **kwarg):
+    """Make layers by stacking the same blocks.
+
+    Args:
+        basic_block (nn.Module): nn.Module class for basic block.
+        num_basic_block (int): number of blocks.
+
+    Returns:
+        nn.Sequential: Stacked blocks in nn.Sequential.
+    """
+    layers = []
+    for _ in range(num_basic_block):
+        layers.append(basic_block(**kwarg))
+    return nn.Sequential(*layers)
+
+
+class ResidualBlockNoBN(nn.Module):
+    """Residual block without BN.
+
+    It has a style of:
+        ---Conv-ReLU-Conv-+-
+         |________________|
+
+    Args:
+        num_feat (int): Channel number of intermediate features.
+            Default: 64.
+        res_scale (float): Residual scale. Default: 1.
+        pytorch_init (bool): If set to True, use pytorch default init,
+            otherwise, use default_init_weights. Default: False.
+    """
+
+    def __init__(self, num_feat=64, res_scale=1, pytorch_init=False):
+        super(ResidualBlockNoBN, self).__init__()
+        self.res_scale = res_scale
+        self.conv1 = nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=True)
+        self.conv2 = nn.Conv2d(num_feat, num_feat, 3, 1, 1, bias=True)
+        self.relu = nn.ReLU(inplace=True)
+
+        if not pytorch_init:
+            default_init_weights([self.conv1, self.conv2], 0.1)
+
+    def forward(self, x):
+        identity = x
+        out = self.conv2(self.relu(self.conv1(x)))
+        return identity + out * self.res_scale
+
+
+class Upsample(nn.Sequential):
+    """Upsample module.
+
+    Args:
+        scale (int): Scale factor. Supported scales: 2^n and 3.
+        num_feat (int): Channel number of intermediate features.
+    """
+
+    def __init__(self, scale, num_feat):
+        m = []
+        if (scale & (scale - 1)) == 0:  # scale = 2^n
+            for _ in range(int(math.log(scale, 2))):
+                m.append(nn.Conv2d(num_feat, 4 * num_feat, 3, 1, 1))
+                m.append(nn.PixelShuffle(2))
+        elif scale == 3:
+            m.append(nn.Conv2d(num_feat, 9 * num_feat, 3, 1, 1))
+            m.append(nn.PixelShuffle(3))
+        else:
+            raise ValueError(f'scale {scale} is not supported. ' 'Supported scales: 2^n and 3.')
+        super(Upsample, self).__init__(*m)
+
+
+def flow_warp(x, flow, interp_mode='bilinear', padding_mode='zeros', align_corners=True):
+    """Warp an image or feature map with optical flow.
+
+    Args:
+        x (Tensor): Tensor with size (n, c, h, w).
+        flow (Tensor): Tensor with size (n, h, w, 2), normal value.
+        interp_mode (str): 'nearest' or 'bilinear'. Default: 'bilinear'.
+        padding_mode (str): 'zeros' or 'border' or 'reflection'.
+            Default: 'zeros'.
+        align_corners (bool): Before pytorch 1.3, the default value is
+            align_corners=True. After pytorch 1.3, the default value is
+            align_corners=False. Here, we use True as the default.
+
+    Returns:
+        Tensor: Warped image or feature map.
+    """
+    assert x.size()[-2:] == flow.size()[1:3]
+    _, _, h, w = x.size()
+    # create mesh grid
+    grid_y, grid_x = torch.meshgrid(torch.arange(0, h).type_as(x), torch.arange(0, w).type_as(x))
+    grid = torch.stack((grid_x, grid_y), 2).float()  # W(x), H(y), 2
+    grid.requires_grad = False
+
+    vgrid = grid + flow
+    # scale grid to [-1,1]
+    vgrid_x = 2.0 * vgrid[:, :, :, 0] / max(w - 1, 1) - 1.0
+    vgrid_y = 2.0 * vgrid[:, :, :, 1] / max(h - 1, 1) - 1.0
+    vgrid_scaled = torch.stack((vgrid_x, vgrid_y), dim=3)
+    output = F.grid_sample(x, vgrid_scaled, mode=interp_mode, padding_mode=padding_mode, align_corners=align_corners)
+
+    # TODO, what if align_corners=False
+    return output
+
+
+def resize_flow(flow, size_type, sizes, interp_mode='bilinear', align_corners=False):
+    """Resize a flow according to ratio or shape.
+
+    Args:
+        flow (Tensor): Precomputed flow. shape [N, 2, H, W].
+        size_type (str): 'ratio' or 'shape'.
+        sizes (list[int | float]): the ratio for resizing or the final output
+            shape.
+            1) The order of ratio should be [ratio_h, ratio_w]. For
+            downsampling, the ratio should be smaller than 1.0 (i.e., ratio
+            < 1.0). For upsampling, the ratio should be larger than 1.0 (i.e.,
+            ratio > 1.0).
+            2) The order of output_size should be [out_h, out_w].
+        interp_mode (str): The mode of interpolation for resizing.
+            Default: 'bilinear'.
+        align_corners (bool): Whether to align corners. Default: False.
+
+    Returns:
+        Tensor: Resized flow.
+    """
+    _, _, flow_h, flow_w = flow.size()
+    if size_type == 'ratio':
+        output_h, output_w = int(flow_h * sizes[0]), int(flow_w * sizes[1])
+    elif size_type == 'shape':
+        output_h, output_w = sizes[0], sizes[1]
+    else:
+        raise ValueError(f'Size type should be ratio or shape, but got type {size_type}.')
+
+    input_flow = flow.clone()
+    ratio_h = output_h / flow_h
+    ratio_w = output_w / flow_w
+    input_flow[:, 0, :, :] *= ratio_w
+    input_flow[:, 1, :, :] *= ratio_h
+    resized_flow = F.interpolate(
+        input=input_flow, size=(output_h, output_w), mode=interp_mode, align_corners=align_corners)
+    return resized_flow
+
+
+# TODO: may write a cpp file
+def pixel_unshuffle(x, scale):
+    """ Pixel unshuffle.
+
+    Args:
+        x (Tensor): Input feature with shape (b, c, hh, hw).
+        scale (int): Downsample ratio.
+
+    Returns:
+        Tensor: the pixel unshuffled feature.
+    """
+    b, c, hh, hw = x.size()
+    out_channel = c * (scale**2)
+    assert hh % scale == 0 and hw % scale == 0
+    h = hh // scale
+    w = hw // scale
+    x_view = x.view(b, c, h, scale, w, scale)
+    return x_view.permute(0, 1, 3, 5, 2, 4).reshape(b, out_channel, h, w)
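`pixel_unshuffle` folds each s×s spatial block into the channel axis, so a (b, c, h·s, w·s) tensor becomes (b, c·s², h, w). It is the inverse of the `nn.PixelShuffle` rearrangement that `Upsample` uses, and `RRDBNet` relies on it for its scale-2 and scale-1 variants. That is why the network multiplies `num_in_ch` by 4 and by 16 in those two cases.

The sketch below ports the committed view/permute/reshape sequence to NumPy and adds an inverse, so the index mapping can be checked without PyTorch. It is a minimal sketch: the `_np` function names are hypothetical, and `np.transpose` stands in for `Tensor.permute`.

```python
import numpy as np


def pixel_unshuffle_np(x: np.ndarray, scale: int) -> np.ndarray:
    """NumPy port of the committed pixel_unshuffle: (b, c, h*s, w*s) -> (b, c*s*s, h, w)."""
    b, c, hh, hw = x.shape
    assert hh % scale == 0 and hw % scale == 0
    h, w = hh // scale, hw // scale
    # Split each spatial axis into (block index, offset inside the s x s block).
    x_view = x.reshape(b, c, h, scale, w, scale)
    # Move both offsets next to the channel axis, then fold them into channels:
    # out[:, c*s*s + i*s + j, y, x] == in[:, c, y*s + i, x*s + j]
    return x_view.transpose(0, 1, 3, 5, 2, 4).reshape(b, c * scale * scale, h, w)


def pixel_shuffle_np(x: np.ndarray, scale: int) -> np.ndarray:
    """Inverse rearrangement (the nn.PixelShuffle layout): (b, c*s*s, h, w) -> (b, c, h*s, w*s)."""
    b, cs2, h, w = x.shape
    assert cs2 % (scale * scale) == 0
    c = cs2 // (scale * scale)
    x_view = x.reshape(b, c, scale, scale, h, w)
    return x_view.transpose(0, 1, 4, 2, 5, 3).reshape(b, c, h * scale, w * scale)


x = np.arange(2 * 3 * 4 * 6).reshape(2, 3, 4, 6)
y = pixel_unshuffle_np(x, 2)
print(y.shape)  # (2, 12, 2, 3)
```

Recent PyTorch releases also provide `torch.nn.functional.pixel_unshuffle` (and `nn.PixelUnshuffle`). These are intended to perform the same rearrangement as the hand-written helper.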

Upsample/model.py

ADDED

@@ -0,0 +1,93 @@
+import os
+import torch
+from torch.nn import functional as F
+from PIL import Image
+import numpy as np
+import cv2
+from huggingface_hub import hf_hub_url, hf_hub_download
+
+from .rrdbnet_arch import RRDBNet
+from .utils import pad_reflect, split_image_into_overlapping_patches, stich_together, \
+                   unpad_image
+
+HF_MODELS = {
+    2: dict(
+        repo_id='sberbank-ai/Real-ESRGAN',
+        filename='RealESRGAN_x2.pth',
+    ),
+    4: dict(
+        repo_id='sberbank-ai/Real-ESRGAN',
+        filename='RealESRGAN_x4.pth',
+    ),
+    8: dict(
+        repo_id='sberbank-ai/Real-ESRGAN',
+        filename='RealESRGAN_x8.pth',
+    ),
+}
+
+
+class RealESRGAN:
+    def __init__(self, device, scale=4):
+        self.device = device
+        self.scale = scale
+        self.model = RRDBNet(
+            num_in_ch=3, num_out_ch=3, num_feat=64,
+            num_block=23, num_grow_ch=32, scale=scale
+        )
+
+    def load_weights(self, model_path, download=True):
+        if not os.path.exists(model_path) and download:
+            assert self.scale in [2, 4, 8], 'You can download models only with scales: 2, 4, 8'
+            config = HF_MODELS[self.scale]
+            cache_dir = os.path.dirname(model_path)
+            local_filename = os.path.basename(model_path)
+            config_file_url = hf_hub_url(repo_id=config['repo_id'], filename=config['filename'])
+            htr = hf_hub_download(repo_id=config['repo_id'], cache_dir=cache_dir, local_dir=cache_dir,
+                                  filename=config['filename'])
+            print(htr)
+            # cached_download(config_file_url, cache_dir=cache_dir, force_filename=local_filename)
+            print('Weights downloaded to:', os.path.join(cache_dir, local_filename))
+
+        loadnet = torch.load(model_path)
+        if 'params' in loadnet:
+            self.model.load_state_dict(loadnet['params'], strict=True)
+        elif 'params_ema' in loadnet:
+            self.model.load_state_dict(loadnet['params_ema'], strict=True)
+        else:
+            self.model.load_state_dict(loadnet, strict=True)
+        self.model.eval()
+        self.model.to(self.device)
+
+    # @torch.cuda.amp.autocast()
+    def predict(self, lr_image, batch_size=4, patches_size=192,
+                padding=24, pad_size=15):
+        torch.autocast(device_type=self.device.type)
+        scale = self.scale
+        device = self.device
+        lr_image = np.array(lr_image)
+        lr_image = pad_reflect(lr_image, pad_size)
+
+        patches, p_shape = split_image_into_overlapping_patches(
+            lr_image, patch_size=patches_size, padding_size=padding
+        )
+        img = torch.FloatTensor(patches / 255).permute((0, 3, 1, 2)).to(device).detach()
+
+        with torch.no_grad():
+            res = self.model(img[0:batch_size])
+            for i in range(batch_size, img.shape[0], batch_size):
+                res = torch.cat((res, self.model(img[i:i + batch_size])), 0)
+
+        sr_image = res.permute((0, 2, 3, 1)).cpu().clamp_(0, 1)
+        np_sr_image = sr_image.numpy()
+
+        padded_size_scaled = tuple(np.multiply(p_shape[0:2], scale)) + (3,)
+        scaled_image_shape = tuple(np.multiply(lr_image.shape[0:2], scale)) + (3,)
+        np_sr_image = stich_together(
+            np_sr_image, padded_image_shape=padded_size_scaled,
+            target_shape=scaled_image_shape, padding_size=padding * scale
+        )
+        sr_img = (np_sr_image * 255).astype(np.uint8)
+        sr_img = unpad_image(sr_img, pad_size * scale)
+        sr_img = Image.fromarray(sr_img)
+
+        return sr_img
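In `predict`, the patches go through the network `batch_size` at a time. After every batch, `res` is grown with `torch.cat`, which copies everything accumulated so far. A common alternative is to collect the per-batch outputs in a list and concatenate once at the end.

The sketch below shows that pattern with NumPy so it runs without a GPU or model weights. It rests on two assumptions: `run_in_batches` and `toy_model` are hypothetical names, and `toy_model` is a nearest-neighbour stand-in, not RRDBNet. With PyTorch tensors, the equivalent final step would be `torch.cat(outputs, 0)` inside the existing `torch.no_grad()` block.

```python
from typing import Callable

import numpy as np


def run_in_batches(model: Callable[[np.ndarray], np.ndarray],
                   patches: np.ndarray, batch_size: int = 4) -> np.ndarray:
    """Apply `model` to `patches` in chunks of `batch_size` along axis 0."""
    if patches.shape[0] == 0:
        raise ValueError("no patches to process")
    outputs = []
    for start in range(0, patches.shape[0], batch_size):
        # The final chunk may be shorter than batch_size; slicing handles that.
        outputs.append(model(patches[start:start + batch_size]))
    # Concatenate once, instead of re-concatenating the growing result every iteration.
    return np.concatenate(outputs, axis=0)


def toy_model(batch: np.ndarray) -> np.ndarray:
    # Hypothetical stand-in for the network: nearest-neighbour 2x upscale of (n, c, h, w) patches.
    return batch.repeat(2, axis=2).repeat(2, axis=3)


patches = np.random.default_rng(0).random((10, 3, 8, 8), dtype=np.float32)
out = run_in_batches(toy_model, patches, batch_size=4)
print(out.shape)  # (10, 3, 16, 16)
```

There is also a detail in `predict` itself. On its own line, `torch.autocast(device_type=self.device.type)` only constructs the autocast context manager and never enters it, so as committed it has no effect. Mixed precision applies only to code run inside a `with torch.autocast(device_type=...):` block. In addition, `self.device.type` assumes `device` is a `torch.device` rather than a string.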

Upsample/rrdbnet_arch.py

ADDED

@@ -0,0 +1,121 @@
+import torch
+from torch import nn as nn
+from torch.nn import functional as F
+
+from .arch_utils import default_init_weights, make_layer, pixel_unshuffle
+
+
+class ResidualDenseBlock(nn.Module):
+    """Residual Dense Block.
+
+    Used in RRDB block in ESRGAN.
+
+    Args:
+        num_feat (int): Channel number of intermediate features.
+        num_grow_ch (int): Channels for each growth.
+    """
+
+    def __init__(self, num_feat=64, num_grow_ch=32):
+        super(ResidualDenseBlock, self).__init__()
+        self.conv1 = nn.Conv2d(num_feat, num_grow_ch, 3, 1, 1)
+        self.conv2 = nn.Conv2d(num_feat + num_grow_ch, num_grow_ch, 3, 1, 1)
+        self.conv3 = nn.Conv2d(num_feat + 2 * num_grow_ch, num_grow_ch, 3, 1, 1)
+        self.conv4 = nn.Conv2d(num_feat + 3 * num_grow_ch, num_grow_ch, 3, 1, 1)
+        self.conv5 = nn.Conv2d(num_feat + 4 * num_grow_ch, num_feat, 3, 1, 1)
+
+        self.lrelu = nn.LeakyReLU(negative_slope=0.2, inplace=True)
+
+        # initialization
+        default_init_weights([self.conv1, self.conv2, self.conv3, self.conv4, self.conv5], 0.1)
+
+    def forward(self, x):
+        x1 = self.lrelu(self.conv1(x))
+        x2 = self.lrelu(self.conv2(torch.cat((x, x1), 1)))
+        x3 = self.lrelu(self.conv3(torch.cat((x, x1, x2), 1)))
+        x4 = self.lrelu(self.conv4(torch.cat((x, x1, x2, x3), 1)))
+        x5 = self.conv5(torch.cat((x, x1, x2, x3, x4), 1))
+        # Empirically, we use 0.2 to scale the residual for better performance
+        return x5 * 0.2 + x
+
+
+class RRDB(nn.Module):
+    """Residual in Residual Dense Block.
+
+    Used in RRDB-Net in ESRGAN.
+
+    Args:
+        num_feat (int): Channel number of intermediate features.
+        num_grow_ch (int): Channels for each growth.
+    """
+
+    def __init__(self, num_feat, num_grow_ch=32):
+        super(RRDB, self).__init__()
+        self.rdb1 = ResidualDenseBlock(num_feat, num_grow_ch)
+        self.rdb2 = ResidualDenseBlock(num_feat, num_grow_ch)
+        self.rdb3 = ResidualDenseBlock(num_feat, num_grow_ch)
+
+    def forward(self, x):
+        out = self.rdb1(x)
+        out = self.rdb2(out)
+        out = self.rdb3(out)
+        # Empirically, we use 0.2 to scale the residual for better performance
+        return out * 0.2 + x
+
+
+class RRDBNet(nn.Module):
+    """Networks consisting of Residual in Residual Dense Block, which is used
+    in ESRGAN.
+
+    ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks.
+
+    We extend ESRGAN for scale x2 and scale x1.
+    Note: This is one option for scale 1, scale 2 in RRDBNet.
+    We first employ the pixel-unshuffle (an inverse operation of pixelshuffle) to reduce the spatial size
+    and enlarge the channel size before feeding inputs into the main ESRGAN architecture.
+
+    Args:
+        num_in_ch (int): Channel number of inputs.
+        num_out_ch (int): Channel number of outputs.
+        num_feat (int): Channel number of intermediate features.
+            Default: 64
+        num_block (int): Block number in the trunk network. Default: 23
+        num_grow_ch (int): Channels for each growth. Default: 32.
+    """
+
+    def __init__(self, num_in_ch, num_out_ch, scale=4, num_feat=64, num_block=23, num_grow_ch=32):
+        super(RRDBNet, self).__init__()
+        self.scale = scale
+        if scale == 2:
+            num_in_ch = num_in_ch * 4
+        elif scale == 1:
+            num_in_ch = num_in_ch * 16
+        self.conv_first = nn.Conv2d(num_in_ch, num_feat, 3, 1, 1)
+        self.body = make_layer(RRDB, num_block, num_feat=num_feat, num_grow_ch=num_grow_ch)
+        self.conv_body = nn.Conv2d(num_feat, num_feat, 3, 1, 1)
+        # upsample
+        self.conv_up1 = nn.Conv2d(num_feat, num_feat, 3, 1, 1)
+        self.conv_up2 = nn.Conv2d(num_feat, num_feat, 3, 1, 1)
+        if scale == 8:
+            self.conv_up3 = nn.Conv2d(num_feat, num_feat, 3, 1, 1)
+        self.conv_hr = nn.Conv2d(num_feat, num_feat, 3, 1, 1)
+        self.conv_last = nn.Conv2d(num_feat, num_out_ch, 3, 1, 1)
+
+        self.lrelu = nn.LeakyReLU(negative_slope=0.2, inplace=True)
+
+    def forward(self, x):
+        if self.scale == 2:
+            feat = pixel_unshuffle(x, scale=2)
+        elif self.scale == 1:
+            feat = pixel_unshuffle(x, scale=4)
+        else:
+            feat = x
+        feat = self.conv_first(feat)
+        body_feat = self.conv_body(self.body(feat))
+        feat = feat + body_feat
+        # upsample
+        feat = self.lrelu(self.conv_up1(F.interpolate(feat, scale_factor=2, mode='nearest')))
+        feat = self.lrelu(self.conv_up2(F.interpolate(feat, scale_factor=2, mode='nearest')))
+        if self.scale == 8:
+            feat = self.lrelu(self.conv_up3(F.interpolate(feat, scale_factor=2, mode='nearest')))
+        out = self.conv_last(self.lrelu(self.conv_hr(feat)))
+        return out
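The dense connections in `ResidualDenseBlock` can be checked by counting channels:

- `conv1` to `conv4` each read `num_feat + k * num_grow_ch` channels and emit `num_grow_ch`.
- `conv5` maps all `num_feat + 4 * num_grow_ch` concatenated channels back to `num_feat`, so the 0.2-scaled residual can be added.

The pure-Python sketch below turns that bookkeeping into a parameter count for `RRDBNet` at each supported scale. The helper names are hypothetical. The count covers only the convolutions defined in the file above, each with its default bias; LeakyReLU has no parameters.

```python
def conv3x3_params(c_in: int, c_out: int) -> int:
    """Weights + bias of nn.Conv2d(c_in, c_out, 3, 1, 1); bias=True is the PyTorch default."""
    return c_in * c_out * 3 * 3 + c_out


def rdb_params(num_feat: int = 64, num_grow_ch: int = 32) -> int:
    """ResidualDenseBlock: conv(k+1) sees num_feat + k*num_grow_ch channels (dense concatenation)."""
    growth = sum(conv3x3_params(num_feat + k * num_grow_ch, num_grow_ch) for k in range(4))  # conv1..conv4
    return growth + conv3x3_params(num_feat + 4 * num_grow_ch, num_feat)  # conv5 back to num_feat


def rrdbnet_params(num_in_ch: int = 3, num_out_ch: int = 3, scale: int = 4,
                   num_feat: int = 64, num_block: int = 23, num_grow_ch: int = 32) -> int:
    # Scale 2 / scale 1 pixel-unshuffle the input by 2 / 4, multiplying its channels by 4 / 16.
    in_ch = num_in_ch * {2: 4, 1: 16}.get(scale, 1)
    total = conv3x3_params(in_ch, num_feat)                      # conv_first
    total += num_block * 3 * rdb_params(num_feat, num_grow_ch)  # body: each RRDB holds 3 RDBs
    n_up = 3 if scale == 8 else 2                                # conv_up1, conv_up2 (+ conv_up3)
    total += (n_up + 2) * conv3x3_params(num_feat, num_feat)     # plus conv_body and conv_hr
    total += conv3x3_params(num_feat, num_out_ch)                # conv_last
    return total


for s in (1, 2, 4, 8):
    print(s, rrdbnet_params(scale=s))
# 1 16723907
# 2 16703171
# 4 16697987
# 8 16734915
```

For an instantiated model, `sum(p.numel() for p in model.parameters())` should give the same figure.

The output resolution also works out to `scale` in every case:

- Scale 2 and scale 1 first shrink the input by 2 or 4 with `pixel_unshuffle`; the two nearest-neighbour ×2 stages then bring the total to ×2 or ×1.
- Scale 4 uses the two ×2 stages directly.
- Scale 8 adds a third ×2 stage.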

Upsample/utils.py

ADDED

|

@@ -0,0 +1,135 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import numpy as np

|

| 2 |

+

import torch

|

| 3 |

+

from PIL import Image

|

| 4 |

+

import os

|

| 5 |

+

import io

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

def pad_reflect(image, pad_size):

|

| 9 |

+

imsize = image.shape

|

| 10 |

+

height, width = imsize[:2]

|

| 11 |

+

new_img = np.zeros([height + pad_size * 2, width + pad_size * 2, imsize[2]]).astype(np.uint8)

|

| 12 |

+

new_img[pad_size:-pad_size, pad_size:-pad_size, :] = image

|

| 13 |

+

|

| 14 |

+

new_img[0:pad_size, pad_size:-pad_size, :] = np.flip(image[0:pad_size, :, :], axis=0) # top

|

| 15 |

+

new_img[-pad_size:, pad_size:-pad_size, :] = np.flip(image[-pad_size:, :, :], axis=0) # bottom

|

| 16 |

+

new_img[:, 0:pad_size, :] = np.flip(new_img[:, pad_size:pad_size * 2, :], axis=1) # left

|

| 17 |

+

new_img[:, -pad_size:, :] = np.flip(new_img[:, -pad_size * 2:-pad_size, :], axis=1) # right

|

| 18 |

+

|

| 19 |

+

return new_img

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def unpad_image(image, pad_size):

|

| 23 |

+

return image[pad_size:-pad_size, pad_size:-pad_size, :]

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def process_array(image_array, expand=True):

|

| 27 |

+

""" Process a 3-dimensional array into a scaled, 4 dimensional batch of size 1. """

|

| 28 |

+

|

| 29 |

+

image_batch = image_array / 255.0

|

| 30 |

+

if expand:

|

| 31 |

+

image_batch = np.expand_dims(image_batch, axis=0)

|

| 32 |

+

return image_batch

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

def process_output(output_tensor):

|

| 36 |

+

""" Transforms the 4-dimensional output tensor into a suitable image format. """

|

| 37 |

+

|

| 38 |

+

sr_img = output_tensor.clip(0, 1) * 255

|

| 39 |

+

sr_img = np.uint8(sr_img)

|

| 40 |

+

return sr_img

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

def pad_patch(image_patch, padding_size, channel_last=True):

|

| 44 |

+

""" Pads image_patch with with padding_size edge values. """

|

| 45 |

+

|

| 46 |

+

if channel_last:

|

| 47 |

+

return np.pad(

|

| 48 |

+

image_patch,

|

| 49 |

+

((padding_size, padding_size), (padding_size, padding_size), (0, 0)),

|

| 50 |

+

'edge',

|

| 51 |

+

)

|

| 52 |

+

else:

|

| 53 |

+

return np.pad(

|

| 54 |

+

image_patch,

|

| 55 |

+

((0, 0), (padding_size, padding_size), (padding_size, padding_size)),

|

| 56 |

+

'edge',

|

| 57 |

+

)

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

def unpad_patches(image_patches, padding_size):

|

| 61 |

+

return image_patches[:, padding_size:-padding_size, padding_size:-padding_size, :]

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

def split_image_into_overlapping_patches(image_array, patch_size, padding_size=2):

|

| 65 |

+

""" Splits the image into partially overlapping patches.

|

| 66 |

+

The patches overlap by padding_size pixels.

|

| 67 |

+

Pads the image twice:

|

| 68 |

+

- first to have a size multiple of the patch size,

|

| 69 |

+

- then to have equal padding at the borders.

|

| 70 |

+

Args:

|

| 71 |

+

image_array: numpy array of the input image.

|

| 72 |

+

patch_size: size of the patches from the original image (without padding).

|

| 73 |

+

padding_size: size of the overlapping area.

|

| 74 |

+

"""

|

| 75 |

+

|

| 76 |

+

xmax, ymax, _ = image_array.shape

|

| 77 |

+

x_remainder = xmax % patch_size

|

| 78 |

+

y_remainder = ymax % patch_size

|

| 79 |

+

|

| 80 |

+

# modulo here is to avoid extending of patch_size instead of 0

|

| 81 |

+

x_extend = (patch_size - x_remainder) % patch_size

|

| 82 |

+

y_extend = (patch_size - y_remainder) % patch_size

|

| 83 |

+

|

| 84 |

+

# make sure the image is divisible into regular patches

|

| 85 |

+

extended_image = np.pad(image_array, ((0, x_extend), (0, y_extend), (0, 0)), 'edge')

|

| 86 |

+

|

| 87 |

+

# add padding around the image to simplify computations

|

| 88 |

+

padded_image = pad_patch(extended_image, padding_size, channel_last=True)

|

| 89 |

+

|

| 90 |

+

xmax, ymax, _ = padded_image.shape

|

| 91 |

+

patches = []

|

| 92 |

+

|

| 93 |

+

x_lefts = range(padding_size, xmax - padding_size, patch_size)

|

| 94 |

+

y_tops = range(padding_size, ymax - padding_size, patch_size)

|

| 95 |

+

|

| 96 |

+

for x in x_lefts:

|

| 97 |

+

for y in y_tops:

|

| 98 |

+

x_left = x - padding_size

|

| 99 |

+

y_top = y - padding_size

|

| 100 |

+

x_right = x + patch_size + padding_size

|

| 101 |

+

y_bottom = y + patch_size + padding_size

|

| 102 |

+

patch = padded_image[x_left:x_right, y_top:y_bottom, :]

|

| 103 |

+

patches.append(patch)

|

| 104 |

+

|

| 105 |

+

return np.array(patches), padded_image.shape


def stich_together(patches, padded_image_shape, target_shape, padding_size=4):
    """ Reconstruct the image from overlapping patches.
    After scaling, shapes and padding should be scaled too.

    Args:
        patches: patches obtained with split_image_into_overlapping_patches
        padded_image_shape: shape of the padded image constructed in split_image_into_overlapping_patches
        target_shape: shape of the final image
        padding_size: size of the overlapping area.
    """

    xmax, ymax, _ = padded_image_shape
    patches = unpad_patches(patches, padding_size)
    patch_size = patches.shape[1]
    n_patches_per_row = ymax // patch_size

    complete_image = np.zeros((xmax, ymax, 3))

    row = -1
    col = 0
    for i in range(len(patches)):
        if i % n_patches_per_row == 0:
            row += 1
            col = 0
        complete_image[
            row * patch_size: (row + 1) * patch_size, col * patch_size: (col + 1) * patch_size, :
        ] = patches[i]
        col += 1
    return complete_image[0: target_shape[0], 0: target_shape[1], :]
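To check that stitching inverts splitting, the sketch below reimplements both steps in NumPy and replaces the super-resolution network with a nearest-neighbour x2 upscale (`ndarray.repeat`). It assumes `pad_patch` replicates edges by `padding_size` on every border and that `unpad_patches` crops `padding_size` pixels from every side of each patch; `split` and `stitch` are hypothetical names. As the docstring requires, the padded shape, target shape and padding are all multiplied by the scale before stitching.

```python
import numpy as np


def split(image, patch_size, padding_size):
    """Hypothetical compact version of the split step."""
    h, w, _ = image.shape
    # Extend bottom/right edges so both sides are multiples of patch_size
    h_ext = (patch_size - h % patch_size) % patch_size
    w_ext = (patch_size - w % patch_size) % patch_size
    extended = np.pad(image, ((0, h_ext), (0, w_ext), (0, 0)), "edge")
    # Assumed behaviour of pad_patch: replicate edges by padding_size on every border
    p = padding_size
    padded = np.pad(extended, ((p, p), (p, p), (0, 0)), "edge")
    H, W, _ = padded.shape
    tiles = [padded[x - p:x + patch_size + p, y - p:y + patch_size + p]
             for x in range(p, H - p, patch_size)
             for y in range(p, W - p, patch_size)]
    return np.array(tiles), padded.shape


def stitch(patches, padded_shape, target_shape, padding_size):
    """Hypothetical compact version of stich_together (requires padding_size > 0)."""
    p = padding_size
    # Assumed behaviour of unpad_patches: crop p pixels from every side of each tile
    cores = patches[:, p:-p, p:-p, :]
    size = cores.shape[1]
    H, W, _ = padded_shape
    per_row = W // size
    out = np.zeros((H, W, patches.shape[-1]))
    for i, core in enumerate(cores):
        row, col = divmod(i, per_row)
        out[row * size:(row + 1) * size, col * size:(col + 1) * size] = core
    return out[:target_shape[0], :target_shape[1]]


scale, patch_size, pad = 2, 16, 2
img = np.random.default_rng(0).random((40, 50, 3))
tiles, padded_shape = split(img, patch_size, pad)

# Stand-in for the SR network: nearest-neighbour x2 applied tile by tile
up_tiles = tiles.repeat(scale, axis=1).repeat(scale, axis=2)

# Shapes and padding are scaled before stitching
result = stitch(up_tiles,
                (padded_shape[0] * scale, padded_shape[1] * scale, 3),
                (img.shape[0] * scale, img.shape[1] * scale),
                pad * scale)
expected = img.repeat(scale, axis=0).repeat(scale, axis=1)
print(result.shape, np.allclose(result, expected))  # (80, 100, 3) True
```

Because nearest-neighbour upscaling is exact, the stitched result equals upscaling the whole image at once. With a real network the overlapping margin is presumably there to keep tile borders from showing seams, since each kept core was predicted with context on all sides.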

app.py

ADDED


@@ -0,0 +1,268 @@

import gradio as gr
import torch
from transformers import AutoConfig, AutoModelForCausalLM
from janus.models import MultiModalityCausalLM, VLChatProcessor
from janus.utils.io import load_pil_images
from PIL import Image

import numpy as np
import os
import time
from Upsample import RealESRGAN
import spaces  # Import spaces for ZeroGPU compatibility


# Load model and processor
model_path = "deepseek-ai/Janus-Pro-7B"
config = AutoConfig.from_pretrained(model_path)
language_config = config.language_config
language_config._attn_implementation = 'eager'
vl_gpt = AutoModelForCausalLM.from_pretrained(model_path,
                                              language_config=language_config,
                                              trust_remote_code=True)
if torch.cuda.is_available():
    vl_gpt = vl_gpt.to(torch.bfloat16).cuda()
else:
    vl_gpt = vl_gpt.to(torch.float16)

vl_chat_processor = VLChatProcessor.from_pretrained(model_path)
tokenizer = vl_chat_processor.tokenizer
cuda_device = 'cuda' if torch.cuda.is_available() else 'cpu'

# SR model (RealESRGAN, x2 upscaling, weights loaded from the local weights/ folder)
sr_model = RealESRGAN(torch.device(cuda_device), scale=2)
sr_model.load_weights('weights/RealESRGAN_x2.pth', download=False)


# Multimodal Understanding function
@torch.inference_mode()
@spaces.GPU(duration=120)
def multimodal_understanding(image, question, seed, top_p, temperature):
    # Clear CUDA cache before generating
    torch.cuda.empty_cache()

    # Set seeds for reproducible sampling
    torch.manual_seed(seed)
    np.random.seed(seed)
    torch.cuda.manual_seed(seed)

    conversation = [
        {
            "role": "<|User|>",
            "content": f"<image_placeholder>\n{question}",
            "images": [image],
        },
        {"role": "<|Assistant|>", "content": ""},
    ]

    pil_images = [Image.fromarray(image)]
    prepare_inputs = vl_chat_processor(
        conversations=conversation, images=pil_images, force_batchify=True
    ).to(cuda_device, dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float16)

    inputs_embeds = vl_gpt.prepare_inputs_embeds(**prepare_inputs)

    outputs = vl_gpt.language_model.generate(
        inputs_embeds=inputs_embeds,
        attention_mask=prepare_inputs.attention_mask,
        pad_token_id=tokenizer.eos_token_id,
        bos_token_id=tokenizer.bos_token_id,
        eos_token_id=tokenizer.eos_token_id,
        max_new_tokens=512,
        do_sample=temperature > 0,  # a temperature of 0 means greedy decoding
        use_cache=True,
        temperature=temperature,
        top_p=top_p,
    )

    answer = tokenizer.decode(outputs[0].cpu().tolist(), skip_special_tokens=True)
    return answer
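`multimodal_understanding` treats a temperature of 0 as greedy decoding but still forwards `temperature` and `top_p` to `generate`, which recent transformers versions may flag with a warning when `do_sample=False`. A minimal sketch of a hypothetical helper that only passes the sampling parameters when sampling is actually enabled:

```python
def sampling_kwargs(temperature: float, top_p: float) -> dict:
    """Hypothetical helper: map the UI sliders to generate() keyword arguments."""
    if temperature <= 0:
        # Greedy decoding: temperature and top_p would be ignored, so leave them out
        return {"do_sample": False}
    return {"do_sample": True, "temperature": temperature, "top_p": top_p}


print(sampling_kwargs(0, 0.95))
print(sampling_kwargs(0.1, 0.95))
```

It would be spliced into the existing call as `vl_gpt.language_model.generate(..., **sampling_kwargs(temperature, top_p))`, replacing the three separate `do_sample`, `temperature` and `top_p` arguments.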


def generate(input_ids,
             width,
             height,
             temperature: float = 1,
             parallel_size: int = 5,
             cfg_weight: float = 5,
             image_token_num_per_image: int = 576,
             patch_size: int = 16):
    # Clear CUDA cache before generating
    torch.cuda.empty_cache()

    # Interleave conditional rows (even: full prompt) and unconditional rows
    # (odd: prompt replaced by pad_id except for the first and last token)
    tokens = torch.zeros((parallel_size * 2, len(input_ids)), dtype=torch.int).to(cuda_device)
    for i in range(parallel_size * 2):
        tokens[i, :] = input_ids
        if i % 2 != 0:
            tokens[i, 1:-1] = vl_chat_processor.pad_id
    inputs_embeds = vl_gpt.language_model.get_input_embeddings()(tokens)
    generated_tokens = torch.zeros((parallel_size, image_token_num_per_image), dtype=torch.int).to(cuda_device)

    pkv = None
    for i in range(image_token_num_per_image):
        with torch.no_grad():
            outputs = vl_gpt.language_model.model(inputs_embeds=inputs_embeds,
                                                  use_cache=True,
                                                  past_key_values=pkv)
            pkv = outputs.past_key_values
            hidden_states = outputs.last_hidden_state
            logits = vl_gpt.gen_head(hidden_states[:, -1, :])
            # Classifier-free guidance: move away from the unconditional logits
            logit_cond = logits[0::2, :]
            logit_uncond = logits[1::2, :]
            logits = logit_uncond + cfg_weight * (logit_cond - logit_uncond)
            probs = torch.softmax(logits / temperature, dim=-1)  # requires temperature > 0
            next_token = torch.multinomial(probs, num_samples=1)
            generated_tokens[:, i] = next_token.squeeze(dim=-1)
            # Feed each sampled token to both its conditional and unconditional row
            next_token = torch.cat([next_token.unsqueeze(dim=1), next_token.unsqueeze(dim=1)], dim=1).view(-1)

            img_embeds = vl_gpt.prepare_gen_img_embeds(next_token)
            inputs_embeds = img_embeds.unsqueeze(dim=1)

    # Decode image tokens to pixels; width == height here, so the order of the
    # last two shape entries does not matter
    patches = vl_gpt.gen_vision_model.decode_code(generated_tokens.to(dtype=torch.int),
                                                  shape=[parallel_size, 8, width // patch_size, height // patch_size])

    return generated_tokens.to(dtype=torch.int), patches
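The loop in `generate` implements classifier-free guidance on an interleaved batch. The NumPy stand-in below (hypothetical function names, with `rng.choice` in place of `torch.multinomial`) isolates its three pieces: building the conditional/unconditional rows, combining logits as uncond + w * (cond - uncond), and duplicating each sampled token so both rows of a pair receive it at the next step.

```python
import numpy as np


def build_cfg_batch(input_ids, parallel_size, pad_id):
    """Even rows: full prompt (conditional). Odd rows: prompt body replaced by pad_id."""
    tokens = np.tile(np.asarray(input_ids), (parallel_size * 2, 1))
    # Keep the first and last token of the unconditional rows, as generate() does
    tokens[1::2, 1:-1] = pad_id
    return tokens


def guide_logits(logits, cfg_weight):
    """Classifier-free guidance: uncond + w * (cond - uncond)."""
    cond, uncond = logits[0::2], logits[1::2]
    return uncond + cfg_weight * (cond - uncond)


def sample_tokens(logits, temperature, rng):
    """Temperature softmax followed by one categorical draw per row."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    probs = np.exp(z)
    probs /= probs.sum(axis=-1, keepdims=True)
    return np.array([rng.choice(probs.shape[-1], p=row) for row in probs])


def duplicate_for_cfg(next_tokens):
    """Repeat each sampled token so its conditional and unconditional rows both get it."""
    return np.repeat(next_tokens, 2)


rng = np.random.default_rng(0)
tokens = build_cfg_batch([1, 5, 6, 7, 2], parallel_size=2, pad_id=0)
logits = np.zeros((4, 10))
logits[0::2, 3] = 1.0  # conditional rows slightly prefer token 3
guided = guide_logits(logits, cfg_weight=5.0)  # guidance amplifies that preference to 5.0
next_tokens = sample_tokens(guided, temperature=1.0, rng=rng)
print(tokens)
print(guided[:, 3], next_tokens, duplicate_for_cfg(next_tokens))
```

With `cfg_weight=1` the guided logits reduce to the conditional ones; larger weights sharpen whatever the prompt changes relative to the padded prompt.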

def unpack(dec, width, height, parallel_size=5):
    # Decoder output: NCHW floats roughly in [-1, 1] -> NHWC values in [0, 255]
    dec = dec.to(torch.float32).cpu().numpy().transpose(0, 2, 3, 1)
    dec = np.clip((dec + 1) / 2 * 255, 0, 255)

    visual_img = np.zeros((parallel_size, width, height, 3), dtype=np.uint8)
    visual_img[:, :, :] = dec

    return visual_img
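`unpack` maps the decoder output from roughly [-1, 1] to 8-bit pixels. A NumPy-only sketch of the same conversion (hypothetical name `to_uint8_images`, taking an array instead of a torch tensor) makes the rounding visible: storing floats into a `uint8` array truncates toward zero, so a value of 0.0 becomes 127 rather than 128.

```python
import numpy as np


def to_uint8_images(dec):
    """Hypothetical NumPy version of unpack(): (N, C, H, W) floats -> (N, H, W, C) uint8."""
    x = np.asarray(dec, dtype=np.float32).transpose(0, 2, 3, 1)  # NCHW -> NHWC
    x = np.clip((x + 1) / 2 * 255, 0, 255)  # [-1, 1] -> [0, 255]
    return x.astype(np.uint8)  # truncates toward zero, like assigning into a uint8 buffer


dec = np.zeros((1, 3, 2, 2), dtype=np.float32)
dec[0, :, 0, 0] = -1.0  # black
dec[0, :, 0, 1] = 1.0   # white
dec[0, :, 1, 0] = 2.0   # out of range, clipped to 255
out = to_uint8_images(dec)
print(out.shape, out[0, :, :, 0].tolist())  # (1, 2, 2, 3) [[0, 255], [255, 127]]
```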


@torch.inference_mode()
@spaces.GPU(duration=120)  # Specify a duration to avoid timeout
def generate_image(prompt,
                   seed=None,
                   guidance=5,
                   t2i_temperature=1.0):
    # Clear CUDA cache (gradients are already disabled by @torch.inference_mode())
    torch.cuda.empty_cache()
    # Set the seed for reproducible results
    if seed is not None:
        torch.manual_seed(seed)
        torch.cuda.manual_seed(seed)
        np.random.seed(seed)
    width = 384
    height = 384
    parallel_size = 5

    with torch.no_grad():
        messages = [{'role': '<|User|>', 'content': prompt},
                    {'role': '<|Assistant|>', 'content': ''}]
        text = vl_chat_processor.apply_sft_template_for_multi_turn_prompts(conversations=messages,
                                                                           sft_format=vl_chat_processor.sft_format,
                                                                           system_prompt='')
        text = text + vl_chat_processor.image_start_tag

        input_ids = torch.LongTensor(tokenizer.encode(text))
        output, patches = generate(input_ids,
                                   width // 16 * 16,
                                   height // 16 * 16,
                                   cfg_weight=guidance,
                                   parallel_size=parallel_size,
                                   temperature=t2i_temperature)
        images = unpack(patches,
                        width // 16 * 16,
                        height // 16 * 16,
                        parallel_size=parallel_size)

        # return [Image.fromarray(images[i]).resize((768, 768), Image.LANCZOS) for i in range(parallel_size)]
        stime = time.time()
        ret_images = [image_upsample(Image.fromarray(images[i])) for i in range(parallel_size)]
        print(f'upsample time: {time.time() - stime}')
        return ret_images
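The default `image_token_num_per_image=576` in `generate` follows from the 384 x 384 output and the 16-pixel patch size: 384 / 16 = 24 tokens per side, and 24 * 24 = 576. A small hypothetical helper makes that arithmetic explicit:

```python
def image_token_grid(width, height, patch_size=16):
    """Hypothetical helper: (rows, cols) of image tokens for a given output size."""
    # generate_image() first rounds each side down to a multiple of patch_size
    w = width // patch_size * patch_size
    h = height // patch_size * patch_size
    return h // patch_size, w // patch_size


rows, cols = image_token_grid(384, 384)
print(rows, cols, rows * cols)  # 24 24 576
```

Any other size would also need `image_token_num_per_image` set to `rows * cols`, since the default of 576 only matches 384 x 384. After the RealESRGAN pass each returned image should be 768 x 768, assuming `sr_model.predict` upscales by its configured factor of 2.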


@spaces.GPU(duration=60)
def image_upsample(img: Image.Image) -> Image.Image:
    if img is None:
        raise Exception("Image not uploaded")

    width, height = img.size

    if width >= 5000 or height >= 5000:
        raise Exception("The image is too large.")

    result = sr_model.predict(img.convert('RGB'))
    return result
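`image_upsample` rejects missing images and inputs of 5000 px or more per side before running RealESRGAN. A hypothetical, model-free helper that mirrors those guards and reports the expected output size (assuming the x2 model doubles each side) could be sketched as follows. It raises `ValueError` instead of a bare `Exception`, which lets callers catch the input errors specifically.

```python
def upsampled_size(size, scale=2, max_side=5000):
    """Hypothetical, model-free mirror of the checks in image_upsample()."""
    if size is None:
        raise ValueError("Image not uploaded")
    width, height = size
    if width >= max_side or height >= max_side:
        raise ValueError("The image is too large.")
    # Assumes the SR model scales each side by `scale`
    return width * scale, height * scale


print(upsampled_size((384, 384)))  # (768, 768)
```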

|

| 196 |

+

|

| 197 |

+

|

| 198 |

+

# Gradio interface

|

| 199 |

+

with gr.Blocks() as demo:

|

| 200 |

+

gr.Markdown(value="# Multimodal Understanding")

|

| 201 |

+

with gr.Row():

|

| 202 |

+

image_input = gr.Image()

|

| 203 |

+

with gr.Column():

|

| 204 |

+

question_input = gr.Textbox(label="Question")

|

| 205 |

+

und_seed_input = gr.Number(label="Seed", precision=0, value=42)

|

| 206 |

+

top_p = gr.Slider(minimum=0, maximum=1, value=0.95, step=0.05, label="top_p")

|

| 207 |

+

temperature = gr.Slider(minimum=0, maximum=1, value=0.1, step=0.05, label="temperature")

|

| 208 |

+

|

| 209 |

+

understanding_button = gr.Button("Chat")

|

| 210 |

+

understanding_output = gr.Textbox(label="Response")

|

| 211 |

+

|

| 212 |

+

examples_inpainting = gr.Examples(

|

| 213 |

+

label="Multimodal Understanding examples",

|

| 214 |

+

examples=[

|

| 215 |

+

[

|

| 216 |

+

"explain this meme",

|

| 217 |

+

"doge.png",

|

| 218 |

+

],

|

| 219 |

+

[

|

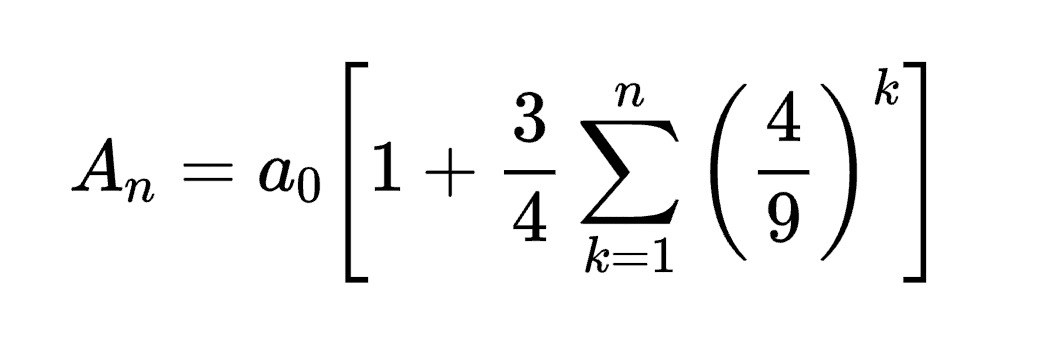

| 220 |

+

"Convert the formula into latex code.",

|

| 221 |

+

"equation.png",

|

| 222 |

+

],

|

| 223 |

+

],

|

| 224 |

+

inputs=[question_input, image_input],

|

| 225 |

+

)

|

| 226 |

+

|

| 227 |

+

|

| 228 |

+

gr.Markdown(value="# Text-to-Image Generation")

|

| 229 |

+

|

| 230 |

+

|

| 231 |

+

|

| 232 |

+

with gr.Row():

|

| 233 |

+

cfg_weight_input = gr.Slider(minimum=1, maximum=10, value=5, step=0.5, label="CFG Weight")

|

| 234 |

+

t2i_temperature = gr.Slider(minimum=0, maximum=1, value=1.0, step=0.05, label="temperature")

|

| 235 |

+

|

| 236 |

+

prompt_input = gr.Textbox(label="Prompt. (Prompt in more detail can help produce better images!)")

|

| 237 |

+

seed_input = gr.Number(label="Seed (Optional)", precision=0, value=12345)

|

| 238 |

+

|

| 239 |

+

generation_button = gr.Button("Generate Images")

|

| 240 |

+

|

| 241 |

+

image_output = gr.Gallery(label="Generated Images", columns=2, rows=2, height=300)

|

| 242 |

+

|

| 243 |

+

examples_t2i = gr.Examples(

|

| 244 |

+

label="Text to image generation examples.",

|

| 245 |

+

examples=[

|

| 246 |

+