Upload folder using huggingface_hub

- .gitattributes +3 -0

- .gradio/certificate.pem +31 -0

- GUIDELINES.md +140 -0

- LICENSE +21 -0

- README.md +158 -6

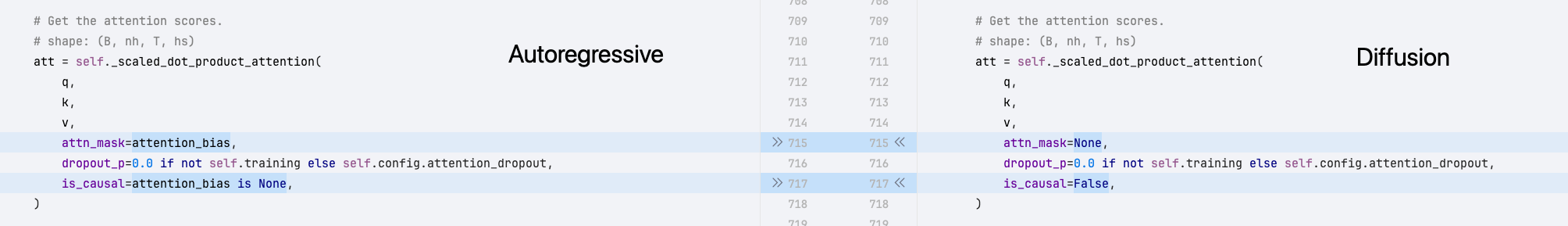

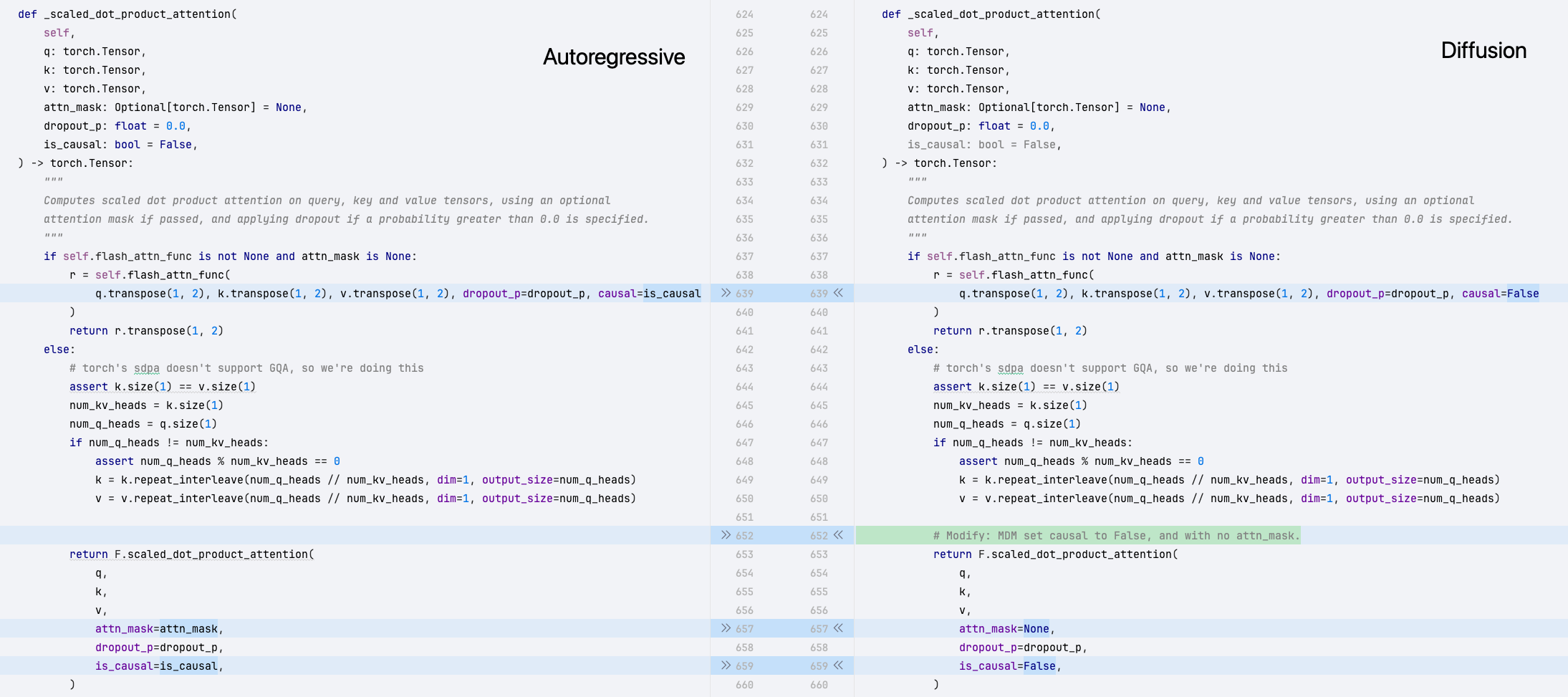

- __pycache__/generate.cpython-310.pyc +0 -0

- app.py +510 -0

- chat.py +45 -0

- generate.py +128 -0

- get_log_likelihood.py +96 -0

- imgs/LLaDA_vs_LLaMA.svg +2772 -0

- imgs/LLaDA_vs_LLaMA_chat.svg +2665 -0

- imgs/diff_remask.gif +3 -0

- imgs/sample.png +3 -0

- imgs/transformer1.png +0 -0

- imgs/transformer2.png +3 -0

- visualization/README.md +31 -0

- visualization/generate.py +144 -0

- visualization/html_to_png.py +30 -0

- visualization/sample_process.txt +64 -0

- visualization/visualization_paper.py +195 -0

- visualization/visualization_zhihu.py +202 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

imgs/diff_remask.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

imgs/sample.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

imgs/transformer2.png filter=lfs diff=lfs merge=lfs -text

|

.gradio/certificate.pem

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

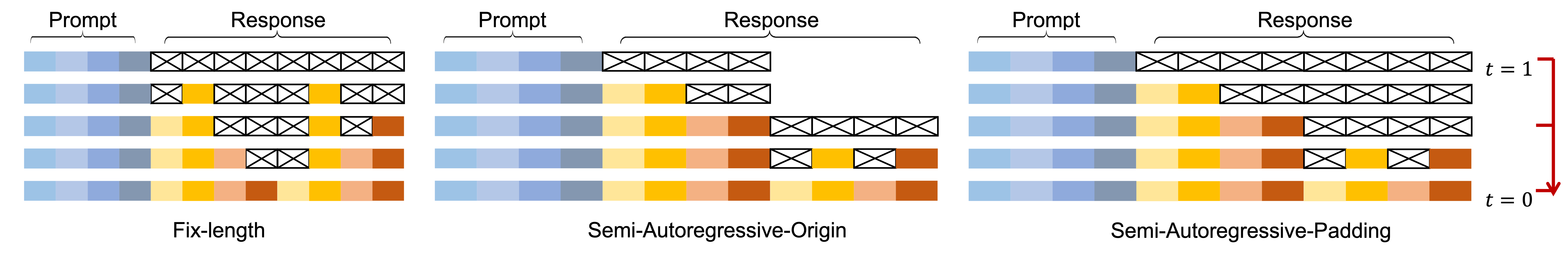

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

-----BEGIN CERTIFICATE-----

|

| 2 |

+

MIIFazCCA1OgAwIBAgIRAIIQz7DSQONZRGPgu2OCiwAwDQYJKoZIhvcNAQELBQAw

|

| 3 |

+

TzELMAkGA1UEBhMCVVMxKTAnBgNVBAoTIEludGVybmV0IFNlY3VyaXR5IFJlc2Vh

|

| 4 |

+

cmNoIEdyb3VwMRUwEwYDVQQDEwxJU1JHIFJvb3QgWDEwHhcNMTUwNjA0MTEwNDM4

|

| 5 |

+

WhcNMzUwNjA0MTEwNDM4WjBPMQswCQYDVQQGEwJVUzEpMCcGA1UEChMgSW50ZXJu

|

| 6 |

+

ZXQgU2VjdXJpdHkgUmVzZWFyY2ggR3JvdXAxFTATBgNVBAMTDElTUkcgUm9vdCBY

|

| 7 |

+

MTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCCAgoCggIBAK3oJHP0FDfzm54rVygc

|

| 8 |

+

h77ct984kIxuPOZXoHj3dcKi/vVqbvYATyjb3miGbESTtrFj/RQSa78f0uoxmyF+

|

| 9 |

+

0TM8ukj13Xnfs7j/EvEhmkvBioZxaUpmZmyPfjxwv60pIgbz5MDmgK7iS4+3mX6U

|

| 10 |

+

A5/TR5d8mUgjU+g4rk8Kb4Mu0UlXjIB0ttov0DiNewNwIRt18jA8+o+u3dpjq+sW

|

| 11 |

+

T8KOEUt+zwvo/7V3LvSye0rgTBIlDHCNAymg4VMk7BPZ7hm/ELNKjD+Jo2FR3qyH

|

| 12 |

+

B5T0Y3HsLuJvW5iB4YlcNHlsdu87kGJ55tukmi8mxdAQ4Q7e2RCOFvu396j3x+UC

|

| 13 |

+

B5iPNgiV5+I3lg02dZ77DnKxHZu8A/lJBdiB3QW0KtZB6awBdpUKD9jf1b0SHzUv

|

| 14 |

+

KBds0pjBqAlkd25HN7rOrFleaJ1/ctaJxQZBKT5ZPt0m9STJEadao0xAH0ahmbWn

|

| 15 |

+

OlFuhjuefXKnEgV4We0+UXgVCwOPjdAvBbI+e0ocS3MFEvzG6uBQE3xDk3SzynTn

|

| 16 |

+

jh8BCNAw1FtxNrQHusEwMFxIt4I7mKZ9YIqioymCzLq9gwQbooMDQaHWBfEbwrbw

|

| 17 |

+

qHyGO0aoSCqI3Haadr8faqU9GY/rOPNk3sgrDQoo//fb4hVC1CLQJ13hef4Y53CI

|

| 18 |

+

rU7m2Ys6xt0nUW7/vGT1M0NPAgMBAAGjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNV

|

| 19 |

+

HRMBAf8EBTADAQH/MB0GA1UdDgQWBBR5tFnme7bl5AFzgAiIyBpY9umbbjANBgkq

|

| 20 |

+

hkiG9w0BAQsFAAOCAgEAVR9YqbyyqFDQDLHYGmkgJykIrGF1XIpu+ILlaS/V9lZL

|

| 21 |

+

ubhzEFnTIZd+50xx+7LSYK05qAvqFyFWhfFQDlnrzuBZ6brJFe+GnY+EgPbk6ZGQ

|

| 22 |

+

3BebYhtF8GaV0nxvwuo77x/Py9auJ/GpsMiu/X1+mvoiBOv/2X/qkSsisRcOj/KK

|

| 23 |

+

NFtY2PwByVS5uCbMiogziUwthDyC3+6WVwW6LLv3xLfHTjuCvjHIInNzktHCgKQ5

|

| 24 |

+

ORAzI4JMPJ+GslWYHb4phowim57iaztXOoJwTdwJx4nLCgdNbOhdjsnvzqvHu7Ur

|

| 25 |

+

TkXWStAmzOVyyghqpZXjFaH3pO3JLF+l+/+sKAIuvtd7u+Nxe5AW0wdeRlN8NwdC

|

| 26 |

+

jNPElpzVmbUq4JUagEiuTDkHzsxHpFKVK7q4+63SM1N95R1NbdWhscdCb+ZAJzVc

|

| 27 |

+

oyi3B43njTOQ5yOf+1CceWxG1bQVs5ZufpsMljq4Ui0/1lvh+wjChP4kqKOJ2qxq

|

| 28 |

+

4RgqsahDYVvTH9w7jXbyLeiNdd8XM2w9U/t7y0Ff/9yi0GE44Za4rF2LN9d11TPA

|

| 29 |

+

mRGunUHBcnWEvgJBQl9nJEiU0Zsnvgc/ubhPgXRR4Xq37Z0j4r7g1SgEEzwxA57d

|

| 30 |

+

emyPxgcYxn/eR44/KJ4EBs+lVDR3veyJm+kXQ99b21/+jh5Xos1AnX5iItreGCc=

|

| 31 |

+

-----END CERTIFICATE-----

|

GUIDELINES.md

ADDED

|

@@ -0,0 +1,140 @@

|

| 1 |

+

# Guidelines

|

| 2 |

+

Here, we provide guidelines for the model architecture, pre-training, SFT, and inference of LLaDA.

|

| 3 |

+

|

| 4 |

+

## Model Architecture

|

| 5 |

+

|

| 6 |

+

LLaDA employs a Transformer Encoder as the network architecture for its mask predictor.

|

| 7 |

+

In terms of trainable parameters, the Transformer Encoder is identical to the Transformer

|

| 8 |

+

Decoder. Starting from an autoregressive model, we derive the backbone of LLaDA by simply

|

| 9 |

+

removing the causal mask from the self-attention mechanism, as follows.

|

| 10 |

+

|

| 11 |

+

<div style="display: flex; justify-content: center; flex-wrap: wrap; gap: 50px;">

|

| 12 |

+

<img src="imgs/transformer1.png" style="width: 90%;" />

|

| 13 |

+

<img src="imgs/transformer2.png" style="width: 90%;" />

|

| 14 |

+

</div>

|

| 15 |

+

|

| 16 |

+

In addition, LLaDA designates a reserved token as the mask token (i.e., 126336).
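
To make the architectural change concrete, the following framework-free sketch contrasts the causal mask of an autoregressive decoder with the full (bidirectional) attention pattern obtained by removing it. The helper names `causal_mask` and `full_mask` are illustrative assumptions and are not part of the LLaDA codebase; only the mask token id is taken from the text above.

```python
MASK_ID = 126336  # reserved mask-token id quoted in the text above


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def full_mask(n):
    """Bidirectional pattern: every position may attend to every position."""
    return [[True] * n for _ in range(n)]
```

With the causal mask removed, a masked position can condition on tokens to its right as well as to its left, which is what a mask predictor needs when masked tokens appear anywhere in the sequence.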

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

## Pre-training

|

| 20 |

+

The pre-training of LLaDA is straightforward and simple. Starting from an existing

|

| 21 |

+

autoregressive model training code, only a few lines need to be modified.

|

| 22 |

+

We provide the core code (i.e., loss computation) here.

|

| 23 |

+

|

| 24 |

+

```python
import torch
import torch.nn.functional as F


def forward_process(input_ids, eps=1e-3):
    b, l = input_ids.shape
    # Sample one masking ratio per sequence, bounded below by eps.
    t = torch.rand(b, device=input_ids.device)
    p_mask = (1 - eps) * t + eps
    p_mask = p_mask[:, None].repeat(1, l)

    masked_indices = torch.rand((b, l), device=input_ids.device) < p_mask
    # 126336 is used for [MASK] token
    noisy_batch = torch.where(masked_indices, 126336, input_ids)
    return noisy_batch, masked_indices, p_mask


# `batch` and `model` come from the surrounding training loop.
# The data is an integer tensor of shape (b, 4096),
# where b represents the batch size and 4096 is the sequence length.
input_ids = batch["input_ids"]

# We set 1% of the pre-training data to a random length that is uniformly sampled from the range [1, 4096].
# The following implementation is not elegant and involves some data waste.
# However, the data waste is minimal, so we ignore it.
if torch.rand(1) < 0.01:
    random_length = torch.randint(1, input_ids.shape[1] + 1, (1,)).item()
    input_ids = input_ids[:, :random_length]

noisy_batch, masked_indices, p_mask = forward_process(input_ids)
logits = model(input_ids=noisy_batch).logits

# Cross-entropy on masked positions only, reweighted by 1 / p_mask.
token_loss = F.cross_entropy(logits[masked_indices], input_ids[masked_indices], reduction='none') / p_mask[masked_indices]
loss = token_loss.sum() / (input_ids.shape[0] * input_ids.shape[1])
```
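
For readers who prefer a formula, the quantity computed by the pre-training snippet above can be written out as follows. This is only a restatement of the code in our own notation (an assumption of this note, not taken from the paper): $b$ is the batch size, $L$ the sequence length, $\mathrm{M}$ the mask token, $x^{(n)}$ the clean sequence, $\tilde{x}^{(n)}$ its noised version, and $p^{(n)}$ the per-sequence masking probability.

```latex
\begin{aligned}
t^{(n)} &\sim \mathcal{U}(0, 1), \qquad p^{(n)} = (1 - \epsilon)\, t^{(n)} + \epsilon, \\
\hat{\mathcal{L}} &= -\frac{1}{bL} \sum_{n=1}^{b} \sum_{i=1}^{L}
  \frac{\mathbf{1}\!\left[\tilde{x}^{(n)}_i = \mathrm{M}\right]}{p^{(n)}}
  \log p_\theta\!\left(x^{(n)}_i \mid \tilde{x}^{(n)}\right)
\end{aligned}
```

The indicator restricts the sum to masked positions, and the factor $1 / p^{(n)}$ corresponds to the division by `p_mask` in the code.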

|

| 54 |

+

|

| 55 |

+

## SFT

|

| 56 |

+

First, please refer to Appendix B.1 for the preprocessing of the SFT data. After preprocessing the data,

|

| 57 |

+

the data format is as follows. For simplicity, we treat each word as a token and set the batch size to 2

|

| 58 |

+

in the following visualization.

|

| 59 |

+

```text
input_ids:
<BOS><start_id>user<end_id>\nWhat is the capital of France?<eot_id><start_id>assistant<end_id>\nParis.<EOS><EOS><EOS><EOS><EOS><EOS><EOS><EOS><EOS><EOS>
<BOS><start_id>user<end_id>\nWhat is the capital of Canada?<eot_id><start_id>assistant<end_id>\nThe capital of Canada is Ottawa, located in Ontario.<EOS>

prompt_lengths:
[17, 17]
```
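
As a rough illustration of how such a batch could be assembled, the sketch below concatenates each prompt with its answer, appends one `<EOS>`, and right-pads shorter rows with further `<EOS>` tokens. The function name `build_sft_batch` and the use of string tokens are assumptions made for readability; the actual preprocessing is the one described in Appendix B.1 of the paper.

```python
EOS = "<EOS>"  # placeholder string; real code would use the tokenizer's EOS id


def build_sft_batch(prompts, answers):
    """Concatenate prompt + answer per example and right-pad with EOS.

    prompts, answers: lists of token lists (one token per word, as in the
    simplified visualization above).
    Returns (input_ids, prompt_lengths).
    """
    sequences = [p + a + [EOS] for p, a in zip(prompts, answers)]
    max_len = max(len(s) for s in sequences)
    input_ids = [s + [EOS] * (max_len - len(s)) for s in sequences]
    prompt_lengths = [len(p) for p in prompts]
    return input_ids, prompt_lengths
```

Padding with `<EOS>` rather than a separate pad token means the padded positions count as part of the answer, which matches the answer-length computation in the SFT code.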

|

| 67 |

+

|

| 68 |

+

After preprocessing the SFT data, we can obtain the SFT code by making simple modifications to the pre-training code.

|

| 69 |

+

The key difference from pre-training is that SFT does not add noise to the prompt.

|

| 70 |

+

```python
# `forward_process`, `torch`, and `F` are the same as in the pre-training code.
# `prompt_lengths` is an integer tensor of shape (b,).
input_ids, prompt_lengths = batch["input_ids"], batch["prompt_lengths"]

noisy_batch, _, p_mask = forward_process(input_ids)

# Do not add noise to the prompt
token_positions = torch.arange(noisy_batch.shape[1], device=noisy_batch.device).expand(noisy_batch.size(0), noisy_batch.size(1))
prompt_mask = (token_positions < prompt_lengths.unsqueeze(1))
noisy_batch[prompt_mask] = input_ids[prompt_mask]

# Calculate the answer length (including the padded <EOS> tokens)
prompt_mask = prompt_mask.to(torch.int64)
answer_lengths = torch.sum((1 - prompt_mask), dim=-1, keepdim=True)
answer_lengths = answer_lengths.repeat(1, noisy_batch.shape[1])

# Recompute the mask after restoring the prompt, so only answer tokens contribute.
masked_indices = (noisy_batch == 126336)

logits = model(input_ids=noisy_batch).logits

token_loss = F.cross_entropy(logits[masked_indices], input_ids[masked_indices], reduction='none') / p_mask[masked_indices]
ce_loss = torch.sum(token_loss / answer_lengths[masked_indices]) / input_ids.shape[0]
```
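
The prompt-mask and answer-length bookkeeping in the SFT snippet above is easy to get wrong, so here is a small framework-free sketch of the same logic on plain Python lists. The function names are hypothetical, and `p_mask` is passed as one scalar per row (in the tensor code it is repeated along the sequence dimension).

```python
def prompt_mask_and_answer_lengths(seq_len, prompt_lengths):
    """Mirror of the tensor logic for a batch of equal-length rows.

    prompt_mask[n][i] is True for positions inside the prompt of row n;
    answer_lengths[n] counts the remaining positions, padded EOS included.
    """
    prompt_mask = [[i < p for i in range(seq_len)] for p in prompt_lengths]
    answer_lengths = [sum(not m for m in row) for row in prompt_mask]
    return prompt_mask, answer_lengths


def sft_loss(token_nll, masked, p_mask, answer_lengths):
    """Batch SFT loss from per-token negative log-likelihoods.

    Each masked token contributes nll / p_mask / answer_length for its row;
    the total is averaged over the batch.
    """
    total = 0.0
    for n, row in enumerate(token_nll):
        for i, nll in enumerate(row):
            if masked[n][i]:
                total += nll / p_mask[n] / answer_lengths[n]
    return total / len(token_nll)
```

For example, with a sequence length of 6 and prompt lengths `[2, 4]`, the answer lengths are `[4, 2]`, so tokens in short answers carry proportionally more weight per token than tokens in long ones.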

|

| 92 |

+

|

| 93 |

+

## Sampling

|

| 94 |

+

Overall, we categorize LLaDA's sampling process into three types: fixed-length, semi-autoregressive-origin, and semi-autoregressive-padding.

|

| 95 |

+

**It is worth noting that the semi-autoregressive-origin method was not mentioned in our paper, nor did we provide the corresponding code**.

|

| 96 |

+

However, we include it here because we believe that sharing both our failures and insights from the exploration process is valuable.

|

| 97 |

+

These three sampling methods are illustrated in the figure below.

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

<div style="display: flex; justify-content: center; flex-wrap: wrap; gap: 50px;">

|

| 101 |

+

<img src="imgs/sample.png" style="width: 100%;" />

|

| 102 |

+

</div>

|

| 103 |

+

|

| 104 |

+

For each step in the above three sampling processes, as detailed in Section 2.4 in our paper, the mask predictor

|

| 105 |

+

first predicts all masked tokens simultaneously. Then, a certain proportion of these predictions are remasked.

|

| 106 |

+

To determine which predicted tokens should be re-masked, we can adopt two strategies: *randomly remasking* or

|

| 107 |

+

*low-confidence remasking*. Notably, both remasking strategies can be applied to all three sampling processes

|

| 108 |

+

mentioned above.
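
The two remasking strategies differ only in how the positions to be masked again are selected. The sketch below illustrates that selection step under simplifying assumptions: `confidences` is a hypothetical mapping from position to the predictor's confidence in its own prediction, and `choose_remask` is an illustrative name rather than a function from the repository.

```python
import random


def choose_remask(confidences, num_remask, strategy="low_confidence", rng=None):
    """Pick which freshly predicted positions to mask again.

    confidences: dict mapping position -> confidence of the prediction there.
    Returns a sorted list of positions to remask.
    """
    positions = list(confidences)
    num_remask = min(num_remask, len(positions))
    if strategy == "random":
        rng = rng or random.Random()
        chosen = rng.sample(positions, num_remask)
    elif strategy == "low_confidence":
        # Keep the most confident predictions; remask the least confident ones.
        chosen = sorted(positions, key=lambda pos: confidences[pos])[:num_remask]
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return sorted(chosen)
```

Random remasking ignores the confidences entirely, whereas low-confidence remasking is deterministic given the predictor's outputs.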

|

| 109 |

+

|

| 110 |

+

For the LLaDA-Base model, we apply low-confidence remasking to the three sampling processes mentioned above.

|

| 111 |

+

We find that fixed-length and semi-autoregressive-padding achieve similar results, whereas semi-autoregressive-origin

|

| 112 |

+

performs slightly worse.

|

| 113 |

+

|

| 114 |

+

For the LLaDA-Instruct model, the situation is slightly more complex.

|

| 115 |

+

|

| 116 |

+

First, if the semi-autoregressive-origin method is used,

|

| 117 |

+

the Instruct model performs poorly. This is because, during SFT, each sequence is a complete sentence (whereas in pre-training,

|

| 118 |

+

many sequences are truncated sentences). As a result, during sampling, given a generation length, regardless of whether it is

|

| 119 |

+

long or short, the Instruct model tends to generate a complete sentence. Unlike the Base model, it does not encounter cases

|

| 120 |

+

where a sentence is only partially generated and needs to be continued.

|

| 121 |

+

|

| 122 |

+

When performing fixed-length sampling with a long answer length (e.g., greater than 512),

|

| 123 |

+

we find that low-confidence remasking results in an unusually high proportion of `<EOS>` tokens in

|

| 124 |

+

the generated sentences, which severely impacts the model's performance. In contrast, this

|

| 125 |

+

issue does not arise when randomly remasking is used.

|

| 126 |

+

|

| 127 |

+

Furthermore, since low-confidence remasking achieved better results in the Base model, we also hoped that it could be applied to

|

| 128 |

+

the Instruct model. We found that combining low-confidence remasking with semi-autoregressive-padding effectively mitigates

|

| 129 |

+

the issue of generating an excessively high proportion of `<EOS>` tokens. Moreover, this combination achieves

|

| 130 |

+

slightly better results than randomly remasking & fixed-length.

|

| 131 |

+

|

| 132 |

+

You can find more details about the sampling method in our paper.

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2025 NieShenRuc

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,12 +1,164 @@

|

|

| 1 |

---

|

| 2 |

title: LLaDA

|

| 3 |

-

|

| 4 |

-

colorFrom: red

|

| 5 |

-

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.20.1

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

---

|

| 2 |

title: LLaDA

|

| 3 |

+

app_file: app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 5.20.1

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

# Large Language Diffusion Models

|

| 8 |

+

[arXiv paper](https://arxiv.org/abs/2502.09992)
[LLaDA-8B-Base on Hugging Face](https://huggingface.co/GSAI-ML/LLaDA-8B-Base)
[LLaDA-8B-Instruct on Hugging Face](https://huggingface.co/GSAI-ML/LLaDA-8B-Instruct)
[Zhihu article](https://zhuanlan.zhihu.com/p/24214732238)

|

| 12 |

+

|

| 13 |

+

We introduce LLaDA (<b>L</b>arge <b>La</b>nguage <b>D</b>iffusion with m<b>A</b>sking), a diffusion model with an unprecedented 8B scale, trained entirely from scratch,

|

| 14 |

+

rivaling LLaMA3 8B in performance.

|

| 15 |

+

|

| 16 |

+

<div style="display: flex; justify-content: center; flex-wrap: wrap;">

|

| 17 |

+

<img src="./imgs/LLaDA_vs_LLaMA.svg" style="width: 45%" />

|

| 18 |

+

<img src="./imgs/LLaDA_vs_LLaMA_chat.svg" style="width: 46%" />

|

| 19 |

+

</div>

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

## Inference

|

| 23 |

+

The [LLaDA-8B-Base](https://huggingface.co/GSAI-ML/LLaDA-8B-Base) and [LLaDA-8B-Instruct](https://huggingface.co/GSAI-ML/LLaDA-8B-Instruct) models are available
on Hugging Face. Please first install `transformers==4.38.2`, then load them with the [transformers](https://huggingface.co/docs/transformers/index) library.

|

| 25 |

+

|

| 26 |

+

```python
import torch
from transformers import AutoModel, AutoTokenizer

# trust_remote_code=True is required because the model class ships with the checkpoint.
tokenizer = AutoTokenizer.from_pretrained('GSAI-ML/LLaDA-8B-Base', trust_remote_code=True)
model = AutoModel.from_pretrained('GSAI-ML/LLaDA-8B-Base', trust_remote_code=True, torch_dtype=torch.bfloat16)
```

|

| 32 |

+

|

| 33 |

+

We provide `get_log_likelihood()` and `generate()` functions in `get_log_likelihood.py`

|

| 34 |

+

and `generate.py` respectively, for conditional likelihood evaluation and conditional generation.

|

| 35 |

+

|

| 36 |

+

You can directly run `python chat.py` to have multi-round conversations with LLaDA-8B-Instruct.

|

| 37 |

+

|

| 38 |

+

In addition, please refer to our paper and [GUIDELINES.md](GUIDELINES.md) for more details about the inference methods.

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

## Pre-training and Supervised Fine-Tuning

|

| 42 |

+

|

| 43 |

+

Like most open-source LLMs, we do not provide the training framework or the training data.

|

| 44 |

+

|

| 45 |

+

However, the pre-training and Supervised Fine-Tuning of LLaDA are straightforward. If

|

| 46 |

+

you have a codebase for training an autoregressive model, you can modify it to

|

| 47 |

+

adapt to LLaDA with just a few lines of code.

|

| 48 |

+

|

| 49 |

+

We provide guidelines for the pre-training and SFT of LLaDA in [GUIDELINES.md](GUIDELINES.md).

|

| 50 |

+

You can also refer to [SMDM](https://github.com/ML-GSAI/SMDM), which has a similar training process to LLaDA

|

| 51 |

+

and has open-sourced the training framework.

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

## FAQ

|

| 55 |

+

Here, we address some common questions about LLaDA.

|

| 56 |

+

|

| 57 |

+

### 0. How do I train my own LLaDA?

|

| 58 |

+

Please refer to [GUIDELINES.md](GUIDELINES.md) for the guidelines.

|

| 59 |

+

You can also refer to [SMDM](https://github.com/ML-GSAI/SMDM), which follows the same training

|

| 60 |

+

process as LLaDA and has open-sourced its code.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

### 1. What is the difference between LLaDA and BERT?

|

| 64 |

+

|

| 65 |

+

Our motivation is not to improve BERT, nor to apply image generation methods like [MaskGIT](https://arxiv.org/abs/2202.04200)

|

| 66 |

+

to text. **Our goal is to explore a theoretically complete language modeling approach — masked diffusion models.**

|

| 67 |

+

During this process, we simplified the approach and discovered that the loss function of masked diffusion models

|

| 68 |

+

is related to the loss functions of BERT and MaskGIT. You can find our theoretical research process in Question 7.

|

| 69 |

+

|

| 70 |

+

Specifically, LLaDA employs a masking ratio that varies randomly between 0 and 1, while BERT uses

|

| 71 |

+

a fixed ratio. This subtle difference has significant implications. **The training

|

| 72 |

+

objective of LLaDA is an upper bound on the negative log-likelihood of the model

|

| 73 |

+

distribution, making LLaDA a generative model.** This enables LLaDA to naturally

|

| 74 |

+

perform in-context learning, instruction-following, and ensures Fisher consistency

|

| 75 |

+

for scalability with large datasets and models. You can also find a direct answer

|

| 76 |

+

to this question in Section 2.1 of our paper.
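
The difference in masking ratio can be sketched in a few lines. The code below is a simplified illustration, not the training code: `llada_mask_ratio` follows the `(1 - eps) * t + eps` rule from GUIDELINES.md, while the default of 0.15 in `fixed_mask_ratio` is the commonly cited BERT setting and is included here as an assumption for comparison.

```python
import random


def llada_mask_ratio(rng, eps=1e-3):
    """Masking ratio drawn afresh per sequence, in the range [eps, 1)."""
    t = rng.random()
    return (1 - eps) * t + eps


def fixed_mask_ratio(ratio=0.15):
    """BERT-style masking: the same ratio for every sequence."""
    return ratio


def mask_tokens(tokens, ratio, rng, mask="[MASK]"):
    """Mask each token independently with probability `ratio`."""
    return [mask if rng.random() < ratio else tok for tok in tokens]
```

Because the ratio sweeps the whole range, the model is trained on everything from nearly clean to almost fully masked sequences, and the latter case is where sampling starts.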

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

### 2. What is the relationship between LLaDA and Transformer?

|

| 80 |

+

Network structure and probabilistic modeling are two distinct approaches that collectively form the

|

| 81 |

+

foundation of language models. LLaDA, like GPT, adopts the

|

| 82 |

+

Transformer architecture. The key difference lies in the probabilistic modeling approach: GPT

|

| 83 |

+

utilizes an autoregressive next-token prediction method,

|

| 84 |

+

while LLaDA employs a diffusion model for probabilistic modeling.

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

### 3. What is the sampling efficiency of LLaDA?

|

| 88 |

+

Currently, LLaDA's sampling speed is slower than the autoregressive baseline for three reasons:

|

| 89 |

+

1. LLaDA samples with a fixed context length;

|

| 90 |

+

2. LLaDA cannot yet leverage techniques like KV-Cache;

|

| 91 |

+

3. LLaDA achieves optimal performance when the number of sampling steps equals the response length.

|

| 92 |

+

Reducing the number of sampling steps leads to a decrease in performance, as detailed in Appendix B.4

|

| 93 |

+

and Appendix B.6 of our paper.
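
To see why the number of sampling steps drives cost, consider how many tokens must be finalized per step. The helper below is a hypothetical sketch that assumes an even split of masked tokens across steps (earlier steps absorb any remainder); the schedule actually used in `generate.py` may differ.

```python
def tokens_per_step(num_masked, steps):
    """Split num_masked tokens as evenly as possible across sampling steps."""
    if steps <= 0:
        raise ValueError("steps must be positive")
    base, remainder = divmod(num_masked, steps)
    return [base + (1 if i < remainder else 0) for i in range(steps)]
```

When `steps` equals the response length, each step finalizes a single token, so the number of forward passes matches that of autoregressive decoding but without a KV cache. Using fewer steps finalizes several tokens per pass, which is faster but, as noted above, reduces quality.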

|

| 94 |

+

|

| 95 |

+

In this work, we aim to explore the upper limits of LLaDA's capabilities, **challenging the assumption

|

| 96 |

+

that the key LLM abilities are inherently tied to autoregressive models**. We will continue

|

| 97 |

+

to optimize its efficiency in the future. We believe this research approach is reasonable,

|

| 98 |

+

as verifying the upper limits of diffusion language models' capabilities will provide us with

|

| 99 |

+

more resources and sufficient motivation to optimize efficiency.

|

| 100 |

+

|

| 101 |

+

Recall the development of diffusion models for images, from [DDPM](https://arxiv.org/abs/2006.11239)

|

| 102 |

+

to the [Consistency model](https://arxiv.org/pdf/2410.11081), where sampling speed accelerated nearly

|

| 103 |

+

1000 times over the course of 4 years. **We believe there is significant room for optimization in LLaDA's

|

| 104 |

+

sampling efficiency as well**. Current solutions, including semi-autoregressive sampling (as

|

| 105 |

+

detailed in [GUIDELINES.md](GUIDELINES.md)), can mitigate the fixed context length issue, and

|

| 106 |

+

[consistency distillation](https://arxiv.org/pdf/2502.05415) can reduce the number of sampling steps.

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

### 4. What is the training stability of LLaDA?

|

| 110 |

+

For details on the pre-training process of LLaDA, please refer to Section 2.2 of our paper.

|

| 111 |

+

During the total pre-training on 2.3T tokens, we encountered a training crash (loss becoming NaN)

|

| 112 |

+

only once at 1.2T tokens. Our solution was to resume from the checkpoint and reduce

|

| 113 |

+

the learning rate from 4e-4 to 1e-4.

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

### 5. Why is the final answer "72" generated earlier than the intermediate calculation step (e.g., 12 × 4 = 48) in Table 4?

|

| 117 |

+

|

| 118 |

+

**The mask predictor has successfully predicted the reasoning process. However, during the

|

| 119 |

+

remasking process, the reasoning steps are masked out again.** As shown in the figure

|

| 120 |

+

below, the non-white background represents the model's generation process, while the

|

| 121 |

+

white-background boxes indicate the predictions made by the mask predictor at each step.

|

| 122 |

+

We adopt a randomly remasking strategy.

|

| 123 |

+

|

| 124 |

+

<div style="display: flex; justify-content: center; flex-wrap: wrap;">

|

| 125 |

+

<img src="./imgs/diff_remask.gif" style="width: 80%" />

|

| 126 |

+

</div>

|

| 127 |

+

|

| 128 |

+

### 6. Why does LLaDA answer 'Bailing' when asked 'Who are you'?

|

| 129 |

+

This is because our pre-training and SFT data were designed for training an autoregressive model,

|

| 130 |

+

whereas LLaDA directly utilizes data that contains identity markers.

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

### 7. What was our journey in developing LLaDA?

|

| 134 |

+

LLaDA is built upon our two prior works, [RADD](https://arxiv.org/abs/2406.03736) and

|

| 135 |

+

[SMDM](https://arxiv.org/abs/2410.18514).

|

| 136 |

+

|

| 137 |

+

RADD demonstrated that the **training objective of LLaDA serves as an upper bound on the negative

|

| 138 |

+

log-likelihood** of the model’s distribution, a conclusion also supported by [MD4](https://arxiv.org/abs/2406.04329)

|

| 139 |

+

and [MDLM](https://arxiv.org/abs/2406.07524).

|

| 140 |

+

Furthermore, RADD was the first to theoretically prove that **masked diffusion models do not require time t

|

| 141 |

+

as an input to Transformer**. This insight provides the theoretical

|

| 142 |

+

justification for LLaDA’s unmodified use of the Transformer architecture. Lastly,

|

| 143 |

+

RADD showed that **the training objective of masked diffusion models is equivalent to that of

|

| 144 |

+

any-order autoregressive models**, offering valuable insights into how masked diffusion models can

|

| 145 |

+

overcome the reversal curse.

|

| 146 |

+

|

| 147 |

+

SMDM introduces the first **scaling law** for masked diffusion models and demonstrates that, with the

|

| 148 |

+

same model size and training data, masked diffusion models can achieve downstream benchmark results

|

| 149 |

+

on par with those of autoregressive models. Additionally, SMDM presents a simple, **unsupervised

|

| 150 |

+

classifier-free guidance** method that greatly improves downstream benchmark performance, which has

|

| 151 |

+

been adopted by LLaDA.

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

## Citation

|

| 155 |

+

|

| 156 |

+

```bibtex

|

| 157 |

+

@article{nie2025large,

|

| 158 |

+

title={Large Language Diffusion Models},

|

| 159 |

+

author={Nie, Shen and Zhu, Fengqi and You, Zebin and Zhang, Xiaolu and Ou, Jingyang and Hu, Jun and Zhou, Jun and Lin, Yankai and Wen, Ji-Rong and Li, Chongxuan},

|

| 160 |

+

journal={arXiv preprint arXiv:2502.09992},

|

| 161 |

+

year={2025}

|

| 162 |

+

}

|

| 163 |

+

```

|

| 164 |

|

|

|

__pycache__/generate.cpython-310.pyc

ADDED

|

Binary file (4.47 kB).

|

|

|

app.py

ADDED

|

@@ -0,0 +1,510 @@

|

import torch
import numpy as np
import gradio as gr
import torch.nn.functional as F
from transformers import AutoTokenizer, AutoModel
import time
import re

device = 'cuda' if torch.cuda.is_available() else 'cpu'
print(f"Using device: {device}")

# Load model and tokenizer
tokenizer = AutoTokenizer.from_pretrained('GSAI-ML/LLaDA-8B-Instruct', trust_remote_code=True)
model = AutoModel.from_pretrained('GSAI-ML/LLaDA-8B-Instruct', trust_remote_code=True,
                                  torch_dtype=torch.bfloat16).to(device)

# Constants
MASK_TOKEN = "[MASK]"
MASK_ID = 126336  # The token ID of [MASK] in LLaDA

def parse_constraints(constraints_text):
    """Parse constraints in format: 'position:word, position:word, ...'"""
    constraints = {}
    if not constraints_text:
        return constraints

    parts = constraints_text.split(',')
    for part in parts:
        if ':' not in part:
            continue
        pos_str, word = part.split(':', 1)
        try:
            pos = int(pos_str.strip())
            word = word.strip()
            if word and pos >= 0:
                constraints[pos] = word
        except ValueError:
            continue

    return constraints

def format_chat_history(history):
    """
    Format chat history for the LLaDA model

    Args:
        history: List of [user_message, assistant_message] pairs

    Returns:
        Formatted conversation for the model
    """
    messages = []
    for user_msg, assistant_msg in history:
        messages.append({"role": "user", "content": user_msg})
        if assistant_msg:  # Skip if None (for the latest user message)
            messages.append({"role": "assistant", "content": assistant_msg})

    return messages

def add_gumbel_noise(logits, temperature):
    '''
    The Gumbel max is a method for sampling categorical distributions.
    According to arXiv:2409.02908, for MDM, low-precision Gumbel Max improves perplexity score but reduces generation quality.
    Thus, we use float64.
    '''
    if temperature <= 0:
        return logits

    logits = logits.to(torch.float64)
    noise = torch.rand_like(logits, dtype=torch.float64)
    gumbel_noise = (- torch.log(noise)) ** temperature
    return logits.exp() / gumbel_noise
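
Note on the return value above: taking the logarithm of `exp(l_i) / (-log u_i) ** T` and dividing by `T` gives `l_i / T + g_i`, where `g_i = -log(-log u_i)` is a standard Gumbel sample. The argmax of the returned tensor should therefore behave like a draw from `softmax(logits / T)`. The following is a minimal standard-library sketch that checks this reading empirically. It is not part of `app.py`, and the helper names `softmax`, `gumbel_max_sample`, and `empirical_frequencies` are hypothetical.

```python
import math
import random


def softmax(logits, temperature):
    """Reference distribution: softmax(logits / temperature)."""
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def gumbel_max_sample(logits, temperature, rng):
    """Return the argmax of exp(l_i) / (-log u_i) ** temperature."""
    best_index, best_score = 0, -math.inf
    for index, logit in enumerate(logits):
        u = rng.random()
        while u == 0.0:  # random() is in [0, 1); avoid log(0)
            u = rng.random()
        score = math.exp(logit) / (-math.log(u)) ** temperature
        if score > best_score:
            best_index, best_score = index, score
    return best_index


def empirical_frequencies(logits, temperature, draws, seed=0):
    """Estimate how often each index wins over `draws` samples."""
    rng = random.Random(seed)
    counts = [0] * len(logits)
    for _ in range(draws):
        counts[gumbel_max_sample(logits, temperature, rng)] += 1
    return [count / draws for count in counts]


if __name__ == "__main__":
    example_logits = [1.0, 0.0, -1.0]
    print(empirical_frequencies(example_logits, temperature=1.0, draws=20000))
    print(softmax(example_logits, temperature=1.0))
```

With enough draws the two printed lists should agree closely. At `temperature <= 0` the function in `app.py` skips the noise entirely, so the later `argmax` is greedy decoding.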

def get_num_transfer_tokens(mask_index, steps):
    '''
    In the reverse process, the interval [0, 1] is uniformly discretized into steps intervals.
    Furthermore, because LLaDA employs a linear noise schedule (as defined in Eq. (8)),
    the expected number of tokens transitioned at each step should be consistent.
    This function is designed to precompute the number of tokens that need to be transitioned at each step.
    '''
    mask_num = mask_index.sum(dim=1, keepdim=True)

    base = mask_num // steps
    remainder = mask_num % steps

    num_transfer_tokens = torch.zeros(mask_num.size(0), steps, device=mask_index.device, dtype=torch.int64) + base

    for i in range(mask_num.size(0)):
        num_transfer_tokens[i, :remainder[i]] += 1

    return num_transfer_tokens
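
As a worked illustration of the schedule computed above, the sketch below reproduces the same base-plus-remainder arithmetic for a single sequence in plain Python. It is an illustration only: `transfer_schedule` is a hypothetical name, not a function in `app.py`, and it works on an integer count rather than a mask tensor.

```python
def transfer_schedule(mask_count, steps):
    """Split `mask_count` masked tokens across `steps` denoising steps.

    Every step gets `mask_count // steps` tokens, and the first
    `mask_count % steps` steps get one extra, so the counts always
    sum to `mask_count`.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    base, remainder = divmod(mask_count, steps)
    return [base + 1 if step < remainder else base for step in range(steps)]


if __name__ == "__main__":
    # 10 masked tokens over 4 steps: base = 2, remainder = 2
    print(transfer_schedule(10, 4))  # [3, 3, 2, 2]
```

When there are fewer masked tokens than steps, the trailing steps receive zero tokens, which matches the tensor version.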

def generate_response_with_visualization(messages, gen_length=64, steps=32,
                                         constraints=None, temperature=0.0, cfg_scale=0.0, block_length=32,
                                         remasking='low_confidence'):
    """
    Generate text with LLaDA model with visualization using the same sampling as in generate.py

    Args:
        messages: List of message dictionaries with 'role' and 'content'
        gen_length: Length of text to generate
        steps: Number of denoising steps
        constraints: Dictionary mapping positions to words
        temperature: Sampling temperature
        cfg_scale: Classifier-free guidance scale
        block_length: Block length for semi-autoregressive generation
        remasking: Remasking strategy ('low_confidence' or 'random')

    Returns:
        List of visualization states showing the progression and final text
    """

    # Process constraints
    if constraints is None:
        constraints = {}

    # Convert any string constraints to token IDs
    processed_constraints = {}
    for pos, word in constraints.items():
        tokens = tokenizer.encode(" " + word, add_special_tokens=False)
        for i, token_id in enumerate(tokens):
            processed_constraints[pos + i] = token_id

    # Prepare the prompt using chat template
    chat_input = tokenizer.apply_chat_template(messages, add_generation_prompt=True, tokenize=False)
    input_ids = tokenizer(chat_input)['input_ids']
    input_ids = torch.tensor(input_ids).to(device).unsqueeze(0)

    # For generation
    prompt_length = input_ids.shape[1]

    # Initialize the sequence with masks for the response part
    x = torch.full((1, prompt_length + gen_length), MASK_ID, dtype=torch.long).to(device)
    x[:, :prompt_length] = input_ids.clone()

    # Initialize visualization states for the response part
    visualization_states = []

    # Add initial state (all masked)
    initial_state = [(MASK_TOKEN, "#444444") for _ in range(gen_length)]
    visualization_states.append(initial_state)

    # Apply constraints to the initial state
    for pos, token_id in processed_constraints.items():
        absolute_pos = prompt_length + pos
        if absolute_pos < x.shape[1]:
            x[:, absolute_pos] = token_id

    # Mark prompt positions to exclude them from masking during classifier-free guidance
    prompt_index = (x != MASK_ID)

    # Ensure block_length is valid
    if block_length > gen_length:
        block_length = gen_length

    # Calculate number of blocks
    num_blocks = gen_length // block_length
    if gen_length % block_length != 0:
        num_blocks += 1

    # Adjust steps per block
    steps_per_block = steps // num_blocks
    if steps_per_block < 1:
        steps_per_block = 1

    # Track the current state of x for visualization
    current_x = x.clone()

    # Process each block
    for num_block in range(num_blocks):
        # Calculate the start and end indices for the current block
        block_start = prompt_length + num_block * block_length
        block_end = min(prompt_length + (num_block + 1) * block_length, x.shape[1])

        # Get mask indices for the current block
        block_mask_index = (x[:, block_start:block_end] == MASK_ID)

        # Skip if no masks in this block
        if not block_mask_index.any():
            continue

        # Calculate number of tokens to unmask at each step
        num_transfer_tokens = get_num_transfer_tokens(block_mask_index, steps_per_block)

        # Process each step
        for i in range(steps_per_block):
            # Get all mask positions in the current sequence
            mask_index = (x == MASK_ID)

            # Skip if no masks
            if not mask_index.any():
                break

            # Apply classifier-free guidance if enabled
            if cfg_scale > 0.0:
                un_x = x.clone()
                un_x[prompt_index] = MASK_ID
                x_ = torch.cat([x, un_x], dim=0)
                logits = model(x_).logits
                logits, un_logits = torch.chunk(logits, 2, dim=0)
                logits = un_logits + (cfg_scale + 1) * (logits - un_logits)
            else:
                logits = model(x).logits

            # Apply Gumbel noise for sampling
            logits_with_noise = add_gumbel_noise(logits, temperature=temperature)
            x0 = torch.argmax(logits_with_noise, dim=-1)

            # Calculate confidence scores for remasking
            if remasking == 'low_confidence':
                p = F.softmax(logits.to(torch.float64), dim=-1)
                x0_p = torch.squeeze(
                    torch.gather(p, dim=-1, index=torch.unsqueeze(x0, -1)), -1)  # b, l
            elif remasking == 'random':
                x0_p = torch.rand((x0.shape[0], x0.shape[1]), device=x0.device)
            else:
                raise NotImplementedError(f"Remasking strategy '{remasking}' not implemented")

            # Don't consider positions beyond the current block
            x0_p[:, block_end:] = -float('inf')

            # Apply predictions where we have masks
            old_x = x.clone()
            x0 = torch.where(mask_index, x0, x)
            confidence = torch.where(mask_index, x0_p, -float('inf'))

            # Select tokens to unmask based on confidence
            transfer_index = torch.zeros_like(x0, dtype=torch.bool, device=x0.device)
            for j in range(confidence.shape[0]):
                # Only consider positions within the current block for unmasking
                block_confidence = confidence[j, block_start:block_end]
                if i < steps_per_block - 1:  # Not the last step
                    # Take top-k confidences
                    _, select_indices = torch.topk(block_confidence,
                                                   k=min(num_transfer_tokens[j, i].item(),
                                                         block_confidence.numel()))
                    # Adjust indices to global positions
                    select_indices = select_indices + block_start
                    transfer_index[j, select_indices] = True
                else:  # Last step - unmask everything remaining
                    transfer_index[j, block_start:block_end] = mask_index[j, block_start:block_end]

            # Apply the selected tokens
            x = torch.where(transfer_index, x0, x)

            # Ensure constraints are maintained
            for pos, token_id in processed_constraints.items():
                absolute_pos = prompt_length + pos
                if absolute_pos < x.shape[1]:
                    x[:, absolute_pos] = token_id

            # Create visualization state only for the response part
            current_state = []
            for i in range(gen_length):
                pos = prompt_length + i  # Absolute position in the sequence

                if x[0, pos] == MASK_ID:
                    # Still masked
                    current_state.append((MASK_TOKEN, "#444444"))  # Dark gray for masks

                elif old_x[0, pos] == MASK_ID:
                    # Newly revealed in this step
                    token = tokenizer.decode([x[0, pos].item()], skip_special_tokens=True)
                    # Color based on confidence
                    confidence = float(x0_p[0, pos].cpu())
                    if confidence < 0.3:
                        color = "#FF6666"  # Light red
                    elif confidence < 0.7:
                        color = "#FFAA33"  # Orange
                    else:
                        color = "#66CC66"  # Light green

                    current_state.append((token, color))

                else:
                    # Previously revealed
                    token = tokenizer.decode([x[0, pos].item()], skip_special_tokens=True)
                    current_state.append((token, "#6699CC"))  # Light blue

            visualization_states.append(current_state)

    # Extract final text (just the assistant's response)
    response_tokens = x[0, prompt_length:]
    final_text = tokenizer.decode(response_tokens,
                                  skip_special_tokens=True,
                                  clean_up_tokenization_spaces=True)

    return visualization_states, final_text
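
The block bookkeeping in the function above (clamping `block_length`, rounding the block count up, and dividing `steps` across blocks) can be traced without loading the model. The sketch below mirrors only that arithmetic in plain Python, under the assumption that `gen_length` and `block_length` are positive integers. `plan_blocks` is a hypothetical helper and does not exist in `app.py`.

```python
def plan_blocks(prompt_length, gen_length, block_length, steps):
    """Return ([(start, end), ...], steps_per_block) for the response region.

    Positions are absolute indices into the full sequence, so the first
    block starts right after the prompt.
    """
    block_length = min(block_length, gen_length)   # a block cannot exceed the response
    num_blocks = -(-gen_length // block_length)    # ceiling division
    steps_per_block = max(steps // num_blocks, 1)  # at least one step per block
    total_length = prompt_length + gen_length

    blocks = []
    for block in range(num_blocks):
        start = prompt_length + block * block_length
        end = min(start + block_length, total_length)  # last block may be shorter
        blocks.append((start, end))
    return blocks, steps_per_block


if __name__ == "__main__":
    print(plan_blocks(prompt_length=10, gen_length=64, block_length=32, steps=32))
    print(plan_blocks(prompt_length=10, gen_length=40, block_length=32, steps=32))
```

One consequence visible in this arithmetic: because `steps_per_block` is clamped to at least 1, a `steps` value smaller than the number of blocks still results in one forward pass per block, so the total number of passes can exceed `steps`.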

css = '''
.category-legend{display:none}
button{height: 60px}
'''
def create_chatbot_demo():
    with gr.Blocks(css=css) as demo:
        gr.Markdown("# LLaDA - Large Language Diffusion Model Demo")
        gr.Markdown("[model](https://huggingface.co/GSAI-ML/LLaDA-8B-Instruct), [project page](https://ml-gsai.github.io/LLaDA-demo/)")

        # STATE MANAGEMENT
        chat_history = gr.State([])

        # UI COMPONENTS
        with gr.Row():
            with gr.Column(scale=3):
                chatbot_ui = gr.Chatbot(label="Conversation", height=500)

                # Message input
                with gr.Group():
                    with gr.Row():
                        user_input = gr.Textbox(
                            label="Your Message",
                            placeholder="Type your message here...",
                            show_label=False
                        )
                        send_btn = gr.Button("Send")

                constraints_input = gr.Textbox(
                    label="Word Constraints",
                    info="This model allows placing specific words at specific positions using the 'position:word' format. Example: to make the 1st word 'Once', the 6th word 'upon', and the 11th word 'time', enter '0:Once, 5:upon, 10:time'.",
                    placeholder="0:Once, 5:upon, 10:time",
                    value=""
                )
            with gr.Column(scale=2):
                output_vis = gr.HighlightedText(
                    label="Denoising Process Visualization",
                    combine_adjacent=False,
                    show_legend=True,
                )

        # Advanced generation settings
        with gr.Accordion("Generation Settings", open=False):
            with gr.Row():
                gen_length = gr.Slider(
                    minimum=16, maximum=128, value=64, step=8,
                    label="Generation Length"

|

| 336 |