init space

- .gitattributes +1 -0

- .gitignore +2 -0

- app.py +41 -0

- bald_face.py +109 -0

- baldgan/__init__.py +0 -0

- baldgan/keras_contrib/__init__.py +0 -0

- baldgan/keras_contrib/layers/__init__.py +0 -0

- baldgan/keras_contrib/layers/normalization/__init__.py +0 -0

- baldgan/keras_contrib/layers/normalization/instancenormalization.py +147 -0

- baldgan/model.py +97 -0

- examples/01.jpg +3 -0

- examples/02.jpg +3 -0

- examples/03.jpg +3 -0

- examples/04.jpg +3 -0

- requirements.txt +6 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,2 @@

|

| 1 |

+

.idea/

|

| 2 |

+

__pycache__/

|

app.py

ADDED

|

@@ -0,0 +1,41 @@

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from bald_face import BaldFace

|

| 3 |

+

|

| 4 |

+

bald = BaldFace()

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

def predict(image):

|

| 8 |

+

return bald.make(image)

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

footer = r"""

|

| 12 |

+

<center>

|

| 13 |

+

<b>

|

| 14 |

+

Demo for <a href=''>BaldGAN</a>

|

| 15 |

+

</b>

|

| 16 |

+

</center>

|

| 17 |

+

"""

|

| 18 |

+

|

| 19 |

+

with gr.Blocks(title="") as app:

|

| 20 |

+

gr.HTML("<center><h1></h1></center>")

|

| 21 |

+

gr.HTML("<center><h3></h3></center>")

|

| 22 |

+

with gr.Row(equal_height=False):

|

| 23 |

+

with gr.Column():

|

| 24 |

+

input_img = gr.Image(type="numpy", label="Input image")

|

| 25 |

+

run_btn = gr.Button(variant="primary")

|

| 26 |

+

with gr.Column():

|

| 27 |

+

output_img = gr.Image(type="pil", label="Output image")

|

| 28 |

+

gr.ClearButton(components=[input_img, output_img], variant="stop")

|

| 29 |

+

|

| 30 |

+

run_btn.click(predict, [input_img], [output_img])

|

| 31 |

+

|

| 32 |

+

with gr.Row():

|

| 33 |

+

blobs = [[f"examples/{x:02d}.jpg"] for x in range(1, 5)]

|

| 34 |

+

examples = gr.Dataset(components=[input_img], samples=blobs)

|

| 35 |

+

examples.click(lambda x: x[0], [examples], [input_img])

|

| 36 |

+

|

| 37 |

+

with gr.Row():

|

| 38 |

+

gr.HTML(footer)

|

| 39 |

+

|

| 40 |

+

app.queue()

|

| 41 |

+

app.launch(share=False, debug=True, show_error=True)

|
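
The `blobs` comprehension in app.py produces one single-element row per example image, which matches the four `examples/NN.jpg` files added in this commit. A minimal sketch of that expansion, assuming nothing beyond the standard library; `example_rows` is a hypothetical helper name, not part of the commit:

```python
def example_rows(first=1, last=4, folder="examples"):
    """Build one single-element row per example image, like `blobs` in app.py."""
    return [[f"{folder}/{x:02d}.jpg"] for x in range(first, last + 1)]


rows = example_rows()

# The Dataset click handler in the diff receives a row and returns its
# first item, which becomes the value of the input image component.
first_path = (lambda x: x[0])(rows[0])
```

Each row is a list because a Dataset sample carries one value per listed component, and only `input_img` is listed here.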

bald_face.py

ADDED

|

@@ -0,0 +1,109 @@

|

| 1 |

+

#############################################################################

|

| 2 |

+

#

|

| 3 |

+

# Source from:

|

| 4 |

+

# https://github.com/leonelhs/baldgan

|

| 5 |

+

# Forked from:

|

| 6 |

+

# https://github.com/david-svitov/baldgan

|

| 7 |

+

# Reimplemented by: Leonel Hernández

|

| 8 |

+

#

|

| 9 |

+

##############################################################################

|

| 10 |

+

import os

|

| 11 |

+

|

| 12 |

+

import PIL.Image

|

| 13 |

+

import cv2

|

| 14 |

+

import numpy as np

|

| 15 |

+

from retinaface import RetinaFace

|

| 16 |

+

from skimage import transform as trans

|

| 17 |

+

from huggingface_hub import hf_hub_download

|

| 18 |

+

|

| 19 |

+

from baldgan.model import buildModel

|

| 20 |

+

|

| 21 |

+

BALDGAN_REPO_ID = "leonelhs/baldgan"

|

| 22 |

+

|

| 23 |

+

os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID" # see issue #152

|

| 24 |

+

os.environ["CUDA_VISIBLE_DEVICES"] = "-1"

|

| 25 |

+

gpu_id = -1

|

| 26 |

+

|

| 27 |

+

image_size = [256, 256]

|

| 28 |

+

|

| 29 |

+

src = np.array([

|

| 30 |

+

[30.2946, 51.6963],

|

| 31 |

+

[65.5318, 51.5014],

|

| 32 |

+

[48.0252, 71.7366],

|

| 33 |

+

[33.5493, 92.3655],

|

| 34 |

+

[62.7299, 92.2041]], dtype=np.float32)

|

| 35 |

+

|

| 36 |

+

src[:, 0] += 8.0

|

| 37 |

+

src[:, 0] += 15.0

|

| 38 |

+

src[:, 1] += 30.0

|

| 39 |

+

src /= 112

|

| 40 |

+

src *= 200

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

def list2array(values):

|

| 44 |

+

return np.array(list(values))

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

def align_face(img):

|

| 48 |

+

faces = RetinaFace.detect_faces(img)

|

| 49 |

+

bounding_boxes = np.array([list2array(faces[face]['facial_area']) for face in faces])

|

| 50 |

+

points = np.array([list2array(faces[face]['landmarks'].values()) for face in faces])

|

| 51 |

+

white_image = np.ones(img.shape, dtype=np.uint8) * 255

|

| 52 |

+

|

| 53 |

+

result_faces = []

|

| 54 |

+

result_masks = []

|

| 55 |

+

result_matrix = []

|

| 56 |

+

|

| 57 |

+

if bounding_boxes.shape[0] > 0:

|

| 58 |

+

det = bounding_boxes[:, 0:4]

|

| 59 |

+

for i in range(det.shape[0]):

|

| 60 |

+

_det = det[i]

|

| 61 |

+

dst = points[i]

|

| 62 |

+

|

| 63 |

+

tform = trans.SimilarityTransform()

|

| 64 |

+

tform.estimate(dst, src)

|

| 65 |

+

M = tform.params[0:2, :]

|

| 66 |

+

warped = cv2.warpAffine(img, M, (image_size[1], image_size[0]), borderValue=0.0)

|

| 67 |

+

mask = cv2.warpAffine(white_image, M, (image_size[1], image_size[0]), borderValue=0.0)

|

| 68 |

+

|

| 69 |

+

result_faces.append(warped)

|

| 70 |

+

result_masks.append(mask)

|

| 71 |

+

result_matrix.append(tform.params[0:3, :])

|

| 72 |

+

|

| 73 |

+

return result_faces, result_masks, result_matrix

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

def put_face_back(img, faces, masks, result_matrix):

|

| 77 |

+

for i in range(len(faces)):

|

| 78 |

+

M = np.linalg.inv(result_matrix[i])[0:2]

|

| 79 |

+

warped = cv2.warpAffine(faces[i], M, (img.shape[1], img.shape[0]), borderValue=0.0)

|

| 80 |

+

mask = cv2.warpAffine(masks[i], M, (img.shape[1], img.shape[0]), borderValue=0.0)

|

| 81 |

+

mask = mask // 255

|

| 82 |

+

img = img * (1 - mask)

|

| 83 |

+

img = img.astype(np.uint8)

|

| 84 |

+

img += warped * mask

|

| 85 |

+

return img

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

class BaldFace:

|

| 89 |

+

|

| 90 |

+

def __init__(self):

|

| 91 |

+

self.model = buildModel()

|

| 92 |

+

model_path = hf_hub_download(repo_id=BALDGAN_REPO_ID, filename='model_G_5_170.hdf5')

|

| 93 |

+

self.model.load_weights(model_path)

|

| 94 |

+

|

| 95 |

+

def make(self, image):

|

| 96 |

+

# image = np.array(image)

|

| 97 |

+

faces, masks, matrix = align_face(image)

|

| 98 |

+

result_faces = []

|

| 99 |

+

|

| 100 |

+

for face in faces:

|

| 101 |

+

input_face = np.expand_dims(face, axis=0)

|

| 102 |

+

input_face = input_face / 127.5 - 1.

|

| 103 |

+

result = self.model.predict(input_face)[0]

|

| 104 |

+

result = ((result + 1.) * 127.5)

|

| 105 |

+

result = result.astype(np.uint8)

|

| 106 |

+

result_faces.append(result)

|

| 107 |

+

|

| 108 |

+

img_result = put_face_back(image, result_faces, masks, matrix)

|

| 109 |

+

return PIL.Image.fromarray(img_result)

|
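
The pixel scaling in `BaldFace.make` and the blending in `put_face_back` can be checked without the model or OpenCV. A minimal sketch, assuming NumPy only; `to_model_range`, `from_model_range`, and `composite` are hypothetical helper names that mirror the arithmetic in the diff, not functions from the commit:

```python
import numpy as np


def to_model_range(face_u8):
    """Map uint8 pixels in [0, 255] to floats in [-1, 1], as make() does."""
    return face_u8 / 127.5 - 1.0


def from_model_range(pred):
    """Map floats in [-1, 1] back to uint8; astype truncates toward zero."""
    return ((pred + 1.0) * 127.5).astype(np.uint8)


def composite(img, warped, mask_255):
    """Paste `warped` into `img` wherever the mask is fully white (255)."""
    mask = mask_255 // 255                      # 255 -> 1, anything lower -> 0
    out = (img * (1 - mask)).astype(np.uint8)   # clear the pixels to be replaced
    out += warped * mask                        # fill them from the warped face
    return out


img = np.full((2, 2, 3), 10, dtype=np.uint8)
warped = np.full((2, 2, 3), 200, dtype=np.uint8)
mask = np.zeros((2, 2, 3), dtype=np.uint8)
mask[0, 0] = 255                                # replace only the top-left pixel

blended = composite(img, warped, mask)
```

Because the mask is integer-divided by 255, interpolated edge pixels with values below 255 fall back to the original image, so the paste has a hard boundary rather than a feathered one.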

baldgan/__init__.py

ADDED

|

File without changes

|

baldgan/keras_contrib/__init__.py

ADDED

|

File without changes

|

baldgan/keras_contrib/layers/__init__.py

ADDED

|

File without changes

|

baldgan/keras_contrib/layers/normalization/__init__.py

ADDED

|

File without changes

|

baldgan/keras_contrib/layers/normalization/instancenormalization.py

ADDED

|

@@ -0,0 +1,147 @@

|

| 1 |

+

from keras.layers import Layer, InputSpec

|

| 2 |

+

from keras import initializers, regularizers, constraints

|

| 3 |

+

from keras import backend as K

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

class InstanceNormalization(Layer):

|

| 7 |

+

"""Instance normalization layer.

|

| 8 |

+

|

| 9 |

+

Normalize the activations of the previous layer at each step,

|

| 10 |

+

i.e. applies a transformation that maintains the mean activation

|

| 11 |

+

close to 0 and the activation standard deviation close to 1.

|

| 12 |

+

|

| 13 |

+

# Arguments

|

| 14 |

+

axis: Integer, the axis that should be normalized

|

| 15 |

+

(typically the features axis).

|

| 16 |

+

For instance, after a `Conv2D` layer with

|

| 17 |

+

`data_format="channels_first"`,

|

| 18 |

+

set `axis=1` in `InstanceNormalization`.

|

| 19 |

+

Setting `axis=None` will normalize all values in each

|

| 20 |

+

instance of the batch.

|

| 21 |

+

Axis 0 is the batch dimension. `axis` cannot be set to 0 to avoid errors.

|

| 22 |

+

epsilon: Small float added to the standard deviation to avoid dividing by zero.

|

| 23 |

+

center: If True, add offset of `beta` to normalized tensor.

|

| 24 |

+

If False, `beta` is ignored.

|

| 25 |

+

scale: If True, multiply by `gamma`.

|

| 26 |

+

If False, `gamma` is not used.

|

| 27 |

+

When the next layer is linear (also e.g. `nn.relu`),

|

| 28 |

+

this can be disabled since the scaling

|

| 29 |

+

will be done by the next layer.

|

| 30 |

+

beta_initializer: Initializer for the beta weight.

|

| 31 |

+

gamma_initializer: Initializer for the gamma weight.

|

| 32 |

+

beta_regularizer: Optional regularizer for the beta weight.

|

| 33 |

+

gamma_regularizer: Optional regularizer for the gamma weight.

|

| 34 |

+

beta_constraint: Optional constraint for the beta weight.

|

| 35 |

+

gamma_constraint: Optional constraint for the gamma weight.

|

| 36 |

+

|

| 37 |

+

# Input shape

|

| 38 |

+

Arbitrary. Use the keyword argument `input_shape`

|

| 39 |

+

(tuple of integers, does not include the samples axis)

|

| 40 |

+

when using this layer as the first layer in a Sequential model.

|

| 41 |

+

|

| 42 |

+

# Output shape

|

| 43 |

+

Same shape as input.

|

| 44 |

+

|

| 45 |

+

# References

|

| 46 |

+

- [Layer Normalization](https://arxiv.org/abs/1607.06450)

|

| 47 |

+

- [Instance Normalization: The Missing Ingredient for Fast Stylization](

|

| 48 |

+

https://arxiv.org/abs/1607.08022)

|

| 49 |

+

"""

|

| 50 |

+

def __init__(self,

|

| 51 |

+

axis=None,

|

| 52 |

+

epsilon=1e-3,

|

| 53 |

+

center=True,

|

| 54 |

+

scale=True,

|

| 55 |

+

beta_initializer='zeros',

|

| 56 |

+

gamma_initializer='ones',

|

| 57 |

+

beta_regularizer=None,

|

| 58 |

+

gamma_regularizer=None,

|

| 59 |

+

beta_constraint=None,

|

| 60 |

+

gamma_constraint=None,

|

| 61 |

+

**kwargs):

|

| 62 |

+

super(InstanceNormalization, self).__init__(**kwargs)

|

| 63 |

+

self.supports_masking = True

|

| 64 |

+

self.axis = axis

|

| 65 |

+

self.epsilon = epsilon

|

| 66 |

+

self.center = center

|

| 67 |

+

self.scale = scale

|

| 68 |

+

self.beta_initializer = initializers.get(beta_initializer)

|

| 69 |

+

self.gamma_initializer = initializers.get(gamma_initializer)

|

| 70 |

+

self.beta_regularizer = regularizers.get(beta_regularizer)

|

| 71 |

+

self.gamma_regularizer = regularizers.get(gamma_regularizer)

|

| 72 |

+

self.beta_constraint = constraints.get(beta_constraint)

|

| 73 |

+

self.gamma_constraint = constraints.get(gamma_constraint)

|

| 74 |

+

|

| 75 |

+

def build(self, input_shape):

|

| 76 |

+

ndim = len(input_shape)

|

| 77 |

+

if self.axis == 0:

|

| 78 |

+

raise ValueError('Axis cannot be zero')

|

| 79 |

+

|

| 80 |

+

if (self.axis is not None) and (ndim == 2):

|

| 81 |

+

raise ValueError('Cannot specify axis for rank 1 tensor')

|

| 82 |

+

|

| 83 |

+

self.input_spec = InputSpec(ndim=ndim)

|

| 84 |

+

|

| 85 |

+

if self.axis is None:

|

| 86 |

+

shape = (1,)

|

| 87 |

+

else:

|

| 88 |

+

shape = (input_shape[self.axis],)

|

| 89 |

+

|

| 90 |

+

if self.scale:

|

| 91 |

+

self.gamma = self.add_weight(shape=shape,

|

| 92 |

+

name='gamma',

|

| 93 |

+

initializer=self.gamma_initializer,

|

| 94 |

+

regularizer=self.gamma_regularizer,

|

| 95 |

+

constraint=self.gamma_constraint)

|

| 96 |

+

else:

|

| 97 |

+

self.gamma = None

|

| 98 |

+

if self.center:

|

| 99 |

+

self.beta = self.add_weight(shape=shape,

|

| 100 |

+

name='beta',

|

| 101 |

+

initializer=self.beta_initializer,

|

| 102 |

+

regularizer=self.beta_regularizer,

|

| 103 |

+

constraint=self.beta_constraint)

|

| 104 |

+

else:

|

| 105 |

+

self.beta = None

|

| 106 |

+

self.built = True

|

| 107 |

+

|

| 108 |

+

def call(self, inputs, training=None):

|

| 109 |

+

input_shape = K.int_shape(inputs)

|

| 110 |

+

reduction_axes = list(range(0, len(input_shape)))

|

| 111 |

+

|

| 112 |

+

if self.axis is not None:

|

| 113 |

+

del reduction_axes[self.axis]

|

| 114 |

+

|

| 115 |

+

del reduction_axes[0]

|

| 116 |

+

|

| 117 |

+

mean = K.mean(inputs, reduction_axes, keepdims=True)

|

| 118 |

+

stddev = K.std(inputs, reduction_axes, keepdims=True) + self.epsilon

|

| 119 |

+

normed = (inputs - mean) / stddev

|

| 120 |

+

|

| 121 |

+

broadcast_shape = [1] * len(input_shape)

|

| 122 |

+

if self.axis is not None:

|

| 123 |

+

broadcast_shape[self.axis] = input_shape[self.axis]

|

| 124 |

+

|

| 125 |

+

if self.scale:

|

| 126 |

+

broadcast_gamma = K.reshape(self.gamma, broadcast_shape)

|

| 127 |

+

normed = normed * broadcast_gamma

|

| 128 |

+

if self.center:

|

| 129 |

+

broadcast_beta = K.reshape(self.beta, broadcast_shape)

|

| 130 |

+

normed = normed + broadcast_beta

|

| 131 |

+

return normed

|

| 132 |

+

|

| 133 |

+

def get_config(self):

|

| 134 |

+

config = {

|

| 135 |

+

'axis': self.axis,

|

| 136 |

+

'epsilon': self.epsilon,

|

| 137 |

+

'center': self.center,

|

| 138 |

+

'scale': self.scale,

|

| 139 |

+

'beta_initializer': initializers.serialize(self.beta_initializer),

|

| 140 |

+

'gamma_initializer': initializers.serialize(self.gamma_initializer),

|

| 141 |

+

'beta_regularizer': regularizers.serialize(self.beta_regularizer),

|

| 142 |

+

'gamma_regularizer': regularizers.serialize(self.gamma_regularizer),

|

| 143 |

+

'beta_constraint': constraints.serialize(self.beta_constraint),

|

| 144 |

+

'gamma_constraint': constraints.serialize(self.gamma_constraint)

|

| 145 |

+

}

|

| 146 |

+

base_config = super(InstanceNormalization, self).get_config()

|

| 147 |

+

return dict(list(base_config.items()) + list(config.items()))

|
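
The statistics computed in `InstanceNormalization.call` can be reproduced in NumPy to see which axes are reduced. A minimal sketch, assuming NumPy only and leaving out the learned `gamma` and `beta`; `instance_norm` is a hypothetical function name, not part of the commit:

```python
import numpy as np


def instance_norm(x, axis=None, epsilon=1e-3):
    """NumPy sketch of the layer's call() without gamma/beta.

    Statistics are computed per sample: the batch axis (0) is never
    reduced, and neither is `axis` when one is given.
    """
    reduction_axes = list(range(x.ndim))
    if axis is not None:
        del reduction_axes[axis]
    del reduction_axes[0]
    axes = tuple(reduction_axes)

    mean = x.mean(axis=axes, keepdims=True)
    # epsilon is added to the standard deviation, mirroring the diff
    std = x.std(axis=axes, keepdims=True) + epsilon
    return (x - mean) / std


rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(2, 4, 4, 3))

y_all = instance_norm(x)            # axis=None: one mean/std per sample
y_chan = instance_norm(x, axis=-1)  # one mean/std per sample and channel
```

`baldgan/model.py` calls `InstanceNormalization()` with the default `axis=None`, so each sample is normalized over height, width, and channels together.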

baldgan/model.py

ADDED

|

@@ -0,0 +1,97 @@

|

| 1 |

+

import keras.backend as K

|

| 2 |

+

from keras import Model

|

| 3 |

+

from keras.layers import GlobalAveragePooling2D, multiply, LeakyReLU, Permute

|

| 4 |

+

from keras.layers import Input, Dense, Reshape, Dropout, Concatenate

|

| 5 |

+

from keras.src.layers import UpSampling2D, Conv2D

|

| 6 |

+

from .keras_contrib.layers.normalization.instancenormalization import InstanceNormalization

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

def squeeze_excite_block(input, ratio=4):

|

| 10 |

+

""" Create a channel-wise squeeze-excite block

|

| 11 |

+

Args:

|

| 12 |

+

input: input tensor

|

| 13 |

+

ratio: reduction ratio of the bottleneck Dense layer (filters // ratio units)

|

| 14 |

+

Returns: a keras tensor

|

| 15 |

+

References

|

| 16 |

+

- [Squeeze and Excitation Networks](https://arxiv.org/abs/1709.01507)

|

| 17 |

+

:param input:

|

| 18 |

+

:param ratio:

|

| 19 |

+

"""

|

| 20 |

+

init = input

|

| 21 |

+

channel_axis = 1 if K.image_data_format() == "channels_first" else -1

|

| 22 |

+

filters = init.shape[channel_axis]

|

| 23 |

+

se_shape = (1, 1, filters)

|

| 24 |

+

|

| 25 |

+

se = GlobalAveragePooling2D()(init)

|

| 26 |

+

se = Reshape(se_shape)(se)

|

| 27 |

+

se = Dense(filters // ratio, activation='relu', kernel_initializer='he_normal', use_bias=False)(se)

|

| 28 |

+

se = Dense(filters, activation='sigmoid', kernel_initializer='he_normal', use_bias=False)(se)

|

| 29 |

+

|

| 30 |

+

if K.image_data_format() == 'channels_first':

|

| 31 |

+

se = Permute((3, 1, 2))(se)

|

| 32 |

+

|

| 33 |

+

x = multiply([init, se])

|

| 34 |

+

return x

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def conv2d(layer_input, filters, f_size=4, bn=True, se=False):

|

| 38 |

+

"""Layers used during down sampling"""

|

| 39 |

+

d = Conv2D(filters, kernel_size=f_size, strides=2, padding='same')(layer_input)

|

| 40 |

+

d = LeakyReLU(alpha=0.2)(d)

|

| 41 |

+

if bn:

|

| 42 |

+

d = InstanceNormalization()(d)

|

| 43 |

+

if se:

|

| 44 |

+

d = squeeze_excite_block(d)

|

| 45 |

+

return d

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

def deconv2d(layer_input, skip_input, filters, f_size=4, dropout_rate=0):

|

| 49 |

+

"""Layers used during up sampling"""

|

| 50 |

+

u = UpSampling2D(size=2)(layer_input)

|

| 51 |

+

u = Conv2D(filters, kernel_size=f_size, strides=1, padding='same', activation='relu')(u)

|

| 52 |

+

if dropout_rate:

|

| 53 |

+

u = Dropout(dropout_rate)(u)

|

| 54 |

+

u = InstanceNormalization()(u)

|

| 55 |

+

u = Concatenate()([u, skip_input])

|

| 56 |

+

return u

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

def atrous(layer_input, filters, f_size=4, bn=True):

|

| 60 |

+

a_list = []

|

| 61 |

+

for rate in [2, 4, 8]:

|

| 62 |

+

a = Conv2D(filters, f_size, dilation_rate=rate, padding='same')(layer_input)

|

| 63 |

+

a_list.append(a)

|

| 64 |

+

a = Concatenate()(a_list)

|

| 65 |

+

a = LeakyReLU(alpha=0.2)(a)

|

| 66 |

+

if bn:

|

| 67 |

+

a = InstanceNormalization()(a)

|

| 68 |

+

return a

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

def buildModel():

|

| 72 |

+

K.set_learning_phase(0)

|

| 73 |

+

|

| 74 |

+

# Image input

|

| 75 |

+

d0 = Input(shape=(256, 256, 3))

|

| 76 |

+

|

| 77 |

+

gf = 64

|

| 78 |

+

# Down sampling

|

| 79 |

+

d1 = conv2d(d0, gf, bn=False, se=True)

|

| 80 |

+

d2 = conv2d(d1, gf * 2, se=True)

|

| 81 |

+

d3 = conv2d(d2, gf * 4, se=True)

|

| 82 |

+

d4 = conv2d(d3, gf * 8)

|

| 83 |

+

d5 = conv2d(d4, gf * 8)

|

| 84 |

+

|

| 85 |

+

a1 = atrous(d5, gf * 8)

|

| 86 |

+

|

| 87 |

+

# Up sampling

|

| 88 |

+

u3 = deconv2d(a1, d4, gf * 8)

|

| 89 |

+

u4 = deconv2d(u3, d3, gf * 4)

|

| 90 |

+

u5 = deconv2d(u4, d2, gf * 2)

|

| 91 |

+

u6 = deconv2d(u5, d1, gf)

|

| 92 |

+

|

| 93 |

+

u7 = UpSampling2D(size=2)(u6)

|

| 94 |

+

|

| 95 |

+

output_img = Conv2D(3, kernel_size=4, strides=1, padding='same', activation='tanh')(u7)

|

| 96 |

+

|

| 97 |

+

return Model(d0, output_img)

|
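
The tensor sizes flowing through `buildModel()` follow from the stride-2 convolutions, the three concatenated dilated convolutions, and the skip connections. A minimal sketch that traces them in plain Python, assuming square inputs whose side is a power of two; `generator_shapes` is a hypothetical helper, not part of the commit:

```python
def generator_shapes(size=256, gf=64):
    """Trace (side length, channels) for each named tensor in buildModel()."""
    shapes = {"d0": (size, 3)}
    s = size

    # conv2d(): five stride-2 convolutions with 'same' padding halve the side
    down = [gf, gf * 2, gf * 4, gf * 8, gf * 8]
    for i, filters in enumerate(down, start=1):
        s //= 2
        shapes[f"d{i}"] = (s, filters)

    # atrous(): three dilated convs (rates 2, 4, 8) concatenated on channels
    shapes["a1"] = (s, 3 * gf * 8)

    # deconv2d(): upsample x2, conv to `filters`, then concatenate the skip
    ups = [("u3", gf * 8, "d4"), ("u4", gf * 4, "d3"),
           ("u5", gf * 2, "d2"), ("u6", gf, "d1")]
    channels = shapes["a1"][1]
    for name, filters, skip in ups:
        s *= 2
        skip_side, skip_channels = shapes[skip]
        assert skip_side == s, "skip tensor must match the upsampled side"
        channels = filters + skip_channels
        shapes[name] = (s, channels)

    # final UpSampling2D followed by a 3-channel tanh convolution
    s *= 2
    shapes["u7"] = (s, channels)
    shapes["output"] = (s, 3)
    return shapes


shapes = generator_shapes()
```

With the defaults in the diff, the bottleneck is 8x8 and the output returns to the 256x256x3 shape of the input, which is what lets `put_face_back` warp the prediction with the inverse of the alignment matrix.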

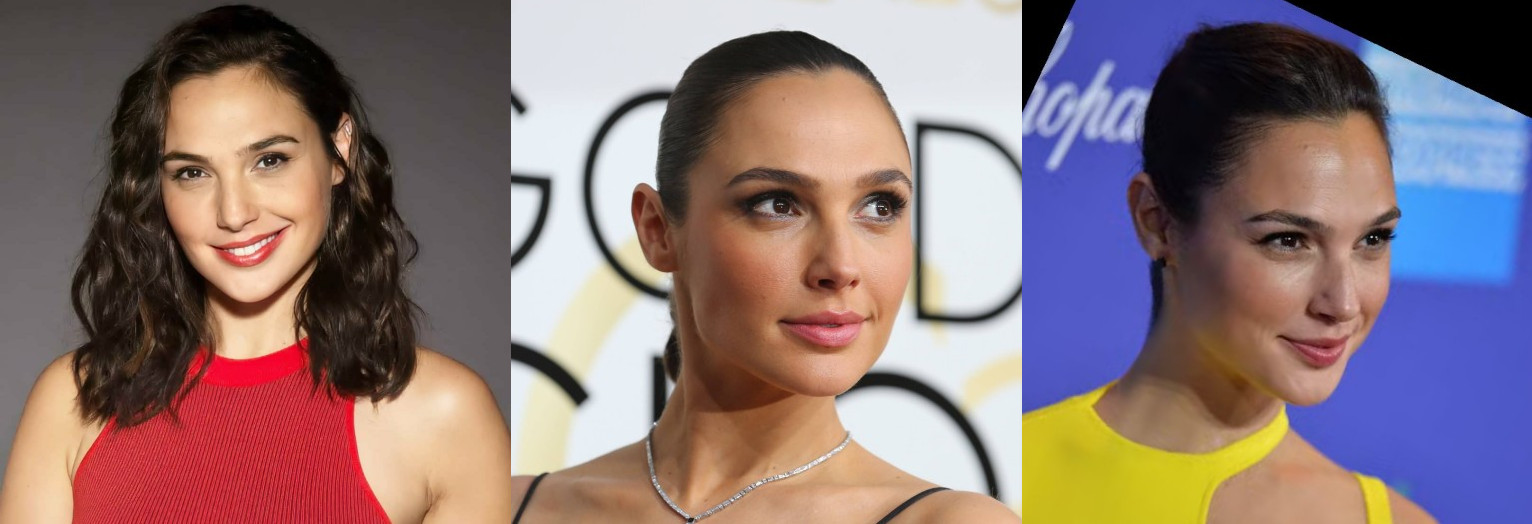

examples/01.jpg

ADDED

|

Git LFS Details

|

examples/02.jpg

ADDED

|

Git LFS Details

|

examples/03.jpg

ADDED

|

Git LFS Details

|

examples/04.jpg

ADDED

|

Git LFS Details

|

requirements.txt

ADDED

|

@@ -0,0 +1,6 @@

|

| 1 |

+

retina-face

|

| 2 |

+

keras~=2.15.0

|

| 3 |

+

pillow~=10.1.0

|

| 4 |

+

opencv-python~=4.8.1.78

|

| 5 |

+

numpy~=1.26.2

|

| 6 |

+

scikit-image~=0.22.0

|