Runtime error

update interactive gradio demo

- .gitattributes +38 -0

- app.py +97 -4

- gpt_helper.py +570 -0

- images/.gitattributes +1 -0

- images/semantic/category/1/1.png +3 -0

- images/semantic/category/1/2.png +3 -0

- images/semantic/category/1/3.png +3 -0

- images/semantic/category/1/4.png +3 -0

- images/semantic/category/1/5.png +3 -0

- images/semantic/category/2/1.png +3 -0

- images/semantic/category/2/2.png +3 -0

- images/semantic/category/2/3.png +3 -0

- images/semantic/category/2/4.png +3 -0

- images/semantic/category/2/5.png +3 -0

- images/semantic/color/1.png +3 -0

- images/semantic/color/2.png +3 -0

- images/semantic/color/3.png +3 -0

- images/semantic/color/4.png +3 -0

- images/semantic/shape/1.png +3 -0

- images/semantic/shape/2.png +3 -0

- images/semantic/shape/3.png +3 -0

- images/semantic/shape/4.png +3 -0

- images/semantic/shape/5.png +3 -0

- images/spatial-pattern/diagonal/1.png +3 -0

- images/spatial-pattern/diagonal/2.png +3 -0

- images/spatial-pattern/diagonal/3.png +3 -0

- images/spatial-pattern/diagonal/4.png +3 -0

- images/spatial-pattern/horizontal/1.png +3 -0

- images/spatial-pattern/horizontal/2.png +3 -0

- images/spatial-pattern/horizontal/3.png +3 -0

- images/spatial-pattern/horizontal/4.png +3 -0

- images/spatial-pattern/horizontal/5.png +3 -0

- images/spatial-pattern/quadrant/1.png +3 -0

- images/spatial-pattern/quadrant/2.png +3 -0

- images/spatial-pattern/quadrant/3.png +3 -0

- images/spatial-pattern/quadrant/4.png +3 -0

- images/spatial-pattern/quadrant/5.png +3 -0

- images/spatial-pattern/vertical/1.png +3 -0

- images/spatial-pattern/vertical/2.png +3 -0

- images/spatial-pattern/vertical/3.png +3 -0

- images/spatial-pattern/vertical/4.png +3 -0

- images/spatial-pattern/vertical/5.png +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,41 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

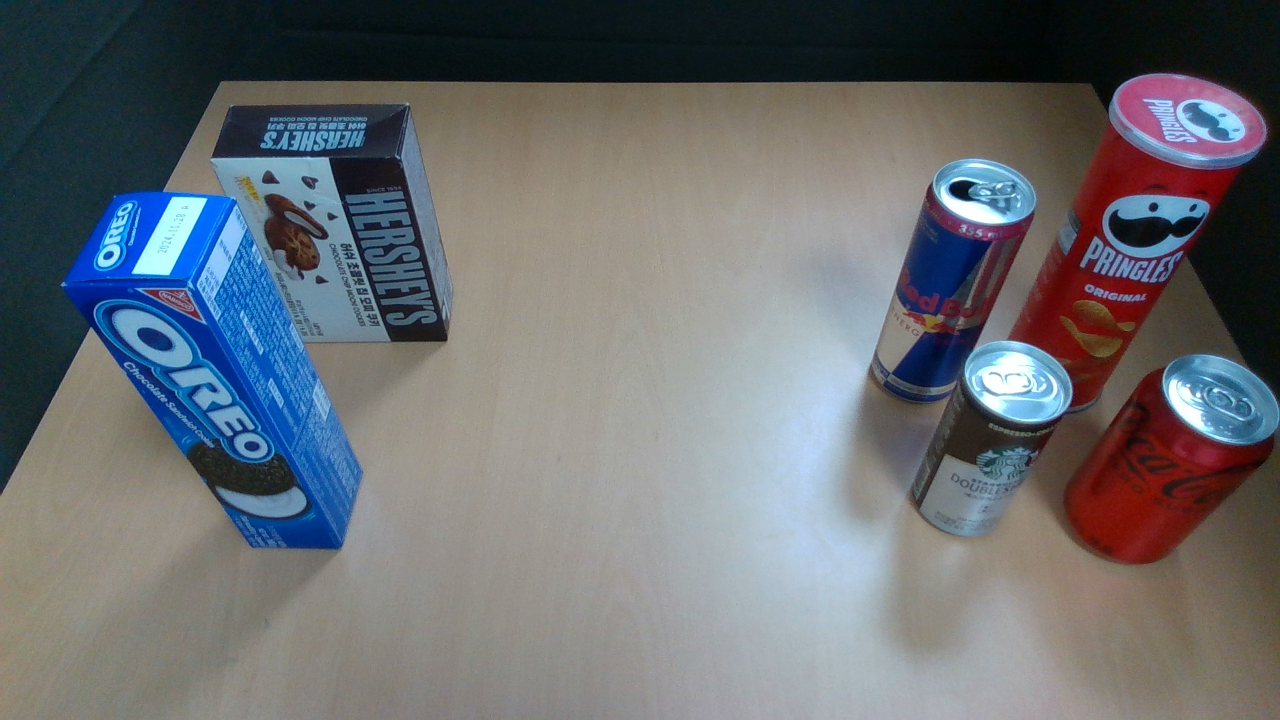

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

images/semantic/category/1/1.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

images/semantic/category/1/2.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

images/semantic/category/1/3.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

images/semantic/category/1/4.png filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

images/semantic/category/1/5.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

images/semantic/category/2/1.png filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

images/semantic/category/2/2.png filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

images/semantic/category/2/3.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

images/semantic/category/2/4.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

images/semantic/category/2/5.png filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

images/semantic/color/1.png filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

images/semantic/color/2.png filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

images/semantic/color/3.png filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

images/semantic/color/4.png filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

images/semantic/shape/1.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

images/semantic/shape/2.png filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

images/semantic/shape/3.png filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

images/semantic/shape/4.png filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

images/semantic/shape/5.png filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

images/spatial-pattern/diagonal/1.png filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

images/spatial-pattern/diagonal/2.png filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

images/spatial-pattern/diagonal/3.png filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

images/spatial-pattern/diagonal/4.png filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

images/spatial-pattern/horizontal/1.png filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

images/spatial-pattern/horizontal/2.png filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

images/spatial-pattern/horizontal/3.png filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

images/spatial-pattern/horizontal/4.png filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

images/spatial-pattern/horizontal/5.png filter=lfs diff=lfs merge=lfs -text

|

| 64 |

+

images/spatial-pattern/quadrant/1.png filter=lfs diff=lfs merge=lfs -text

|

| 65 |

+

images/spatial-pattern/quadrant/2.png filter=lfs diff=lfs merge=lfs -text

|

| 66 |

+

images/spatial-pattern/quadrant/3.png filter=lfs diff=lfs merge=lfs -text

|

| 67 |

+

images/spatial-pattern/quadrant/4.png filter=lfs diff=lfs merge=lfs -text

|

| 68 |

+

images/spatial-pattern/quadrant/5.png filter=lfs diff=lfs merge=lfs -text

|

| 69 |

+

images/spatial-pattern/vertical/1.png filter=lfs diff=lfs merge=lfs -text

|

| 70 |

+

images/spatial-pattern/vertical/2.png filter=lfs diff=lfs merge=lfs -text

|

| 71 |

+

images/spatial-pattern/vertical/3.png filter=lfs diff=lfs merge=lfs -text

|

| 72 |

+

images/spatial-pattern/vertical/4.png filter=lfs diff=lfs merge=lfs -text

|

| 73 |

+

images/spatial-pattern/vertical/5.png filter=lfs diff=lfs merge=lfs -text

|

app.py

CHANGED

```diff
@@ -1,7 +1,100 @@
+#%%
+import os
+import openai
 import gradio as gr
+import sys
+sys.path.append('./')
+from gpt_helper import GPT4VisionClass, response_to_json
 
-
-
+# Placeholder for the model variable and the confirmation text
+model = None
+model_status = "Model is not initialized."
+
+def initialize_model(api_key):
+    global model, model_status
+    if model is None:  # Initialize the model only if it hasn't been already
+        model = GPT4VisionClass(key=api_key, max_tokens=1024, temperature=0.9,
+                                gpt_model="gpt-4-vision-preview",
+                                role_msg="You are a helpful agent with vision capabilities; do not respond to objects not depicted in images.")
+        model_status = "Model initialized successfully with the provided API key."
+    else:
+        model_status = "Model has already been initialized."
+    return model_status
+
+def add_text(state, query_text, image_paths=None, images=None):
+    if model is None:
+        return state, [("Error", "Model is not initialized. Please enter your OpenAI API Key.")]
+    images = image_paths if image_paths is not None else images
+    response_interaction = model.chat(query_text=query_text, image_paths=image_paths, images=None,
+                                      PRINT_USER_MSG=False, PRINT_GPT_OUTPUT=False,
+                                      RESET_CHAT=False, RETURN_RESPONSE=True, VISUALIZE=False, DETAIL='high')
+    result = model._get_response_content()
+    state.append((query_text, result))
+    return state, state
+
+def scenario_button_clicked(scenario_name):
+    print(f"Scenario clicked: {scenario_name}")
+    return f"Scenario clicked: {scenario_name}"
+
+if __name__ == "__main__":
+    # Define image paths for each subcategory under the main categories
+    image_paths = {
+        "Semantic Preference": {
+            "Color Preference": "./images/semantic/color/4.png",
+            "Shape Preference": "./images/semantic/shape/5.png",
+            "Category Preference: Fruits and Beverages ": "./images/semantic/category/1/5.png",
+            "Category Preference: Beverages and Snacks": "./images/semantic/category/2/5.png",
+        },
+        "Spatial Pattern Preference": {
+            "Vertical Line": "./images/spatial-pattern/vertical/5.png",
+            "Horizontal Line": "./images/spatial-pattern/horizontal/5.png",
+            "Diagonal Line": "./images/spatial-pattern/diagonal/4.png",
+            "Quadrants": "./images/spatial-pattern/quadrant/5.png",
+        },
+    }
+
+    with gr.Blocks() as demo:
+        ######## Introduction for the demo
+        with gr.Column():
+            gr.Markdown("""
+<div style='text-align: center;'>
+<span style='font-size: 32px; font-weight: bold;'>[Running Examples] <span style='color: #FF9300;'>C</span>hain-<span style='color: #FF9300;'>o</span>f-<span style='color: #FF9300;'>V</span>isual-<span style='color: #FF9300;'>R</span>esiduals</span>
+</div>
+""")
+            gr.Markdown("""
+In this paper, we focus on the problem of inferring underlying human preferences from a sequence of raw visual observations in tabletop manipulation environments with a variety of object types, named **V**isual **P**reference **I**nference (**VPI**).
+To facilitate visual reasoning in the context of manipulation, we introduce the <span style='color: #FF9300;'>C</span>hain-<span style='color: #FF9300;'>o</span>f-<span style='color: #FF9300;'>V</span>isual-<span style='color: #FF9300;'>R</span>esiduals</span> (<span style='color: #FF9300;'>CoVR</span>) method. <span style='color: #FF9300;'>CoVR</span> employs a prompting mechanism
+""")
+
+        with gr.Row():
+            for category, scenarios in image_paths.items():
+                with gr.Column():
+                    gr.Markdown(f"## {category}")
+                    with gr.Row(wrap=True):
+                        for scenario, img_path in scenarios.items():
+                            with gr.Column(layout='horizontal', variant='panel'):
+                                gr.Image(value=img_path, tool=None).style(width='33%', margin='5px')
+                                gr.Button(scenario, onclick=lambda x=scenario: scenario_button_clicked(x)).style(width='33%', margin='5px')
+
+        ######## Input OpenAI API Key and display initialization result
+        with gr.Row():  # Use gr.Row for horizontal layout
+            # API Key Input
+            with gr.Column():
+                openai_gpt4_key = gr.Textbox(label="OpenAI GPT4 Key", type="password", placeholder="sk..",
+                                             info="You have to provide your own GPT4 keys for this app to function properly")
+                initialize_button = gr.Button("Initialize Model")
+            # Initialization Button and Result Display
+            with gr.Column():
+                model_status_text = gr.Text(label="Initialize API Result", info="The result of the model initialization will be displayed here.")
+                initialize_button.click(initialize_model, inputs=[openai_gpt4_key], outputs=[model_status_text])
+
+        ######## Chatbot
+        chatbot = gr.Chatbot(elem_id="chatbot")
+        state = gr.State([])
+        with gr.Row():
+            query_text = gr.Textbox(show_label=False, placeholder="Enter text and press enter, or upload an image").style(container=False)
+        query_text.submit(add_text, inputs=[state, query_text], outputs=[state, chatbot])
+        query_text.submit(lambda: "", inputs=None, outputs=query_text)
+
+    demo.launch(share=True, inline=True)
 
-iface = gr.Interface(fn=greet, inputs="text", outputs="text")
-iface.launch(share=True)
```

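The scenario gallery in `app.py` creates one button per scenario inside a loop and binds the scenario name through a default argument (`lambda x=scenario: ...`). The default argument matters: a plain closure looks `scenario` up when the callback runs, after the loop has finished, so every button would report the last scenario. Separately, `onclick` is not a documented `gr.Button` parameter (Gradio wires events through methods such as `button.click(fn, inputs, outputs)`), and the `.style()` helper and the `tool` argument of `gr.Image` were removed in Gradio 4, so depending on the installed Gradio version these calls may be what triggers the Space's runtime error. The plain-Python sketch below shows only the binding behaviour; `make_callbacks` is a hypothetical helper, not part of the app.

```python
# Sketch: why the scenario buttons bind their name via a default argument.
# make_callbacks is a hypothetical helper; in app.py the lambdas are created inline.

def scenario_button_clicked(scenario_name):
    return f"Scenario clicked: {scenario_name}"

def make_callbacks(scenarios, bind_default=True):
    """Create one zero-argument callback per scenario, as the gallery loop does."""
    callbacks = []
    for scenario in scenarios:
        if bind_default:
            # The default value is evaluated now, capturing this iteration's scenario.
            callbacks.append(lambda x=scenario: scenario_button_clicked(x))
        else:
            # The closure looks `scenario` up later, after the loop has finished.
            callbacks.append(lambda: scenario_button_clicked(scenario))
    return callbacks

scenarios = ["Vertical Line", "Horizontal Line", "Diagonal Line", "Quadrants"]
bound = [cb() for cb in make_callbacks(scenarios)]
late = [cb() for cb in make_callbacks(scenarios, bind_default=False)]
print(bound[0])  # Scenario clicked: Vertical Line
print(late[0])   # Scenario clicked: Quadrants
```

In a Gradio Blocks app, each bound callback would then be attached with something like `btn.click(fn=cb, inputs=None, outputs=status_box)`, where `status_box` stands for a hypothetical output component.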

gpt_helper.py

ADDED

@@ -0,0 +1,570 @@

```python
#%%
import math
from matplotlib import gridspec
import matplotlib.pyplot as plt
import numpy as np
import urllib.request
from PIL import Image
import io
import re
import copy
import os
import cv2
import base64
from io import BytesIO
import requests
import openai
from tenacity import retry, stop_after_attempt, wait_fixed
from IPython.display import Markdown,display
from rich.console import Console
import json
import os
import sys

#%%
def visualize_subplots(images, cols=3):
    """
    Visualize a list of images.

    Parameters:
    images (list): List of images. Each image can be a path to an image file or a numpy array.
    cols (int): Number of columns in the image grid.
    """
    imgs_to_show = []

    # Load images if they are paths, or directly use them if they are numpy arrays
    for img in images:
        if isinstance(img, str):  # Assuming it's a file path
            img_data = plt.imread(img)
        elif isinstance(img, np.ndarray):  # Assuming it's a numpy array
            img_data = img
        else:
            raise ValueError("Images should be either file paths or numpy arrays.")
        imgs_to_show.append(img_data)

    N = len(imgs_to_show)
    if N == 0:
        print("No images to display.")
        return

    rows = int(math.ceil(N / cols))
    gs = gridspec.GridSpec(rows, cols)
    fig = plt.figure(figsize=(cols * 4, rows * 4))

    for n in range(N):
        ax = fig.add_subplot(gs[n])
        ax.imshow(imgs_to_show[n])
        ax.set_title(f"Image {n + 1}")
        ax.axis('off')

    fig.tight_layout()
    plt.show()

def set_openai_api_key_from_txt(key_path='./key.txt',VERBOSE=True):
    """
    Set OpenAI API Key from a txt file
    """
    with open(key_path, 'r') as f:
        OPENAI_API_KEY = f.read().strip()  # drop the trailing newline, which would corrupt the key
    openai.api_key = OPENAI_API_KEY
    if VERBOSE:
        print ("OpenAI API Key Ready from [%s]."%(key_path))
```


```python
#%%
class GPT4VisionClass:
    def __init__(
        self,
        gpt_model: str = "gpt-4-vision-preview",
        role_msg: str = "You are a helpful agent with vision capabilities; do not respond to objects not depicted in images.",
        # key_path='../key/rilab_key.txt',
        key=None,
        max_tokens = 512, temperature = 0.9, n = 1, stop = [], VERBOSE=True,
        image_max_size:int = 512,
    ):
        self.gpt_model = gpt_model
        self.role_msg = role_msg
        self.messages = [{"role": "system", "content": f"{role_msg}"}]
        self.init_messages = [{"role": "system", "content": f"{role_msg}"}]
        self.history = [{"role": "system", "content": f"{role_msg}"}]
        self.image_max_size = image_max_size

        # GPT-4 parameters
        self.max_tokens = max_tokens
        self.temperature = temperature
        self.n = n
        self.stop = stop
        self.VERBOSE = VERBOSE
        # chat() prints through self.console even when VERBOSE is False, so always create it
        self.console = Console()
        self.response = None
        self.image_token_count = 0

        self._setup_client_with_key(key)
        # self._setup_client(key_path)

    def _setup_client_with_key(self, key):
        if self.VERBOSE:
            self.console.print(f"[bold cyan]api key:[%s][/bold cyan]" % (key))

        OPENAI_API_KEY = key
        self.client = openai.OpenAI(api_key=OPENAI_API_KEY)

        if self.VERBOSE:
            self.console.print(
                "[bold cyan]Chat agent using [%s] initialized with the following role:[%s][/bold cyan]"
                % (self.gpt_model, self.role_msg)
            )

    def _setup_client(self, key_path):
        if self.VERBOSE:
            self.console.print(f"[bold cyan]key_path:[%s][/bold cyan]" % (key_path))

        with open(key_path, "r") as f:
            OPENAI_API_KEY = f.read().strip()  # drop the trailing newline
        self.client = openai.OpenAI(api_key=OPENAI_API_KEY)

        if self.VERBOSE:
            self.console.print(
                "[bold cyan]Chat agent using [%s] initialized with the following role:[%s][/bold cyan]"
                % (self.gpt_model, self.role_msg)
            )

    def _backup_chat(self):
        self.init_messages = copy.copy(self.messages)

    def _get_response_content(self):
        if self.response:
            return self.response.choices[0].message.content
        else:
            return None

    def _get_response_status(self):
        if self.response:
            # finish_reason is an attribute of the choice, not of the message
            return self.response.choices[0].finish_reason
        else:
            return None

    def _encode_image_path(self, image_path):
        # with open(image_path, "rb") as image_file:
        image_pil = Image.open(image_path)
        image_pil.thumbnail(
            (self.image_max_size, self.image_max_size)
        )
        image_pil_rgb = image_pil.convert("RGB")
        # change pil to base64 string
        img_buf = io.BytesIO()
        image_pil_rgb.save(img_buf, format="PNG")
        return base64.b64encode(img_buf.getvalue()).decode('utf-8')

    def _encode_image(self, image):
        """
        Save the image to a temporary file and encode it to base64
        """
        # save Image:PIL to temp file; OpenCV expects BGR channel order, so convert from RGB first
        cv2.imwrite("temp.jpg", cv2.cvtColor(np.array(image.convert("RGB")), cv2.COLOR_RGB2BGR))
        with open("temp.jpg", "rb") as image_file:
            encoded_image = base64.b64encode(image_file.read()).decode('utf-8')
        os.remove("temp.jpg")
        return encoded_image
```

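The two encoders return different formats: `_encode_image_path` saves PNG bytes, while `_encode_image` writes a JPEG file, so the `data:` URL sent to the API should name the matching MIME type. A minimal standard-library sketch, assuming the raw encoded bytes are already available; `to_data_url` is a hypothetical helper, not part of `gpt_helper.py`, and it recognizes only the PNG and JPEG signatures these encoders can produce.

```python
import base64

# Magic-number prefixes for the two formats the helper's encoders produce.
_SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "image/png",
    b"\xff\xd8\xff": "image/jpeg",
}

def to_data_url(image_bytes: bytes) -> str:
    """Build a base64 data URL whose MIME type matches the encoded bytes."""
    for signature, mime in _SIGNATURES.items():
        if image_bytes.startswith(signature):
            encoded = base64.b64encode(image_bytes).decode("utf-8")
            return f"data:{mime};base64,{encoded}"
    raise ValueError("Unrecognized image format; expected PNG or JPEG bytes.")

png_header = b"\x89PNG\r\n\x1a\n" + b"\x00" * 8
url = to_data_url(png_header)
print(url.split(",")[0])  # data:image/png;base64
```

Sniffing the signature keeps the MIME label tied to the bytes actually produced, rather than to a hard-coded string that can drift from the encoder.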

```python
    def _count_image_tokens(self, width, height):
        h = math.ceil(height / 512)  # `ceil` alone is undefined; math is imported at the top
        w = math.ceil(width / 512)
        n = w * h
        total = 85 + 170 * n
        return total
```

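`_count_image_tokens` charges 85 base tokens plus 170 tokens per 512 px tile of whatever size it is given. OpenAI's vision documentation for `gpt-4-vision-preview` describes a fuller rule for `detail="high"`: the image is first fitted inside a 2048 x 2048 square, then scaled so that its shortest side is 768 px, and only then tiled; `detail="low"` costs a flat 85 tokens. The sketch below follows that description, assuming images are only ever downscaled (never enlarged) and that partial tiles round up; server-side rounding may differ. `estimate_image_tokens` is a hypothetical helper. Under this rule, the thumbnails that `chat()` actually sends (capped at `image_max_size`, 512 by default) cost a single tile, even though the `image_paths` branch counts tokens at the original image size.

```python
import math

def estimate_image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Estimate vision input tokens following OpenAI's published tiling rule (sketch)."""
    if detail == "low":
        return 85
    # 1) Fit inside a 2048 x 2048 square, preserving aspect ratio (assumed: downscale only).
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # 2) Scale so the shortest side is 768 px (assumed: only when it is larger).
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # 3) Count 512 px tiles; each costs 170 tokens, plus 85 base tokens.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return 85 + 170 * tiles

print(estimate_image_tokens(1024, 1024))         # 765
print(estimate_image_tokens(2048, 4096))         # 1105
print(estimate_image_tokens(512, 384))           # 255
print(estimate_image_tokens(4096, 8192, "low"))  # 85
```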

```python
    def set_common_prompt(self, common_prompt):
        self.messages.append({"role": "system", "content": common_prompt})

    # @retry(stop=stop_after_attempt(10), wait=wait_fixed(5))
    def chat(
        self,
        query_text,
        image_paths=None, images=None, APPEND=True,  # None (not []) so the `images` branch is reachable
        PRINT_USER_MSG=True,
        PRINT_GPT_OUTPUT=True,
        RESET_CHAT=False,
        RETURN_RESPONSE=True,
        MAX_TOKENS = 512,
        VISUALIZE = False,
        DETAIL = "auto",
        CROP = None,
    ):
        """
        image_paths: list of image paths
        images: list of images
        Provide either image_paths or images, not both.
        """
        if DETAIL:
            self.console.print(f"[bold cyan]DETAIL:[/bold cyan] {DETAIL}")
        self.detail = DETAIL
        content = [{"type": "text", "text": query_text}]
        content_image_not_encoded = [{"type": "text", "text": query_text}]
        # Prepare the history temp
        if image_paths is not None:
            local_imgs = []
            for image_path_idx, image_path in enumerate(image_paths):
                with Image.open(image_path) as img:
                    width, height = img.size
                    if CROP:
                        img = img.crop(CROP)
                        width, height = img.size
                    # convert PIL to numpy array
                    local_imgs.append(np.array(img))
                self.image_token_count += self._count_image_tokens(width, height)

                print(f"[{image_path_idx}/{len(image_paths)}] image_path: {image_path}")
                base64_image = self._encode_image_path(image_path)
                image_content = {
                    "type": "image_url",
                    "image_url": {
                        "url": f"data:image/png;base64,{base64_image}",  # _encode_image_path emits PNG
                        "detail": self.detail
                    }
                }
                image_content_in_numpy_array = {
                    "type": "image_numpy",
                    "image": np.array(Image.open(image_path))
                }
                content.append(image_content)
                content_image_not_encoded.append(image_content_in_numpy_array)
        elif images is not None:
            local_imgs = []
            for image_idx, image in enumerate(images):
                image_pil = Image.fromarray(image)
                if CROP:
                    image_pil = image_pil.crop(CROP)
                local_imgs.append(image_pil)
                # width, height = image_pil.size
                image_pil.thumbnail(
                    (self.image_max_size, self.image_max_size)
                )
                width, height = image_pil.size
                self.image_token_count += self._count_image_tokens(width, height)
                self.console.print(f"[deep_sky_blue3][{image_idx+1}/{len(images)}] Image provided: [Original]: {image.shape}, [Downsize]: {image_pil.size}[/deep_sky_blue3]")
                base64_image = self._encode_image(image_pil)
                image_content = {
                    "type": "image_url",
                    "image_url": {
                        "url": f"data:image/jpeg;base64,{base64_image}",
                        "detail": self.detail
                    }
                }
                image_content_in_numpy_array = {
                    "type": "image_numpy",
                    "image": image
                }
                content.append(image_content)
                content_image_not_encoded.append(image_content_in_numpy_array)
        else:
            self.console.print("[bold red]Neither image_paths nor images are provided.[/bold red]")

        if VISUALIZE:
            if image_paths:
                self.console.print("[deep_sky_blue3][VISUALIZE][/deep_sky_blue3]")
                if CROP:
                    visualize_subplots(local_imgs)
                else:
                    visualize_subplots(image_paths)
            elif images:
                self.console.print("[deep_sky_blue3][VISUALIZE][/deep_sky_blue3]")
                if CROP:
                    local_imgs = np.array(local_imgs)
                    visualize_subplots(local_imgs)
                else:
                    visualize_subplots(images)

        self.messages.append({"role": "user", "content": content})
        self.history.append({"role": "user", "content": content_image_not_encoded})
        payload = self.create_payload(model=self.gpt_model)
        self.response = self.client.chat.completions.create(**payload)

        if PRINT_USER_MSG:
            self.console.print("[deep_sky_blue3][USER_MSG][/deep_sky_blue3]")
            print(query_text)
        if PRINT_GPT_OUTPUT:
            self.console.print("[spring_green4][GPT_OUTPUT][/spring_green4]")
            print(self._get_response_content())
        # Reset (copy, so that later appends do not mutate init_messages)
        if RESET_CHAT:
            self.messages = copy.deepcopy(self.init_messages)
        # Return
        if RETURN_RESPONSE:
            return self._get_response_content()
```

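`chat()` builds one user message whose `content` is a list of parts: a `text` part followed by one `image_url` part per image, each carrying a base64 data URL and a `detail` level. `create_payload` then wraps the running `self.messages` list with the sampling parameters and adds `stop` only when it is non-empty. Note that `chat()` appends only the user turn; in the Chat Completions format, earlier assistant replies are normally appended as `{"role": "assistant", ...}` messages so that later turns can refer to them. The pure-Python sketch below mirrors that structure without calling the API; `build_user_message` and `build_payload` are hypothetical helpers, and the data URL and reply text are placeholders.

```python
import copy

def build_user_message(query_text, data_urls, detail="auto"):
    """One user turn: a text part followed by one image_url part per image."""
    content = [{"type": "text", "text": query_text}]
    for url in data_urls:
        content.append({"type": "image_url", "image_url": {"url": url, "detail": detail}})
    return {"role": "user", "content": content}

def build_payload(model, messages, max_tokens=512, temperature=0.9, n=1, stop=()):
    """Mirror of create_payload: 'stop' is included only when non-empty."""
    payload = {
        "model": model,
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "n": n,
    }
    if len(stop) > 0:
        payload["stop"] = list(stop)
    return payload

system = {"role": "system", "content": "You are a helpful agent with vision capabilities."}
init_messages = [system]
messages = copy.deepcopy(init_messages)  # a copy, so appends never touch init_messages
messages.append(build_user_message("Which objects moved?", ["data:image/png;base64,AAAA"], detail="high"))
# Keep the assistant reply in the history so follow-up questions have context.
messages.append({"role": "assistant", "content": "<assistant reply>"})

payload = build_payload("gpt-4-vision-preview", messages)
print(len(payload["messages"]), "stop" in payload)  # 3 False
```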

```python
    @retry(stop=stop_after_attempt(10), wait=wait_fixed(5))
    def chat_multiple_images(self, image_paths, query_text, model="gpt-4-vision-preview", max_tokens=300):
        messages = [
            {
                "role": "user",
                "content": [{"type": "text", "text": query_text}]
            }
        ]
        for image_path in image_paths:
            # these are file paths, so use the path encoder (which returns PNG)
            base64_image = self._encode_image_path(image_path)
            messages[0]["content"].append(
                {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{base64_image}"}}
            )
        response = self.client.chat.completions.create(
            model=model,
            messages=messages,
            max_tokens=max_tokens
        )
        return response
```

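`chat_multiple_images` and `generate_image` are wrapped in tenacity's `@retry(stop=stop_after_attempt(10), wait=wait_fixed(5))`: a failing call is re-invoked after a fixed 5 s pause, up to 10 attempts in total, whereas `chat()` has the decorator commented out, so its API errors propagate immediately. To illustrate those semantics only, here is a standard-library sketch of a fixed-wait retry decorator; `retry_fixed` is hypothetical and not a drop-in replacement for tenacity, which by default raises a `RetryError` wrapping the last exception once attempts run out, while this sketch re-raises the last exception itself.

```python
import functools
import time

def retry_fixed(attempts=10, wait_seconds=5.0):
    """Re-invoke the wrapped function on any exception, pausing a fixed time between tries."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == attempts:
                        raise  # out of attempts: surface the last error
                    time.sleep(wait_seconds)
        return wrapper
    return decorator

calls = {"n": 0}

@retry_fixed(attempts=3, wait_seconds=0)
def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "ok"

print(flaky(), calls["n"])  # ok 3
```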

| 316 |

+

@retry(stop=stop_after_attempt(10), wait=wait_fixed(5))

|

| 317 |

+

def generate_image(self, prompt, size="1024x1024", quality="standard", n=1):

|

| 318 |

+

response = self.client.images.generate(

|

| 319 |

+

model="dall-e-3",

|

| 320 |

+

prompt=prompt,

|

| 321 |

+

size=size,

|

| 322 |

+

quality=quality,

|

| 323 |

+

n=n

|

| 324 |

+

)

|

| 325 |

+

return response

    def visualize_image(self, image_response):
        image_url = image_response.data[0].url
        # Open the URL and convert the image to a NumPy array
        with urllib.request.urlopen(image_url) as url:
            img = Image.open(url)
            img_array = np.array(img)

        plt.imshow(img_array)
        plt.axis('off')
        plt.show()

    def create_payload(self, model):
        payload = {
            "model": model,
            "messages": self.messages,
            "max_tokens": self.max_tokens,
            "temperature": self.temperature,
            "n": self.n
        }
        if len(self.stop) > 0:
            payload["stop"] = self.stop
        return payload

    def save_interaction(self, data, file_path: str = "./scripts/interaction_history.json"):
        """
        Save the chat history to a JSON file.
        Text and image-URL entries are written as-is; NumPy image arrays ("image_numpy")
        are converted to nested lists so they can be serialized.
        """
        self.history = data.copy()
        history_to_save = []
        for entry in self.history:
            entry_to_save = {
                "role": entry["role"],
                "content": []
            }
            # Check if 'content' is a string or a list
            if isinstance(entry["content"], str):
                entry_to_save["content"].append({"type": "text", "text": entry["content"]})
            elif isinstance(entry["content"], list):
                for content in entry["content"]:
                    if content["type"] == "text":
                        entry_to_save["content"].append(content)
                    elif content["type"] == "image_numpy":
                        entry_to_save["content"].append({"type": "image_numpy", "image": content["image"].tolist()})
                    elif content["type"] == "image_url":
                        entry_to_save["content"].append(content)
            history_to_save.append(entry_to_save)
        with open(file_path, "w") as file:
            json.dump(history_to_save, file, indent=4)

        if self.VERBOSE:
            self.console.print(f"[bold green]Chat history saved to {file_path}[/bold green]")

    def get_total_token(self):
        """
        Return the total token count reported for the most recent response.
        """
        if self.VERBOSE:
            self.console.print(f"[bold cyan]Total token used: {self.response.usage.total_tokens}[/bold cyan]")
        return self.response.usage.total_tokens

    def get_image_token(self):
        """
        Return the number of image tokens used.
        """
        if self.VERBOSE:
            self.console.print(f"[bold cyan]Image token used: {self.image_token_count}[/bold cyan]")
        return self.image_token_count

    def reset_tokens(self):
        """
        Reset the total and image token counts.
        """
        self.response.usage.total_tokens = 0
        self.image_token_count = 0
        if self.VERBOSE:
            self.console.print("[bold cyan]Total and image token counts reset[/bold cyan]")

from math import ceil

def count_image_tokens(width: int, height: int):
    # Vision token cost: 85 base tokens + 170 tokens per 512 x 512 tile.
    # Assumes width/height are the dimensions after any API-side resizing.
    h = ceil(height / 512)
    w = ceil(width / 512)
    n = w * h
    total = 85 + 170 * n
    return total

def printmd(string):
    display(Markdown(string))

def extract_quoted_words(string):
    quoted_words = re.findall(r'"([^"]*)"', string)
    return quoted_words

def response_to_json(response):
    # Remove the markdown code-fence wrapper (```json ... ```) if present.
    # str.strip('```json\n') would strip any of those characters, so remove exact prefixes/suffixes.
    response_strip = response.strip().removeprefix("```json").removeprefix("```")
    response_strip = response_strip.removesuffix("```")
    # Convert the cleaned string to a JSON object
    error_message = ""
    try:
        response_json = json.loads(response_strip)
    except json.JSONDecodeError as e:
        response_json = None
        error_message = str(e)

    return response_json, error_message

def match_objects(response_object_names, original_object_names, type_conversion):
    matched_objects = []

    for res_obj_name in response_object_names:
        components = res_obj_name.split('_')
        converted_components = set()

        # Applying type conversion and creating a unique set of components
        for comp in components:
            converted_comp = type_conversion.get(comp, comp)
            converted_components.add(converted_comp)
        # Check if the unique set of converted components is in any of the original object names
        for original in original_object_names:
            if all(converted_comp in original for converted_comp in converted_components):
                matched_objects.append(original)
                break
        else:
            # for/else: reached only if no original name matched (no break), so ask the user
            print(f"No match found for {res_obj_name}")
            print(f"Type manually in the set of {original_object_names}:")
            matched_objects.append(input())

    return matched_objects

def parse_and_get_action(response_json, option_idx, original_objects, type_conversion):
    func_call_list = []
    action = response_json["options"][option_idx-1]["action"]

    # Splitting actions correctly if there are multiple actions
    if isinstance(action, str):
        # Split on "),", then restore ")" only where the split removed it;
        # the last call already ends with ")" and must not get a second one.
        actions = [a if a.endswith(')') else a + ')' for a in (part.strip() for part in action.split('),')) if a]
    elif isinstance(action, list):
        actions = action
    else:
        raise ValueError("Action must be a string or a list of strings")

    for act in actions:
        # Handle special cases; none-action / done-action
        if act in ["move_object(None, None)", "set_done()"]:
            func_call_list.append(f"{act}")
            continue

        # Regular action processing
        func_name, args = act.split('(', 1)
        args = args.rstrip(')')
        args_list = args.split(', ')
        new_args = []

        for arg in args_list:
            arg_parts = arg.split('_')
            # Applying type conversion to each part of arg
            converted_arg_parts = [type_conversion.get(part, part) for part in arg_parts]
            matched_name = match_objects(["_".join(converted_arg_parts)], original_objects, type_conversion)
            if matched_name:
                arg = matched_name[0]

            new_args.append(f'"{arg}"')

        func_call = f"{func_name}({', '.join(new_args)})"
        func_call_list.append(func_call)

    return func_call_list

def parse_actions_to_executable_strings(response_json, option_idx, env):
    actions = response_json["options"][option_idx - 1]["actions"]
    executable_strings = []
    stored_results = {}

    for action in actions:
        function_name = action["function"]
        arguments = action["arguments"]

        # Preparing the arguments for the function call
        prepared_args = []
        for arg in arguments:
            if arg == "None":  # Handling the case where the argument is "None"
                prepared_args.append(None)
            elif arg in stored_results:
                # Use the variable name directly
                prepared_args.append(stored_results[arg])
            else:
                # Format the argument as a string or use as is
                prepared_arg = f'"{arg}"' if isinstance(arg, str) else arg
                prepared_args.append(prepared_arg)

        # Format the executable string
        if "store_result_as" in action:
            result_var = action["store_result_as"]
            exec_str = f'{result_var} = env.{function_name}({", ".join(map(str, prepared_args))})'
            stored_results[result_var] = result_var  # Store the variable name for later use
        else:
            exec_str = f'env.{function_name}({", ".join(map(str, prepared_args))})'

        executable_strings.append(exec_str)

    return executable_strings

def extract_arguments(response_json):
    # Pattern for the first move_object(...) call in an action; group 1 captures its arguments
    pattern = r'move_object\(([^)]+)\)'

    # List to hold extracted arguments
    extracted_arguments = []

    # Iterate over each option in response_json
    for option in response_json.get("options", []):
        action = option.get("action", "")
        match = re.search(pattern, action)

        if match:
            # Extract the content inside parentheses and split by comma
            arguments = match.group(1)
            args = [arg.strip() for arg in arguments.split(',')]
            extracted_arguments.append(args)

    return extracted_arguments

def decode_image(base64_image_string):
    """
    Decodes a Base64 encoded image string and returns it as a NumPy array.

    Parameters:
    base64_image_string (str): A Base64 encoded image string.

    Returns:
    numpy.ndarray: A NumPy array representing the image if successful, None otherwise.
    """
    # Remove Data URI scheme if present
    if "," in base64_image_string:
        base64_image_string = base64_image_string.split(',')[1]

    try:
        image_data = base64.b64decode(base64_image_string)
        image = Image.open(BytesIO(image_data))
        return np.array(image)
    except Exception as e:
        print(f"An error occurred: {e}")
        return None
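The example images in this Space are PNG files, but `chat_multiple_images` labels every attachment `data:image/jpeg`. A minimal sketch that builds the same single-message payload while deriving each MIME type from the file extension with the standard-library `mimetypes` module. `build_image_message` is a hypothetical helper, not part of gpt_helper.py, and it reads the raw file bytes itself rather than calling the (not shown) `_encode_image` method.

```python
import base64
import mimetypes
from pathlib import Path


def build_image_message(query_text, image_paths):
    """Build one user message holding the query text plus each image as a data URI.

    Hypothetical helper: same message layout as chat_multiple_images, but the
    MIME type comes from each file's extension instead of being hard-coded.
    """
    content = [{"type": "text", "text": query_text}]
    for path in image_paths:
        mime, _ = mimetypes.guess_type(str(path))
        if mime is None or not mime.startswith("image/"):
            raise ValueError(f"Cannot infer an image MIME type for {path}")
        # Base64-encode the raw file bytes for the data URI.
        encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
        content.append(
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}}
        )
    return [{"role": "user", "content": content}]
```

The returned list has the same shape as the `messages` argument that `chat_multiple_images` passes to `client.chat.completions.create`.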

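`count_image_tokens` tiles whatever dimensions it receives. OpenAI's vision guide describes a resize for high-detail images before tiling: fit inside 2048 x 2048, then scale so the shorter side is 768 px. The sketch below assumes that rule, applies it before counting 512-px tiles, and (as an additional assumption) only ever scales down. `estimate_high_detail_tokens` is a hypothetical name, and rounding to whole pixels is a guess at how the API handles fractional sizes.

```python
from math import ceil


def estimate_high_detail_tokens(width: int, height: int) -> int:
    """Approximate token cost of one image sent with detail="high".

    Assumed rule: fit the image inside 2048 x 2048, shrink it so the shorter
    side is at most 768 px, then charge 85 base tokens plus 170 per
    512 x 512 tile. This sketch never upscales.
    """
    # Step 1: fit within a 2048 x 2048 square, keeping the aspect ratio.
    scale = min(1.0, 2048 / max(width, height))
    width, height = round(width * scale), round(height * scale)
    # Step 2: shrink so the shorter side is at most 768 px.
    scale = min(1.0, 768 / min(width, height))
    width, height = round(width * scale), round(height * scale)
    # Step 3: tile exactly as count_image_tokens does.
    tiles = ceil(width / 512) * ceil(height / 512)
    return 85 + 170 * tiles
```

For example, `count_image_tokens(2048, 4096)` gives 5525, while this sketch gives 1105, because the image is first reduced to 768 x 1536 (6 tiles).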
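`parse_and_get_action` splits an action string on `'),'` and then has to restore the closing parenthesis on each piece. Assuming arguments never contain parentheses themselves, as in the `move_object(None, None)` and `set_done()` cases handled there, a regular expression can extract each call whole, so nothing needs restoring. A minimal sketch under that assumption; `split_calls` and `split_call` are hypothetical helpers.

```python
import re

# One call = an identifier, "(", anything except parentheses, ")".
# Assumes arguments never contain nested parentheses.
CALL_PATTERN = re.compile(r"\w+\([^()]*\)")


def split_calls(action: str) -> list[str]:
    """Return each function-call substring of `action`, in order."""
    return CALL_PATTERN.findall(action)


def split_call(call: str) -> tuple[str, list[str]]:
    """Split 'f(a, b)' into ('f', ['a', 'b']); 'f()' gives ('f', [])."""
    name, _, rest = call.partition("(")
    inner = rest[:-1].strip()  # drop the final ")"
    args = [arg.strip() for arg in inner.split(",")] if inner else []
    return name.strip(), args
```

`split_call` returns raw argument strings; the object-name matching done by `match_objects` would still apply afterwards.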

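`parse_actions_to_executable_strings` returns source strings such as `env.move_object(...)` that are meant to be executed afterwards (for example with `exec`). If the goal is only to run the actions against `env`, the same JSON can be dispatched with `getattr`, which avoids evaluating model-generated text as Python. A sketch assuming the `function` / `arguments` / `store_result_as` schema read by that function; `run_actions` is hypothetical, and the refusal of underscore-prefixed names is an added safety choice.

```python
def run_actions(actions, env):
    """Call env methods described by action dicts; return the stored results.

    Each action is assumed to look like
    {"function": "name", "arguments": [...], "store_result_as": "var"},
    where "store_result_as" is optional.
    """
    stored_results = {}
    for action in actions:
        name = action["function"]
        # Refuse private/dunder attributes so only public env methods are callable.
        if name.startswith("_"):
            raise ValueError(f"Refusing to call private attribute {name!r}")
        method = getattr(env, name)  # raises AttributeError for unknown functions
        args = []
        for arg in action["arguments"]:
            if arg == "None":
                args.append(None)  # same "None" convention as the string-building version
            elif isinstance(arg, str) and arg in stored_results:
                args.append(stored_results[arg])  # pass the stored object, not its name
            else:
                args.append(arg)
        result = method(*args)
        if "store_result_as" in action:
            stored_results[action["store_result_as"]] = result
    return stored_results
```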
images/.gitattributes

ADDED

@@ -0,0 +1 @@

*.png filter=lfs diff=lfs merge=lfs -text

images/semantic/category/1/1.png

ADDED

images/semantic/category/1/2.png

ADDED

images/semantic/category/1/3.png

ADDED

images/semantic/category/1/4.png

ADDED

images/semantic/category/1/5.png

ADDED

images/semantic/category/2/1.png

ADDED

images/semantic/category/2/2.png

ADDED

images/semantic/category/2/3.png

ADDED

images/semantic/category/2/4.png

ADDED

images/semantic/category/2/5.png

ADDED

images/semantic/color/1.png

ADDED

images/semantic/color/2.png

ADDED

images/semantic/color/3.png

ADDED

images/semantic/color/4.png

ADDED

images/semantic/shape/1.png

ADDED

images/semantic/shape/2.png

ADDED

images/semantic/shape/3.png

ADDED

images/semantic/shape/4.png

ADDED

images/semantic/shape/5.png

ADDED

images/spatial-pattern/diagonal/1.png

ADDED

images/spatial-pattern/diagonal/2.png

ADDED

images/spatial-pattern/diagonal/3.png

ADDED

images/spatial-pattern/diagonal/4.png

ADDED

images/spatial-pattern/horizontal/1.png

ADDED

images/spatial-pattern/horizontal/2.png

ADDED

images/spatial-pattern/horizontal/3.png

ADDED

images/spatial-pattern/horizontal/4.png

ADDED

images/spatial-pattern/horizontal/5.png

ADDED

images/spatial-pattern/quadrant/1.png

ADDED

images/spatial-pattern/quadrant/2.png

ADDED

images/spatial-pattern/quadrant/3.png

ADDED

images/spatial-pattern/quadrant/4.png

ADDED

images/spatial-pattern/quadrant/5.png

ADDED

images/spatial-pattern/vertical/1.png

ADDED

images/spatial-pattern/vertical/2.png

ADDED

images/spatial-pattern/vertical/3.png

ADDED

images/spatial-pattern/vertical/4.png

ADDED

images/spatial-pattern/vertical/5.png

ADDED
