+

+ +

+

+

+

+

+

+

+

+ +

+

+ +

+ +

+ +

+ +

+ +

+ +

+

+

+

+ +

+ +

+ +

+ +

+ +

+ +

+

+

+

+

+

+

+

+

+

+ +

+

+

+

+

+

+

+

+

+

+

+ +

+ +

+

+

+

+ +

+

+

+

+

+

+

+

+ +

+ +

+

+

+

+

+

+ +

+ +

+

+

+

+

+ +

+ +

+ +

+

+

+

+

+

+

+

+

+

+

+

+ +

+

+

+

+

+

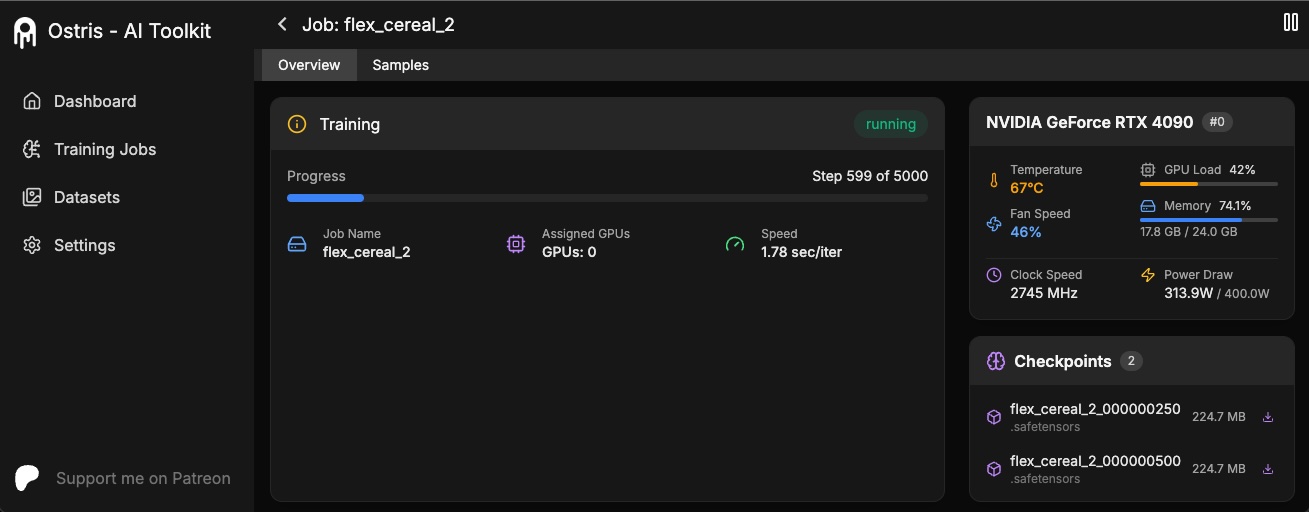

+The AI Toolkit UI is a web interface for the AI Toolkit. It allows you to easily start, stop, and monitor jobs. It also allows you to easily train models with a few clicks. It also allows you to set a token for the UI to prevent unauthorized access so it is mostly safe to run on an exposed server.

+

+## Running the UI

+

+Requirements:

+- Node.js > 18

+

+The UI does not need to be kept running for the jobs to run. It is only needed to start/stop/monitor jobs. The commands below

+will install / update the UI and it's dependencies and start the UI.

+

+```bash

+cd ui

+npm run build_and_start

+```

+

+You can now access the UI at `http://localhost:8675` or `http://

+

+The AI Toolkit UI is a web interface for the AI Toolkit. It allows you to easily start, stop, and monitor jobs. It also allows you to easily train models with a few clicks. It also allows you to set a token for the UI to prevent unauthorized access so it is mostly safe to run on an exposed server.

+

+## Running the UI

+

+Requirements:

+- Node.js > 18

+

+The UI does not need to be kept running for the jobs to run. It is only needed to start/stop/monitor jobs. The commands below

+will install / update the UI and it's dependencies and start the UI.

+

+```bash

+cd ui

+npm run build_and_start

+```

+

+You can now access the UI at `http://localhost:8675` or `http://You can optionally add a custom caption for each image (or use an AI model for this). [trigger] will represent your concept sentence/trigger word.