Update app.py

app.py (changed)

|

@@ -49,22 +49,20 @@ output.style(grid=2, height="")

|

|

| 49 |

|

| 50 |

description = \

|

| 51 |

"""

|

| 52 |

-

<p style='text-align: center;'>

|

|

|

|

|

|

|

|

|

|

| 53 |

|

| 54 |

-

|

|

|

|

| 55 |

|

| 56 |

-

<img id='visitor-badge' alt='visitor badge' src='https://visitor-badge.glitch.me/badge?page_id=gradio-blocks.sd-img-variations' style='display: inline-block' /><br />

|

| 57 |

-

Generate variations on an input image using a fine-tuned version of Stable Diffusion.

|

| 58 |

-

Trained by [Justin Pinkney](https://www.justinpinkney.com) ([@Buntworthy](https://twitter.com/Buntworthy)) at [Lambda](https://lambdalabs.com/)

|

| 59 |

-

This version has been ported to 🤗 Diffusers library, see more details on how to use this version in the [Lambda Diffusers repo](https://github.com/LambdaLabsML/lambda-diffusers).

|

| 60 |

-

|

| 61 |

-

__For the original training code see [this repo](https://github.com/justinpinkney/stable-diffusion).__

|

| 62 |

-

|

| 63 |

</p>

|

| 64 |

"""

|

| 65 |

|

| 66 |

article = \

|

| 67 |

"""

|

|

|

|

| 68 |

## How does this work?

|

| 69 |

The normal Stable Diffusion model is trained to be conditioned on text input. This version has had the original text encoder (from CLIP) removed, and replaced with

|

| 70 |

the CLIP _image_ encoder instead. So instead of generating images based a text input, images are generated to match CLIP's embedding of the image.

|

|

|

|

| 49 |

|

| 50 |

description = \

|

| 51 |

"""

|

| 52 |

+

<p style='text-align: center;'>This demo is running on CPU. Working version fixed by Sylvain <a href='https://twitter.com/fffiloni' target='_blank'>@fffiloni</a>. You'll get 4 images variations. NSFW filters enabled.<img id='visitor-badge' alt='visitor badge' src='https://visitor-badge.glitch.me/badge?page_id=gradio-blocks.sd-img-variations' style='display: inline-block' /><br />

|

| 53 |

+

Generate variations on an input image using a fine-tuned version of Stable Diffusion.<br />

|

| 54 |

+

Trained by <a href='https://www.justinpinkney.com' target='_blank'>Justin Pinkney</a> (<a href='https://twitter.com/Buntworthy' target='_blank'>@Buntworthy</a>) at <a href='https://lambdalabs.com/' target='_blank'>Lambda</a><br />

|

| 55 |

+

This version has been ported to 🤗 Diffusers library, see more details on how to use this version in the <a href='https://github.com/LambdaLabsML/lambda-diffusers' target='_blank'>Lambda Diffusers repo</a>.<br />

|

| 56 |

|

| 57 |

+

For the original training code see <a href='https://github.com/justinpinkney/stable-diffusion' target='_blank'>this repo</a>.

|

| 58 |

+

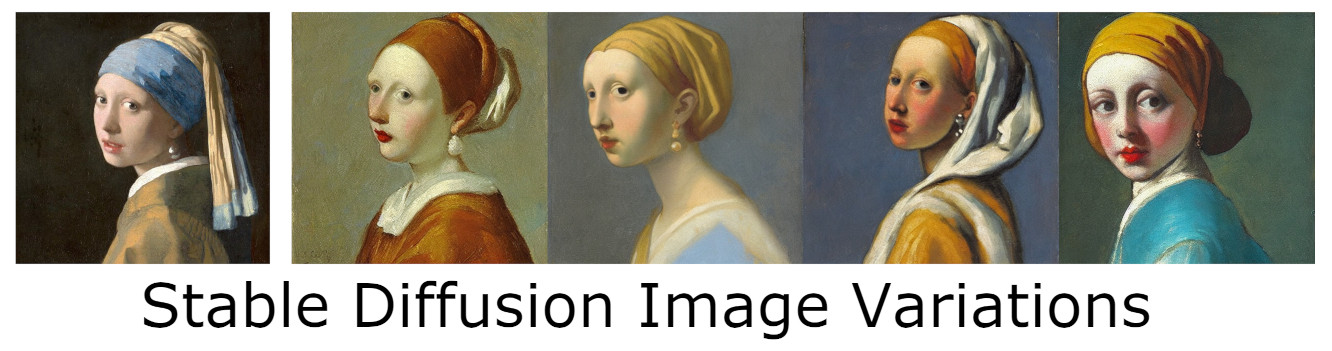

<img src='https://raw.githubusercontent.com/justinpinkney/stable-diffusion/main/assets/im-vars-thin.jpg'/>

|

| 59 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 60 |

</p>

|

| 61 |

"""

|

| 62 |

|

| 63 |

article = \

|

| 64 |

"""

|

| 65 |

+

|

| 66 |

## How does this work?

|

| 67 |

The normal Stable Diffusion model is trained to be conditioned on text input. This version has had the original text encoder (from CLIP) removed, and replaced with

|

| 68 |

the CLIP _image_ encoder instead. So instead of generating images based a text input, images are generated to match CLIP's embedding of the image.

|
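In this change the Markdown links in `description` are rewritten by hand as HTML anchors carrying `target='_blank'`, and each line gets an explicit `<br />`. If more lines need the same treatment, a small helper can do the conversion. This is a minimal sketch, assuming only simple `[label](url)` links (no `]` inside the label, no `)` or whitespace inside the URL); `md_links_to_html` is a hypothetical helper, not part of Gradio or of this app.

```python
import re

# Matches a simple Markdown inline link: [label](url).
# Assumption: the label contains no "]" and the URL contains no ")" or whitespace.
MD_LINK = re.compile(r"\[([^\]]+)\]\(([^)\s]+)\)")


def md_links_to_html(text: str) -> str:
    """Rewrite every [label](url) in `text` as an anchor that opens in a new tab."""
    return MD_LINK.sub(r"<a href='\2' target='_blank'>\1</a>", text)


if __name__ == "__main__":
    old = ("Trained by [Justin Pinkney](https://www.justinpinkney.com) "
           "([@Buntworthy](https://twitter.com/Buntworthy)) at [Lambda](https://lambdalabs.com/)")
    # Append the explicit line break the new description uses after each line.
    print(md_links_to_html(old) + "<br />")
```

Run on the removed `Trained by ...` line, this prints the same text as the added `+Trained by ...` line in the hunk. Text outside the links, such as the surrounding parentheses, is left untouched.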