Spaces:

Runtime error

Runtime error

Commit

·

d2a8669

1

Parent(s):

255e550

First commit

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- Homepage.py +59 -0

- README.md +8 -8

- ali.graphml +220 -0

- imgs/fairup_architecture.jpeg +0 -0

- imgs/fairup_architecture.png +0 -0

- imgs/logo_ovgu_dtdh.png +0 -0

- imgs/logo_ovgu_fin_en.jpg +0 -0

- nba.graphml +0 -0

- pages/1_Framework.py +796 -0

- pages/ovgu_logo.png +0 -0

- pages/setup.sh +7 -0

- presets/Presets.py +259 -0

- presets/__pycache__/FairGNN_preset.cpython-310.pyc +0 -0

- presets/__pycache__/Presets.cpython-310.pyc +0 -0

- requirements.txt +3 -0

- src/__pycache__/fainress_component.cpython-37.pyc +0 -0

- src/__pycache__/fainress_component.cpython-39.pyc +0 -0

- src/__pycache__/utils.cpython-37.pyc +0 -0

- src/__pycache__/utils.cpython-39.pyc +0 -0

- src/aif360/README.md +0 -0

- src/aif360/__init__.py +4 -0

- src/aif360/__pycache__/__init__.cpython-37.pyc +0 -0

- src/aif360/__pycache__/__init__.cpython-39.pyc +0 -0

- src/aif360/__pycache__/decorating_metaclass.cpython-37.pyc +0 -0

- src/aif360/__pycache__/decorating_metaclass.cpython-39.pyc +0 -0

- src/aif360/aif360-r/.Rbuildignore +12 -0

- src/aif360/aif360-r/.gitignore +7 -0

- src/aif360/aif360-r/CODEOFCONDUCT.md +44 -0

- src/aif360/aif360-r/CONTRIBUTING.md +30 -0

- src/aif360/aif360-r/DESCRIPTION +24 -0

- src/aif360/aif360-r/LICENSE.md +194 -0

- src/aif360/aif360-r/NAMESPACE +24 -0

- src/aif360/aif360-r/R/binary_label_dataset_metric.R +43 -0

- src/aif360/aif360-r/R/classification_metric.R +114 -0

- src/aif360/aif360-r/R/dataset.R +71 -0

- src/aif360/aif360-r/R/dataset_metric.R +42 -0

- src/aif360/aif360-r/R/import.R +23 -0

- src/aif360/aif360-r/R/inprocessing_adversarial_debiasing.R +73 -0

- src/aif360/aif360-r/R/inprocessing_prejudice_remover.R +26 -0

- src/aif360/aif360-r/R/postprocessing_reject_option_classification.R +85 -0

- src/aif360/aif360-r/R/preprocessing_disparate_impact_remover.R +27 -0

- src/aif360/aif360-r/R/preprocessing_reweighing.R +25 -0

- src/aif360/aif360-r/R/standard_datasets.R +31 -0

- src/aif360/aif360-r/R/utils.R +89 -0

- src/aif360/aif360-r/R/zzz.R +4 -0

- src/aif360/aif360-r/README.Rmd +150 -0

- src/aif360/aif360-r/README.md +155 -0

- src/aif360/aif360-r/cran-comments.md +10 -0

- src/aif360/aif360-r/inst/examples/test.R +20 -0

- src/aif360/aif360-r/inst/extdata/actual_data.csv +21 -0

Homepage.py

ADDED

|

@@ -0,0 +1,59 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from PIL import Image

|

| 3 |

+

import os

|

| 4 |

+

|

| 5 |

+

if 'STREAMLIT_PRODUCTION' in os.environ:

|

| 6 |

+

# Running on Streamlit Sharing

|

| 7 |

+

with open('test.yml', 'r') as file:

|

| 8 |

+

environment = file.read()

|

| 9 |

+

with open('test_tmp.yml', 'w') as file:

|

| 10 |

+

file.write(environment.replace('prefix: /', ''))

|

| 11 |

+

os.system('conda env create -f test_tmp.yml')

|

| 12 |

+

os.system('source activate ./envs/$(head -1 test_tmp.yml | cut -d " " -f2)')

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

st.set_page_config(

|

| 16 |

+

page_title="Homepage",

|

| 17 |

+

layout="wide"

|

| 18 |

+

)

|

| 19 |

+

|

| 20 |

+

# Create a text box

|

| 21 |

+

#text_box = st.text_input("Enter some text:")

|

| 22 |

+

|

| 23 |

+

# Create an expander to display additional information

|

| 24 |

+

#with st.expander("More information"):

|

| 25 |

+

# st.write("This section contains additional information about the text box.")

|

| 26 |

+

|

| 27 |

+

# Display the text box

|

| 28 |

+

#st.write("You entered:", text_box)

|

| 29 |

+

|

| 30 |

+

logo_ovgu_fin = Image.open('imgs/logo_ovgu_fin_en.jpg')

|

| 31 |

+

st.image(logo_ovgu_fin)

|

| 32 |

+

|

| 33 |

+

st.title("FairUP: a Framework for Fairness Analysis of Graph Neural Network-Based User Profiling Models 🚀")

|

| 34 |

+

st.markdown("##### *Mohamed Abdelrazek, Erasmo Purificato, Ludovico Boratto, and Ernesto William De Luca*")

|

| 35 |

+

|

| 36 |

+

st.markdown("## Description")

|

| 37 |

+

st.markdown("""

|

| 38 |

+

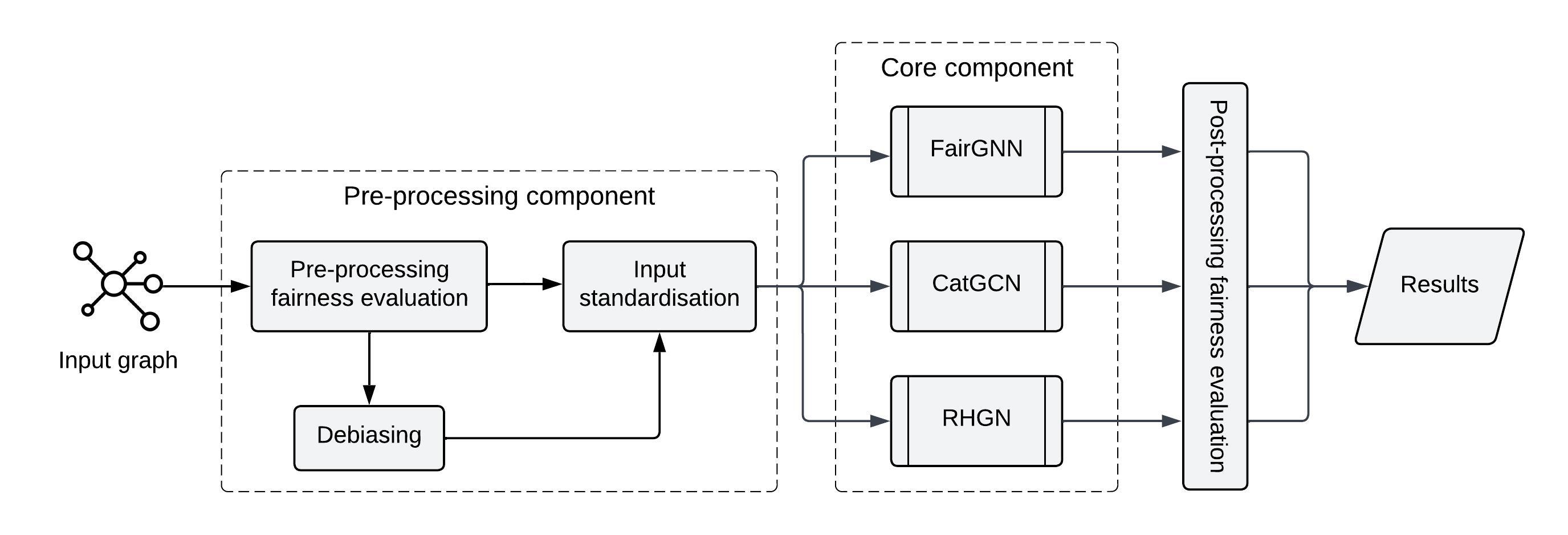

**FairUP** is a standardised framework that empowers researchers and practitioners to simultaneously analyse state-of-the-art Graph Neural Network-based models for user profiling task, in terms of classification performance and fairness metrics scores.

|

| 39 |

+

|

| 40 |

+

The framework, whose architecture is shown below, presents several components, which allow end-users to:

|

| 41 |

+

* compute the fairness of the input dataset by means of a pre-processing fairness metric, i.e. *disparate impact*;

|

| 42 |

+

* mitigate the unfairness of the dataset, if needed, by applying different debiasing methods, i.e. *sampling*, *reweighting* and *disparate impact remover*;

|

| 43 |

+

* standardise the input (a graph in Neo4J or NetworkX format) for each of the included GNNs;

|

| 44 |

+

* train one or more GNN models, specifying the parameters for each of them;

|

| 45 |

+

* evaluate post-hoc fairness by exploiting four metrics, i.e. *statistical parity*, *equal opportunity*, *overall accuracy equality*, *treatment equality*.

|

| 46 |

+

""")

|

| 47 |

+

|

| 48 |

+

# st.markdown('##### We have developed a comprehensive framework for Graph Neural Networks-based user profiling models that empowers researchers and users to simultaneously train multiple models and analyze their outcomes. This framework includes tools for mitigating bias, ensuring fairness, and increasing model interpretability. Our approach allows for the incorporation of debiasing techniques into the training process, which helps to minimize the impact of societal biases on model performance. In addition, our framework supports multiple evaluation metrics, enabling the user to compare and contrast the performance of different models.')

|

| 49 |

+

|

| 50 |

+

# Vertical space

|

| 51 |

+

st.text("")

|

| 52 |

+

|

| 53 |

+

fairup = Image.open('imgs/fairup_architecture.png')

|

| 54 |

+

st.image(fairup, caption="Logical architecture of FairUP framework")

|

| 55 |

+

|

| 56 |

+

#st.text("")

|

| 57 |

+

#st.markdown('##### The framework is divided into 3 components: the Pre-processing component, the Core component, and the Post-processing fairness evaluation component')

|

| 58 |

+

|

| 59 |

+

#st.sidebar.success("Select a page")

|

README.md

CHANGED

|

@@ -1,12 +1,12 @@

|

|

| 1 |

---

|

| 2 |

-

title: FairUP

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

-

sdk: streamlit

|

| 7 |

-

sdk_version: 1.19.0

|

| 8 |

-

app_file:

|

| 9 |

-

pinned: false

|

| 10 |

license: cc-by-4.0

|

| 11 |

---

|

| 12 |

|

|

|

|

| 1 |

---

|

| 2 |

+

title: FairUP

|

| 3 |

+

emoji: 🚀

|

| 4 |

+

colorFrom: blue

|

| 5 |

+

colorTo: green

|

| 6 |

+

sdk: streamlit

|

| 7 |

+

sdk_version: 1.19.0

|

| 8 |

+

app_file: Homepage.py

|

| 9 |

+

pinned: false

|

| 10 |

license: cc-by-4.0

|

| 11 |

---

|

| 12 |

|

ali.graphml

ADDED

|

@@ -0,0 +1,220 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<?xml version='1.0' encoding='utf-8'?>

|

| 2 |

+

<graphml xmlns="http://graphml.graphdrawing.org/xmlns" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://graphml.graphdrawing.org/xmlns http://graphml.graphdrawing.org/xmlns/1.0/graphml.xsd"><key id="d17" for="node" attr.name="price" attr.type="double"/>

|

| 3 |

+

<key id="d16" for="node" attr.name="brand" attr.type="double"/>

|

| 4 |

+

<key id="d15" for="node" attr.name="customer" attr.type="long"/>

|

| 5 |

+

<key id="d14" for="node" attr.name="campaign_id" attr.type="long"/>

|

| 6 |

+

<key id="d13" for="node" attr.name="cate_id" attr.type="long"/>

|

| 7 |

+

<key id="d12" for="node" attr.name="clk" attr.type="long"/>

|

| 8 |

+

<key id="d11" for="node" attr.name="nonclk" attr.type="long"/>

|

| 9 |

+

<key id="d10" for="node" attr.name="pid" attr.type="string"/>

|

| 10 |

+

<key id="d9" for="node" attr.name="adgroup_id" attr.type="long"/>

|

| 11 |

+

<key id="d8" for="node" attr.name="time_stamp" attr.type="long"/>

|

| 12 |

+

<key id="d7" for="node" attr.name="new_user_class_level" attr.type="double"/>

|

| 13 |

+

<key id="d6" for="node" attr.name="occupation" attr.type="double"/>

|

| 14 |

+

<key id="d5" for="node" attr.name="shopping_level" attr.type="double"/>

|

| 15 |

+

<key id="d4" for="node" attr.name="pvalue_level" attr.type="double"/>

|

| 16 |

+

<key id="d3" for="node" attr.name="age_level" attr.type="double"/>

|

| 17 |

+

<key id="d2" for="node" attr.name="final_gender_code" attr.type="double"/>

|

| 18 |

+

<key id="d1" for="node" attr.name="cms_group_id" attr.type="double"/>

|

| 19 |

+

<key id="d0" for="node" attr.name="cms_segid" attr.type="double"/>

|

| 20 |

+

<graph edgedefault="directed"><node id="523">

|

| 21 |

+

<data key="d0">5.0</data>

|

| 22 |

+

<data key="d1">2.0</data>

|

| 23 |

+

<data key="d2">2.0</data>

|

| 24 |

+

<data key="d3">2.0</data>

|

| 25 |

+

<data key="d4">1.0</data>

|

| 26 |

+

<data key="d5">3.0</data>

|

| 27 |

+

<data key="d6">1.0</data>

|

| 28 |

+

<data key="d7">2.0</data>

|

| 29 |

+

<data key="d8">1494506876</data>

|

| 30 |

+

<data key="d9">95657</data>

|

| 31 |

+

<data key="d10">430548_1007</data>

|

| 32 |

+

<data key="d11">1</data>

|

| 33 |

+

<data key="d12">0</data>

|

| 34 |

+

<data key="d13">6412</data>

|

| 35 |

+

<data key="d14">160512</data>

|

| 36 |

+

<data key="d15">82513</data>

|

| 37 |

+

<data key="d16">26994.0</data>

|

| 38 |

+

<data key="d17">619.0</data>

|

| 39 |

+

</node>

|

| 40 |

+

<node id="830671">

|

| 41 |

+

<data key="d0">34.0</data>

|

| 42 |

+

<data key="d1">4.0</data>

|

| 43 |

+

<data key="d2">2.0</data>

|

| 44 |

+

<data key="d3">4.0</data>

|

| 45 |

+

<data key="d4">3.0</data>

|

| 46 |

+

<data key="d5">3.0</data>

|

| 47 |

+

<data key="d6">0.0</data>

|

| 48 |

+

<data key="d7">3.0</data>

|

| 49 |

+

<data key="d8">1494668843</data>

|

| 50 |

+

<data key="d9">95657</data>

|

| 51 |

+

<data key="d10">430539_1007</data>

|

| 52 |

+

<data key="d11">1</data>

|

| 53 |

+

<data key="d12">0</data>

|

| 54 |

+

<data key="d13">6412</data>

|

| 55 |

+

<data key="d14">160512</data>

|

| 56 |

+

<data key="d15">82513</data>

|

| 57 |

+

<data key="d16">26994.0</data>

|

| 58 |

+

<data key="d17">619.0</data>

|

| 59 |

+

</node>

|

| 60 |

+

<node id="567632">

|

| 61 |

+

<data key="d0">66.0</data>

|

| 62 |

+

<data key="d1">9.0</data>

|

| 63 |

+

<data key="d2">1.0</data>

|

| 64 |

+

<data key="d3">3.0</data>

|

| 65 |

+

<data key="d4">2.0</data>

|

| 66 |

+

<data key="d5">3.0</data>

|

| 67 |

+

<data key="d6">0.0</data>

|

| 68 |

+

<data key="d7">2.0</data>

|

| 69 |

+

<data key="d8">1494603840</data>

|

| 70 |

+

<data key="d9">95657</data>

|

| 71 |

+

<data key="d10">430539_1007</data>

|

| 72 |

+

<data key="d11">1</data>

|

| 73 |

+

<data key="d12">0</data>

|

| 74 |

+

<data key="d13">6412</data>

|

| 75 |

+

<data key="d14">160512</data>

|

| 76 |

+

<data key="d15">82513</data>

|

| 77 |

+

<data key="d16">26994.0</data>

|

| 78 |

+

<data key="d17">619.0</data>

|

| 79 |

+

</node>

|

| 80 |

+

<node id="16333">

|

| 81 |

+

<data key="d0">91.0</data>

|

| 82 |

+

<data key="d1">11.0</data>

|

| 83 |

+

<data key="d2">1.0</data>

|

| 84 |

+

<data key="d3">5.0</data>

|

| 85 |

+

<data key="d4">2.0</data>

|

| 86 |

+

<data key="d5">3.0</data>

|

| 87 |

+

<data key="d6">0.0</data>

|

| 88 |

+

<data key="d7">2.0</data>

|

| 89 |

+

<data key="d8">1494561928</data>

|

| 90 |

+

<data key="d9">95657</data>

|

| 91 |

+

<data key="d10">430539_1007</data>

|

| 92 |

+

<data key="d11">1</data>

|

| 93 |

+

<data key="d12">0</data>

|

| 94 |

+

<data key="d13">6412</data>

|

| 95 |

+

<data key="d14">160512</data>

|

| 96 |

+

<data key="d15">82513</data>

|

| 97 |

+

<data key="d16">26994.0</data>

|

| 98 |

+

<data key="d17">619.0</data>

|

| 99 |

+

</node>

|

| 100 |

+

<node id="521847">

|

| 101 |

+

<data key="d0">20.0</data>

|

| 102 |

+

<data key="d1">3.0</data>

|

| 103 |

+

<data key="d2">2.0</data>

|

| 104 |

+

<data key="d3">3.0</data>

|

| 105 |

+

<data key="d4">2.0</data>

|

| 106 |

+

<data key="d5">3.0</data>

|

| 107 |

+

<data key="d6">0.0</data>

|

| 108 |

+

<data key="d7">3.0</data>

|

| 109 |

+

<data key="d8">1494579049</data>

|

| 110 |

+

<data key="d9">95657</data>

|

| 111 |

+

<data key="d10">430548_1007</data>

|

| 112 |

+

<data key="d11">1</data>

|

| 113 |

+

<data key="d12">0</data>

|

| 114 |

+

<data key="d13">6412</data>

|

| 115 |

+

<data key="d14">160512</data>

|

| 116 |

+

<data key="d15">82513</data>

|

| 117 |

+

<data key="d16">26994.0</data>

|

| 118 |

+

<data key="d17">619.0</data>

|

| 119 |

+

</node>

|

| 120 |

+

<node id="227111">

|

| 121 |

+

<data key="d0">8.0</data>

|

| 122 |

+

<data key="d1">2.0</data>

|

| 123 |

+

<data key="d2">2.0</data>

|

| 124 |

+

<data key="d3">2.0</data>

|

| 125 |

+

<data key="d4">2.0</data>

|

| 126 |

+

<data key="d5">3.0</data>

|

| 127 |

+

<data key="d6">0.0</data>

|

| 128 |

+

<data key="d7">3.0</data>

|

| 129 |

+

<data key="d8">1494559984</data>

|

| 130 |

+

<data key="d9">95657</data>

|

| 131 |

+

<data key="d10">430539_1007</data>

|

| 132 |

+

<data key="d11">1</data>

|

| 133 |

+

<data key="d12">0</data>

|

| 134 |

+

<data key="d13">6412</data>

|

| 135 |

+

<data key="d14">160512</data>

|

| 136 |

+

<data key="d15">82513</data>

|

| 137 |

+

<data key="d16">26994.0</data>

|

| 138 |

+

<data key="d17">619.0</data>

|

| 139 |

+

</node>

|

| 140 |

+

<node id="632984">

|

| 141 |

+

<data key="d0">89.0</data>

|

| 142 |

+

<data key="d1">11.0</data>

|

| 143 |

+

<data key="d2">1.0</data>

|

| 144 |

+

<data key="d3">5.0</data>

|

| 145 |

+

<data key="d4">1.0</data>

|

| 146 |

+

<data key="d5">3.0</data>

|

| 147 |

+

<data key="d6">0.0</data>

|

| 148 |

+

<data key="d7">4.0</data>

|

| 149 |

+

<data key="d8">1494566502</data>

|

| 150 |

+

<data key="d9">95657</data>

|

| 151 |

+

<data key="d10">430548_1007</data>

|

| 152 |

+

<data key="d11">1</data>

|

| 153 |

+

<data key="d12">0</data>

|

| 154 |

+

<data key="d13">6412</data>

|

| 155 |

+

<data key="d14">160512</data>

|

| 156 |

+

<data key="d15">82513</data>

|

| 157 |

+

<data key="d16">26994.0</data>

|

| 158 |

+

<data key="d17">619.0</data>

|

| 159 |

+

</node>

|

| 160 |

+

<node id="912028">

|

| 161 |

+

<data key="d0">20.0</data>

|

| 162 |

+

<data key="d1">3.0</data>

|

| 163 |

+

<data key="d2">2.0</data>

|

| 164 |

+

<data key="d3">3.0</data>

|

| 165 |

+

<data key="d4">2.0</data>

|

| 166 |

+

<data key="d5">3.0</data>

|

| 167 |

+

<data key="d6">0.0</data>

|

| 168 |

+

<data key="d7">3.0</data>

|

| 169 |

+

<data key="d8">1494276088</data>

|

| 170 |

+

<data key="d9">95657</data>

|

| 171 |

+

<data key="d10">430539_1007</data>

|

| 172 |

+

<data key="d11">1</data>

|

| 173 |

+

<data key="d12">0</data>

|

| 174 |

+

<data key="d13">6412</data>

|

| 175 |

+

<data key="d14">160512</data>

|

| 176 |

+

<data key="d15">82513</data>

|

| 177 |

+

<data key="d16">26994.0</data>

|

| 178 |

+

<data key="d17">619.0</data>

|

| 179 |

+

</node>

|

| 180 |

+

<node id="120208">

|

| 181 |

+

<data key="d0">77.0</data>

|

| 182 |

+

<data key="d1">10.0</data>

|

| 183 |

+

<data key="d2">1.0</data>

|

| 184 |

+

<data key="d3">4.0</data>

|

| 185 |

+

<data key="d4">1.0</data>

|

| 186 |

+

<data key="d5">3.0</data>

|

| 187 |

+

<data key="d6">0.0</data>

|

| 188 |

+

<data key="d7">2.0</data>

|

| 189 |

+

<data key="d8">1494563600</data>

|

| 190 |

+

<data key="d9">95657</data>

|

| 191 |

+

<data key="d10">430548_1007</data>

|

| 192 |

+

<data key="d11">1</data>

|

| 193 |

+

<data key="d12">0</data>

|

| 194 |

+

<data key="d13">6412</data>

|

| 195 |

+

<data key="d14">160512</data>

|

| 196 |

+

<data key="d15">82513</data>

|

| 197 |

+

<data key="d16">26994.0</data>

|

| 198 |

+

<data key="d17">619.0</data>

|

| 199 |

+

</node>

|

| 200 |

+

<node id="390080">

|

| 201 |

+

<data key="d0">6.0</data>

|

| 202 |

+

<data key="d1">2.0</data>

|

| 203 |

+

<data key="d2">2.0</data>

|

| 204 |

+

<data key="d3">2.0</data>

|

| 205 |

+

<data key="d4">2.0</data>

|

| 206 |

+

<data key="d5">2.0</data>

|

| 207 |

+

<data key="d6">0.0</data>

|

| 208 |

+

<data key="d7">4.0</data>

|

| 209 |

+

<data key="d8">1494296612</data>

|

| 210 |

+

<data key="d9">95657</data>

|

| 211 |

+

<data key="d10">430539_1007</data>

|

| 212 |

+

<data key="d11">1</data>

|

| 213 |

+

<data key="d12">0</data>

|

| 214 |

+

<data key="d13">6412</data>

|

| 215 |

+

<data key="d14">160512</data>

|

| 216 |

+

<data key="d15">82513</data>

|

| 217 |

+

<data key="d16">26994.0</data>

|

| 218 |

+

<data key="d17">619.0</data>

|

| 219 |

+

</node>

|

| 220 |

+

</graph></graphml>

|

imgs/fairup_architecture.jpeg

ADDED

|

imgs/fairup_architecture.png

ADDED

|

imgs/logo_ovgu_dtdh.png

ADDED

|

imgs/logo_ovgu_fin_en.jpg

ADDED

|

nba.graphml

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

pages/1_Framework.py

ADDED

|

@@ -0,0 +1,796 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from PIL import Image

|

| 3 |

+

import time

|

| 4 |

+

import pandas as pd

|

| 5 |

+

import os

|

| 6 |

+

import paramiko

|

| 7 |

+

import threading

|

| 8 |

+

import queue

|

| 9 |

+

import warnings

|

| 10 |

+

import re

|

| 11 |

+

import subprocess

|

| 12 |

+

from presets import Presets

|

| 13 |

+

import random

|

| 14 |

+

#from src import main

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

st.set_page_config(layout="wide")

|

| 19 |

+

st.warning('Note: We are running out with GPU problems. The GNN models are currently running on CPU and some of the Framework capabilities may not be available. We apologise for the inconvenience and we will fix that soon.', icon="⚠️")

|

| 20 |

+

|

| 21 |

+

st.header('')

|

| 22 |

+

ovgu_img = Image.open('imgs/logo_ovgu_fin_en.jpg')

|

| 23 |

+

st.image(ovgu_img)

|

| 24 |

+

st.title("FairUP: a Framework for Fairness Analysis of Graph Neural Network-Based User Profiling Models. 🚀")

|

| 25 |

+

|

| 26 |

+

warnings.filterwarnings("ignore", category=DeprecationWarning)

|

| 27 |

+

warnings.filterwarnings("ignore", category=RuntimeWarning)

|

| 28 |

+

warnings.filterwarnings("ignore")

|

| 29 |

+

|

| 30 |

+

nba_columns = ['user_id', 'SALARY', 'AGE', 'MP', 'FG', 'FGA', 'FG%', '3P', '3PA',

|

| 31 |

+

'3P%', '2P', '2PA', '2P%', 'eFG%', 'FT', 'FTA', 'FT%', 'ORB', 'DRB',

|

| 32 |

+

'TRB', 'AST', 'STL', 'BLK', 'TOV', 'PF_x', 'POINTS', 'GP', 'MPG',

|

| 33 |

+

'ORPM', 'DRPM', 'RPM', 'WINS_RPM', 'PIE', 'PACE', 'W', 'player_height',

|

| 34 |

+

'player_weight', 'country', 'C', 'PF_y', 'PF-C', 'PG', 'SF', 'SG',

|

| 35 |

+

'ATL', 'ATL/CLE', 'ATL/LAL', 'BKN', 'BKN/WSH', 'BOS', 'CHA', 'CHI',

|

| 36 |

+

'CHI/OKC', 'CLE', 'CLE/DAL', 'CLE/MIA', 'DAL', 'DAL/BKN', 'DAL/PHI',

|

| 37 |

+

'DEN', 'DEN/CHA', 'DEN/POR', 'DET', 'GS', 'GS/CHA', 'GS/SAC', 'HOU',

|

| 38 |

+

'HOU/LAL', 'HOU/MEM', 'IND', 'LAC', 'LAL', 'MEM', 'MIA', 'MIL',

|

| 39 |

+

'MIL/CHA', 'MIN', 'NO', 'NO/DAL', 'NO/MEM', 'NO/MIL', 'NO/MIN/SAC',

|

| 40 |

+

'NO/ORL', 'NO/SAC', 'NY', 'NY/PHI', 'OKC', 'ORL', 'ORL/TOR', 'PHI',

|

| 41 |

+

'PHI/OKC', 'PHX', 'POR', 'SA', 'SAC', 'TOR', 'UTAH', 'WSH']

|

| 42 |

+

|

| 43 |

+

pokec_columns = ['user_id',

|

| 44 |

+

'public',

|

| 45 |

+

'completion_percentage',

|

| 46 |

+

'gender',

|

| 47 |

+

'region',

|

| 48 |

+

'AGE',

|

| 49 |

+

'I_am_working_in_field',

|

| 50 |

+

'spoken_languages_indicator',

|

| 51 |

+

'anglicky',

|

| 52 |

+

'nemecky',

|

| 53 |

+

'rusky',

|

| 54 |

+

'francuzsky',

|

| 55 |

+

'spanielsky',

|

| 56 |

+

'taliansky',

|

| 57 |

+

'slovensky',

|

| 58 |

+

'japonsky',

|

| 59 |

+

'hobbies_indicator',

|

| 60 |

+

'priatelia',

|

| 61 |

+

'sportovanie',

|

| 62 |

+

'pocuvanie hudby',

|

| 63 |

+

'pozeranie filmov',

|

| 64 |

+

'spanie',

|

| 65 |

+

'kupalisko',

|

| 66 |

+

'party',

|

| 67 |

+

'cestovanie',

|

| 68 |

+

'kino',

|

| 69 |

+

'diskoteky',

|

| 70 |

+

'nakupovanie',

|

| 71 |

+

'tancovanie',

|

| 72 |

+

'turistika',

|

| 73 |

+

'surfovanie po webe',

|

| 74 |

+

'praca s pc',

|

| 75 |

+

'sex',

|

| 76 |

+

'pc hry',

|

| 77 |

+

'stanovanie',

|

| 78 |

+

'varenie',

|

| 79 |

+

'jedlo',

|

| 80 |

+

'fotografovanie',

|

| 81 |

+

'citanie',

|

| 82 |

+

'malovanie',

|

| 83 |

+

'chovatelstvo',

|

| 84 |

+

'domace prace',

|

| 85 |

+

'divadlo',

|

| 86 |

+

'prace okolo domu',

|

| 87 |

+

'prace v zahrade',

|

| 88 |

+

'chodenie do muzei',

|

| 89 |

+

'zberatelstvo',

|

| 90 |

+

'hackovanie',

|

| 91 |

+

'I_most_enjoy_good_food_indicator',

|

| 92 |

+

'pri telke',

|

| 93 |

+

'v dobrej restauracii',

|

| 94 |

+

'pri svieckach s partnerom',

|

| 95 |

+

'v posteli',

|

| 96 |

+

'v prirode',

|

| 97 |

+

'z partnerovho bruska',

|

| 98 |

+

'v kuchyni pri stole',

|

| 99 |

+

'pets_indicator',

|

| 100 |

+

'pes',

|

| 101 |

+

'mam psa',

|

| 102 |

+

'nemam ziadne',

|

| 103 |

+

'macka',

|

| 104 |

+

'rybky',

|

| 105 |

+

'mam macku',

|

| 106 |

+

'mam rybky',

|

| 107 |

+

'vtacik',

|

| 108 |

+

'body_type_indicator',

|

| 109 |

+

'priemerna',

|

| 110 |

+

'vysportovana',

|

| 111 |

+

'chuda',

|

| 112 |

+

'velka a pekna',

|

| 113 |

+

'tak trosku pri sebe',

|

| 114 |

+

'eye_color_indicator',

|

| 115 |

+

'hnede',

|

| 116 |

+

'modre',

|

| 117 |

+

'zelene',

|

| 118 |

+

'hair_color_indicator',

|

| 119 |

+

'cierne',

|

| 120 |

+

'blond',

|

| 121 |

+

'plave',

|

| 122 |

+

'hair_type_indicator',

|

| 123 |

+

'kratke',

|

| 124 |

+

'dlhe',

|

| 125 |

+

'rovne',

|

| 126 |

+

'po plecia',

|

| 127 |

+

'kucerave',

|

| 128 |

+

'na jezka',

|

| 129 |

+

'completed_level_of_education_indicator',

|

| 130 |

+

'stredoskolske',

|

| 131 |

+

'zakladne',

|

| 132 |

+

'vysokoskolske',

|

| 133 |

+

'ucnovske',

|

| 134 |

+

'favourite_color_indicator',

|

| 135 |

+

'modra',

|

| 136 |

+

'cierna',

|

| 137 |

+

'cervena',

|

| 138 |

+

'biela',

|

| 139 |

+

'zelena',

|

| 140 |

+

'fialova',

|

| 141 |

+

'zlta',

|

| 142 |

+

'ruzova',

|

| 143 |

+

'oranzova',

|

| 144 |

+

'hneda',

|

| 145 |

+

'relation_to_smoking_indicator',

|

| 146 |

+

'nefajcim',

|

| 147 |

+

'fajcim pravidelne',

|

| 148 |

+

'fajcim prilezitostne',

|

| 149 |

+

'uz nefajcim',

|

| 150 |

+

'relation_to_alcohol_indicator',

|

| 151 |

+

'pijem prilezitostne',

|

| 152 |

+

'abstinent',

|

| 153 |

+

'nepijem',

|

| 154 |

+

'on_pokec_i_am_looking_for_indicator',

|

| 155 |

+

'dobreho priatela',

|

| 156 |

+

'priatelku',

|

| 157 |

+

'niekoho na chatovanie',

|

| 158 |

+

'udrzujem vztahy s priatelmi',

|

| 159 |

+

'vaznu znamost',

|

| 160 |

+

'sexualneho partnera',

|

| 161 |

+

'dlhodoby seriozny vztah',

|

| 162 |

+

'love_is_for_me_indicator',

|

| 163 |

+

'nie je nic lepsie',

|

| 164 |

+

'ako byt zamilovany(a)',

|

| 165 |

+

'v laske vidim zmysel zivota',

|

| 166 |

+

'v laske som sa sklamal(a)',

|

| 167 |

+

'preto som velmi opatrny(a)',

|

| 168 |

+

'laska je zakladom vyrovnaneho sexualneho zivota',

|

| 169 |

+

'romanticka laska nie je pre mna',

|

| 170 |

+

'davam prednost realite',

|

| 171 |

+

'relation_to_casual_sex_indicator',

|

| 172 |

+

'nedokazem mat s niekym sex bez lasky',

|

| 173 |

+

'to skutocne zalezi len na okolnostiach',

|

| 174 |

+

'sex mozem mat iba s niekym',

|

| 175 |

+

'koho dobre poznam',

|

| 176 |

+

'dokazem mat sex s kymkolvek',

|

| 177 |

+

'kto dobre vyzera',

|

| 178 |

+

'my_partner_should_be_indicator',

|

| 179 |

+

'mojou chybajucou polovickou',

|

| 180 |

+

'laskou mojho zivota',

|

| 181 |

+

'moj najlepsi priatel',

|

| 182 |

+

'absolutne zodpovedny a spolahlivy',

|

| 183 |

+

'hlavne spolocensky typ',

|

| 184 |

+

'clovek',

|

| 185 |

+

'ktoreho uplne respektujem',

|

| 186 |

+

'hlavne dobry milenec',

|

| 187 |

+

'niekto',

|

| 188 |

+

'marital_status_indicator',

|

| 189 |

+

'slobodny(a)',

|

| 190 |

+

'mam vazny vztah',

|

| 191 |

+

'zenaty (vydata)',

|

| 192 |

+

'rozvedeny(a)',

|

| 193 |

+

'slobodny',

|

| 194 |

+

'relation_to_children_indicator',

|

| 195 |

+

'v buducnosti chcem mat deti',

|

| 196 |

+

'I_like_movies_indicator',

|

| 197 |

+

'komedie',

|

| 198 |

+

'akcne',

|

| 199 |

+

'horory',

|

| 200 |

+

'serialy',

|

| 201 |

+

'romanticke',

|

| 202 |

+

'rodinne',

|

| 203 |

+

'sci-fi',

|

| 204 |

+

'historicke',

|

| 205 |

+

'vojnove',

|

| 206 |

+

'zahadne',

|

| 207 |

+

'mysteriozne',

|

| 208 |

+

'dokumentarne',

|

| 209 |

+

'eroticke',

|

| 210 |

+

'dramy',

|

| 211 |

+

'fantasy',

|

| 212 |

+

'muzikaly',

|

| 213 |

+

'kasove trhaky',

|

| 214 |

+

'umelecke',

|

| 215 |

+

'alternativne',

|

| 216 |

+

'I_like_watching_movie_indicator',

|

| 217 |

+

'doma z gauca',

|

| 218 |

+

'v kine',

|

| 219 |

+

'u priatela',

|

| 220 |

+

'priatelky',

|

| 221 |

+

'I_like_music_indicator',

|

| 222 |

+

'disko',

|

| 223 |

+

'pop',

|

| 224 |

+

'rock',

|

| 225 |

+

'rap',

|

| 226 |

+

'techno',

|

| 227 |

+

'house',

|

| 228 |

+

'hitparadovky',

|

| 229 |

+

'sladaky',

|

| 230 |

+

'hip-hop',

|

| 231 |

+

'metal',

|

| 232 |

+

'soundtracky',

|

| 233 |

+

'punk',

|

| 234 |

+

'oldies',

|

| 235 |

+

'folklor a ludovky',

|

| 236 |

+

'folk a country',

|

| 237 |

+

'jazz',

|

| 238 |

+

'klasicka hudba',

|

| 239 |

+

'opery',

|

| 240 |

+

'alternativa',

|

| 241 |

+

'trance',

|

| 242 |

+

'I_mostly_like_listening_to_music_indicator',

|

| 243 |

+

'kedykolvek a kdekolvek',

|

| 244 |

+

'na posteli',

|

| 245 |

+

'pri chodzi',

|

| 246 |

+

'na dobru noc',

|

| 247 |

+

'na diskoteke',

|

| 248 |

+

's partnerom',

|

| 249 |

+

'vo vani',

|

| 250 |

+

'v aute',

|

| 251 |

+

'na koncerte',

|

| 252 |

+

'pri sexe',

|

| 253 |

+

'v praci',

|

| 254 |

+

'the_idea_of_good_evening_indicator',

|

| 255 |

+

'pozerat dobry film v tv',

|

| 256 |

+

'pocuvat dobru hudbu',

|

| 257 |

+

's kamaratmi do baru',

|

| 258 |

+

'ist do kina alebo divadla',

|

| 259 |

+

'surfovat na sieti a chatovat',

|

| 260 |

+

'ist na koncert',

|

| 261 |

+

'citat dobru knihu',

|

| 262 |

+

'nieco dobre uvarit',

|

| 263 |

+

'zhasnut svetla a meditovat',

|

| 264 |

+

'ist do posilnovne',

|

| 265 |

+

'I_like_specialties_from_kitchen_indicator',

|

| 266 |

+

'slovenskej',

|

| 267 |

+

'talianskej',

|

| 268 |

+

'cinskej',

|

| 269 |

+

'mexickej',

|

| 270 |

+

'francuzskej',

|

| 271 |

+

'greckej',

|

| 272 |

+

'morske zivocichy',

|

| 273 |

+

'vegetarianskej',

|

| 274 |

+

'japonskej',

|

| 275 |

+

'indickej',

|

| 276 |

+

'I_am_going_to_concerts_indicator',

|

| 277 |

+

'ja na koncerty nechodim',

|

| 278 |

+

'zriedkavo',

|

| 279 |

+

'my_active_sports_indicator',

|

| 280 |

+

'plavanie',

|

| 281 |

+

'futbal',

|

| 282 |

+

'kolieskove korcule',

|

| 283 |

+

'lyzovanie',

|

| 284 |

+

'korculovanie',

|

| 285 |

+

'behanie',

|

| 286 |

+

'posilnovanie',

|

| 287 |

+

'tenis',

|

| 288 |

+

'hokej',

|

| 289 |

+

'basketbal',

|

| 290 |

+

'snowboarding',

|

| 291 |

+

'pingpong',

|

| 292 |

+

'auto-moto sporty',

|

| 293 |

+

'bedminton',

|

| 294 |

+

'volejbal',

|

| 295 |

+

'aerobik',

|

| 296 |

+

'bojove sporty',

|

| 297 |

+

'hadzana',

|

| 298 |

+

'skateboarding',

|

| 299 |

+

'my_passive_sports_indicator',

|

| 300 |

+

'baseball',

|

| 301 |

+

'golf',

|

| 302 |

+

'horolezectvo',

|

| 303 |

+

'bezkovanie',

|

| 304 |

+

'surfing',

|

| 305 |

+

'I_like_books_indicator',

|

| 306 |

+

'necitam knihy',

|

| 307 |

+

'o zabave',

|

| 308 |

+

'humor',

|

| 309 |

+

'hry',

|

| 310 |

+

'historicke romany',

|

| 311 |

+

'rozpravky',

|

| 312 |

+

'odbornu literaturu',

|

| 313 |

+

'psychologicku literaturu',

|

| 314 |

+

'literaturu pre rozvoj osobnosti',

|

| 315 |

+

'cestopisy',

|

| 316 |

+

'literaturu faktu',

|

| 317 |

+

'poeziu',

|

| 318 |

+

'zivotopisne a pamate',

|

| 319 |

+

'pocitacovu literaturu',

|

| 320 |

+

'filozoficku literaturu',

|

| 321 |

+

'literaturu o umeni a architekture']

|

| 322 |

+

|

| 323 |

+

alibaba_columns = ['userid', 'final_gender_code', 'age_level', 'pvalue_level', 'occupation', 'new_user_class_level ', 'adgroup_id', 'clk', 'cate_id']

|

| 324 |

+

jd_columns = ['user_id',

|

| 325 |

+

'gender',

|

| 326 |

+

'age_range',

|

| 327 |

+

'item_id',

|

| 328 |

+

'cid1',

|

| 329 |

+

'cid2',

|

| 330 |

+

'cid3',

|

| 331 |

+

'cid1_name',

|

| 332 |

+

'cid2_name',

|

| 333 |

+

'cid3_name',

|

| 334 |

+

'brand_code',

|

| 335 |

+

'price',

|

| 336 |

+

'item_name',

|

| 337 |

+

'seg_name']

|

| 338 |

+

|

| 339 |

+

##############################

|

| 340 |

+

# Preset

|

| 341 |

+

preset_question = st.radio("Do you want to apply a preset?", ("No", "Yes"))

|

| 342 |

+

with st.expander("More information"):

|

| 343 |

+

st.write("A preset is a pre-defined parameter and model settings that can be choosen by the user to test the Framework easily.")

|

| 344 |

+

st.write("Each preset option is defined by the model name and (in brackets) the dataset which it will be trained on.")

|

| 345 |

+

if preset_question == 'Yes':

|

| 346 |

+

preset_list = ['FairGNN (NBA)', 'RHGN (Alibaba)', 'CatGCN (Alibaba)']

|

| 347 |

+

preset = st.selectbox('Select Preset', preset_list)

|

| 348 |

+

# implment presets as functions?

|

| 349 |

+

if preset == 'FairGNN (NBA)':

|

| 350 |

+

model_type, predict_attr, sens_attr = Presets.FairGNN_NBA()

|

| 351 |

+

elif preset == 'RHGN (Alibaba)':

|

| 352 |

+

model_type, predict_attr, sens_attr = Presets.RHGN_Alibaba()

|

| 353 |

+

elif preset == 'CatGCN (Alibaba)':

|

| 354 |

+

model_type, predict_attr, sens_attr = Presets.CatGCN_Alibaba()

|

| 355 |

+

|

| 356 |

+

Presets.experiment_begin(model_type, predict_attr, sens_attr)

|

| 357 |

+

|

| 358 |

+

elif preset_question == 'No':

|

| 359 |

+

dataset = st.selectbox("Which dataset do you want to evaluate?", ("NBA", "Pokec-z", "Alibaba", "JD"))

|

| 360 |

+

if dataset == "NBA":

|

| 361 |

+

dataset = 'nba'

|

| 362 |

+

predict_attr = st.selectbox("Select prediction label", nba_columns)

|

| 363 |

+

sens_attr = st.selectbox("Select sensitive attribute", nba_columns)

|

| 364 |

+

elif dataset == "Pokec-z":

|

| 365 |

+

dataset = 'pokec_z'

|

| 366 |

+

predict_attr = st.selectbox("Select prediction label", pokec_columns)

|

| 367 |

+

sens_attr = st.selectbox("Select sensitive attribute", pokec_columns)

|

| 368 |

+

elif dataset == "Alibaba":

|

| 369 |

+

dataset = 'alibaba'

|

| 370 |

+

predict_attr = st.selectbox("Select prediction label", alibaba_columns)

|

| 371 |

+

sens_attr = st.selectbox("Select sensitive attribute", alibaba_columns)

|

| 372 |

+

elif dataset == 'JD':

|

| 373 |

+

dataset = 'tecent'

|

| 374 |

+

predict_attr = st.selectbox("Select prediction label", jd_columns)

|

| 375 |

+

sens_attr = st.selectbox("Select sensitive attribute", jd_columns)

|

| 376 |

+

|

| 377 |

+

|

| 378 |

+

# todo get all columns of the selected dataset and change this to a selectbox

|

| 379 |

+

#predict_attr = st.text_input("Enter the prediction label")

|

| 380 |

+

#sens_attr = st.text_input("Enter the senstive attribute")

|

| 381 |

+

def read_output(stdout, queue):

|

| 382 |

+

for line in stdout:

|

| 383 |

+

queue.put(line.strip())

|

| 384 |

+

|

| 385 |

+

def execute_command_fairness(dataset, sens_attr, predict_attr):

|

| 386 |

+

with st.spinner("Loading..."):

|

| 387 |

+

time.sleep(1)

|

| 388 |

+

#ssh = paramiko.SSHClient()

|

| 389 |

+

# Automatically add the server's host key (for the first connection only)

|

| 390 |

+

#ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())

|

| 391 |

+

|

| 392 |

+

# Connect to the remote server

|

| 393 |

+

#ssh.connect('141.44.31.206', username='abdelrazek', password='Mohamed')

|

| 394 |

+

|

| 395 |

+

#if dataset == 'nba':

|

| 396 |

+

# stdin_new, stdout_new, stderr_new = ssh.exec_command('cd /home/abdelrazek/framework-for-fairness-analysis-and-mitigation-main && /home/abdelrazek/anaconda3/envs/test/bin/python3 main.py --calc_fairness True --dataset_name {} --dataset_path ../nba.csv --special_case True --sens_attr {} --predict_attr {} --type 1'.format(dataset, sens_attr, predict_attr), get_pty=True)

|

| 397 |

+

#elif dataset == 'alibaba':

|

| 398 |

+

# stdin_new, stdout_new, stderr_new = ssh.exec_command('cd /home/abdelrazek/framework-for-fairness-analysis-and-mitigation-main && /home/abdelrazek/anaconda3/envs/test/bin/python3 main.py --calc_fairness True --dataset_name {} --dataset_path ../alibaba_small.csv --special_case True --sens_attr {} --predict_attr {} --type 1'.format(dataset, sens_attr, predict_attr), get_pty=True)

|

| 399 |

+

#elif dataset == 'tecent':

|

| 400 |

+

# stdin_new, stdout_new, stderr_new = ssh.exec_command('cd /home/abdelrazek/framework-for-fairness-analysis-and-mitigation-main && /home/abdelrazek/anaconda3/envs/test/bin/python3 main.py --calc_fairness True --dataset_name {} --dataset_path ../JD_small.csv --special_case True --sens_attr {} --predict_attr {} --type 1'.format(dataset, sens_attr, predict_attr), get_pty=True)

|

| 401 |

+

#elif dataset == 'pokec_z':

|

| 402 |

+