Commit 9cc66e2, committed by coder

Parent(s): 4d36038

primer_commit

- Home.py +105 -0

- README.md +1 -1

- core/controllers/main_process.py +30 -0

- core/controllers/pages_controller.py +132 -0

- core/estilos/about_me.css +41 -0

- core/estilos/home.css +61 -0

- core/estilos/live_demo.css +81 -0

- core/estilos/main.css +5 -0

- core/estilos/teoria.css +81 -0

- core/imagenes/result.png +0 -0

- core/imagenes/shiba.png +0 -0

- pages/Aboutme.py +90 -0

- pages/LiveDemo.py +144 -0

- pages/Teoria.py +216 -0

- requirements.txt +8 -0

Home.py

ADDED

|

@@ -0,0 +1,105 @@


| 1 |

+

from core.controllers.pages_controller import Page

|

| 2 |

+

from pages.Teoria import Teoria

|

| 3 |

+

from pages.LiveDemo import Live_Demo

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

class Home(Page):

|

| 7 |

+

variables_globales = {

|

| 8 |

+

}

|

| 9 |

+

archivos_css = ["main",

|

| 10 |

+

"home"]

|

| 11 |

+

|

| 12 |

+

def __init__(self, title=str("Bienvenido"), icon=str("🖼️"), init_page=False):

|

| 13 |

+

super().__init__()

|

| 14 |

+

if init_page:

|

| 15 |

+

self.new_page(title=title, icon=icon)

|

| 16 |

+

self.new_body(True)

|

| 17 |

+

self.init_globals(globals=self.variables_globales)

|

| 18 |

+

for archivo in self.archivos_css:

|

| 19 |

+

self.cargar_css(archivo_css=archivo)

|

| 20 |

+

|

| 21 |

+

def agregar_card_bienvenido(self, columna):

|

| 22 |

+

card_bienvenido = columna.container()

|

| 23 |

+

|

| 24 |

+

card_bienvenido.header("Sobre la clasificación de imágenes",

|

| 25 |

+

help=None)

|

| 26 |

+

card_bienvenido.markdown(unsafe_allow_html=False,

|

| 27 |

+

help=None,

|

| 28 |

+

body="""

|

| 29 |

+

La **clasificación de imágenes en visión artificial** consiste en enseñar a una computadora a **identificar la categoría general de una fotografía**, como "perro" o "coche", en lugar de analizar detalles específicos o ubicar objetos.

|

| 30 |

+

|

| 31 |

+

Este **proceso permite a la computadora** reconocer patrones y realizar **predicciones precisas en nuevas imágenes**.

|

| 32 |

+

""")

|

| 33 |

+

|

| 34 |

+

imagen_demo1, imagen_demo2 = card_bienvenido.columns(2, gap="small")

|

| 35 |

+

src_img_1 = self.imgg.open("core/imagenes/shiba.png")

|

| 36 |

+

src_img_2 = self.imgg.open("core/imagenes/result.png")

|

| 37 |

+

imagen_demo1.image(src_img_1,

|

| 38 |

+

use_column_width="auto")

|

| 39 |

+

imagen_demo2.image(src_img_2,

|

| 40 |

+

use_column_width="auto")

|

| 41 |

+

|

| 42 |

+

card_bienvenido.markdown(unsafe_allow_html=False,

|

| 43 |

+

help=None,

|

| 44 |

+

# This is achieved by training **deep learning algorithms**, such as **convolutional neural networks (CNNs)** or **Transformer**-based models. These algorithms are trained on a **large dataset** of labeled images, where each image has a **label describing** its content (for example, "cat" or "tree").

|

| 45 |

+

body="""

|

| 46 |

+

A continuación veremos cómo la librería Transformers utiliza el **modelo pre-entrenado Google/ViT**, entrenado con un conjunto de datos de más de 14 millones de imágenes, etiquetadas en más de 21,000 clases diferentes, todas con una resolución de 224x224.

|

| 47 |

+

""")

|

| 48 |

+

|

| 49 |

+

def agregar_card_teoria(self, columna):

|

| 50 |

+

card_teoria = columna.container()

|

| 51 |

+

card_teoria.header("Teoría",

|

| 52 |

+

help=None)

|

| 53 |

+

card_teoria.markdown(unsafe_allow_html=True,

|

| 54 |

+

help=None,

|

| 55 |

+

body="""<div id='texto_boton_teoria'>

|

| 56 |

+

Conoce más sobre los principios, fundamentos, personajes y avances:

|

| 57 |

+

</div>

|

| 58 |

+

""")

|

| 59 |

+

card_teoria.button("Ver más",

|

| 60 |

+

help="Botón hacia página de teoría",

|

| 61 |

+

on_click=self.hacia_teoria,

|

| 62 |

+

type="secondary",

|

| 63 |

+

use_container_width=True)

|

| 64 |

+

|

| 65 |

+

def hacia_teoria(self):

|

| 66 |

+

Teoria(init_page=True).build()

|

| 67 |

+

self.page().stop()

|

| 68 |

+

|

| 69 |

+

def agregar_card_live_demo(self, columna):

|

| 70 |

+

card_live_demo = columna.container()

|

| 71 |

+

card_live_demo.header("Demo",

|

| 72 |

+

help=None)

|

| 73 |

+

card_live_demo.markdown(unsafe_allow_html=False,

|

| 74 |

+

help=None,

|

| 75 |

+

body="""

|

| 76 |

+

Accede a la **demo** interactiva **utilizando** **transformers + google/ViT**.

|

| 77 |

+

""")

|

| 78 |

+

card_live_demo.button("Live-Demo",

|

| 79 |

+

help="Botón hacia página de live-demo",

|

| 80 |

+

on_click=self.hacia_demo,

|

| 81 |

+

type="secondary",

|

| 82 |

+

use_container_width=True)

|

| 83 |

+

|

| 84 |

+

def hacia_demo(self):

|

| 85 |

+

Live_Demo(init_page=True).build()

|

| 86 |

+

self.page().stop()

|

| 87 |

+

|

| 88 |

+

def agregar_card_about_me(self, columna):

|

| 89 |

+

card_about_me = columna.container()

|

| 90 |

+

card_about_me.header("Coder160",

|

| 91 |

+

help=None)

|

| 92 |

+

|

| 93 |

+

def build(self):

|

| 94 |

+

# sections

|

| 95 |

+

columna_bienvenido, columna_botones = self.get_body().columns(

|

| 96 |

+

[0.7, 0.3], gap="medium")

|

| 97 |

+

if self.user_logged_in():

|

| 98 |

+

self.agregar_card_bienvenido(columna_bienvenido)

|

| 99 |

+

self.agregar_card_teoria(columna_botones)

|

| 100 |

+

self.agregar_card_live_demo(columna_botones)

|

| 101 |

+

self.agregar_card_about_me(columna_botones)

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

if __name__ == "__main__":

|

| 105 |

+

Home(init_page=True).build()

|

README.md

CHANGED

|

@@ -5,7 +5,7 @@ colorFrom: yellow

|

|

| 5 |

colorTo: gray

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: 1.26.0

|

| 8 |

-

app_file:

|

| 9 |

pinned: false

|

| 10 |

license: gpl-3.0

|

| 11 |

---

|

|

|

|

| 5 |

colorTo: gray

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: 1.26.0

|

| 8 |

+

app_file: Home.py

|

| 9 |

pinned: false

|

| 10 |

license: gpl-3.0

|

| 11 |

---

|

core/controllers/main_process.py

ADDED

|

@@ -0,0 +1,30 @@


| 1 |

+

from transformers import ViTImageProcessor, ViTForImageClassification

|

| 2 |

+

from PIL import Image

|

| 3 |

+

from io import BytesIO

|

| 4 |

+

import requests

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

class Generador():

|

| 8 |

+

def __init__(self, configuraciones):

|

| 9 |

+

self.modelo = configuraciones.get('model')

|

| 10 |

+

self.tokenizer = configuraciones.get('tokenizer')

|

| 11 |

+

|

| 12 |

+

def generar_prediccion(self, imagen_bytes):

|

| 13 |

+

# @title **Practical example**

|

| 14 |

+

prediccion = None

|

| 15 |

+

try:

|

| 16 |

+

# Initialize the image processor and the model

|

| 17 |

+

procesador = ViTImageProcessor.from_pretrained(self.tokenizer)

|

| 18 |

+

modelo = ViTForImageClassification.from_pretrained(self.modelo)

|

| 19 |

+

# Preprocess the image and run it through the model

|

| 20 |

+

inputs = procesador(images=imagen_bytes, return_tensors="pt")

|

| 21 |

+

outputs = modelo(**inputs)

|

| 22 |

+

logits = outputs.logits

|

| 23 |

+

# Take the highest-scoring class as the prediction

|

| 24 |

+

predicted_class_idx = logits.argmax(-1).item()

|

| 25 |

+

prediccion = modelo.config.id2label[predicted_class_idx]

|

| 26 |

+

except Exception as error:

|

| 27 |

+

print(f"No es Chems\n{error}")

|

| 28 |

+

prediccion = error

|

| 29 |

+

finally:

|

| 30 |

+

self.prediccion = str(prediccion)

|
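Review note: the last step of `generar_prediccion` (take the arg-max of the logits, then look the index up in `id2label`) can be illustrated without loading a model. The following is a minimal sketch in plain Python, not the Transformers implementation; `softmax`, `pick_label` and the three-class `example_id2label` mapping are hypothetical names introduced only for this illustration.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_label(logits, id2label):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return id2label[best], probs[best]


# Hypothetical three-class mapping; a real checkpoint exposes its own
# mapping through the model configuration.
example_id2label = {0: "cat", 1: "dog", 2: "tree"}
label, prob = pick_label([0.2, 3.1, -1.0], example_id2label)
print(label, round(prob, 3))
```

Returning the probability alongside the label would let the demo page show how confident the model is, which the string-only `self.prediccion` cannot convey.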

core/controllers/pages_controller.py

ADDED

|

@@ -0,0 +1,132 @@


| 1 |

+

import streamlit as BaseBuilder

|

| 2 |

+

from PIL import Image

|

| 3 |

+

import json

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

class Page():

|

| 7 |

+

def __init__(self):

|

| 8 |

+

self.__ = BaseBuilder

|

| 9 |

+

self.imgg = Image

|

| 10 |

+

|

| 11 |

+

def page(self):

|

| 12 |

+

return self.__

|

| 13 |

+

|

| 14 |

+

def new_page(self, title: str, icon=str(), color_divider="rainbow"):

|

| 15 |

+

self.page().set_page_config(page_title=title,

|

| 16 |

+

page_icon=icon,

|

| 17 |

+

layout="wide")

|

| 18 |

+

self.page().title(f"Clasificación de imágenes con Visión Artificial",

|

| 19 |

+

anchor="titulo-proyecto",

|

| 20 |

+

help=None)

|

| 21 |

+

self.page().subheader(f"{title} {icon}",

|

| 22 |

+

anchor="titulo-pagina",

|

| 23 |

+

divider=color_divider,

|

| 24 |

+

help=None)

|

| 25 |

+

self.check_password()

|

| 26 |

+

|

| 27 |

+

def new_body(self, new=False):

|

| 28 |

+

self.__body = BaseBuilder.empty() if new else self.page().container()

|

| 29 |

+

|

| 30 |

+

def get_body(self):

|

| 31 |

+

return self.__body

|

| 32 |

+

|

| 33 |

+

def init_globals(self, globals=dict({})):

|

| 34 |

+

for _k, _v in globals.items():

|

| 35 |

+

if self.get_global(_k,None) is None:

|

| 36 |

+

self.set_global(_k, _v)

|

| 37 |

+

|

| 38 |

+

def set_global(self, key=str(), value=None):

|

| 39 |

+

self.page().session_state[key] = value

|

| 40 |

+

|

| 41 |

+

def get_global(self, key=str(), default=None, is_secret=False):

|

| 42 |

+

if is_secret:

|

| 43 |

+

return self.page().secrets.get(key, default)

|

| 44 |

+

else:

|

| 45 |

+

return self.page().session_state.get(key, default)

|

| 46 |

+

|

| 47 |

+

def cargar_css(self, archivo_css=str("default")):

|

| 48 |

+

ruta = f"core/estilos/{archivo_css}.css"

|

| 49 |

+

try:

|

| 50 |

+

with open(ruta) as archivo:

|

| 51 |

+

self.page().markdown(

|

| 52 |

+

f'<style>{archivo.read()}</style>', unsafe_allow_html=True)

|

| 53 |

+

except Exception as er:

|

| 54 |

+

print(f"Error:\n{er}")

|

| 55 |

+

|

| 56 |

+

def check_password(self):

|

| 57 |

+

if self.user_logged_in():

|

| 58 |

+

self.page().sidebar.success("👨💻 Conectado")

|

| 59 |

+

self.page().sidebar.button("Logout", use_container_width=True,

|

| 60 |

+

type="primary", on_click=self.logout)

|

| 61 |

+

return True

|

| 62 |

+

else:

|

| 63 |

+

self.page().sidebar.subheader("# ¡👨💻 Desbloquea todas las funciones!")

|

| 64 |

+

self.page().sidebar.write("¡Ingresa tu Usuario y Contraseña!")

|

| 65 |

+

self.page().sidebar.text_input("Usuario", value="",

|

| 66 |

+

on_change=self.login, key="USUARIO")

|

| 67 |

+

self.page().sidebar.text_input("Password", type="password",

|

| 68 |

+

on_change=self.login, key="PASSWORD", value="")

|

| 69 |

+

self.page().sidebar.button("LogIn", use_container_width=True, on_click=self.login)

|

| 70 |

+

return False

|

| 71 |

+

|

| 72 |

+

def user_logged_in(self):

|

| 73 |

+

return self.get_global('logged_in', False)

|

| 74 |

+

|

| 75 |

+

def login(self):

|

| 76 |

+

_config = self.get_global('PRIVATE_CONFIG', dict({}), True)

|

| 77 |

+

_usuario = self.get_global("USUARIO")

|

| 78 |

+

_registros = self.get_global("registros", None, True)

|

| 79 |

+

_factor = int(_config['FPSSWD'])

|

| 80 |

+

if self.codificar(_usuario, _factor) in _registros:

|

| 81 |

+

if self.codificar(self.get_global("PASSWORD"), _factor) == _registros[self.codificar(_usuario, _factor)]:

|

| 82 |

+

del self.page().session_state["USUARIO"]

|

| 83 |

+

del self.page().session_state["PASSWORD"]

|

| 84 |

+

self.set_global('hf_key', _config['HUGGINGFACE_KEY'])

|

| 85 |

+

self.set_global('logged_in', True)

|

| 86 |

+

else:

|

| 87 |

+

self.logout("😕 Ups! Contraseña Incorrecta")

|

| 88 |

+

else:

|

| 89 |

+

self.logout("😕 Ups! Nombre de Usuario Incorrecto")

|

| 90 |

+

|

| 91 |

+

def logout(self, mensaje=str("¡Vuelva Pronto!")):

|

| 92 |

+

self.page().sidebar.error(mensaje)

|

| 93 |

+

self.set_global('hf_key')

|

| 94 |

+

self.set_global('logged_in')

|

| 95 |

+

|

| 96 |

+

@staticmethod

|

| 97 |

+

def codificar(palabra=str(), modificador=None):

|

| 98 |

+

# Accepts:

|

| 99 |

+

# ABCDEFGHIJKLMNOPQRSTUVWXYZ

|

| 100 |

+

# abcdefghijklmnopqrstuvwxyz

|

| 101 |

+

# 1234567890!#$-_=%&/()*[]

|

| 102 |

+

codigo = str()

|

| 103 |

+

try:

|

| 104 |

+

for _byte in bytearray(palabra.strip(), 'utf-8'):

|

| 105 |

+

# x = f(y) => the variable 'x' is a function of the variable 'y'

|

| 106 |

+

# with f(y) = y² * k, where:

|

| 107 |

+

# y is the decimal value of the input byte and x is its encoded value

|

| 108 |

+

# k (modificador) is a user-defined real number

|

| 109 |

+

_y = int(format(_byte, 'b'), 2)

|

| 110 |

+

_x = int(pow(_y, 2) * modificador)

|

| 111 |

+

# prepend the binary token, so the code stores the bytes in reverse order

|

| 112 |

+

codigo = f" {bin(_x)}{codigo}"

|

| 113 |

+

except Exception as error:

|

| 114 |

+

print(f"Error Codificando:\n{error}")

|

| 115 |

+

return codigo.strip()

|

| 116 |

+

|

| 117 |

+

@staticmethod

|

| 118 |

+

def decodificar(codigo=str(), modificador=None):

|

| 119 |

+

# Only decodes codes produced by codificar (applies the inverse equation)

|

| 120 |

+

palabra = str()

|

| 121 |

+

try:

|

| 122 |

+

for _byte_str in codigo.strip().split(' '):

|

| 123 |

+

# so y = √(x/k)

|

| 124 |

+

# is the corresponding inverse equation

|

| 125 |

+

_x = int(_byte_str, 2)

|

| 126 |

+

_y = round(pow((_x/modificador), 1/2))

|

| 127 |

+

letra = chr(_y)

|

| 128 |

+

# prepend the character, undoing the reversal applied by codificar

|

| 129 |

+

palabra = f"{letra}{palabra}"

|

| 130 |

+

except Exception as error:

|

| 131 |

+

print(f"Error Decodificando:\n{error}")

|

| 132 |

+

return palabra.strip()

|
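Review note: the `codificar` / `decodificar` pair can be checked with a small round-trip test. The sketch below restates the same scheme (x = y² · k per byte, tokens stored in reverse order) as two standalone functions. It assumes an integer modifier, as `login` passes `int(_config['FPSSWD'])`, which allows an exact integer square root. The names `encode` and `decode` are hypothetical and not part of the commit.

```python
import math


def encode(word: str, k: int) -> str:
    """Encode each UTF-8 byte y as bin(y * y * k), tokens in reverse order."""
    tokens = [bin(byte * byte * k) for byte in word.strip().encode("utf-8")]
    return " ".join(reversed(tokens))


def decode(code: str, k: int) -> str:
    """Invert encode: y = isqrt(x // k) per token, then restore the order."""
    values = [math.isqrt(int(token, 2) // k) for token in code.split()]
    return bytes(reversed(values)).decode("utf-8")


# Round trip with an arbitrary example modifier.
secret = encode("Hola_123", 7)
print(secret)
print(decode(secret, 7))
```

Because the transform is reversible by anyone who knows `k`, it behaves as an obfuscation rather than a one-way hash. A salted key-derivation function from the standard library, such as `hashlib.pbkdf2_hmac`, would be the usual choice for stored passwords.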

core/estilos/about_me.css

ADDED

|

@@ -0,0 +1,41 @@


| 1 |

+

/* Card style */

|

| 2 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"] {

|

| 3 |

+

background-color: #070707d1;

|

| 4 |

+

box-shadow: 1px 1px 2px 2px #000000d2;

|

| 5 |

+

box-sizing: border-box;

|

| 6 |

+

padding: 4px 0px 20px 0px;

|

| 7 |

+

border-radius: 14px;

|

| 8 |

+

backdrop-filter: blur(4px);

|

| 9 |

+

transition: ease-out;

|

| 10 |

+

transition-property: background-color, box-shadow;

|

| 11 |

+

transition-duration: 88ms;

|

| 12 |

+

}

|

| 13 |

+

|

| 14 |

+

/* Card style: hover */

|

| 15 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 16 |

+

background-color: #070707d0;

|

| 17 |

+

border: 2px solid;

|

| 18 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 19 |

+

transition: ease-in;

|

| 20 |

+

transition-property: background-color, box-shadow;

|

| 21 |

+

transition-duration: 110ms;

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

/* Card contents: title */

|

| 26 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stHeadingContainer {

|

| 27 |

+

text-align: center;

|

| 28 |

+

align-self: center;

|

| 29 |

+

}

|

| 30 |

+

|

| 31 |

+

/* Card contents: text */

|

| 32 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stTextLabelWrapper>[data-testid="stText"] {

|

| 33 |

+

padding: 0px 8px 0px 8px;

|

| 34 |

+

}

|

| 35 |

+

|

| 36 |

+

/* Card contents: image row */

|

| 37 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"] {

|

| 38 |

+

padding: 0px 8px 0px 8px;

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

|

core/estilos/home.css

ADDED

|

@@ -0,0 +1,61 @@


| 1 |

+

/* Card style */

|

| 2 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"] {

|

| 3 |

+

background-color: #070707ef;

|

| 4 |

+

box-shadow: 1px 1px 2px 2px #000000d2;

|

| 5 |

+

box-sizing: border-box;

|

| 6 |

+

padding: 4px 0px 20px 0px;

|

| 7 |

+

border-radius: 14px;

|

| 8 |

+

backdrop-filter: blur(4px);

|

| 9 |

+

transition: ease-out;

|

| 10 |

+

transition-property: background-color, box-shadow;

|

| 11 |

+

transition-duration: 88ms;

|

| 12 |

+

}

|

| 13 |

+

|

| 14 |

+

/* Card style: hover */

|

| 15 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 16 |

+

background-color: #070707d0;

|

| 17 |

+

border: 2px solid;

|

| 18 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 19 |

+

transition: ease-in;

|

| 20 |

+

transition-property: background-color, box-shadow;

|

| 21 |

+

transition-duration: 110ms;

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

/* Card contents: title */

|

| 26 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stHeadingContainer {

|

| 27 |

+

text-align: center;

|

| 28 |

+

align-self: center;

|

| 29 |

+

}

|

| 30 |

+

|

| 31 |

+

/* Card contents: text */

|

| 32 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stTextLabelWrapper>[data-testid="stText"] {

|

| 33 |

+

padding: 0px 8px 0px 8px;

|

| 34 |

+

}

|

| 35 |

+

|

| 36 |

+

/* Card contents: markdown */

|

| 37 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stMarkdown>[data-testid="stMarkdownContainer"] {

|

| 38 |

+

padding: 0px 8px 0px 8px;

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

/* Card contents: image row */

|

| 42 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"] {

|

| 43 |

+

padding: 0px 8px 0px 8px;

|

| 44 |

+

display: flex;

|

| 45 |

+

}

|

| 46 |

+

|

| 47 |

+

/* Card contents: images */

|

| 48 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"]>[data-testid="column"] {

|

| 49 |

+

display: flex;

|

| 50 |

+

justify-content: center;

|

| 51 |

+

align-items: center;

|

| 52 |

+

}

|

| 53 |

+

|

| 54 |

+

/* Card contents: buttons */

|

| 55 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>[data-testid="stButton"]>.stTooltipIcon>div>[data-testid="stTooltipIcon"]>[data-testid="tooltipHoverTarget"]{

|

| 56 |

+

padding: 8px;

|

| 57 |

+

display: flex;

|

| 58 |

+

justify-content: center;

|

| 59 |

+

text-align: center;

|

| 60 |

+

width: 100%;

|

| 61 |

+

}

|

core/estilos/live_demo.css

ADDED

|

@@ -0,0 +1,81 @@


| 1 |

+

/* Card style */

|

| 2 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"] {

|

| 3 |

+

background-color: #070707ef;

|

| 4 |

+

box-shadow: 1px 1px 2px 2px #000000d2;

|

| 5 |

+

box-sizing: border-box;

|

| 6 |

+

padding: 4px 0px 20px 0px;

|

| 7 |

+

border-radius: 14px;

|

| 8 |

+

backdrop-filter: blur(4px);

|

| 9 |

+

transition: ease-out;

|

| 10 |

+

transition-property: background-color, box-shadow;

|

| 11 |

+

transition-duration: 88ms;

|

| 12 |

+

}

|

| 13 |

+

|

| 14 |

+

/* Card style: hover */

|

| 15 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 16 |

+

background-color: #070707d0;

|

| 17 |

+

border: 2px solid;

|

| 18 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 19 |

+

transition: ease-in;

|

| 20 |

+

transition-property: background-color, box-shadow;

|

| 21 |

+

transition-duration: 110ms;

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

/* Card contents: title */

|

| 26 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stHeadingContainer {

|

| 27 |

+

text-align: center;

|

| 28 |

+

align-self: center;

|

| 29 |

+

}

|

| 30 |

+

|

| 31 |

+

/* Card contents: text */

|

| 32 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stTextLabelWrapper>[data-testid="stText"] {

|

| 33 |

+

padding: 0px 8px 0px 8px;

|

| 34 |

+

}

|

| 35 |

+

|

| 36 |

+

/* Card contents: markdown */

|

| 37 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stMarkdown>[data-testid="stMarkdownContainer"] {

|

| 38 |

+

padding: 0px 8px 0px 8px;

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

/* Card contents: image row */

|

| 42 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"] {

|

| 43 |

+

padding: 0px 8px 0px 8px;

|

| 44 |

+

display: flex;

|

| 45 |

+

}

|

| 46 |

+

|

| 47 |

+

/* Card contents: images */

|

| 48 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"]>[data-testid="column"] {

|

| 49 |

+

display: flex;

|

| 50 |

+

justify-content: center;

|

| 51 |

+

align-items: center;

|

| 52 |

+

}

|

| 53 |

+

|

| 54 |

+

/* Card contents: buttons */

|

| 55 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>[data-testid="stButton"]>.stTooltipIcon>div>[data-testid="stTooltipIcon"]>[data-testid="tooltipHoverTarget"]{

|

| 56 |

+

padding: 8px;

|

| 57 |

+

display: flex;

|

| 58 |

+

justify-content: center;

|

| 59 |

+

text-align: center;

|

| 60 |

+

width: 100%;

|

| 61 |

+

}

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

/* Card contents: expander */

|

| 65 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stExpander"]>ul {

|

| 66 |

+

background-color: transparent;

|

| 67 |

+

box-shadow: none;

|

| 68 |

+

border: none;

|

| 69 |

+

}

|

| 70 |

+

|

| 71 |

+

/* Expander style: hover */

|

| 72 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 73 |

+

background-color: #070707d0;

|

| 74 |

+

border: 2px solid;

|

| 75 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 76 |

+

transition: ease-in;

|

| 77 |

+

transition-property: background-color, box-shadow;

|

| 78 |

+

transition-duration: 110ms;

|

| 79 |

+

}

|

| 80 |

+

|

| 81 |

+

|

core/estilos/main.css

ADDED

|

@@ -0,0 +1,5 @@


| 1 |

+

/* Main application container */

|

| 2 |

+

section>[data-testid="block-container"]{

|

| 3 |

+

height: 100vh;

|

| 4 |

+

padding: 2em 3em 2em 3em;

|

| 5 |

+

}

|

core/estilos/teoria.css

ADDED

|

@@ -0,0 +1,81 @@


| 1 |

+

/* Card style */

|

| 2 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"] {

|

| 3 |

+

background-color: #070707ef;

|

| 4 |

+

box-shadow: 1px 1px 2px 2px #000000d2;

|

| 5 |

+

box-sizing: border-box;

|

| 6 |

+

padding: 4px 0px 20px 0px;

|

| 7 |

+

border-radius: 14px;

|

| 8 |

+

backdrop-filter: blur(4px);

|

| 9 |

+

transition: ease-out;

|

| 10 |

+

transition-property: background-color, box-shadow;

|

| 11 |

+

transition-duration: 88ms;

|

| 12 |

+

}

|

| 13 |

+

|

| 14 |

+

/* Card style: hover */

|

| 15 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 16 |

+

background-color: #070707d0;

|

| 17 |

+

border: 2px solid;

|

| 18 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 19 |

+

transition: ease-in;

|

| 20 |

+

transition-property: background-color, box-shadow;

|

| 21 |

+

transition-duration: 110ms;

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

/* Card contents: title */

|

| 26 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stHeadingContainer {

|

| 27 |

+

text-align: center;

|

| 28 |

+

align-self: center;

|

| 29 |

+

}

|

| 30 |

+

|

| 31 |

+

/* Card contents: text */

|

| 32 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stTextLabelWrapper>[data-testid="stText"] {

|

| 33 |

+

padding: 0px 8px 0px 8px;

|

| 34 |

+

}

|

| 35 |

+

|

| 36 |

+

/* Card contents: markdown */

|

| 37 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>.stMarkdown>[data-testid="stMarkdownContainer"] {

|

| 38 |

+

padding: 0px 8px 0px 8px;

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

/* Card contents: image row */

|

| 42 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"] {

|

| 43 |

+

padding: 0px 8px 0px 8px;

|

| 44 |

+

display: flex;

|

| 45 |

+

}

|

| 46 |

+

|

| 47 |

+

/* Card contents: images */

|

| 48 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stHorizontalBlock"]>[data-testid="column"] {

|

| 49 |

+

display: flex;

|

| 50 |

+

justify-content: center;

|

| 51 |

+

align-items: center;

|

| 52 |

+

}

|

| 53 |

+

|

| 54 |

+

/* Card contents: buttons */

|

| 55 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="element-container"]>[data-testid="stButton"]>.stTooltipIcon>div>[data-testid="stTooltipIcon"]>[data-testid="tooltipHoverTarget"]{

|

| 56 |

+

padding: 8px;

|

| 57 |

+

display: flex;

|

| 58 |

+

justify-content: center;

|

| 59 |

+

text-align: center;

|

| 60 |

+

width: 100%;

|

| 61 |

+

}

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

/* Card contents: expander */

|

| 65 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]>[data-testid="stExpander"]>ul {

|

| 66 |

+

background-color: transparent;

|

| 67 |

+

box-shadow: none;

|

| 68 |

+

border: none;

|

| 69 |

+

}

|

| 70 |

+

|

| 71 |

+

/* Expander style: hover */

|

| 72 |

+

[data-testid="stVerticalBlock"]>[style*="flex-direction: column;"]>[data-testid="stVerticalBlock"]:hover {

|

| 73 |

+

background-color: #070707d0;

|

| 74 |

+

border: 2px solid;

|

| 75 |

+

box-shadow: 2px 2px 3px 3px #000000fe;

|

| 76 |

+

transition: ease-in;

|

| 77 |

+

transition-property: background-color, box-shadow;

|

| 78 |

+

transition-duration: 110ms;

|

| 79 |

+

}

|

| 80 |

+

|

| 81 |

+

|

core/imagenes/result.png
ADDED
core/imagenes/shiba.png
ADDED
pages/Aboutme.py
ADDED
@@ -0,0 +1,90 @@


+from core.controllers.pages_controller import Page
+from PIL import Image
+
+
+class AboutMe(Page):
+    variables_globales = {
+    }
+    archivos_css = ["main",
+                    "about_me"]
+
+    def __init__(self, title="whoami", icon="🖼️", init_page=False):
+        super().__init__()
+        if init_page:
+            self.new_page(title=title, icon=icon)
+        self.new_body(True)
+        self.init_globals(globals=self.variables_globales)
+        for archivo in self.archivos_css:
+            self.cargar_css(archivo_css=archivo)
+
+    def agregar_card_bienvenido(self, columna):
+        card_bienvenido = columna.container()
+
+        card_bienvenido.header("About image classification",
+                               help=None)
+        card_bienvenido.markdown(unsafe_allow_html=False,
+                                 help=None,
+                                 body="""
+**Image classification in computer vision** consists of teaching a computer to **identify the general category of a photograph**, such as "dog" or "car", rather than analyzing specific details or locating objects.
+
+This **process lets the computer** recognize patterns and make **accurate predictions on new images**.
+""")
+
+        imagen_demo1, imagen_demo2 = card_bienvenido.columns(2, gap="small")
+        src_img_1 = Image.open("core/imagenes/shiba.png")
+        src_img_2 = Image.open("core/imagenes/result.png")
+        imagen_demo1.image(src_img_1,
+                           use_column_width="auto")
+        imagen_demo2.image(src_img_2,
+                           use_column_width="auto")
+
+        card_bienvenido.markdown(unsafe_allow_html=False,
+                                 help=None,
+                                 # This is achieved by training **deep learning algorithms**, such as **convolutional neural networks (CNNs)** or **Transformer**-based models. These algorithms are trained on a **large dataset** of labeled images, where each image has a **label describing** its content (for example, "cat" or "tree").
+                                 body="""
+Next we will see how the Transformers library uses the **pre-trained Google/ViT model**, trained on a dataset of more than 14 million images labeled across more than 21,000 different classes, all at a resolution of 224x224.
+""")
+
+    def agregar_card_teoria(self, columna):
+        card_teoria = columna.container()
+        card_teoria.header("Theory",
+                           help=None)
+
+    def agregar_card_live_demo(self, columna):
+        card_live_demo = columna.container()
+        card_live_demo.header("Demo",
+                              help=None)
+
+    def agregar_card_about_me(self, columna):
+        card_about_me = columna.container()
+        card_about_me.header("Developed by:",
+                             help=None)
+        card_about_me.subheader("coder160",
+                                help=None)
+
+        social1, social2, social3, social4 = card_about_me.columns(
+            4, gap="medium")
+        social1.image("https://imagedelivery.net/5MYSbk45M80qAwecrlKzdQ/8c7c0f2b-5efe-45d3-3041-f6295bd2e600/preview",
+                      use_column_width="auto")
+        social2.image("https://imagedelivery.net/5MYSbk45M80qAwecrlKzdQ/8c7c0f2b-5efe-45d3-3041-f6295bd2e600/preview",
+                      use_column_width="auto")
+        social3.image("https://imagedelivery.net/5MYSbk45M80qAwecrlKzdQ/8c7c0f2b-5efe-45d3-3041-f6295bd2e600/preview",
+                      use_column_width="auto")
+        social4.image("https://imagedelivery.net/5MYSbk45M80qAwecrlKzdQ/8c7c0f2b-5efe-45d3-3041-f6295bd2e600/preview",
+                      use_column_width="auto")
+
+    def build(self):
+        # sections
+        columna_bienvenido, columna_contenido = self.get_body().columns(2, gap="small")
+        seccion_teoria, seccion_live_demo = columna_contenido.columns(
+            2, gap="large")
+        if self.user_logged_in():
+
+            self.agregar_card_bienvenido(columna_bienvenido)
+            self.agregar_card_teoria(seccion_teoria)
+            self.agregar_card_live_demo(seccion_live_demo)
+            self.agregar_card_about_me(columna_contenido)
+
+
+if __name__ == "__main__":
+    AboutMe(init_page=True).build()

|
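Review note: the card text in `pages/Aboutme.py` says the model makes predictions on new images without showing what a prediction looks like. The following is a minimal sketch, not code from this commit, of how raw class scores are usually turned into ranked labels with a softmax. The function name `top_k_labels` and the sample scores are assumptions made for illustration only.

```python
import math


def top_k_labels(logits, labels, k=3):
    """Return the k most probable (label, probability) pairs."""
    # Subtract the largest logit before exponentiating for numerical stability.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Sort by probability, highest first, and keep the first k entries.
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical scores for three classes; a real classification head
    # emits one logit per class it was trained on.
    for label, prob in top_k_labels([2.0, 0.5, -1.0], ["dog", "car", "tree"], k=2):
        print(f"{label}: {prob:.3f}")
```

The highest-scoring class becomes the displayed label, and the remaining probabilities can be shown as alternatives.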

pages/LiveDemo.py
ADDED
@@ -0,0 +1,144 @@


| 1 |

+

from core.controllers.pages_controller import Page

|

| 2 |

+

from core.controllers.main_process import Generador

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

class Live_Demo(Page):

|

| 6 |

+

variables_globales = {

|

| 7 |

+

"img_bytes": None,

|

| 8 |

+

"img_src": None,

|

| 9 |

+

"settings": {

|

| 10 |

+

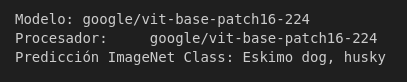

"model": str("google/vit-base-patch16-224"),

|

| 11 |

+

"tokenizer": str("google/vit-base-patch16-224"),

|

| 12 |

+

},

|

| 13 |

+

"img_output": None,

|

| 14 |

+

"predicciones": None,

|

| 15 |

+

}

|

| 16 |

+

archivos_css = ["main",

|

| 17 |

+

"live_demo"]

|

| 18 |

+

|

| 19 |

+

def __init__(self, title=str("Live-Demo"), icon=str("🖼️"), init_page=False):

|

| 20 |

+

super().__init__()

|

| 21 |

+

if init_page:

|

| 22 |

+

self.new_page(title=title, icon=icon)

|

| 23 |

+

self.new_body(True)

|

| 24 |

+

self.init_globals(globals=self.variables_globales)

|

| 25 |

+

for archivo in self.archivos_css:

|

| 26 |

+

self.cargar_css(archivo_css=archivo)

|

| 27 |

+

|

| 28 |

+

def obtener_bytes(self, archivo):

|

| 29 |

+

self.set_global(key='img_src', value=archivo)

|

| 30 |

+

self.set_global(key='img_bytes', value=archivo.getvalue())

|

| 31 |

+

|

| 32 |

+

def actualizar_modelo_tokenizer(self, modelo, tokenizer):

|

| 33 |

+

_settings = {'model': modelo,

|

| 34 |

+

'tokenizer': tokenizer}

|

| 35 |

+

self.set_global(key='settings', value=_settings)

|

| 36 |

+

|

| 37 |

+

def procesar_imagen(self):

|

| 38 |

+

proceso = Generador(configuraciones=self.get_global('settings'))

|

| 39 |

+

proceso.generar_prediccion(imagen_bytes=self.get_global('img_bytes'))

|

| 40 |

+

self.set_global(key='img_output', value=self.get_global('img_bytes'))

|

| 41 |

+

self.set_global(key='predicciones', value=proceso.prediccion)

|

| 42 |

+

|

| 43 |

+

def archivo_expander(self, section):

|

| 44 |

+

archivo_expander = section.expander(

|

| 45 |

+

expanded=False,

|

| 46 |

+

label="Desde su galería"

|

| 47 |

+

)

|

| 48 |

+

_archivo = archivo_expander.file_uploader(

|

| 49 |

+

label="GALERIA",

|

| 50 |

+

accept_multiple_files=False,

|

| 51 |

+

label_visibility="visible"

|

| 52 |

+

)

|

| 53 |

+

if (archivo_expander.button(label="Cargar Archivo", help="Suba un archivo.",

|

| 54 |

+

type="secondary", use_container_width=True) and _archivo is not None):

|

| 55 |

+

self.obtener_bytes(_archivo)

|

| 56 |

+

|

| 57 |

+

def camara_expander(self, section):

|

| 58 |

+

camara_expander = section.expander(

|

| 59 |

+

expanded=False,

|

| 60 |

+

label="Desde su cámara"

|

| 61 |

+

)

|

| 62 |

+

_captura = camara_expander.camera_input(

|

| 63 |

+

label="CAMARA",

|

| 64 |

+

label_visibility="visible"

|

| 65 |

+

)

|

| 66 |

+

if (camara_expander.button(label="Cargar Captura", help="Tome una fotografía.",

|

| 67 |

+

type="secondary", use_container_width=True) and _captura is not None):

|

| 68 |

+

self.obtener_bytes(_captura)

|

| 69 |

+

|

| 70 |

+

def preview_expander(self, section):

|

| 71 |

+

preview = section.expander(

|

| 72 |

+

expanded=True,

|

| 73 |

+

label="Todo listo"

|

| 74 |

+

)

|

| 75 |

+

if self.get_global('img_bytes', None) is not None:

|

| 76 |

+

preview.image(

|

| 77 |

+

image=self.get_global('img_bytes'),

|

| 78 |

+

caption="Su imagen",

|

| 79 |

+

use_column_width="auto",

|

| 80 |

+

channels="RGB",

|

| 81 |

+

output_format="auto"

|

| 82 |

+

)

|

| 83 |

+

if preview.button(label="LAUNCH", help="Procesar imagen",

|

| 84 |

+

type="secondary", use_container_width=True):

|

| 85 |

+

self.procesar_imagen()

|

| 86 |

+

|

| 87 |

+

def config_expander(self, section):

|

| 88 |

+

modelo = section.text_input(

|

| 89 |

+

label="MODELO",

|

| 90 |

+

value=self.get_global('settings').get('model'),

|

| 91 |

+

key=None,

|

| 92 |

+

help=None,

|

| 93 |

+

on_change=None,

|

| 94 |

+

disabled=False,

|

| 95 |

+

label_visibility="visible"

|

| 96 |

+

)

|

| 97 |

+

tokenizer = section.text_input(

|

| 98 |

+

label="TOKENIZER",

|

| 99 |

+

value=self.get_global('settings').get('tokenizer'),

|

| 100 |

+

key=None,

|

| 101 |

+

help=None,

|

| 102 |

+

on_change=None,

|

| 103 |

+

disabled=False,

|

| 104 |

+

label_visibility="visible"

|

| 105 |

+

)

|

| 106 |

+

if section.button(label="Configurar", help="Actualice configuraciones",

|

| 107 |

+

type="secondary", use_container_width=True):

|

| 108 |

+

self.actualizar_modelo_tokenizer(modelo, tokenizer)

|

| 109 |

+

|

| 110 |

+

def agregar_card_inputs(self, columna):

|

| 111 |

+

card_inputs = columna.container()

|

| 112 |

+

source_tab, config_tab = card_inputs.tabs(

|

| 113 |

+

["Imagen", "Configuraciones"]

|

| 114 |

+

)

|

| 115 |

+

self.archivo_expander(source_tab)

|

| 116 |

+

self.camara_expander(source_tab)

|

| 117 |

+

self.config_expander(config_tab)

|

| 118 |

+

self.preview_expander(card_inputs)

|

| 119 |

+

|

| 120 |

+

def agregar_card_outputs(self, columna):

|

| 121 |

+

card_teoria = columna.container()

|

| 122 |

+

output = card_teoria.expander(

|

| 123 |

+

expanded=True,

|

| 124 |

+

label="Su resultado"

|

| 125 |

+

)

|

| 126 |

+

if self.get_global('img_output', None) is not None:

|

| 127 |

+

output.image(

|

| 128 |

+

image=self.get_global('img_output'),

|

| 129 |

+

caption="Su resultado",

|

| 130 |

+

use_column_width="auto",

|

| 131 |

+

channels="RGB",

|

| 132 |

+

output_format="auto"

|

| 133 |

+

)

|

| 134 |

+

|

| 135 |

+

def build(self):

|

| 136 |

+

# secciones

|

| 137 |

+

columna_inputs, columna_outputs = self.get_body().columns(2, gap="small")

|

| 138 |

+

if self.user_logged_in():

|

| 139 |

+

self.agregar_card_inputs(columna_inputs)

|

| 140 |

+

self.agregar_card_outputs(columna_outputs)

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

if __name__ == "__main__":

|

| 144 |

+

Live_Demo(init_page=True).build()

|
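Review note: `Live_Demo` passes data between widgets through `set_global` and `get_global`, and only runs inference once image bytes have been stored. The following is a minimal sketch of that flow, assuming a dict-backed state store and an injected classifier. `StateSketch`, the free function `procesar_imagen`, and `fake_classifier` are hypothetical stand-ins, not the `Page` or `Generador` implementations from this commit, which are not shown in this hunk.

```python
class StateSketch:
    """Dict-backed stand-in for the Page global-state helpers (hypothetical)."""

    def __init__(self, defaults):
        # Copy the defaults so the class-level dict is never mutated.
        self._state = dict(defaults)

    def set_global(self, key, value):
        self._state[key] = value

    def get_global(self, key, default=None):
        return self._state.get(key, default)


def procesar_imagen(state, classify):
    """Mirror the Live_Demo.procesar_imagen flow with an injected classifier."""
    img_bytes = state.get_global("img_bytes")
    if img_bytes is None:
        return False  # nothing uploaded yet, so skip inference
    settings = state.get_global("settings")
    state.set_global("predicciones", classify(img_bytes, settings))
    state.set_global("img_output", img_bytes)
    return True


def fake_classifier(img_bytes, settings):
    # Stub that ignores the image and returns one fixed prediction (assumption).
    return [{"label": "dog", "score": 0.9, "model": settings["model"]}]


if __name__ == "__main__":
    state = StateSketch({"img_bytes": None,
                         "settings": {"model": "google/vit-base-patch16-224"}})
    print(procesar_imagen(state, fake_classifier))   # no image stored yet
    state.set_global("img_bytes", b"raw image bytes")
    print(procesar_imagen(state, fake_classifier))   # image stored, stub runs
    print(state.get_global("predicciones"))
```

Injecting the classifier keeps the state handling testable without downloading a model.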

pages/Teoria.py
ADDED
@@ -0,0 +1,216 @@
