diff --git a/.gitattributes b/.gitattributes

index a6344aac8c09253b3b630fb776ae94478aa0275b..afb6ff454f89ad48020a1e53df1ecf77bbe1717b 100644

--- a/.gitattributes

+++ b/.gitattributes

@@ -33,3 +33,9 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

*.zip filter=lfs diff=lfs merge=lfs -text

*.zst filter=lfs diff=lfs merge=lfs -text

*tfevents* filter=lfs diff=lfs merge=lfs -text

+docs/images/fastcpu-webui.png filter=lfs diff=lfs merge=lfs -text

+docs/images/fastsdcpu-android-termux-pixel7.png filter=lfs diff=lfs merge=lfs -text

+docs/images/fastsdcpu-gui.jpg filter=lfs diff=lfs merge=lfs -text

+docs/images/fastsdcpu-screenshot.png filter=lfs diff=lfs merge=lfs -text

+docs/images/fastsdcpu-webui.png filter=lfs diff=lfs merge=lfs -text

+docs/images/fastsdcpu_flux_on_cpu.png filter=lfs diff=lfs merge=lfs -text

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..0542bc81f9b21a2a82d07487b7417141731b413f

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,7 @@

+env

+*.bak

+*.pyc

+__pycache__

+results

+# excluding user settings for the GUI frontend

+configs/settings.yaml

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..ab84360cb0e940f6e4af2c0731e51cac0f66d19a

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2023 Rupesh Sreeraman

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/Readme.md b/Readme.md

new file mode 100644

index 0000000000000000000000000000000000000000..cff2b33cacd274f61d27de389202cd418a326e3d

--- /dev/null

+++ b/Readme.md

@@ -0,0 +1,673 @@

+# FastSD CPU :sparkles:[](https://github.com/openvinotoolkit/awesome-openvino)

+

+<div align="center">

+ <a href="https://trendshift.io/repositories/3957" target="_blank"><img src="https://trendshift.io/api/badge/repositories/3957" alt="rupeshs%2Ffastsdcpu | Trendshift" style="width: 250px; height: 55px;" width="250" height="55"/></a>

+</div>

+

+FastSD CPU is a faster version of Stable Diffusion on CPU. Based on [Latent Consistency Models](https://github.com/luosiallen/latent-consistency-model) and

+[Adversarial Diffusion Distillation](https://nolowiz.com/fast-stable-diffusion-on-cpu-using-fastsd-cpu-and-openvino/).

+

+

+The following interfaces are available :

+

+- Desktop GUI, basic text to image generation (Qt,faster)

+- WebUI (Advanced features,Lora,controlnet etc)

+- CLI (CommandLine Interface)

+

+🚀 Using __OpenVINO(SDXS-512-0.9)__, it took __0.82 seconds__ (__820 milliseconds__) to create a single 512x512 image on a __Core i7-12700__.

+

+## Table of Contents

+

+- [Supported Platforms](#Supported platforms)

+- [Dependencies](#dependencies)

+- [Memory requirements](#memory-requirements)

+- [Features](#features)

+- [Benchmarks](#fast-inference-benchmarks)

+- [OpenVINO Support](#openvino)

+- [Installation](#installation)

+- [AI PC Support - OpenVINO](#ai-pc-support)

+- [GGUF support (Flux)](#gguf-support)

+- [Real-time text to image (EXPERIMENTAL)](#real-time-text-to-image)

+- [Models](#models)

+- [How to use Lora models](#useloramodels)

+- [How to use controlnet](#usecontrolnet)

+- [Android](#android)

+- [Raspberry Pi 4](#raspberry)

+- [Orange Pi 5](#orangepi)

+- [API Support](#apisupport)

+- [License](#license)

+- [Contributors](#contributors)

+

+## Supported platforms⚡️

+

+FastSD CPU works on the following platforms:

+

+- Windows

+- Linux

+- Mac

+- Android + Termux

+- Raspberry PI 4

+

+## Dependencies

+

+- Python 3.10 or Python 3.11 (Please ensure that you have a working Python 3.10 or Python 3.11 installation available on the system)

+

+## Memory requirements

+

+Minimum system RAM requirement for FastSD CPU.

+

+Model (LCM,OpenVINO): SD Turbo, 1 step, 512 x 512

+

+Model (LCM-LoRA): Dreamshaper v8, 3 step, 512 x 512

+

+| Mode | Min RAM |

+| --------------------- | ------------- |

+| LCM | 2 GB |

+| LCM-LoRA | 4 GB |

+| OpenVINO | 11 GB |

+

+If we enable Tiny decoder(TAESD) we can save some memory(2GB approx) for example in OpenVINO mode memory usage will become 9GB.

+

+:exclamation: Please note that guidance scale >1 increases RAM usage and slow inference speed.

+

+## Features

+

+- Desktop GUI, web UI and CLI

+- Supports 256,512,768,1024 image sizes

+- Supports Windows,Linux,Mac

+- Saves images and diffusion setting used to generate the image

+- Settings to control,steps,guidance and seed

+- Added safety checker setting

+- Maximum inference steps increased to 25

+- Added [OpenVINO](https://github.com/openvinotoolkit/openvino) support

+- Fixed OpenVINO image reproducibility issue

+- Fixed OpenVINO high RAM usage,thanks [deinferno](https://github.com/deinferno)

+- Added multiple image generation support

+- Application settings

+- Added Tiny Auto Encoder for SD (TAESD) support, 1.4x speed boost (Fast,moderate quality)

+- Safety checker disabled by default

+- Added SDXL,SSD1B - 1B LCM models

+- Added LCM-LoRA support, works well for fine-tuned Stable Diffusion model 1.5 or SDXL models

+- Added negative prompt support in LCM-LoRA mode

+- LCM-LoRA models can be configured using text configuration file

+- Added support for custom models for OpenVINO (LCM-LoRA baked)

+- OpenVINO models now supports negative prompt (Set guidance >1.0)

+- Real-time inference support,generates images while you type (experimental)

+- Fast 2,3 steps inference

+- Lcm-Lora fused models for faster inference

+- Supports integrated GPU(iGPU) using OpenVINO (export DEVICE=GPU)

+- 5.7x speed using OpenVINO(steps: 2,tiny autoencoder)

+- Image to Image support (Use Web UI)

+- OpenVINO image to image support

+- Fast 1 step inference (SDXL Turbo)

+- Added SD Turbo support

+- Added image to image support for Turbo models (Pytorch and OpenVINO)

+- Added image variations support

+- Added 2x upscaler (EDSR and Tiled SD upscale (experimental)),thanks [monstruosoft](https://github.com/monstruosoft) for SD upscale

+- Works on Android + Termux + PRoot

+- Added interactive CLI,thanks [monstruosoft](https://github.com/monstruosoft)

+- Added basic lora support to CLI and WebUI

+- ONNX EDSR 2x upscale

+- Add SDXL-Lightning support

+- Add SDXL-Lightning OpenVINO support (int8)

+- Add multilora support,thanks [monstruosoft](https://github.com/monstruosoft)

+- Add basic ControlNet v1.1 support(LCM-LoRA mode),thanks [monstruosoft](https://github.com/monstruosoft)

+- Add ControlNet annotators(Canny,Depth,LineArt,MLSD,NormalBAE,Pose,SoftEdge,Shuffle)

+- Add SDXS-512 0.9 support

+- Add SDXS-512 0.9 OpenVINO,fast 1 step inference (0.8 seconds to generate 512x512 image)

+- Default model changed to SDXS-512-0.9

+- Faster realtime image generation

+- Add NPU device check

+- Revert default model to SDTurbo

+- Update realtime UI

+- Add hypersd support

+- 1 step fast inference support for SDXL and SD1.5

+- Experimental support for single file Safetensors SD 1.5 models(Civitai models), simply add local model path to configs/stable-diffusion-models.txt file.

+- Add REST API support

+- Add Aura SR (4x)/GigaGAN based upscaler support

+- Add Aura SR v2 upscaler support

+- Add FLUX.1 schnell OpenVINO int 4 support

+- Add CLIP skip support

+- Add token merging support

+- Add Intel AI PC support

+- AI PC NPU(Power efficient inference using OpenVINO) supports, text to image ,image to image and image variations support

+- Add [TAEF1 (Tiny autoencoder for FLUX.1) openvino](https://huggingface.co/rupeshs/taef1-openvino) support

+- Add Image to Image and Image Variations Qt GUI support,thanks [monstruosoft](https://github.com/monstruosoft)

+

+<a id="fast-inference-benchmarks"></a>

+

+## Fast Inference Benchmarks

+

+### 🚀 Fast 1 step inference with Hyper-SD

+

+#### Stable diffuion 1.5

+

+Works with LCM-LoRA mode.

+Fast 1 step inference supported on `runwayml/stable-diffusion-v1-5` model,select `rupeshs/hypersd-sd1-5-1-step-lora` lcm_lora model from the settings.

+

+#### Stable diffuion XL

+

+Works with LCM and LCM-OpenVINO mode.

+

+- *Hyper-SD SDXL 1 step* - [rupeshs/hyper-sd-sdxl-1-step](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step)

+

+- *Hyper-SD SDXL 1 step OpenVINO* - [rupeshs/hyper-sd-sdxl-1-step-openvino-int8](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step-openvino-int8)

+

+#### Inference Speed

+

+Tested on Core i7-12700 to generate __768x768__ image(1 step).

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| Pytorch | 19s |

+| OpenVINO | 13s |

+| OpenVINO + TAESDXL | 6.3s |

+

+### Fastest 1 step inference (SDXS-512-0.9)

+

+:exclamation:This is an experimental model, only text to image workflow is supported.

+

+#### Inference Speed

+

+Tested on Core i7-12700 to generate __512x512__ image(1 step).

+

+__SDXS-512-0.9__

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| Pytorch | 4.8s |

+| OpenVINO | 3.8s |

+| OpenVINO + TAESD | __0.82s__ |

+

+### 🚀 Fast 1 step inference (SD/SDXL Turbo - Adversarial Diffusion Distillation,ADD)

+

+Added support for ultra fast 1 step inference using [sdxl-turbo](https://huggingface.co/stabilityai/sdxl-turbo) model

+

+:exclamation: These SD turbo models are intended for research purpose only.

+

+#### Inference Speed

+

+Tested on Core i7-12700 to generate __512x512__ image(1 step).

+

+__SD Turbo__

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| Pytorch | 7.8s |

+| OpenVINO | 5s |

+| OpenVINO + TAESD | 1.7s |

+

+__SDXL Turbo__

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| Pytorch | 10s |

+| OpenVINO | 5.6s |

+| OpenVINO + TAESDXL | 2.5s |

+

+### 🚀 Fast 2 step inference (SDXL-Lightning - Adversarial Diffusion Distillation)

+

+SDXL-Lightning works with LCM and LCM-OpenVINO mode.You can select these models from app settings.

+

+Tested on Core i7-12700 to generate __768x768__ image(2 steps).

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| Pytorch | 18s |

+| OpenVINO | 12s |

+| OpenVINO + TAESDXL | 10s |

+

+- *SDXL-Lightning* - [rupeshs/SDXL-Lightning-2steps](https://huggingface.co/rupeshs/SDXL-Lightning-2steps)

+

+- *SDXL-Lightning OpenVINO* - [rupeshs/SDXL-Lightning-2steps-openvino-int8](https://huggingface.co/rupeshs/SDXL-Lightning-2steps-openvino-int8)

+

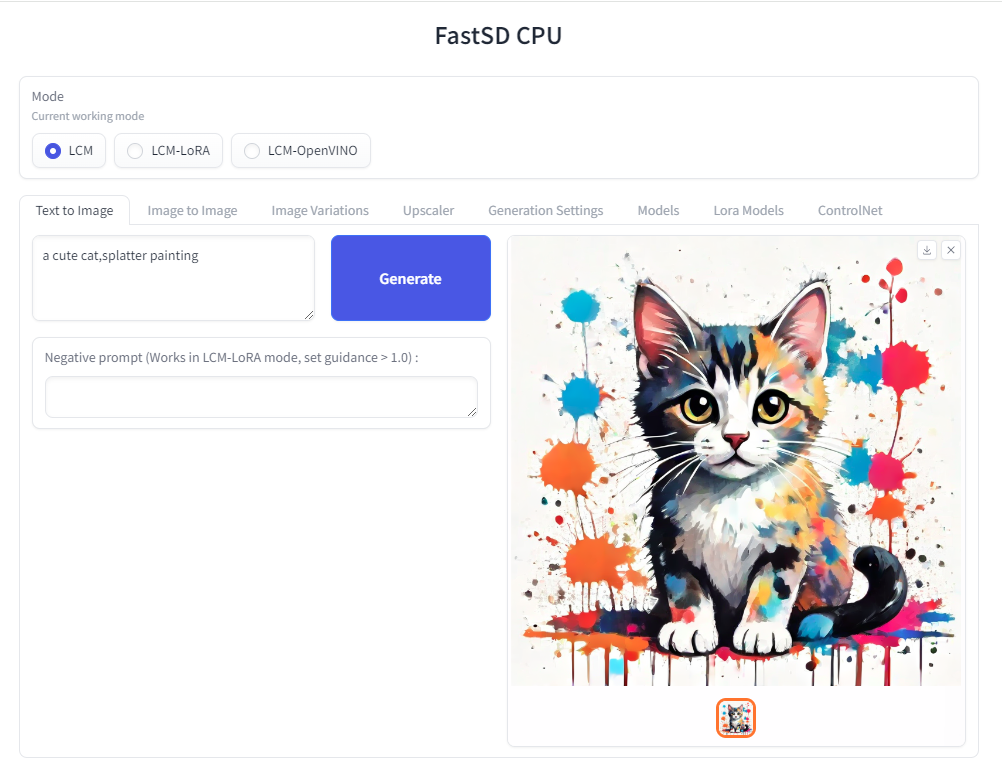

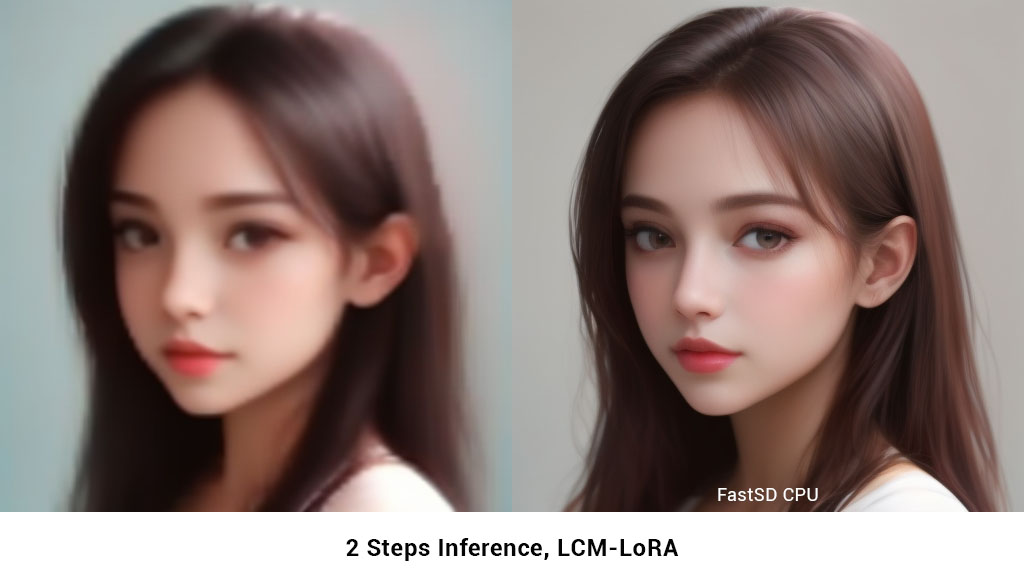

+### 2 Steps fast inference (LCM)

+

+FastSD CPU supports 2 to 3 steps fast inference using LCM-LoRA workflow. It works well with SD 1.5 models.

+

+

+

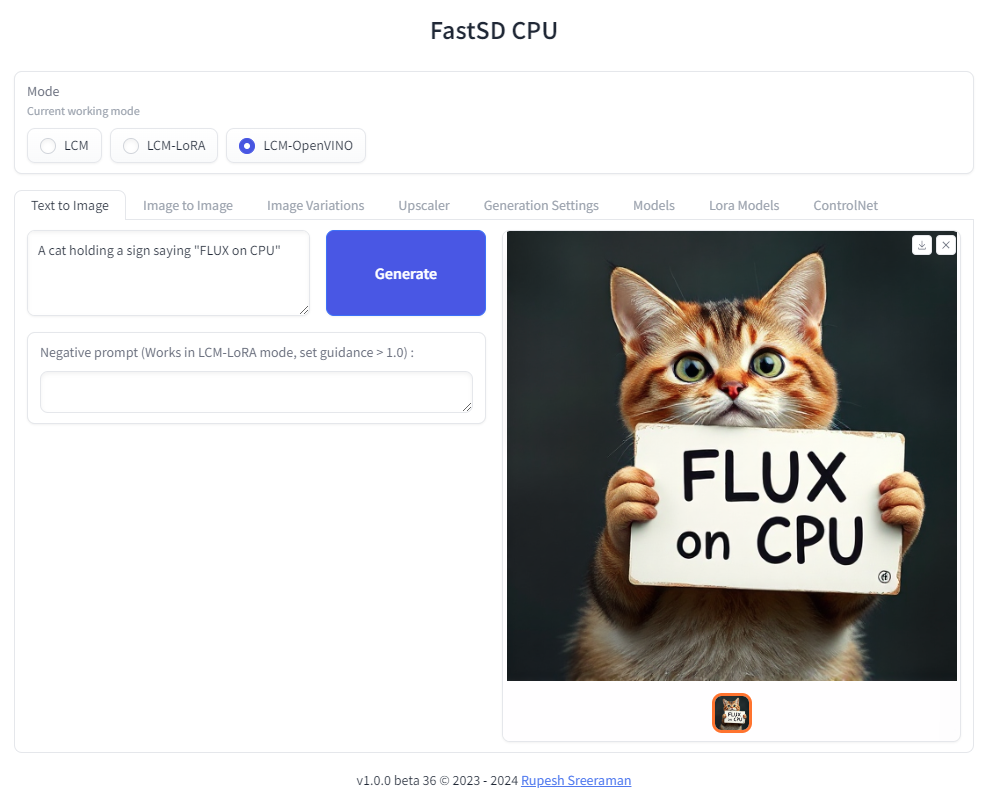

+### FLUX.1-schnell OpenVINO support

+

+

+

+:exclamation: Important - Please note the following points with FLUX workflow

+

+- As of now only text to image generation mode is supported

+- Use OpenVINO mode

+- Use int4 model - *rupeshs/FLUX.1-schnell-openvino-int4*

+- 512x512 image generation needs around __30GB__ system RAM

+

+Tested on Intel Core i7-12700 to generate __512x512__ image(3 steps).

+

+| Diffusion Pipeline | Latency |

+| --------------------- | ------------- |

+| OpenVINO | 4 min 30sec |

+

+### Benchmark scripts

+

+To benchmark run the following batch file on Windows:

+

+- `benchmark.bat` - To benchmark Pytorch

+- `benchmark-openvino.bat` - To benchmark OpenVINO

+

+Alternatively you can run benchmarks by passing `-b` command line argument in CLI mode.

+<a id="openvino"></a>

+

+## OpenVINO support

+

+Fast SD CPU utilizes [OpenVINO](https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/overview.html) to speed up the inference speed.

+Thanks [deinferno](https://github.com/deinferno) for the OpenVINO model contribution.

+We can get 2x speed improvement when using OpenVINO.

+Thanks [Disty0](https://github.com/Disty0) for the conversion script.

+

+### OpenVINO SDXL models

+

+These are models converted to use directly use it with FastSD CPU. These models are compressed to int8 to reduce the file size (10GB to 4.4 GB) using [NNCF](https://github.com/openvinotoolkit/nncf)

+

+- Hyper-SD SDXL 1 step - [rupeshs/hyper-sd-sdxl-1-step-openvino-int8](https://huggingface.co/rupeshs/hyper-sd-sdxl-1-step-openvino-int8)

+- SDXL Lightning 2 steps - [rupeshs/SDXL-Lightning-2steps-openvino-int8](https://huggingface.co/rupeshs/SDXL-Lightning-2steps-openvino-int8)

+

+### OpenVINO SD Turbo models

+

+We have converted SD/SDXL Turbo models to OpenVINO for fast inference on CPU. These models are intended for research purpose only. Also we converted TAESDXL MODEL to OpenVINO and

+

+- *SD Turbo OpenVINO* - [rupeshs/sd-turbo-openvino](https://huggingface.co/rupeshs/sd-turbo-openvino)

+- *SDXL Turbo OpenVINO int8* - [rupeshs/sdxl-turbo-openvino-int8](https://huggingface.co/rupeshs/sdxl-turbo-openvino-int8)

+- *TAESDXL OpenVINO* - [rupeshs/taesdxl-openvino](https://huggingface.co/rupeshs/taesdxl-openvino)

+

+You can directly use these models in FastSD CPU.

+

+### Convert SD 1.5 models to OpenVINO LCM-LoRA fused models

+

+We first creates LCM-LoRA baked in model,replaces the scheduler with LCM and then converts it into OpenVINO model. For more details check [LCM OpenVINO Converter](https://github.com/rupeshs/lcm-openvino-converter), you can use this tools to convert any StableDiffusion 1.5 fine tuned models to OpenVINO.

+

+<a id="ai-pc-support"></a>

+

+## Intel AI PC support - OpenVINO (CPU, GPU, NPU)

+

+Fast SD now supports AI PC with Intel® Core™ Ultra Processors. [To learn more about AI PC and OpenVINO](https://nolowiz.com/ai-pc-and-openvino-quick-and-simple-guide/).

+

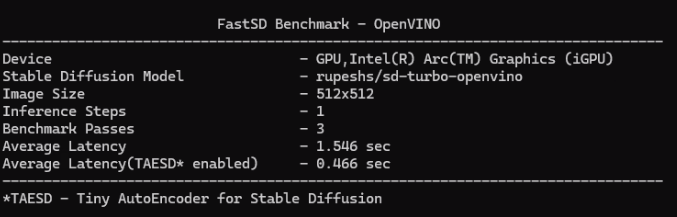

+### GPU

+

+For GPU mode `set device=GPU` and run webui. FastSD GPU benchmark on AI PC as shown below.

+

+

+

+### NPU

+

+FastSD CPU now supports power efficient NPU (Neural Processing Unit) that comes with Intel Core Ultra processors.

+

+FastSD tested with following Intel processor's NPUs:

+

+- Intel Core Ultra Series 1 (Meteor Lake)

+- Intel Core Ultra Series 2 (Lunar Lake)

+

+Currently FastSD support this model for NPU [rupeshs/sd15-lcm-square-openvino-int8](https://huggingface.co/rupeshs/sd15-lcm-square-openvino-int8).

+

+Supports following modes on NPU :

+

+- Text to image

+- Image to image

+- Image variations

+

+To run model in NPU follow these steps (Please make sure that your AI PC's NPU driver is the latest):

+

+- Start webui

+- Select LCM-OpenVINO mode

+- Select the models settings tab and select OpenVINO model `rupeshs/sd15-lcm-square-openvino-int8`

+- Set device envionment variable `set DEVICE=NPU`

+- Now it will run on the NPU

+

+This is heterogeneous computing since text encoder and Unet will use NPU and VAE will use GPU for processing. Thanks to OpenVINO.

+

+Please note that tiny auto encoder will not work in NPU mode.

+

+*Thanks to Intel for providing AI PC dev kit and Tiber cloud access to test FastSD, special thanks to [Pooja Baraskar](https://github.com/Pooja-B),[Dmitriy Pastushenkov](https://github.com/DimaPastushenkov).*

+

+<a id="gguf-support"></a>

+

+## GGUF support - Flux

+

+[GGUF](https://github.com/ggerganov/ggml/blob/master/docs/gguf.md) Flux model supported via [stablediffusion.cpp](https://github.com/leejet/stable-diffusion.cpp) shared library. Currently Flux Schenell model supported.

+

+To use GGUF model use web UI and select GGUF mode.

+

+Tested on Windows and Linux.

+

+:exclamation: Main advantage here we reduced minimum system RAM required for Flux workflow to around __12 GB__.

+

+Supported mode - Text to image

+

+### How to run Flux GGUF model

+

+- Download stablediffusion.cpp prebuilt shared library and place it inside fastsdcpu folder

+ For Windows users, download [stable-diffusion.dll](https://huggingface.co/rupeshs/FastSD-Flux-GGUF/blob/main/stable-diffusion.dll)

+

+ For Linux users download [libstable-diffusion.so](https://huggingface.co/rupeshs/FastSD-Flux-GGUF/blob/main/libstable-diffusion.so)

+

+ You can also build the library manully by following the guide *"Build stablediffusion.cpp shared library for GGUF flux model support"*

+

+- Download __diffusion model__ from [flux1-schnell-q4_0.gguf](https://huggingface.co/rupeshs/FastSD-Flux-GGUF/blob/main/flux1-schnell-q4_0.gguf) and place it inside `models/gguf/diffusion` directory

+- Download __clip model__ from [clip_l_q4_0.gguf](https://huggingface.co/rupeshs/FastSD-Flux-GGUF/blob/main/clip_l_q4_0.gguf) and place it inside `models/gguf/clip` directory

+- Download __T5-XXL model__ from [t5xxl_q4_0.gguf](https://huggingface.co/rupeshs/FastSD-Flux-GGUF/blob/main/t5xxl_q4_0.gguf) and place it inside `models/gguf/t5xxl` directory

+- Download __VAE model__ from [ae.safetensors](https://huggingface.co/black-forest-labs/FLUX.1-schnell/blob/main/ae.safetensors) and place it inside `models/gguf/vae` directory

+- Start web UI and select GGUF mode

+- Select the models settings tab and select GGUF diffusion,clip_l,t5xxl and VAE models.

+- Enter your prompt and generate image

+

+### Build stablediffusion.cpp shared library for GGUF flux model support(Optional)

+

+To build the stablediffusion.cpp library follow these steps

+

+- `git clone https://github.com/leejet/stable-diffusion.cpp`

+- `cd stable-diffusion.cpp`

+- `git pull origin master`

+- `git submodule init`

+- `git submodule update`

+- `git checkout 14206fd48832ab600d9db75f15acb5062ae2c296`

+- `cmake . -DSD_BUILD_SHARED_LIBS=ON`

+- `cmake --build . --config Release`

+- Copy the stablediffusion dll/so file to fastsdcpu folder

+

+<a id="real-time-text-to-image"></a>

+

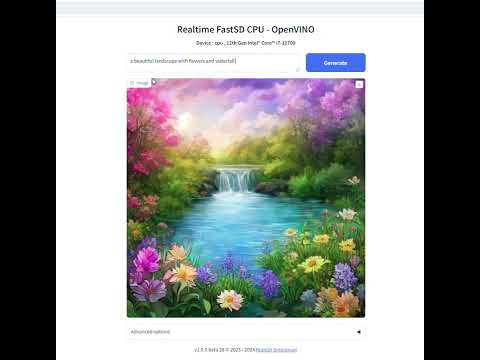

+## Real-time text to image (EXPERIMENTAL)

+

+We can generate real-time text to images using FastSD CPU.

+

+__CPU (OpenVINO)__

+

+Near real-time inference on CPU using OpenVINO, run the `start-realtime.bat` batch file and open the link in browser (Resolution : 512x512,Latency : 0.82s on Intel Core i7)

+

+Watch YouTube video :

+

+[](https://www.youtube.com/watch?v=0XMiLc_vsyI)

+

+## Models

+

+To use single file [Safetensors](https://huggingface.co/docs/safetensors/en/index) SD 1.5 models(Civit AI) follow this [YouTube tutorial](https://www.youtube.com/watch?v=zZTfUZnXJVk). Use LCM-LoRA Mode for single file safetensors.

+

+Fast SD supports LCM models and LCM-LoRA models.

+

+### LCM Models

+

+These models can be configured in `configs/lcm-models.txt` file.

+

+### OpenVINO models

+

+These are LCM-LoRA baked in models. These models can be configured in `configs/openvino-lcm-models.txt` file

+

+### LCM-LoRA models

+

+These models can be configured in `configs/lcm-lora-models.txt` file.

+

+- *lcm-lora-sdv1-5* - distilled consistency adapter for [runwayml/stable-diffusion-v1-5](https://huggingface.co/runwayml/stable-diffusion-v1-5)

+- *lcm-lora-sdxl* - Distilled consistency adapter for [stable-diffusion-xl-base-1.0](https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0)

+- *lcm-lora-ssd-1b* - Distilled consistency adapter for [segmind/SSD-1B](https://huggingface.co/segmind/SSD-1B)

+

+These models are used with Stablediffusion base models `configs/stable-diffusion-models.txt`.

+

+:exclamation: Currently no support for OpenVINO LCM-LoRA models.

+

+### How to add new LCM-LoRA models

+

+To add new model follow the steps:

+For example we will add `wavymulder/collage-diffusion`, you can give Stable diffusion 1.5 Or SDXL,SSD-1B fine tuned models.

+

+1. Open `configs/stable-diffusion-models.txt` file in text editor.

+2. Add the model ID `wavymulder/collage-diffusion` or locally cloned path.

+

+Updated file as shown below :

+

+```Lykon/dreamshaper-8

+Fictiverse/Stable_Diffusion_PaperCut_Model

+stabilityai/stable-diffusion-xl-base-1.0

+runwayml/stable-diffusion-v1-5

+segmind/SSD-1B

+stablediffusionapi/anything-v5

+wavymulder/collage-diffusion

+```

+

+Similarly we can update `configs/lcm-lora-models.txt` file with lcm-lora ID.

+

+### How to use LCM-LoRA models offline

+

+Please follow the steps to run LCM-LoRA models offline :

+

+- In the settings ensure that "Use locally cached model" setting is ticked.

+- Download the model for example `latent-consistency/lcm-lora-sdv1-5`

+Run the following commands:

+

+```

+git lfs install

+git clone https://huggingface.co/latent-consistency/lcm-lora-sdv1-5

+```

+

+Copy the cloned model folder path for example "D:\demo\lcm-lora-sdv1-5" and update the `configs/lcm-lora-models.txt` file as shown below :

+

+```

+D:\demo\lcm-lora-sdv1-5

+latent-consistency/lcm-lora-sdxl

+latent-consistency/lcm-lora-ssd-1b

+```

+

+- Open the app and select the newly added local folder in the combo box menu.

+- That's all!

+<a id="useloramodels"></a>

+

+## How to use Lora models

+

+Place your lora models in "lora_models" folder. Use LCM or LCM-Lora mode.

+You can download lora model (.safetensors/Safetensor) from [Civitai](https://civitai.com/) or [Hugging Face](https://huggingface.co/)

+E.g: [cutecartoonredmond](https://civitai.com/models/207984/cutecartoonredmond-15v-cute-cartoon-lora-for-liberteredmond-sd-15?modelVersionId=234192)

+<a id="usecontrolnet"></a>

+

+## ControlNet support

+

+We can use ControlNet in LCM-LoRA mode.

+

+Download ControlNet models from [ControlNet-v1-1](https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/tree/main).Download and place controlnet models in "controlnet_models" folder.

+

+Use the medium size models (723 MB)(For example : <https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/blob/main/control_v11p_sd15_canny_fp16.safetensors>)

+

+## Installation

+

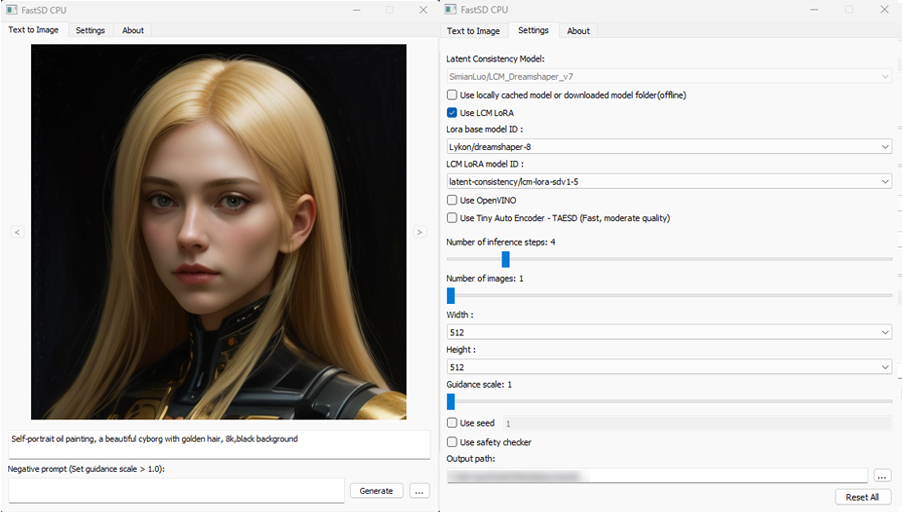

+### FastSD CPU on Windows

+

+

+

+:exclamation:__You must have a working Python installation.(Recommended : Python 3.10 or 3.11 )__

+

+To install FastSD CPU on Windows run the following steps :

+

+- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

+- Double click `install.bat` (It will take some time to install,depending on your internet speed.)

+- You can run in desktop GUI mode or web UI mode.

+

+#### Desktop GUI

+

+- To start desktop GUI double click `start.bat`

+

+#### Web UI

+

+- To start web UI double click `start-webui.bat`

+

+### FastSD CPU on Linux

+

+:exclamation:__Ensure that you have Python 3.9 or 3.10 or 3.11 version installed.__

+

+- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

+- In the terminal, enter into fastsdcpu directory

+- Run the following command

+

+ `chmod +x install.sh`

+

+ `./install.sh`

+

+#### To start Desktop GUI

+

+ `./start.sh`

+

+#### To start Web UI

+

+ `./start-webui.sh`

+

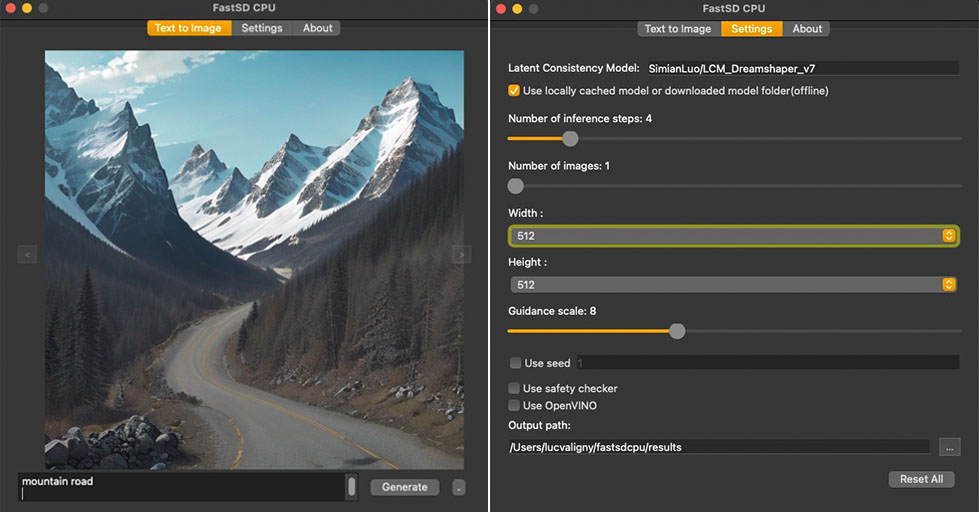

+### FastSD CPU on Mac

+

+

+

+:exclamation:__Ensure that you have Python 3.9 or 3.10 or 3.11 version installed.__

+

+Run the following commands to install FastSD CPU on Mac :

+

+- Clone/download this repo or download [release](https://github.com/rupeshs/fastsdcpu/releases).

+- In the terminal, enter into fastsdcpu directory

+- Run the following command

+

+ `chmod +x install-mac.sh`

+

+ `./install-mac.sh`

+

+#### To start Desktop GUI

+

+ `./start.sh`

+

+#### To start Web UI

+

+ `./start-webui.sh`

+

+Thanks [Autantpourmoi](https://github.com/Autantpourmoi) for Mac testing.

+

+:exclamation:We don't support OpenVINO on Mac (M1/M2/M3 chips, but *does* work on Intel chips).

+

+If you want to increase image generation speed on Mac(M1/M2 chip) try this:

+

+`export DEVICE=mps` and start app `start.sh`

+

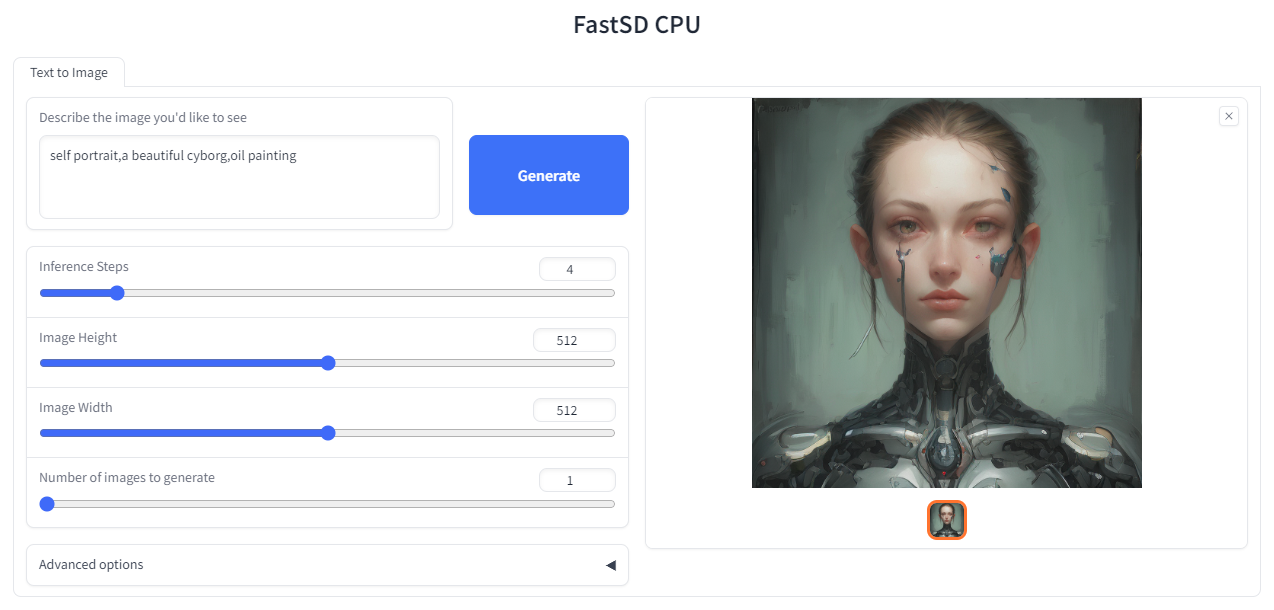

+#### Web UI screenshot

+

+

+

+### Google Colab

+

+Due to the limitation of using CPU/OpenVINO inside colab, we are using GPU with colab.

+[](https://colab.research.google.com/drive/1SuAqskB-_gjWLYNRFENAkIXZ1aoyINqL?usp=sharing)

+

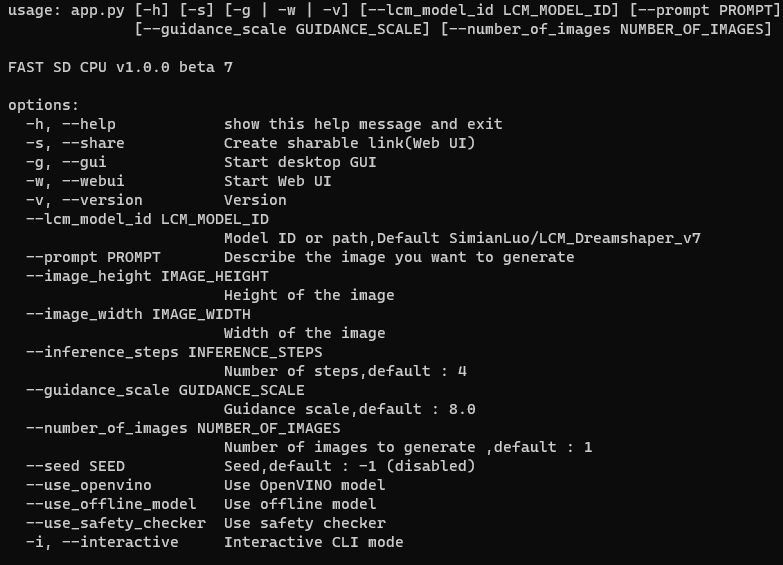

+### CLI mode (Advanced users)

+

+

+

+ Open the terminal and enter into fastsdcpu folder.

+ Activate virtual environment using the command:

+

+##### Windows users

+

+ (Suppose FastSD CPU available in the directory "D:\fastsdcpu")

+ `D:\fastsdcpu\env\Scripts\activate.bat`

+

+##### Linux users

+

+ `source env/bin/activate`

+

+Start CLI `src/app.py -h`

+

+<a id="android"></a>

+

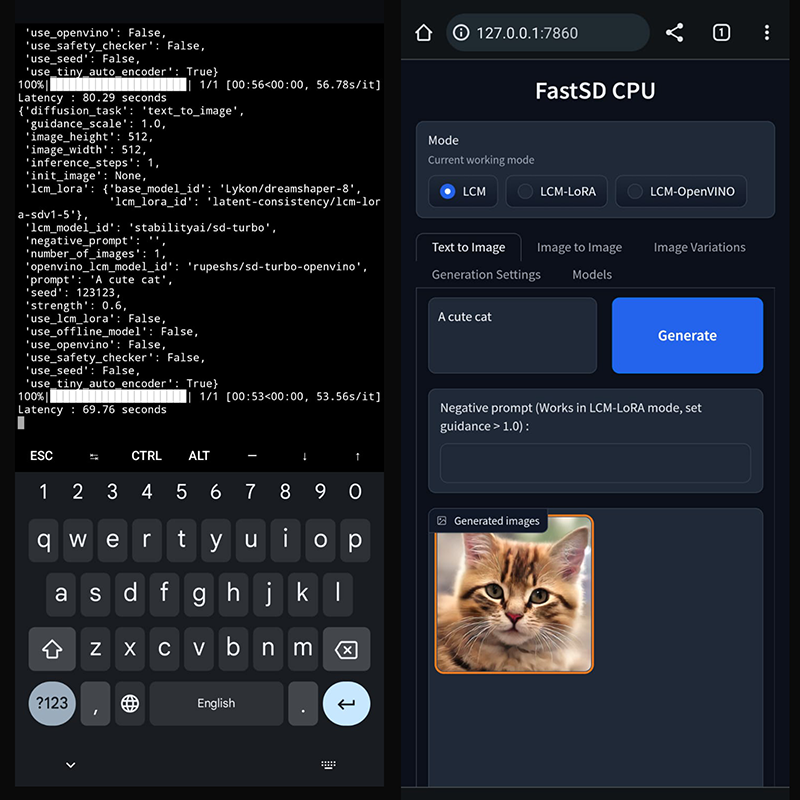

+## Android (Termux + PRoot)

+

+FastSD CPU running on Google Pixel 7 Pro.

+

+

+

+### 1. Prerequisites

+

+First you have to [install Termux](https://wiki.termux.com/wiki/Installing_from_F-Droid) and [install PRoot](https://wiki.termux.com/wiki/PRoot). Then install and login to Ubuntu in PRoot.

+

+### 2. Install FastSD CPU

+

+Run the following command to install without Qt GUI.

+

+ `proot-distro login ubuntu`

+

+ `./install.sh --disable-gui`

+

+ After the installation you can use WebUi.

+

+ `./start-webui.sh`

+

+ Note : If you get `libgl.so.1` import error run `apt-get install ffmpeg`.

+

+ Thanks [patienx](https://github.com/patientx) for this guide [Step by step guide to installing FASTSDCPU on ANDROID](https://github.com/rupeshs/fastsdcpu/discussions/123)

+

+Another step by step guide to run FastSD on Android is [here](https://nolowiz.com/how-to-install-and-run-fastsd-cpu-on-android-temux-step-by-step-guide/)

+

+<a id="raspberry"></a>

+

+## Raspberry PI 4 support

+

+Thanks [WGNW_MGM] for Raspberry PI 4 testing.FastSD CPU worked without problems.

+System configuration - Raspberry Pi 4 with 4GB RAM, 8GB of SWAP memory.

+

+<a id="orangepi"></a>

+

+## Orange Pi 5 support

+

+Thanks [khanumballz](https://github.com/khanumballz) for testing FastSD CPU with Orange PI 5.

+[Here is a video of FastSD CPU running on Orange Pi 5](https://www.youtube.com/watch?v=KEJiCU0aK8o).

+

+<a id="apisupport"></a>

+

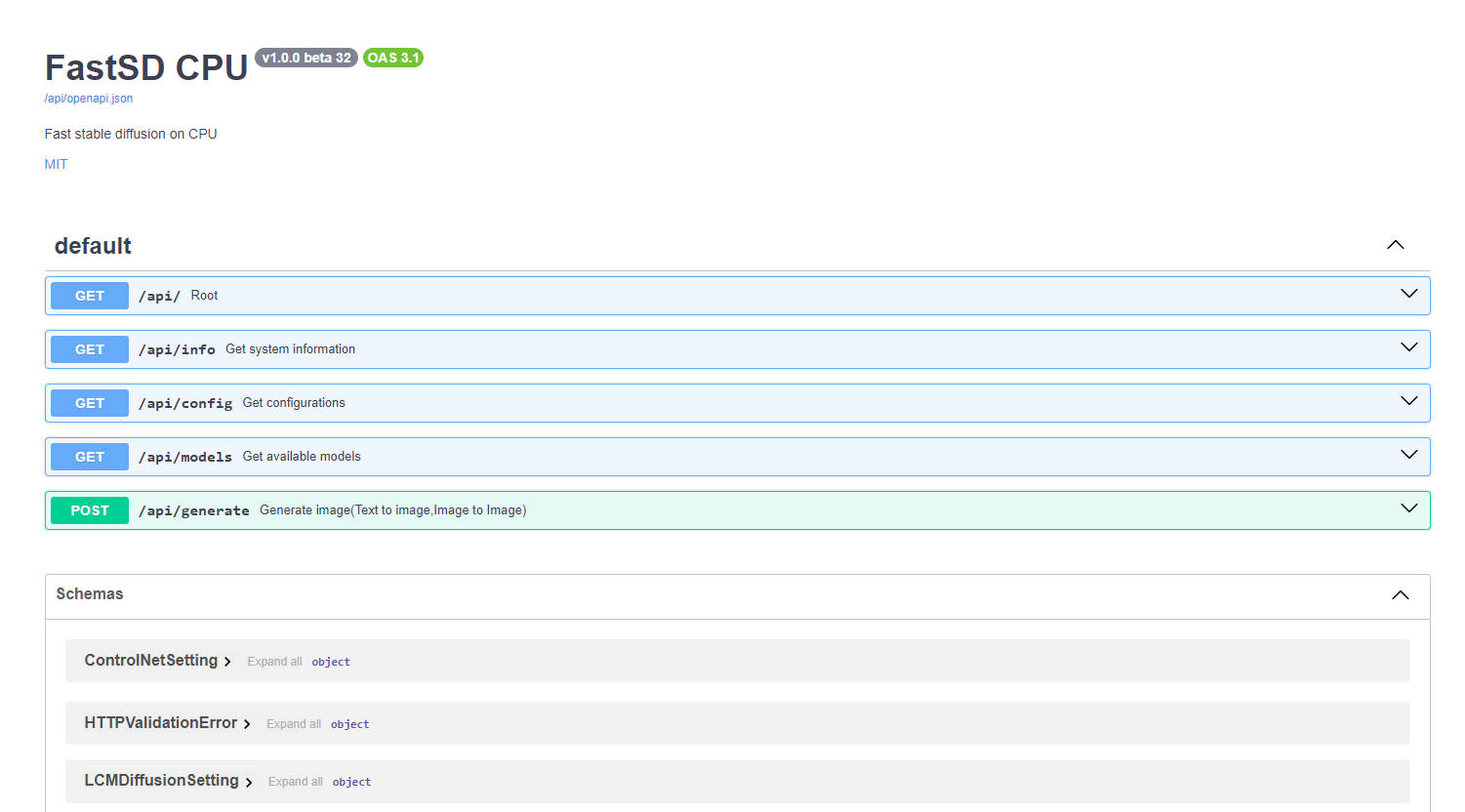

+## API support

+

+

+

+FastSD CPU supports basic API endpoints. Following API endpoints are available :

+

+- /api/info - To get system information

+- /api/config - Get configuration

+- /api/models - List all available models

+- /api/generate - Generate images (Text to image,image to image)

+

+To start FastAPI in webserver mode run:

+``python src/app.py --api``

+

+or use `start-webserver.sh` for Linux and `start-webserver.bat` for Windows.

+

+Access API documentation locally at <http://localhost:8000/api/docs> .

+

+Generated image is JPEG image encoded as base64 string.

+In the image-to-image mode input image should be encoded as base64 string.

+

+To generate an image a minimal request `POST /api/generate` with body :

+

+```

+{

+ "prompt": "a cute cat",

+ "use_openvino": true

+}

+```

+

+## Known issues

+

+- TAESD will not work with OpenVINO image to image workflow

+

+## License

+

+The fastsdcpu project is available as open source under the terms of the [MIT license](https://github.com/rupeshs/fastsdcpu/blob/main/LICENSE)

+

+## Disclaimer

+

+Users are granted the freedom to create images using this tool, but they are obligated to comply with local laws and utilize it responsibly. The developers will not assume any responsibility for potential misuse by users.

+

+<a id="contributors"></a>

+

+## Thanks to all our contributors

+

+Original Author & Maintainer - [Rupesh Sreeraman](https://github.com/rupeshs)

+

+We thank all contributors for their time and hard work!

+

+<a href="https://github.com/rupeshs/fastsdcpu/graphs/contributors">

+ <img src="https://contrib.rocks/image?repo=rupeshs/fastsdcpu" />

+</a>

diff --git a/THIRD-PARTY-LICENSES b/THIRD-PARTY-LICENSES

new file mode 100644

index 0000000000000000000000000000000000000000..9fb29b1d3d52582f6047a41d8f5622de43d24868

--- /dev/null

+++ b/THIRD-PARTY-LICENSES

@@ -0,0 +1,143 @@

+stablediffusion.cpp - MIT

+

+OpenVINO stablediffusion engine - Apache 2

+

+SD Turbo - STABILITY AI NON-COMMERCIAL RESEARCH COMMUNITY LICENSE AGREEMENT

+

+MIT License

+

+Copyright (c) 2023 leejet

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

+

+ERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+Definitions.

+

+"License" shall mean the terms and conditions for use, reproduction, and distribution as defined by Sections 1 through 9 of this document.

+

+"Licensor" shall mean the copyright owner or entity authorized by the copyright owner that is granting the License.

+

+"Legal Entity" shall mean the union of the acting entity and all other entities that control, are controlled by, or are under common control with that entity. For the purposes of this definition, "control" means (i) the power, direct or indirect, to cause the direction or management of such entity, whether by contract or otherwise, or (ii) ownership of fifty percent (50%) or more of the outstanding shares, or (iii) beneficial ownership of such entity.

+

+"You" (or "Your") shall mean an individual or Legal Entity exercising permissions granted by this License.

+

+"Source" form shall mean the preferred form for making modifications, including but not limited to software source code, documentation source, and configuration files.

+

+"Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation, and conversions to other media types.

+

+"Work" shall mean the work of authorship, whether in Source or Object form, made available under the License, as indicated by a copyright notice that is included in or attached to the work (an example is provided in the Appendix below).

+

+"Derivative Works" shall mean any work, whether in Source or Object form, that is based on (or derived from) the Work and for which the editorial revisions, annotations, elaborations, or other modifications represent, as a whole, an original work of authorship. For the purposes of this License, Derivative Works shall not include works that remain separable from, or merely link (or bind by name) to the interfaces of, the Work and Derivative Works thereof.

+

+"Contribution" shall mean any work of authorship, including the original version of the Work and any modifications or additions to that Work or Derivative Works thereof, that is intentionally submitted to Licensor for inclusion in the Work by the copyright owner or by an individual or Legal Entity authorized to submit on behalf of the copyright owner. For the purposes of this definition, "submitted" means any form of electronic, verbal, or written communication sent to the Licensor or its representatives, including but not limited to communication on electronic mailing lists, source code control systems, and issue tracking systems that are managed by, or on behalf of, the Licensor for the purpose of discussing and improving the Work, but excluding communication that is conspicuously marked or otherwise designated in writing by the copyright owner as "Not a Contribution."

+

+"Contributor" shall mean Licensor and any individual or Legal Entity on behalf of whom a Contribution has been received by Licensor and subsequently incorporated within the Work.

+

+Grant of Copyright License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license to reproduce, prepare Derivative Works of, publicly display, publicly perform, sublicense, and distribute the Work and such Derivative Works in Source or Object form.

+

+Grant of Patent License. Subject to the terms and conditions of this License, each Contributor hereby grants to You a perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable (except as stated in this section) patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer the Work, where such license applies only to those patent claims licensable by such Contributor that are necessarily infringed by their Contribution(s) alone or by combination of their Contribution(s) with the Work to which such Contribution(s) was submitted. If You institute patent litigation against any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the Work or a Contribution incorporated within the Work constitutes direct or contributory patent infringement, then any patent licenses granted to You under this License for that Work shall terminate as of the date such litigation is filed.

+

+Redistribution. You may reproduce and distribute copies of the Work or Derivative Works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions:

+

+(a) You must give any other recipients of the Work or Derivative Works a copy of this License; and

+

+(b) You must cause any modified files to carry prominent notices stating that You changed the files; and

+

+(c) You must retain, in the Source form of any Derivative Works that You distribute, all copyright, patent, trademark, and attribution notices from the Source form of the Work, excluding those notices that do not pertain to any part of the Derivative Works; and

+

+(d) If the Work includes a "NOTICE" text file as part of its distribution, then any Derivative Works that You distribute must include a readable copy of the attribution notices contained within such NOTICE file, excluding those notices that do not pertain to any part of the Derivative Works, in at least one of the following places: within a NOTICE text file distributed as part of the Derivative Works; within the Source form or documentation, if provided along with the Derivative Works; or, within a display generated by the Derivative Works, if and wherever such third-party notices normally appear. The contents of the NOTICE file are for informational purposes only and do not modify the License. You may add Your own attribution notices within Derivative Works that You distribute, alongside or as an addendum to the NOTICE text from the Work, provided that such additional attribution notices cannot be construed as modifying the License.

+

+You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such Derivative Works as a whole, provided Your use, reproduction, and distribution of the Work otherwise complies with the conditions stated in this License.

+

+Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

+

+Trademarks. This License does not grant permission to use the trade names, trademarks, service marks, or product names of the Licensor, except as required for reasonable and customary use in describing the origin of the Work and reproducing the content of the NOTICE file.

+

+Disclaimer of Warranty. Unless required by applicable law or agreed to in writing, Licensor provides the Work (and each Contributor provides its Contributions) on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied, including, without limitation, any warranties or conditions of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A PARTICULAR PURPOSE. You are solely responsible for determining the appropriateness of using or redistributing the Work and assume any risks associated with Your exercise of permissions under this License.

+

+Limitation of Liability. In no event and under no legal theory, whether in tort (including negligence), contract, or otherwise, unless required by applicable law (such as deliberate and grossly negligent acts) or agreed to in writing, shall any Contributor be liable to You for damages, including any direct, indirect, special, incidental, or consequential damages of any character arising as a result of this License or out of the use or inability to use the Work (including but not limited to damages for loss of goodwill, work stoppage, computer failure or malfunction, or any and all other commercial damages or losses), even if such Contributor has been advised of the possibility of such damages.

+

+Accepting Warranty or Additional Liability. While redistributing the Work or Derivative Works thereof, You may choose to offer, and charge a fee for, acceptance of support, warranty, indemnity, or other liability obligations and/or rights consistent with this License. However, in accepting such obligations, You may act only on Your own behalf and on Your sole responsibility, not on behalf of any other Contributor, and only if You agree to indemnify, defend, and hold each Contributor harmless for any liability incurred by, or claims asserted against, such Contributor by reason of your accepting any such warranty or additional liability.

+

+END OF TERMS AND CONDITIONS

+

+APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+Copyright [yyyy] [name of copyright owner]

+

+Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

+

+ <http://www.apache.org/licenses/LICENSE-2.0>

+Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

+

+STABILITY AI NON-COMMERCIAL RESEARCH COMMUNITY LICENSE AGREEMENT

+Dated: November 28, 2023

+

+By using or distributing any portion or element of the Models, Software, Software Products or Derivative Works, you agree to be bound by this Agreement.

+

+"Agreement" means this Stable Non-Commercial Research Community License Agreement.

+

+“AUP” means the Stability AI Acceptable Use Policy available at <https://stability.ai/use-policy>, as may be updated from time to time.

+

+"Derivative Work(s)” means (a) any derivative work of the Software Products as recognized by U.S. copyright laws and (b) any modifications to a Model, and any other model created which is based on or derived from the Model or the Model’s output. For clarity, Derivative Works do not include the output of any Model.

+

+“Documentation” means any specifications, manuals, documentation, and other written information provided by Stability AI related to the Software.

+

+"Licensee" or "you" means you, or your employer or any other person or entity (if you are entering into this Agreement on such person or entity's behalf), of the age required under applicable laws, rules or regulations to provide legal consent and that has legal authority to bind your employer or such other person or entity if you are entering in this Agreement on their behalf.

+

+“Model(s)" means, collectively, Stability AI’s proprietary models and algorithms, including machine-learning models, trained model weights and other elements of the foregoing, made available under this Agreement.

+

+“Non-Commercial Uses” means exercising any of the rights granted herein for the purpose of research or non-commercial purposes. Non-Commercial Uses does not include any production use of the Software Products or any Derivative Works.

+

+"Stability AI" or "we" means Stability AI Ltd. and its affiliates.

+

+"Software" means Stability AI’s proprietary software made available under this Agreement.

+

+“Software Products” means the Models, Software and Documentation, individually or in any combination.

+

+1. License Rights and Redistribution.

+

+a. Subject to your compliance with this Agreement, the AUP (which is hereby incorporated herein by reference), and the Documentation, Stability AI grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty free and limited license under Stability AI’s intellectual property or other rights owned or controlled by Stability AI embodied in the Software Products to use, reproduce, distribute, and create Derivative Works of, the Software Products, in each case for Non-Commercial Uses only.

+

+b. You may not use the Software Products or Derivative Works to enable third parties to use the Software Products or Derivative Works as part of your hosted service or via your APIs, whether you are adding substantial additional functionality thereto or not. Merely distributing the Software Products or Derivative Works for download online without offering any related service (ex. by distributing the Models on HuggingFace) is not a violation of this subsection. If you wish to use the Software Products or any Derivative Works for commercial or production use or you wish to make the Software Products or any Derivative Works available to third parties via your hosted service or your APIs, contact Stability AI at <https://stability.ai/contact>.

+

+c. If you distribute or make the Software Products, or any Derivative Works thereof, available to a third party, the Software Products, Derivative Works, or any portion thereof, respectively, will remain subject to this Agreement and you must (i) provide a copy of this Agreement to such third party, and (ii) retain the following attribution notice within a "Notice" text file distributed as a part of such copies: "This Stability AI Model is licensed under the Stability AI Non-Commercial Research Community License, Copyright (c) Stability AI Ltd. All Rights Reserved.” If you create a Derivative Work of a Software Product, you may add your own attribution notices to the Notice file included with the Software Product, provided that you clearly indicate which attributions apply to the Software Product and you must state in the NOTICE file that you changed the Software Product and how it was modified.

+

+2. Disclaimer of Warranty. UNLESS REQUIRED BY APPLICABLE LAW, THE SOFTWARE PRODUCTS AND ANY OUTPUT AND RESULTS THERE FROM ARE PROVIDED ON AN "AS IS" BASIS, WITHOUT WARRANTIES OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE FOR DETERMINING THE APPROPRIATENESS OF USING OR REDISTRIBUTING THE SOFTWARE PRODUCTS, DERIVATIVE WORKS OR ANY OUTPUT OR RESULTS AND ASSUME ANY RISKS ASSOCIATED WITH YOUR USE OF THE SOFTWARE PRODUCTS, DERIVATIVE WORKS AND ANY OUTPUT AND RESULTS.

+

+3. Limitation of Liability. IN NO EVENT WILL STABILITY AI OR ITS AFFILIATES BE LIABLE UNDER ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, TORT, NEGLIGENCE, PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT, FOR ANY LOST PROFITS OR ANY DIRECT, INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR PUNITIVE DAMAGES, EVEN IF STABILITY AI OR ITS AFFILIATES HAVE BEEN ADVISED OF THE POSSIBILITY OF ANY OF THE FOREGOING.

+

+4. Intellectual Property.

+

+a. No trademark licenses are granted under this Agreement, and in connection with the Software Products or Derivative Works, neither Stability AI nor Licensee may use any name or mark owned by or associated with the other or any of its affiliates, except as required for reasonable and customary use in describing and redistributing the Software Products or Derivative Works.

+

+b. Subject to Stability AI’s ownership of the Software Products and Derivative Works made by or for Stability AI, with respect to any Derivative Works that are made by you, as between you and Stability AI, you are and will be the owner of such Derivative Works

+

+c. If you institute litigation or other proceedings against Stability AI (including a cross-claim or counterclaim in a lawsuit) alleging that the Software Products, Derivative Works or associated outputs or results, or any portion of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable by you, then any licenses granted to you under this Agreement shall terminate as of the date such litigation or claim is filed or instituted. You will indemnify and hold harmless Stability AI from and against any claim by any third party arising out of or related to your use or distribution of the Software Products or Derivative Works in violation of this Agreement.

+

+5. Term and Termination. The term of this Agreement will commence upon your acceptance of this Agreement or access to the Software Products and will continue in full force and effect until terminated in accordance with the terms and conditions herein. Stability AI may terminate this Agreement if you are in breach of any term or condition of this Agreement. Upon termination of this Agreement, you shall delete and cease use of any Software Products or Derivative Works. Sections 2-4 shall survive the termination of this Agreement.

diff --git a/benchmark-openvino.bat b/benchmark-openvino.bat

new file mode 100644

index 0000000000000000000000000000000000000000..c42dd4b3dfda63bca515f23a788ee5f417dbb2e4

--- /dev/null

+++ b/benchmark-openvino.bat

@@ -0,0 +1,23 @@

+@echo off

+setlocal

+

+set "PYTHON_COMMAND=python"

+

+call python --version > nul 2>&1

+if %errorlevel% equ 0 (

+ echo Python command check :OK

+) else (

+ echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"

+ pause

+ exit /b 1

+

+)

+

+:check_python_version

+for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (

+ set "python_version=%%I"

+)

+

+echo Python version: %python_version%

+

+call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b --use_openvino --openvino_lcm_model_id "rupeshs/sd-turbo-openvino"

\ No newline at end of file

diff --git a/benchmark.bat b/benchmark.bat

new file mode 100644

index 0000000000000000000000000000000000000000..97e8d9da773696f2ce57e69572fa9973977b3f7e

--- /dev/null

+++ b/benchmark.bat

@@ -0,0 +1,23 @@

+@echo off

+setlocal

+

+set "PYTHON_COMMAND=python"

+

+call python --version > nul 2>&1

+if %errorlevel% equ 0 (

+ echo Python command check :OK

+) else (

+ echo "Error: Python command not found, please install Python (Recommended : Python 3.10 or Python 3.11) and try again"

+ pause

+ exit /b 1

+

+)

+

+:check_python_version

+for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (

+ set "python_version=%%I"

+)

+

+echo Python version: %python_version%

+

+call "%~dp0env\Scripts\activate.bat" && %PYTHON_COMMAND% src/app.py -b

\ No newline at end of file

diff --git a/configs/lcm-lora-models.txt b/configs/lcm-lora-models.txt

new file mode 100644

index 0000000000000000000000000000000000000000..f252571ecfc0936d6374e83c3cdfd2f87508ff69

--- /dev/null

+++ b/configs/lcm-lora-models.txt

@@ -0,0 +1,4 @@

+latent-consistency/lcm-lora-sdv1-5

+latent-consistency/lcm-lora-sdxl

+latent-consistency/lcm-lora-ssd-1b

+rupeshs/hypersd-sd1-5-1-step-lora

\ No newline at end of file

diff --git a/configs/lcm-models.txt b/configs/lcm-models.txt

new file mode 100644

index 0000000000000000000000000000000000000000..9721ed6f43a6ccc00d3cd456d44f6632674e359c

--- /dev/null

+++ b/configs/lcm-models.txt

@@ -0,0 +1,8 @@

+stabilityai/sd-turbo

+rupeshs/sdxs-512-0.9-orig-vae

+rupeshs/hyper-sd-sdxl-1-step

+rupeshs/SDXL-Lightning-2steps

+stabilityai/sdxl-turbo

+SimianLuo/LCM_Dreamshaper_v7

+latent-consistency/lcm-sdxl

+latent-consistency/lcm-ssd-1b

\ No newline at end of file

diff --git a/configs/openvino-lcm-models.txt b/configs/openvino-lcm-models.txt

new file mode 100644

index 0000000000000000000000000000000000000000..656096d1bbabe6472a65869773b14a6c5bb9ec62

--- /dev/null

+++ b/configs/openvino-lcm-models.txt

@@ -0,0 +1,9 @@

+rupeshs/sd-turbo-openvino

+rupeshs/sdxs-512-0.9-openvino

+rupeshs/hyper-sd-sdxl-1-step-openvino-int8

+rupeshs/SDXL-Lightning-2steps-openvino-int8

+rupeshs/sdxl-turbo-openvino-int8

+rupeshs/LCM-dreamshaper-v7-openvino

+Disty0/LCM_SoteMix

+rupeshs/FLUX.1-schnell-openvino-int4

+rupeshs/sd15-lcm-square-openvino-int8

\ No newline at end of file

diff --git a/configs/stable-diffusion-models.txt b/configs/stable-diffusion-models.txt

new file mode 100644

index 0000000000000000000000000000000000000000..d5d21c9c5e64bb55c642243d27c230f04c6aab58

--- /dev/null

+++ b/configs/stable-diffusion-models.txt

@@ -0,0 +1,7 @@

+Lykon/dreamshaper-8

+Fictiverse/Stable_Diffusion_PaperCut_Model

+stabilityai/stable-diffusion-xl-base-1.0

+runwayml/stable-diffusion-v1-5

+segmind/SSD-1B

+stablediffusionapi/anything-v5

+prompthero/openjourney-v4

\ No newline at end of file

diff --git a/controlnet_models/Readme.txt b/controlnet_models/Readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..fdf39a3d9ae7982663447fff8c0b38edcd91353c

--- /dev/null

+++ b/controlnet_models/Readme.txt

@@ -0,0 +1,3 @@

+Place your ControlNet models in this folder.

+You can download controlnet model (.safetensors) from https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/tree/main

+E.g: https://huggingface.co/comfyanonymous/ControlNet-v1-1_fp16_safetensors/blob/main/control_v11p_sd15_canny_fp16.safetensors

\ No newline at end of file

diff --git a/docs/images/2steps-inference.jpg b/docs/images/2steps-inference.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..42a00185b1594bed3d19846a04898d5074f049c8

Binary files /dev/null and b/docs/images/2steps-inference.jpg differ

diff --git a/docs/images/ARCGPU.png b/docs/images/ARCGPU.png

new file mode 100644

index 0000000000000000000000000000000000000000..1abdaceefdbbf4454f5785930bbdfaa5c2424629

Binary files /dev/null and b/docs/images/ARCGPU.png differ

diff --git a/docs/images/fastcpu-cli.png b/docs/images/fastcpu-cli.png

new file mode 100644

index 0000000000000000000000000000000000000000..41592e190a16115fe59a63b40022cad4f36a5f93

Binary files /dev/null and b/docs/images/fastcpu-cli.png differ

diff --git a/docs/images/fastcpu-webui.png b/docs/images/fastcpu-webui.png

new file mode 100644

index 0000000000000000000000000000000000000000..8fbaae6d761978fab13c45d535235fed3878ce2a

--- /dev/null

+++ b/docs/images/fastcpu-webui.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:d26b4e4bc41d515a730b757a6a984724aa59497235bf771b25e5a7d9c2d5680f

+size 263249

diff --git a/docs/images/fastsdcpu-android-termux-pixel7.png b/docs/images/fastsdcpu-android-termux-pixel7.png

new file mode 100644

index 0000000000000000000000000000000000000000..da4845a340ec3331f7e552558ece96c1968ac68a

--- /dev/null

+++ b/docs/images/fastsdcpu-android-termux-pixel7.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:0e18187cb43b8e905971fd607e6640a66c2877d4dc135647425f3cb66d7f22ae

+size 298843

diff --git a/docs/images/fastsdcpu-api.png b/docs/images/fastsdcpu-api.png

new file mode 100644

index 0000000000000000000000000000000000000000..639aa5da86c4d136351e2fda43745ef55c4b9314

Binary files /dev/null and b/docs/images/fastsdcpu-api.png differ

diff --git a/docs/images/fastsdcpu-gui.jpg b/docs/images/fastsdcpu-gui.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..698517415079debc82a55c4180f2472a3ed74679

--- /dev/null

+++ b/docs/images/fastsdcpu-gui.jpg

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:03c1fe3b5ea4dfcc25654c4fc76fc392a59bdf668b1c45e5e6fc14edcf2fac5c

+size 207258

diff --git a/docs/images/fastsdcpu-mac-gui.jpg b/docs/images/fastsdcpu-mac-gui.jpg

new file mode 100644

index 0000000000000000000000000000000000000000..098e4d189d198072079cae811b6c4f18e869b02a

Binary files /dev/null and b/docs/images/fastsdcpu-mac-gui.jpg differ

diff --git a/docs/images/fastsdcpu-screenshot.png b/docs/images/fastsdcpu-screenshot.png

new file mode 100644

index 0000000000000000000000000000000000000000..4cfe1931059b4bf6c6218521883e218fd9a698c6

--- /dev/null

+++ b/docs/images/fastsdcpu-screenshot.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:3729a8a87629800c63ca98ed36c1a48c7c0bc64c02a144e396aca05e7c529ee6

+size 293249

diff --git a/docs/images/fastsdcpu-webui.png b/docs/images/fastsdcpu-webui.png

new file mode 100644

index 0000000000000000000000000000000000000000..d5528d7a934cfcfbbf67c4598bd7506eadeae0d4

--- /dev/null

+++ b/docs/images/fastsdcpu-webui.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:b1378a52fab340ad566e69074f93af40a82f92afb29d8a3b27cbe218b5ee4bff

+size 380162

diff --git a/docs/images/fastsdcpu_flux_on_cpu.png b/docs/images/fastsdcpu_flux_on_cpu.png

new file mode 100644

index 0000000000000000000000000000000000000000..53dc5ff72f7c442284133cb66e68a7bf665e4586

--- /dev/null

+++ b/docs/images/fastsdcpu_flux_on_cpu.png

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:5e42851d654dc88a75e479cb6dcf25bc1e7a7463f7c2eb2a98ec77e2b3c74e06

+size 383111

diff --git a/install-mac.sh b/install-mac.sh

new file mode 100644

index 0000000000000000000000000000000000000000..a9456397f2a826da7ed79835ba25f585e1da2d24

--- /dev/null

+++ b/install-mac.sh

@@ -0,0 +1,31 @@

+#!/usr/bin/env bash

+echo Starting FastSD CPU env installation...

+set -e

+PYTHON_COMMAND="python3"

+

+if ! command -v python3 &>/dev/null; then

+ if ! command -v python &>/dev/null; then

+ echo "Error: Python not found, please install python 3.8 or higher and try again"

+ exit 1

+ fi

+fi

+

+if command -v python &>/dev/null; then

+ PYTHON_COMMAND="python"

+fi

+

+echo "Found $PYTHON_COMMAND command"

+

+python_version=$($PYTHON_COMMAND --version 2>&1 | awk '{print $2}')

+echo "Python version : $python_version"

+

+BASEDIR=$(pwd)

+

+$PYTHON_COMMAND -m venv "$BASEDIR/env"

+# shellcheck disable=SC1091

+source "$BASEDIR/env/bin/activate"

+pip install torch

+pip install -r "$BASEDIR/requirements.txt"

+chmod +x "start.sh"

+chmod +x "start-webui.sh"

+read -n1 -r -p "FastSD CPU installation completed,press any key to continue..." key

\ No newline at end of file

diff --git a/install.bat b/install.bat

new file mode 100644

index 0000000000000000000000000000000000000000..b05db0bf251352685c046c107a82e28e2f08c7cf

--- /dev/null

+++ b/install.bat

@@ -0,0 +1,29 @@

+

+@echo off

+setlocal

+echo Starting FastSD CPU env installation...

+

+set "PYTHON_COMMAND=python"

+

+call python --version > nul 2>&1

+if %errorlevel% equ 0 (

+ echo Python command check :OK

+) else (

+ echo "Error: Python command not found,please install Python(Recommended : Python 3.10 or Python 3.11) and try again."

+ pause

+ exit /b 1

+

+)

+

+:check_python_version

+for /f "tokens=2" %%I in ('%PYTHON_COMMAND% --version 2^>^&1') do (

+ set "python_version=%%I"

+)

+

+echo Python version: %python_version%

+

+%PYTHON_COMMAND% -m venv "%~dp0env"

+call "%~dp0env\Scripts\activate.bat" && pip install torch==2.2.2 --index-url https://download.pytorch.org/whl/cpu

+call "%~dp0env\Scripts\activate.bat" && pip install -r "%~dp0requirements.txt"

+echo FastSD CPU env installation completed.

+pause

\ No newline at end of file

diff --git a/install.sh b/install.sh

new file mode 100644

index 0000000000000000000000000000000000000000..3ed8d5c889ba1e8dec7c62ba7d80719c9f0fc5f8

--- /dev/null

+++ b/install.sh

@@ -0,0 +1,28 @@

+#!/usr/bin/env bash

+echo Starting FastSD CPU env installation...

+set -e

+PYTHON_COMMAND="python3"

+

+if ! command -v python3 &>/dev/null; then

+ if ! command -v python &>/dev/null; then

+ echo "Error: Python not found, please install python 3.8 or higher and try again"

+ exit 1

+ fi

+fi

+

+if command -v python &>/dev/null; then

+ PYTHON_COMMAND="python"

+fi

+

+echo "Found $PYTHON_COMMAND command"

+

+python_version=$($PYTHON_COMMAND --version 2>&1 | awk '{print $2}')

+echo "Python version : $python_version"

+

+BASEDIR=$(pwd)

+

+pip install torch==2.2.2 --index-url https://download.pytorch.org/whl/cpu

+pip install -r "$BASEDIR/requirements.txt"

+

+chmod +x "start.sh"

+chmod +x "start-webui.sh"

diff --git a/lora_models/HoloEnV2.safetensors b/lora_models/HoloEnV2.safetensors

new file mode 100644

index 0000000000000000000000000000000000000000..8e90d7a03c5dbed1156e1b4b7ea6065f4078ca4b

--- /dev/null

+++ b/lora_models/HoloEnV2.safetensors

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:b30945096a4f2ff4a52fcafaa8481c6a5d52073be323674a16b189ceb66d39a6

+size 151111711

diff --git a/lora_models/Readme.txt b/lora_models/Readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..e4748a642837930fdcb903e42273ffaf5ccda888

--- /dev/null

+++ b/lora_models/Readme.txt

@@ -0,0 +1,3 @@

+Place your lora models in this folder.

+You can download lora model (.safetensors/Safetensor) from Civitai (https://civitai.com/) or Hugging Face(https://huggingface.co/)

+E.g: https://civitai.com/models/207984/cutecartoonredmond-15v-cute-cartoon-lora-for-liberteredmond-sd-15?modelVersionId=234192

\ No newline at end of file

diff --git a/models/gguf/clip/readme.txt b/models/gguf/clip/readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..48b307e2be7fbaf7cce08d578e8c8e7e6037936f

--- /dev/null

+++ b/models/gguf/clip/readme.txt

@@ -0,0 +1 @@

+Place CLIP model files here"

diff --git a/models/gguf/diffusion/readme.txt b/models/gguf/diffusion/readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..4625665b369085b5941d284b63b42afd3c22629a

--- /dev/null

+++ b/models/gguf/diffusion/readme.txt

@@ -0,0 +1 @@

+Place your diffusion gguf model files here

diff --git a/models/gguf/t5xxl/readme.txt b/models/gguf/t5xxl/readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..e9c586e46a0201cef38fc5a759a730eab48086d0

--- /dev/null

+++ b/models/gguf/t5xxl/readme.txt

@@ -0,0 +1 @@

+Place T5-XXL model files here

diff --git a/models/gguf/vae/readme.txt b/models/gguf/vae/readme.txt

new file mode 100644

index 0000000000000000000000000000000000000000..15c39ec7f9d9ae275f6bceab50acd6bb9cf83714

--- /dev/null

+++ b/models/gguf/vae/readme.txt

@@ -0,0 +1 @@

+Place VAE model files here

diff --git a/requirements.txt b/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..8d94aac19c006ba7578538673f658d951cc56d50

--- /dev/null

+++ b/requirements.txt

@@ -0,0 +1,18 @@

+accelerate==0.33.0

+diffusers==0.30.0

+transformers==4.41.2

+Pillow==9.4.0

+openvino==2024.4.0

+optimum-intel==1.18.2

+onnx==1.16.0

+onnxruntime==1.17.3

+pydantic==2.4.2

+typing-extensions==4.8.0

+pyyaml==6.0.1

+gradio==5.6.0

+peft==0.6.1

+opencv-python==4.8.1.78

+omegaconf==2.3.0

+controlnet-aux==0.0.7

+mediapipe==0.10.9

+tomesd==0.1.3

\ No newline at end of file

diff --git a/src/__init__.py b/src/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/src/app.py b/src/app.py

new file mode 100644

index 0000000000000000000000000000000000000000..dfa966c22b685592345c187070763252e0a32702

--- /dev/null

+++ b/src/app.py

@@ -0,0 +1,535 @@

+import json

+from argparse import ArgumentParser

+

+import constants

+from backend.controlnet import controlnet_settings_from_dict

+from backend.models.gen_images import ImageFormat

+from backend.models.lcmdiffusion_setting import DiffusionTask

+from backend.upscale.tiled_upscale import generate_upscaled_image

+from constants import APP_VERSION, DEVICE

+from frontend.webui.image_variations_ui import generate_image_variations

+from models.interface_types import InterfaceType

+from paths import FastStableDiffusionPaths

+from PIL import Image

+from state import get_context, get_settings

+from utils import show_system_info

+from backend.device import get_device_name

+

+parser = ArgumentParser(description=f"FAST SD CPU {constants.APP_VERSION}")

+parser.add_argument(

+ "-s",

+ "--share",

+ action="store_true",

+ help="Create sharable link(Web UI)",

+ required=False,

+)

+group = parser.add_mutually_exclusive_group(required=False)

+group.add_argument(

+ "-g",

+ "--gui",

+ action="store_true",

+ help="Start desktop GUI",

+)

+group.add_argument(

+ "-w",

+ "--webui",

+ action="store_true",

+ help="Start Web UI",

+)

+group.add_argument(

+ "-a",

+ "--api",

+ action="store_true",

+ help="Start Web API server",

+)

+group.add_argument(

+ "-r",

+ "--realtime",

+ action="store_true",

+ help="Start realtime inference UI(experimental)",

+)

+group.add_argument(

+ "-v",

+ "--version",

+ action="store_true",

+ help="Version",

+)

+

+parser.add_argument(

+ "-b",

+ "--benchmark",

+ action="store_true",

+ help="Run inference benchmark on the selected device",

+)

+parser.add_argument(

+ "--lcm_model_id",

+ type=str,

+ help="Model ID or path,Default stabilityai/sd-turbo",

+ default="stabilityai/sd-turbo",

+)

+parser.add_argument(

+ "--openvino_lcm_model_id",

+ type=str,

+ help="OpenVINO Model ID or path,Default rupeshs/sd-turbo-openvino",

+ default="rupeshs/sd-turbo-openvino",

+)

+parser.add_argument(

+ "--prompt",

+ type=str,

+ help="Describe the image you want to generate",

+ default="",

+)

+parser.add_argument(

+ "--negative_prompt",

+ type=str,

+ help="Describe what you want to exclude from the generation",

+ default="",

+)

+parser.add_argument(

+ "--image_height",

+ type=int,

+ help="Height of the image",

+ default=512,

+)

+parser.add_argument(

+ "--image_width",

+ type=int,

+ help="Width of the image",

+ default=512,

+)

+parser.add_argument(

+ "--inference_steps",

+ type=int,

+ help="Number of steps,default : 1",

+ default=1,

+)

+parser.add_argument(

+ "--guidance_scale",

+ type=float,

+ help="Guidance scale,default : 1.0",

+ default=1.0,

+)

+

+parser.add_argument(

+ "--number_of_images",

+ type=int,

+ help="Number of images to generate ,default : 1",

+ default=1,

+)

+parser.add_argument(

+ "--seed",

+ type=int,

+ help="Seed,default : -1 (disabled) ",

+ default=-1,

+)

+parser.add_argument(

+ "--use_openvino",

+ action="store_true",

+ help="Use OpenVINO model",

+)

+

+parser.add_argument(

+ "--use_offline_model",

+ action="store_true",

+ help="Use offline model",

+)

+parser.add_argument(

+ "--clip_skip",

+ type=int,

+ help="CLIP Skip (1-12), default : 1 (disabled) ",

+ default=1,

+)

+parser.add_argument(

+ "--token_merging",

+ type=float,

+ help="Token merging scale, 0.0 - 1.0, default : 0.0",

+ default=0.0,

+)

+

+parser.add_argument(

+ "--use_safety_checker",

+ action="store_true",

+ help="Use safety checker",

+)

+parser.add_argument(

+ "--use_lcm_lora",

+ action="store_true",

+ help="Use LCM-LoRA",

+)

+parser.add_argument(

+ "--base_model_id",

+ type=str,

+ help="LCM LoRA base model ID,Default Lykon/dreamshaper-8",

+ default="Lykon/dreamshaper-8",

+)

+parser.add_argument(

+ "--lcm_lora_id",

+ type=str,

+ help="LCM LoRA model ID,Default latent-consistency/lcm-lora-sdv1-5",

+ default="latent-consistency/lcm-lora-sdv1-5",

+)

+parser.add_argument(

+ "-i",

+ "--interactive",

+ action="store_true",

+ help="Interactive CLI mode",

+)

+parser.add_argument(

+ "-t",

+ "--use_tiny_auto_encoder",

+ action="store_true",

+ help="Use tiny auto encoder for SD (TAESD)",

+)

+parser.add_argument(

+ "-f",

+ "--file",

+ type=str,

+ help="Input image for img2img mode",

+ default="",

+)

+parser.add_argument(

+ "--img2img",

+ action="store_true",

+ help="img2img mode; requires input file via -f argument",

+)

+parser.add_argument(

+ "--batch_count",

+ type=int,

+ help="Number of sequential generations",

+ default=1,

+)

+parser.add_argument(

+ "--strength",

+ type=float,

+ help="Denoising strength for img2img and Image variations",

+ default=0.3,

+)

+parser.add_argument(

+ "--sdupscale",

+ action="store_true",

+ help="Tiled SD upscale,works only for the resolution 512x512,(2x upscale)",

+)

+parser.add_argument(

+ "--upscale",

+ action="store_true",

+ help="EDSR SD upscale ",

+)

+parser.add_argument(

+ "--custom_settings",

+ type=str,

+ help="JSON file containing custom generation settings",

+ default=None,

+)

+parser.add_argument(

+ "--usejpeg",

+ action="store_true",

+ help="Images will be saved as JPEG format",

+)

+parser.add_argument(

+ "--noimagesave",

+ action="store_true",

+ help="Disable image saving",

+)

+parser.add_argument(

+ "--lora",

+ type=str,

+ help="LoRA model full path e.g D:\lora_models\CuteCartoon15V-LiberteRedmodModel-Cartoon-CuteCartoonAF.safetensors",

+ default=None,

+)

+parser.add_argument(

+ "--lora_weight",

+ type=float,

+ help="LoRA adapter weight [0 to 1.0]",

+ default=0.5,

+)

+parser.add_argument(

+ "--port",

+ type=int,

+ help="Web server port",

+ default=8000,

+)

+

+args = parser.parse_args()

+

+if args.version:

+ print(APP_VERSION)

+ exit()

+

+# parser.print_help()

+print("FastSD CPU - ", APP_VERSION)

+show_system_info()

+print(f"Using device : {constants.DEVICE}")

+

+if args.webui:

+ app_settings = get_settings()

+else:

+ app_settings = get_settings()

+

+print(f"Found {len(app_settings.lcm_models)} LCM models in config/lcm-models.txt")

+print(

+ f"Found {len(app_settings.stable_diffsuion_models)} stable diffusion models in config/stable-diffusion-models.txt"

+)

+print(

+ f"Found {len(app_settings.lcm_lora_models)} LCM-LoRA models in config/lcm-lora-models.txt"

+)

+print(

+ f"Found {len(app_settings.openvino_lcm_models)} OpenVINO LCM models in config/openvino-lcm-models.txt"

+)

+

+if args.noimagesave:

+ app_settings.settings.generated_images.save_image = False

+else:

+ app_settings.settings.generated_images.save_image = True

+

+if not args.realtime:

+ # To minimize realtime mode dependencies

+ from backend.upscale.upscaler import upscale_image

+ from frontend.cli_interactive import interactive_mode

+

+if args.gui:

+ from frontend.gui.ui import start_gui

+

+ print("Starting desktop GUI mode(Qt)")

+ start_gui(

+ [],

+ app_settings,

+ )

+elif args.webui:

+ from frontend.webui.ui import start_webui

+

+ print("Starting web UI mode")

+ start_webui(

+ args.share,

+ )

+elif args.realtime:

+ from frontend.webui.realtime_ui import start_realtime_text_to_image

+

+ print("Starting realtime text to image(EXPERIMENTAL)")

+ start_realtime_text_to_image(args.share)

+elif args.api:

+ from backend.api.web import start_web_server

+

+ start_web_server(args.port)

+

+else:

+ context = get_context(InterfaceType.CLI)

+ config = app_settings.settings

+

+ if args.use_openvino:

+ config.lcm_diffusion_setting.openvino_lcm_model_id = args.openvino_lcm_model_id

+ else:

+ config.lcm_diffusion_setting.lcm_model_id = args.lcm_model_id

+

+ config.lcm_diffusion_setting.prompt = args.prompt

+ config.lcm_diffusion_setting.negative_prompt = args.negative_prompt

+ config.lcm_diffusion_setting.image_height = args.image_height

+ config.lcm_diffusion_setting.image_width = args.image_width

+ config.lcm_diffusion_setting.guidance_scale = args.guidance_scale

+ config.lcm_diffusion_setting.number_of_images = args.number_of_images

+ config.lcm_diffusion_setting.inference_steps = args.inference_steps

+ config.lcm_diffusion_setting.strength = args.strength

+ config.lcm_diffusion_setting.seed = args.seed

+ config.lcm_diffusion_setting.use_openvino = args.use_openvino

+ config.lcm_diffusion_setting.use_tiny_auto_encoder = args.use_tiny_auto_encoder

+ config.lcm_diffusion_setting.use_lcm_lora = args.use_lcm_lora

+ config.lcm_diffusion_setting.lcm_lora.base_model_id = args.base_model_id

+ config.lcm_diffusion_setting.lcm_lora.lcm_lora_id = args.lcm_lora_id

+ config.lcm_diffusion_setting.diffusion_task = DiffusionTask.text_to_image.value

+ config.lcm_diffusion_setting.lora.enabled = False

+ config.lcm_diffusion_setting.lora.path = args.lora

+ config.lcm_diffusion_setting.lora.weight = args.lora_weight

+ config.lcm_diffusion_setting.lora.fuse = True

+ if config.lcm_diffusion_setting.lora.path:

+ config.lcm_diffusion_setting.lora.enabled = True

+ if args.usejpeg:

+ config.generated_images.format = ImageFormat.JPEG.value.upper()

+ if args.seed > -1:

+ config.lcm_diffusion_setting.use_seed = True

+ else:

+ config.lcm_diffusion_setting.use_seed = False

+ config.lcm_diffusion_setting.use_offline_model = args.use_offline_model

+ config.lcm_diffusion_setting.clip_skip = args.clip_skip

+ config.lcm_diffusion_setting.token_merging = args.token_merging

+ config.lcm_diffusion_setting.use_safety_checker = args.use_safety_checker

+

+ # Read custom settings from JSON file

+ custom_settings = {}

+ if args.custom_settings:

+ with open(args.custom_settings) as f:

+ custom_settings = json.load(f)

+

+ # Basic ControlNet settings; if ControlNet is enabled, an image is