Upload 30 files

- docker/.env.example +938 -0

- docker/README.md +99 -0

- docker/certbot/README.md +76 -0

- docker/certbot/docker-entrypoint.sh +30 -0

- docker/certbot/update-cert.template.txt +19 -0

- docker/couchbase-server/Dockerfile +4 -0

- docker/couchbase-server/init-cbserver.sh +44 -0

- docker/docker-compose-template.yaml +576 -0

- docker/docker-compose.middleware.yaml +123 -0

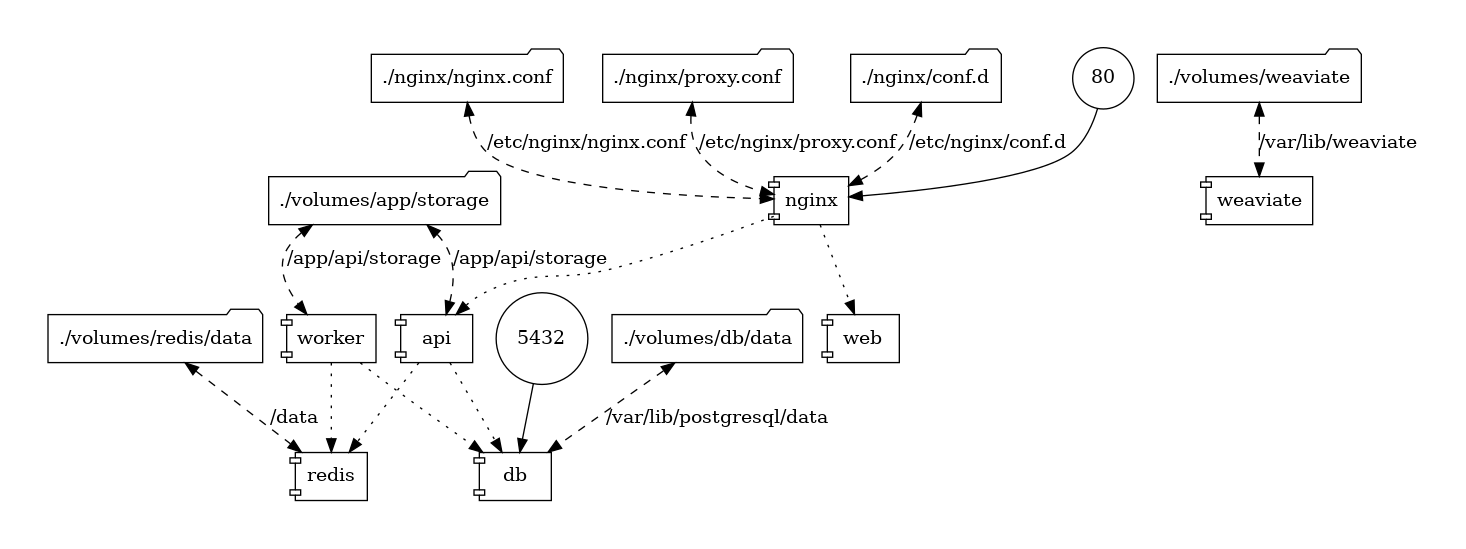

- docker/docker-compose.png +0 -0

- docker/docker-compose.yaml +971 -0

- docker/elasticsearch/docker-entrypoint.sh +25 -0

- docker/generate_docker_compose +112 -0

- docker/middleware.env.example +89 -0

- docker/nginx/conf.d/default.conf.template +37 -0

- docker/nginx/docker-entrypoint.sh +39 -0

- docker/nginx/https.conf.template +9 -0

- docker/nginx/nginx.conf.template +34 -0

- docker/nginx/proxy.conf.template +11 -0

- docker/nginx/ssl/.gitkeep +0 -0

- docker/ssrf_proxy/docker-entrypoint.sh +42 -0

- docker/ssrf_proxy/squid.conf.template +51 -0

- docker/startupscripts/init.sh +13 -0

- docker/startupscripts/init_user.script +10 -0

- docker/volumes/myscale/config/users.d/custom_users_config.xml +17 -0

- docker/volumes/oceanbase/init.d/vec_memory.sql +1 -0

- docker/volumes/opensearch/opensearch_dashboards.yml +222 -0

- docker/volumes/sandbox/conf/config.yaml +14 -0

- docker/volumes/sandbox/conf/config.yaml.example +35 -0

- docker/volumes/sandbox/dependencies/python-requirements.txt +0 -0

docker/.env.example

ADDED

@@ -0,0 +1,938 @@


# ------------------------------
# Environment Variables for API service & worker
# ------------------------------

# ------------------------------
# Common Variables
# ------------------------------

# The backend URL of the console API,
# used to construct the authorization callback URL.
# If empty, it is the same domain.
# Example: https://api.console.dify.ai
CONSOLE_API_URL=

# The front-end URL of the console web,
# used to construct some front-end addresses and for CORS configuration.
# If empty, it is the same domain.
# Example: https://console.dify.ai
CONSOLE_WEB_URL=

# Service API URL,
# used to display the Service API base URL to the front-end.
# If empty, it is the same domain.
# Example: https://api.dify.ai
SERVICE_API_URL=

# WebApp API backend URL,
# used to declare the back-end URL for the front-end API.
# If empty, it is the same domain.
# Example: https://api.app.dify.ai
APP_API_URL=

# WebApp URL,
# used to display the WebApp API base URL to the front-end.
# If empty, it is the same domain.
# Example: https://app.dify.ai
APP_WEB_URL=

# File preview or download URL prefix,
# used to display file preview or download URLs to the front-end or as multi-modal inputs.
# The URL is signed and has an expiration time.
FILES_URL=

# ------------------------------
# Server Configuration
# ------------------------------

# The log level for the application.
# Supported values are `DEBUG`, `INFO`, `WARNING`, `ERROR`, `CRITICAL`
LOG_LEVEL=INFO
# Log file path
LOG_FILE=/app/logs/server.log
# Maximum log file size, in MB
LOG_FILE_MAX_SIZE=20
# Maximum number of log file backups
LOG_FILE_BACKUP_COUNT=5
# Log date format
LOG_DATEFORMAT=%Y-%m-%d %H:%M:%S
# Log timezone
LOG_TZ=UTC

# Debug mode, default is false.
# It is recommended to turn on this configuration for local development
# to prevent some problems caused by monkey patching.
DEBUG=false

# Flask debug mode. When turned on, it outputs trace information at the interface,
# which is convenient for debugging.
FLASK_DEBUG=false

# A secret key used for securely signing the session cookie
# and encrypting sensitive information in the database.
# You can generate a strong key using `openssl rand -base64 42`.
SECRET_KEY=sk-9f73s3ljTXVcMT3Blb3ljTqtsKiGHXVcMT3BlbkFJLK7U

# Password for admin user initialization.
# If left unset, admin user will not be prompted for a password
# when creating the initial admin account.
# The length of the password cannot exceed 30 characters.
INIT_PASSWORD=
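
The `SECRET_KEY` comment suggests `openssl rand -base64 42`. Where `openssl` is not at hand, roughly the same result can be produced from Python's standard library. This is a minimal sketch: the helper name `generate_secret_key` is hypothetical and not part of Dify.

```python
import base64
import secrets


def generate_secret_key(num_bytes: int = 42) -> str:
    """Return num_bytes of cryptographically secure randomness, base64-encoded.

    Mirrors what `openssl rand -base64 42` emits: 42 random bytes
    encode to a 56-character base64 string without padding.
    """
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


if __name__ == "__main__":
    # Paste the printed line into the .env file in place of the sample key.
    print(f"SECRET_KEY={generate_secret_key()}")
```

The sample value shipped in the file is public, so replacing it before any real deployment is the safer choice.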

# Deployment environment.
# Supported values are `PRODUCTION`, `TESTING`. Default is `PRODUCTION`.
# In the testing environment, a distinct color label appears on the front-end page,
# indicating that this environment is a testing environment.
DEPLOY_ENV=PRODUCTION

# Whether to enable the version check policy.
# If set to empty, https://updates.dify.ai will be called for version check.
CHECK_UPDATE_URL=https://updates.dify.ai

# Used to change the OpenAI base address, default is https://api.openai.com/v1.
# Replace it with a domestic mirror address when OpenAI cannot be accessed in China,
# or with the address of a local model that provides an OpenAI-compatible API.
OPENAI_API_BASE=https://api.openai.com/v1

# When enabled, migrations will be executed prior to application startup
# and the application will start after the migrations have completed.
MIGRATION_ENABLED=true

# FILES_ACCESS_TIMEOUT specifies the time interval, in seconds, during which a file can be accessed.
# The default value is 300 seconds.
FILES_ACCESS_TIMEOUT=300

# Access token expiration time in minutes
ACCESS_TOKEN_EXPIRE_MINUTES=60

# Refresh token expiration time in days
REFRESH_TOKEN_EXPIRE_DAYS=30

# The maximum number of active requests for the application; must be a non-negative integer, where 0 means unlimited.
APP_MAX_ACTIVE_REQUESTS=0
APP_MAX_EXECUTION_TIME=1200

# ------------------------------
# Container Startup Related Configuration
# Only effective when starting with docker image or docker-compose.
# ------------------------------

# API service binding address, default: 0.0.0.0, i.e., all addresses can be accessed.
DIFY_BIND_ADDRESS=0.0.0.0

# API service binding port number, default 5001.
DIFY_PORT=5001

# The number of API server workers.
# Formula: number of CPU cores x 2 + 1 for sync, 1 for gevent
# Reference: https://docs.gunicorn.org/en/stable/design.html#how-many-workers
SERVER_WORKER_AMOUNT=1
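
The worker-count formula in the `SERVER_WORKER_AMOUNT` comment can be worked through in a few lines. This is a sketch only: `recommended_workers` is a hypothetical helper, and the `"sync"` and `"gevent"` strings simply mirror the two cases named in the comment.

```python
import os
from typing import Optional


def recommended_workers(worker_class: str, cpu_cores: Optional[int] = None) -> int:
    """Apply the formula from the comment above.

    gevent: a single worker, since concurrency comes from greenlets.
    anything else (e.g. sync): CPU cores x 2 + 1.
    """
    if worker_class == "gevent":
        return 1
    # Fall back to the local core count when none is supplied.
    cores = cpu_cores if cpu_cores is not None else (os.cpu_count() or 1)
    return cores * 2 + 1


if __name__ == "__main__":
    for cls in ("sync", "gevent"):
        print(f"{cls}: SERVER_WORKER_AMOUNT={recommended_workers(cls, cpu_cores=4)}")
```

With 4 cores the sync case gives 4 x 2 + 1 = 9 workers, while gevent stays at 1, which matches the default shipped in this file.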

# Defaults to gevent. If using Windows, it can be switched to sync or solo.
SERVER_WORKER_CLASS=gevent

# Number of worker connections. The default is 10.
SERVER_WORKER_CONNECTIONS=10

# Similar to SERVER_WORKER_CLASS.
# If using Windows, it can be switched to sync or solo.
CELERY_WORKER_CLASS=

# Request handling timeout. The default is 200;
# setting it to 360 is recommended to support longer SSE connections.
GUNICORN_TIMEOUT=360

# The number of Celery workers. The default is 1, and can be set as needed.
CELERY_WORKER_AMOUNT=

# Flag indicating whether to enable autoscaling of Celery workers.
#
# Autoscaling is useful when tasks are CPU intensive and can be dynamically
# allocated and deallocated based on the workload.
#
# When autoscaling is enabled, the maximum and minimum number of workers can
# be specified. The autoscaling algorithm will dynamically adjust the number
# of workers within the specified range.
#
# Default is false (i.e., autoscaling is disabled).
#
# Example:
# CELERY_AUTO_SCALE=true
CELERY_AUTO_SCALE=false

# The maximum number of Celery workers that can be autoscaled.
# This is optional and only used when autoscaling is enabled.
# Default is not set.
CELERY_MAX_WORKERS=

# The minimum number of Celery workers that can be autoscaled.
# This is optional and only used when autoscaling is enabled.
# Default is not set.
CELERY_MIN_WORKERS=

# API Tool configuration
API_TOOL_DEFAULT_CONNECT_TIMEOUT=10
API_TOOL_DEFAULT_READ_TIMEOUT=60
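
The `CELERY_AUTO_SCALE`, `CELERY_MAX_WORKERS`, `CELERY_MIN_WORKERS` and `CELERY_WORKER_AMOUNT` settings above map naturally onto two Celery worker options, `--autoscale=MAX,MIN` and `-c`. The sketch below shows one possible translation. `celery_scaling_args` is a hypothetical helper, and the fallbacks for unset values (CPU count for the maximum, 1 for the minimum) are assumptions, not something this file states.

```python
import os
from typing import List, Mapping


def celery_scaling_args(env: Mapping[str, str]) -> List[str]:
    """Translate the CELERY_* settings into Celery worker CLI options."""
    if env.get("CELERY_AUTO_SCALE", "false").lower() == "true":
        # Assumed fallbacks when the optional bounds are left empty.
        max_workers = env.get("CELERY_MAX_WORKERS") or str(os.cpu_count() or 1)
        min_workers = env.get("CELERY_MIN_WORKERS") or "1"
        # Celery expects the maximum first, then the minimum.
        return [f"--autoscale={max_workers},{min_workers}"]
    # Fixed concurrency; the comment above gives 1 as the default.
    return ["-c", env.get("CELERY_WORKER_AMOUNT") or "1"]


if __name__ == "__main__":
    print(celery_scaling_args({"CELERY_AUTO_SCALE": "true",
                               "CELERY_MAX_WORKERS": "8",
                               "CELERY_MIN_WORKERS": "2"}))
    print(celery_scaling_args({"CELERY_AUTO_SCALE": "false",
                               "CELERY_WORKER_AMOUNT": ""}))
```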

# ------------------------------
# Database Configuration
# The database uses PostgreSQL. Please use the public schema.
# It is consistent with the configuration in the 'db' service below.
# ------------------------------

DB_USERNAME=postgres
DB_PASSWORD=difyai123456
DB_HOST=db
DB_PORT=5432
DB_DATABASE=dify
# The size of the database connection pool.
# The default is 30 connections, which can be appropriately increased.
SQLALCHEMY_POOL_SIZE=30
# Database connection pool recycling time, the default is 3600 seconds.
SQLALCHEMY_POOL_RECYCLE=3600
# Whether to print SQL, default is false.
SQLALCHEMY_ECHO=false

# Maximum number of connections to the database
# Default is 100
#
# Reference: https://www.postgresql.org/docs/current/runtime-config-connection.html#GUC-MAX-CONNECTIONS
POSTGRES_MAX_CONNECTIONS=100

# Sets the amount of shared memory used for postgres's shared buffers.
# Default is 128MB
# Recommended value: 25% of available memory
# Reference: https://www.postgresql.org/docs/current/runtime-config-resource.html#GUC-SHARED-BUFFERS
POSTGRES_SHARED_BUFFERS=128MB

# Sets the amount of memory used by each database worker for working space.
# Default is 4MB
#
# Reference: https://www.postgresql.org/docs/current/runtime-config-resource.html#GUC-WORK-MEM
POSTGRES_WORK_MEM=4MB

# Sets the amount of memory reserved for maintenance activities.
# Default is 64MB
#
# Reference: https://www.postgresql.org/docs/current/runtime-config-resource.html#GUC-MAINTENANCE-WORK-MEM
POSTGRES_MAINTENANCE_WORK_MEM=64MB

# Sets the planner's assumption about the effective cache size.
# Default is 4096MB
#
# Reference: https://www.postgresql.org/docs/current/runtime-config-query.html#GUC-EFFECTIVE-CACHE-SIZE
POSTGRES_EFFECTIVE_CACHE_SIZE=4096MB
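
Two of the database settings above lend themselves to quick arithmetic: the "25% of available memory" recommendation for `POSTGRES_SHARED_BUFFERS`, and whether the connection pools fit under `POSTGRES_MAX_CONNECTIONS`. The sketch below assumes each API or worker process holds its own pool of `SQLALCHEMY_POOL_SIZE` connections and ignores pool overflow. That assumption and both helper names are illustrative, not taken from this file.

```python
def recommended_shared_buffers_mb(total_memory_mb: int) -> int:
    """25% of the memory available to PostgreSQL, in MB."""
    return total_memory_mb // 4


def pools_fit(pool_size: int, process_count: int, max_connections: int) -> bool:
    """True if every process can fill its pool without exceeding the server limit.

    Assumes one pool per process and no overflow connections.
    """
    return pool_size * process_count <= max_connections


if __name__ == "__main__":
    # A host that dedicates 8 GB to PostgreSQL.
    print(f"POSTGRES_SHARED_BUFFERS={recommended_shared_buffers_mb(8192)}MB")
    # Defaults from this file: pool of 30, limit of 100.
    print(pools_fit(pool_size=30, process_count=1, max_connections=100))
    print(pools_fit(pool_size=30, process_count=4, max_connections=100))
```

Under these assumptions the defaults leave room for three full pools. A fourth process would call for a higher `POSTGRES_MAX_CONNECTIONS` or a smaller pool.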

# ------------------------------
# Redis Configuration
# This Redis configuration is used for caching and for pub/sub during conversation.
# ------------------------------

REDIS_HOST=redis
REDIS_PORT=6379
REDIS_USERNAME=
REDIS_PASSWORD=difyai123456
REDIS_USE_SSL=false
REDIS_DB=0

# Whether to use Redis Sentinel mode.
# If set to true, the application will automatically discover and connect to the master node through Sentinel.
REDIS_USE_SENTINEL=false

# List of Redis Sentinel nodes. If Sentinel mode is enabled, provide at least one Sentinel IP and port.
# Format: `<sentinel1_ip>:<sentinel1_port>,<sentinel2_ip>:<sentinel2_port>,<sentinel3_ip>:<sentinel3_port>`
REDIS_SENTINELS=
REDIS_SENTINEL_SERVICE_NAME=
REDIS_SENTINEL_USERNAME=
REDIS_SENTINEL_PASSWORD=
REDIS_SENTINEL_SOCKET_TIMEOUT=0.1

# List of Redis Cluster nodes. If Cluster mode is enabled, provide at least one Cluster IP and port.
# Format: `<Cluster1_ip>:<Cluster1_port>,<Cluster2_ip>:<Cluster2_port>,<Cluster3_ip>:<Cluster3_port>`
REDIS_USE_CLUSTERS=false
REDIS_CLUSTERS=
REDIS_CLUSTERS_PASSWORD=
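
`REDIS_SENTINELS` and `REDIS_CLUSTERS` share the same `<ip>:<port>,<ip>:<port>` format. A small parser makes the expected shape concrete. This is a sketch: `parse_nodes` is a hypothetical helper, and how Dify itself reads these values is not shown in this file.

```python
from typing import List, Tuple


def parse_nodes(value: str) -> List[Tuple[str, int]]:
    """Split a comma-separated `<host>:<port>` list into (host, port) pairs."""
    nodes: List[Tuple[str, int]] = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue  # tolerate empty values and trailing commas
        # Split on the last colon so the port is always the final field.
        host, _, port = item.rpartition(":")
        if not host or not port.isdigit():
            raise ValueError(f"expected <host>:<port>, got {item!r}")
        nodes.append((host, int(port)))
    return nodes


if __name__ == "__main__":
    print(parse_nodes("10.0.0.1:26379,10.0.0.2:26379,10.0.0.3:26379"))
```

An empty setting, as shipped in this file, yields an empty list. An entry without a port raises `ValueError` so that a typo surfaces at startup instead of at connection time.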

# ------------------------------
# Celery Configuration
# ------------------------------

# Use Redis as the broker, with Redis DB 1 for the Celery broker.
# Format as follows: `redis://<redis_username>:<redis_password>@<redis_host>:<redis_port>/<redis_database>`
# Example: redis://:difyai123456@redis:6379/1
# If using Redis Sentinel, format as follows: `sentinel://<sentinel_username>:<sentinel_password>@<sentinel_host>:<sentinel_port>/<redis_database>`
# Example: sentinel://localhost:26379/1;sentinel://localhost:26380/1;sentinel://localhost:26381/1
CELERY_BROKER_URL=redis://:difyai123456@redis:6379/1
BROKER_USE_SSL=false

# If you are using Redis Sentinel for high availability, configure the following settings.
CELERY_USE_SENTINEL=false
CELERY_SENTINEL_MASTER_NAME=
CELERY_SENTINEL_SOCKET_TIMEOUT=0.1
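
The broker URL format described above can be assembled from the individual Redis settings. The sketch below percent-encodes the credentials so that characters such as `@` or `/` in a password do not break the URL. `build_broker_url` is a hypothetical helper, not a Dify or Celery function.

```python
from urllib.parse import quote


def build_broker_url(username: str, password: str, host: str, port: int, db: int) -> str:
    """Build `redis://<username>:<password>@<host>:<port>/<db>`."""
    auth = ""
    if username or password:
        # safe="" forces every reserved character to be percent-encoded.
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"redis://{auth}{host}:{port}/{db}"


if __name__ == "__main__":
    # Reproduces the example value from the comment above.
    print(build_broker_url("", "difyai123456", "redis", 6379, 1))
```

With an empty username and the sample password, the result is the `CELERY_BROKER_URL` value shipped in this file.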

# ------------------------------
# CORS Configuration
# Used to set the front-end cross-domain access policy.
# ------------------------------

# Specifies the allowed origins for cross-origin requests to the Web API,
# e.g. https://dify.app or * for all origins.
WEB_API_CORS_ALLOW_ORIGINS=*

# Specifies the allowed origins for cross-origin requests to the console API,
# e.g. https://cloud.dify.ai or * for all origins.
CONSOLE_CORS_ALLOW_ORIGINS=*
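
The effect of the two `*_CORS_ALLOW_ORIGINS` settings can be illustrated with a small origin check. This sketch assumes that several origins are written as a comma-separated list. The file only shows a single origin or `*`, so the list syntax and the helper names are assumptions.

```python
from typing import List


def parse_allowed_origins(setting: str) -> List[str]:
    """Split the setting into individual origins (comma-separated is assumed)."""
    return [origin.strip() for origin in setting.split(",") if origin.strip()]


def is_origin_allowed(origin: str, setting: str) -> bool:
    """True if the request origin matches the setting, or the setting allows all."""
    allowed = parse_allowed_origins(setting)
    return "*" in allowed or origin in allowed


if __name__ == "__main__":
    print(is_origin_allowed("https://example.com", "*"))
    print(is_origin_allowed("https://example.com", "https://dify.app"))
```

The default `*` accepts every origin. Narrowing it to the actual front-end origin restricts which sites may call the API from a browser.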

# ------------------------------
# File Storage Configuration
# ------------------------------

# The type of storage to use for storing user files.
STORAGE_TYPE=opendal

# Apache OpenDAL Configuration
# OpenDAL configuration variables use the following format: OPENDAL_<SCHEME_NAME>_<CONFIG_NAME>.
# You can find all the service configurations (CONFIG_NAME) in the repository at: https://github.com/apache/opendal/tree/main/core/src/services.
# Dify will scan configurations starting with OPENDAL_<SCHEME_NAME> and automatically apply them.
# The scheme name for the OpenDAL storage.
OPENDAL_SCHEME=fs
# Configurations for OpenDAL Local File System.
OPENDAL_FS_ROOT=storage
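
The `OPENDAL_<SCHEME_NAME>_<CONFIG_NAME>` convention amounts to a prefix scan over the environment. The sketch below shows the idea. `collect_opendal_config` is a hypothetical helper, and lower-casing the config names is an assumption rather than documented Dify behaviour.

```python
from typing import Dict, Mapping


def collect_opendal_config(env: Mapping[str, str], scheme: str) -> Dict[str, str]:
    """Gather every OPENDAL_<SCHEME>_<CONFIG> variable for one scheme."""
    prefix = f"OPENDAL_{scheme.upper()}_"
    return {
        key[len(prefix):].lower(): value  # OPENDAL_FS_ROOT -> "root"
        for key, value in env.items()
        if key.startswith(prefix)
    }


if __name__ == "__main__":
    sample_env = {
        "OPENDAL_SCHEME": "fs",
        "OPENDAL_FS_ROOT": "storage",
        "S3_REGION": "us-east-1",
    }
    scheme = sample_env["OPENDAL_SCHEME"]
    print(scheme, collect_opendal_config(sample_env, scheme))
```

`OPENDAL_SCHEME` itself is not picked up, because it lacks the scheme prefix. Only the settings for the selected backend are collected.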

# S3 Configuration
#
S3_ENDPOINT=
S3_REGION=us-east-1
S3_BUCKET_NAME=difyai
S3_ACCESS_KEY=
S3_SECRET_KEY=
# Whether to use AWS managed IAM roles for authenticating with the S3 service.
# If set to false, the access key and secret key must be provided.
S3_USE_AWS_MANAGED_IAM=false

# Azure Blob Configuration
#
AZURE_BLOB_ACCOUNT_NAME=difyai
AZURE_BLOB_ACCOUNT_KEY=difyai
AZURE_BLOB_CONTAINER_NAME=difyai-container
AZURE_BLOB_ACCOUNT_URL=https://<your_account_name>.blob.core.windows.net

# Google Storage Configuration
#
GOOGLE_STORAGE_BUCKET_NAME=your-bucket-name
GOOGLE_STORAGE_SERVICE_ACCOUNT_JSON_BASE64=

# Alibaba Cloud OSS Configuration
#
ALIYUN_OSS_BUCKET_NAME=your-bucket-name
ALIYUN_OSS_ACCESS_KEY=your-access-key
ALIYUN_OSS_SECRET_KEY=your-secret-key
ALIYUN_OSS_ENDPOINT=https://oss-ap-southeast-1-internal.aliyuncs.com
ALIYUN_OSS_REGION=ap-southeast-1
ALIYUN_OSS_AUTH_VERSION=v4
# Don't start with '/'. OSS doesn't support leading slash in object names.
ALIYUN_OSS_PATH=your-path

# Tencent COS Configuration
#
TENCENT_COS_BUCKET_NAME=your-bucket-name
TENCENT_COS_SECRET_KEY=your-secret-key
TENCENT_COS_SECRET_ID=your-secret-id
TENCENT_COS_REGION=your-region
TENCENT_COS_SCHEME=your-scheme

# Oracle Storage Configuration
#
OCI_ENDPOINT=https://objectstorage.us-ashburn-1.oraclecloud.com
OCI_BUCKET_NAME=your-bucket-name
OCI_ACCESS_KEY=your-access-key
OCI_SECRET_KEY=your-secret-key
OCI_REGION=us-ashburn-1

# Huawei OBS Configuration
#
HUAWEI_OBS_BUCKET_NAME=your-bucket-name
HUAWEI_OBS_SECRET_KEY=your-secret-key
HUAWEI_OBS_ACCESS_KEY=your-access-key
HUAWEI_OBS_SERVER=your-server-url

# Volcengine TOS Configuration
#
VOLCENGINE_TOS_BUCKET_NAME=your-bucket-name
VOLCENGINE_TOS_SECRET_KEY=your-secret-key
VOLCENGINE_TOS_ACCESS_KEY=your-access-key
VOLCENGINE_TOS_ENDPOINT=your-server-url
VOLCENGINE_TOS_REGION=your-region

# Baidu OBS Storage Configuration
#
BAIDU_OBS_BUCKET_NAME=your-bucket-name
BAIDU_OBS_SECRET_KEY=your-secret-key
BAIDU_OBS_ACCESS_KEY=your-access-key
BAIDU_OBS_ENDPOINT=your-server-url

# Supabase Storage Configuration
#
SUPABASE_BUCKET_NAME=your-bucket-name
SUPABASE_API_KEY=your-access-key
SUPABASE_URL=your-server-url
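
As its name indicates, `GOOGLE_STORAGE_SERVICE_ACCOUNT_JSON_BASE64` expects the service-account JSON as a single base64 string. A minimal sketch of producing that value follows. `encode_service_account` is a hypothetical helper, and the JSON in the usage example is a made-up placeholder, not a real key.

```python
import base64
import json


def encode_service_account(json_text: str) -> str:
    """Validate the JSON text, then return it base64-encoded on one line."""
    json.loads(json_text)  # raises ValueError if the text is not valid JSON
    return base64.b64encode(json_text.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    # Placeholder content; a real key file has more fields.
    placeholder = '{"type": "service_account", "project_id": "example-project"}'
    print(f"GOOGLE_STORAGE_SERVICE_ACCOUNT_JSON_BASE64={encode_service_account(placeholder)}")
```

Validating before encoding catches a truncated or mis-pasted key file early, since a base64 string gives no visual hint that its content is broken.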

# ------------------------------
# Vector Database Configuration
# ------------------------------

# The type of vector store to use.
# Supported values are `weaviate`, `qdrant`, `milvus`, `myscale`, `relyt`, `pgvector`, `pgvecto-rs`, `chroma`, `opensearch`, `tidb_vector`, `oracle`, `tencent`, `elasticsearch`, `elasticsearch-ja`, `analyticdb`, `couchbase`, `vikingdb`, `oceanbase`.
VECTOR_STORE=weaviate
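
Because `VECTOR_STORE` selects which of the backend-specific blocks takes effect, a typo in it is easy to miss. The sketch below checks a value against the list of supported names given in the comment above. The helper name `validate_vector_store` is hypothetical.

```python
# Supported values, copied from the VECTOR_STORE comment above.
SUPPORTED_VECTOR_STORES = frozenset({
    "weaviate", "qdrant", "milvus", "myscale", "relyt", "pgvector",
    "pgvecto-rs", "chroma", "opensearch", "tidb_vector", "oracle",
    "tencent", "elasticsearch", "elasticsearch-ja", "analyticdb",
    "couchbase", "vikingdb", "oceanbase",
})


def validate_vector_store(value: str) -> str:
    """Return the value unchanged if supported, otherwise raise ValueError."""
    if value not in SUPPORTED_VECTOR_STORES:
        raise ValueError(
            f"unsupported VECTOR_STORE {value!r}; "
            f"expected one of {sorted(SUPPORTED_VECTOR_STORES)}"
        )
    return value


if __name__ == "__main__":
    print(validate_vector_store("weaviate"))
```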

| 389 |

+

# The Weaviate endpoint URL. Only available when VECTOR_STORE is `weaviate`.

|

| 390 |

+

WEAVIATE_ENDPOINT=http://weaviate:8080

|

| 391 |

+

WEAVIATE_API_KEY=WVF5YThaHlkYwhGUSmCRgsX3tD5ngdN8pkih

|

| 392 |

+

|

| 393 |

+

# The Qdrant endpoint URL. Only available when VECTOR_STORE is `qdrant`.

|

| 394 |

+

QDRANT_URL=http://qdrant:6333

|

| 395 |

+

QDRANT_API_KEY=difyai123456

|

| 396 |

+

QDRANT_CLIENT_TIMEOUT=20

|

| 397 |

+

QDRANT_GRPC_ENABLED=false

|

| 398 |

+

QDRANT_GRPC_PORT=6334

|

| 399 |

+

|

| 400 |

+

# Milvus configuration Only available when VECTOR_STORE is `milvus`.

|

| 401 |

+

# The milvus uri.

|

| 402 |

+

MILVUS_URI=http://127.0.0.1:19530

|

| 403 |

+

MILVUS_TOKEN=

|

| 404 |

+

MILVUS_USER=root

|

| 405 |

+

MILVUS_PASSWORD=Milvus

|

| 406 |

+

MILVUS_ENABLE_HYBRID_SEARCH=False

|

| 407 |

+

|

| 408 |

+

# MyScale configuration, only available when VECTOR_STORE is `myscale`

|

| 409 |

+

# For multi-language support, please set MYSCALE_FTS_PARAMS with referring to:

|

| 410 |

+

# https://myscale.com/docs/en/text-search/#understanding-fts-index-parameters

|

| 411 |

+

MYSCALE_HOST=myscale

|

| 412 |

+

MYSCALE_PORT=8123

|

| 413 |

+

MYSCALE_USER=default

|

| 414 |

+

MYSCALE_PASSWORD=

|

| 415 |

+

MYSCALE_DATABASE=dify

|

| 416 |

+

MYSCALE_FTS_PARAMS=

|

| 417 |

+

|

| 418 |

+

# Couchbase configurations, only available when VECTOR_STORE is `couchbase`

|

| 419 |

+

# The connection string must include hostname defined in the docker-compose file (couchbase-server in this case)

|

| 420 |

+

COUCHBASE_CONNECTION_STRING=couchbase://couchbase-server

|

| 421 |

+

COUCHBASE_USER=Administrator

|

| 422 |

+

COUCHBASE_PASSWORD=password

|

| 423 |

+

COUCHBASE_BUCKET_NAME=Embeddings

|

| 424 |

+

COUCHBASE_SCOPE_NAME=_default

|

| 425 |

+

|

| 426 |

+

# pgvector configurations, only available when VECTOR_STORE is `pgvector`

|

| 427 |

+

PGVECTOR_HOST=pgvector

|

| 428 |

+

PGVECTOR_PORT=5432

|

| 429 |

+

PGVECTOR_USER=postgres

|

| 430 |

+

PGVECTOR_PASSWORD=difyai123456

|

| 431 |

+

PGVECTOR_DATABASE=dify

|

| 432 |

+

PGVECTOR_MIN_CONNECTION=1

|

| 433 |

+

PGVECTOR_MAX_CONNECTION=5

|

| 434 |

+

|

| 435 |

+

# pgvecto-rs configurations, only available when VECTOR_STORE is `pgvecto-rs`

|

| 436 |

+

PGVECTO_RS_HOST=pgvecto-rs

|

| 437 |

+

PGVECTO_RS_PORT=5432

|

| 438 |

+

PGVECTO_RS_USER=postgres

|

| 439 |

+

PGVECTO_RS_PASSWORD=difyai123456

|

| 440 |

+

PGVECTO_RS_DATABASE=dify

|

| 441 |

+

|

| 442 |

+

# analyticdb configurations, only available when VECTOR_STORE is `analyticdb`

|

| 443 |

+

ANALYTICDB_KEY_ID=your-ak

|

| 444 |

+

ANALYTICDB_KEY_SECRET=your-sk

|

| 445 |

+

ANALYTICDB_REGION_ID=cn-hangzhou

|

| 446 |

+

ANALYTICDB_INSTANCE_ID=gp-ab123456

|

| 447 |

+

ANALYTICDB_ACCOUNT=testaccount

|

| 448 |

+

ANALYTICDB_PASSWORD=testpassword

|

| 449 |

+

ANALYTICDB_NAMESPACE=dify

|

| 450 |

+

ANALYTICDB_NAMESPACE_PASSWORD=difypassword

|

| 451 |

+

ANALYTICDB_HOST=gp-test.aliyuncs.com

|

| 452 |

+

ANALYTICDB_PORT=5432

|

| 453 |

+

ANALYTICDB_MIN_CONNECTION=1

|

| 454 |

+

ANALYTICDB_MAX_CONNECTION=5

|

| 455 |

+

|

| 456 |

+

# TiDB vector configurations, only available when VECTOR_STORE is `tidb`

|

| 457 |

+

TIDB_VECTOR_HOST=tidb

|

| 458 |

+

TIDB_VECTOR_PORT=4000

|

| 459 |

+

TIDB_VECTOR_USER=

|

| 460 |

+

TIDB_VECTOR_PASSWORD=

|

| 461 |

+

TIDB_VECTOR_DATABASE=dify

|

| 462 |

+

|

| 463 |

+

# TiDB on Qdrant configuration, only available when VECTOR_STORE is `tidb_on_qdrant`

|

| 464 |

+

TIDB_ON_QDRANT_URL=http://127.0.0.1

|

| 465 |

+

TIDB_ON_QDRANT_API_KEY=dify

|

| 466 |

+

TIDB_ON_QDRANT_CLIENT_TIMEOUT=20

|

| 467 |

+

TIDB_ON_QDRANT_GRPC_ENABLED=false

|

| 468 |

+

TIDB_ON_QDRANT_GRPC_PORT=6334

|

| 469 |

+

TIDB_PUBLIC_KEY=dify

|

| 470 |

+

TIDB_PRIVATE_KEY=dify

|

| 471 |

+

TIDB_API_URL=http://127.0.0.1

|

| 472 |

+

TIDB_IAM_API_URL=http://127.0.0.1

|

| 473 |

+

TIDB_REGION=regions/aws-us-east-1

|

| 474 |

+

TIDB_PROJECT_ID=dify

|

| 475 |

+

TIDB_SPEND_LIMIT=100

|

| 476 |

+

|

| 477 |

+

# Chroma configuration, only available when VECTOR_STORE is `chroma`

|

| 478 |

+

CHROMA_HOST=127.0.0.1

|

| 479 |

+

CHROMA_PORT=8000

|

| 480 |

+

CHROMA_TENANT=default_tenant

|

| 481 |

+

CHROMA_DATABASE=default_database

|

| 482 |

+

CHROMA_AUTH_PROVIDER=chromadb.auth.token_authn.TokenAuthClientProvider

|

| 483 |

+

CHROMA_AUTH_CREDENTIALS=

|

| 484 |

+

|

| 485 |

+

# Oracle configuration, only available when VECTOR_STORE is `oracle`

|

| 486 |

+

ORACLE_HOST=oracle

|

| 487 |

+

ORACLE_PORT=1521

|

| 488 |

+

ORACLE_USER=dify

|

| 489 |

+

ORACLE_PASSWORD=dify

|

| 490 |

+

ORACLE_DATABASE=FREEPDB1

|

| 491 |

+

|

| 492 |

+

# relyt configurations, only available when VECTOR_STORE is `relyt`

|

| 493 |

+

RELYT_HOST=db

|

| 494 |

+

RELYT_PORT=5432

|

| 495 |

+

RELYT_USER=postgres

|

| 496 |

+

RELYT_PASSWORD=difyai123456

|

| 497 |

+

RELYT_DATABASE=postgres

|

| 498 |

+

|

| 499 |

+

# OpenSearch configuration, only available when VECTOR_STORE is `opensearch`

|

| 500 |

+

OPENSEARCH_HOST=opensearch

|

| 501 |

+

OPENSEARCH_PORT=9200

|

| 502 |

+

OPENSEARCH_USER=admin

|

| 503 |

+

OPENSEARCH_PASSWORD=admin

|

| 504 |

+

OPENSEARCH_SECURE=true

|

| 505 |

+

|

| 506 |

+

# tencent vector configurations, only available when VECTOR_STORE is `tencent`

|

| 507 |

+

TENCENT_VECTOR_DB_URL=http://127.0.0.1

|

| 508 |

+

TENCENT_VECTOR_DB_API_KEY=dify

|

| 509 |

+

TENCENT_VECTOR_DB_TIMEOUT=30

|

| 510 |

+

TENCENT_VECTOR_DB_USERNAME=dify

|

| 511 |

+

TENCENT_VECTOR_DB_DATABASE=dify

|

| 512 |

+

TENCENT_VECTOR_DB_SHARD=1

|

| 513 |

+

TENCENT_VECTOR_DB_REPLICAS=2

|

| 514 |

+

|

| 515 |

+

# Elasticsearch configuration, only available when VECTOR_STORE is `elasticsearch`

|

| 516 |

+

ELASTICSEARCH_HOST=0.0.0.0

|

| 517 |

+

ELASTICSEARCH_PORT=9200

|

| 518 |

+

ELASTICSEARCH_USERNAME=elastic

|

| 519 |

+

ELASTICSEARCH_PASSWORD=elastic

|

| 520 |

+

KIBANA_PORT=5601

|

| 521 |

+

|

| 522 |

+

# baidu vector configurations, only available when VECTOR_STORE is `baidu`

|

| 523 |

+

BAIDU_VECTOR_DB_ENDPOINT=http://127.0.0.1:5287

|

| 524 |

+

BAIDU_VECTOR_DB_CONNECTION_TIMEOUT_MS=30000

|

| 525 |

+

BAIDU_VECTOR_DB_ACCOUNT=root

|

| 526 |

+

BAIDU_VECTOR_DB_API_KEY=dify

|

| 527 |

+

BAIDU_VECTOR_DB_DATABASE=dify

|

| 528 |

+

BAIDU_VECTOR_DB_SHARD=1

|

| 529 |

+

BAIDU_VECTOR_DB_REPLICAS=3

|

| 530 |

+

|

| 531 |

+

# VikingDB configurations, only available when VECTOR_STORE is `vikingdb`

|

| 532 |

+

VIKINGDB_ACCESS_KEY=your-ak

|

| 533 |

+

VIKINGDB_SECRET_KEY=your-sk

|

| 534 |

+

VIKINGDB_REGION=cn-shanghai

|

| 535 |

+

VIKINGDB_HOST=api-vikingdb.xxx.volces.com

|

| 536 |

+

VIKINGDB_SCHEMA=http

|

| 537 |

+

VIKINGDB_CONNECTION_TIMEOUT=30

|

| 538 |

+

VIKINGDB_SOCKET_TIMEOUT=30

|

| 539 |

+

|

| 540 |

+

# Lindorm configuration, only available when VECTOR_STORE is `lindorm`

|

| 541 |

+

LINDORM_URL=http://lindorm:30070

|

| 542 |

+

LINDORM_USERNAME=lindorm

|

| 543 |

+

LINDORM_PASSWORD=lindorm

|

| 544 |

+

|

| 545 |

+

# OceanBase Vector configuration, only available when VECTOR_STORE is `oceanbase`

|

| 546 |

+

OCEANBASE_VECTOR_HOST=oceanbase

|

| 547 |

+

OCEANBASE_VECTOR_PORT=2881

|

| 548 |

+

OCEANBASE_VECTOR_USER=root@test

|

| 549 |

+

OCEANBASE_VECTOR_PASSWORD=difyai123456

|

| 550 |

+

OCEANBASE_VECTOR_DATABASE=test

|

| 551 |

+

OCEANBASE_CLUSTER_NAME=difyai

|

| 552 |

+

OCEANBASE_MEMORY_LIMIT=6G

|

| 553 |

+

|

| 554 |

+

# Upstash Vector configuration, only available when VECTOR_STORE is `upstash`

|

| 555 |

+

UPSTASH_VECTOR_URL=https://xxx-vector.upstash.io

|

| 556 |

+

UPSTASH_VECTOR_TOKEN=dify

|

| 557 |

+

|

| 558 |

+

# ------------------------------

|

| 559 |

+

# Knowledge Configuration

|

| 560 |

+

# ------------------------------

|

| 561 |

+

|

| 562 |

+

# Upload file size limit, default 15M.

|

| 563 |

+

UPLOAD_FILE_SIZE_LIMIT=15

|

| 564 |

+

|

| 565 |

+

# The maximum number of files that can be uploaded at a time, default 5.

|

| 566 |

+

UPLOAD_FILE_BATCH_LIMIT=5

|

| 567 |

+

|

| 568 |

+

# ETL type; supported values: `dify`, `Unstructured`

|

| 569 |

+

# `dify`: Dify's proprietary file extraction scheme

|

| 570 |

+

# `Unstructured`: Unstructured.io file extraction scheme

|

| 571 |

+

ETL_TYPE=dify

|

| 572 |

+

|

| 573 |

+

# Unstructured API URL and API key; these must be configured when ETL_TYPE is `Unstructured`

|

| 574 |

+

# or when the document extractor node uses Unstructured to process pptx files.

|

| 575 |

+

# For example: http://unstructured:8000/general/v0/general

|

| 576 |

+

UNSTRUCTURED_API_URL=

|

| 577 |

+

UNSTRUCTURED_API_KEY=

|

| 578 |

+

SCARF_NO_ANALYTICS=true

|

| 579 |

+

|

| 580 |

+

# ------------------------------

|

| 581 |

+

# Model Configuration

|

| 582 |

+

# ------------------------------

|

| 583 |

+

|

| 584 |

+

# The maximum number of tokens allowed for prompt generation.

|

| 585 |

+

# This setting controls the upper limit of tokens that can be used by the LLM

|

| 586 |

+

# when generating a prompt in the prompt generation tool.

|

| 587 |

+

# Default: 512 tokens.

|

| 588 |

+

PROMPT_GENERATION_MAX_TOKENS=512

|

| 589 |

+

|

| 590 |

+

# The maximum number of tokens allowed for code generation.

|

| 591 |

+

# This setting controls the upper limit of tokens that can be used by the LLM

|

| 592 |

+

# when generating code in the code generation tool.

|

| 593 |

+

# Default: 1024 tokens.

|

| 594 |

+

CODE_GENERATION_MAX_TOKENS=1024

|

| 595 |

+

|

| 596 |

+

# ------------------------------

|

| 597 |

+

# Multi-modal Configuration

|

| 598 |

+

# ------------------------------

|

| 599 |

+

|

| 600 |

+

# The format used to send an image/video/audio/document as input to a multi-modal model,

|

| 601 |

+

# the default is `base64`; `url` is also supported.

|

| 602 |

+

# Call latency in url mode is lower than in base64 mode.

|

| 603 |

+

# It is generally recommended to use the more compatible base64 mode.

|

| 604 |

+

# If configured as url, you need to configure FILES_URL as an externally accessible address so that the multi-modal model can access the image/video/audio/document.

|

| 605 |

+

MULTIMODAL_SEND_FORMAT=base64

|

| 606 |

+

# Upload image file size limit, default 10M.

|

| 607 |

+

UPLOAD_IMAGE_FILE_SIZE_LIMIT=10

|

| 608 |

+

# Upload video file size limit, default 100M.

|

| 609 |

+

UPLOAD_VIDEO_FILE_SIZE_LIMIT=100

|

| 610 |

+

# Upload audio file size limit, default 50M.

|

| 611 |

+

UPLOAD_AUDIO_FILE_SIZE_LIMIT=50

|

| 612 |

+

|

| 613 |

+

# ------------------------------

|

| 614 |

+

# Sentry Configuration

|

| 615 |

+

# Used for application monitoring and error log tracking.

|

| 616 |

+

# ------------------------------

|

| 617 |

+

SENTRY_DSN=

|

| 618 |

+

|

| 619 |

+

# Sentry DSN address for the API service. Empty by default; when empty,

|

| 620 |

+

# no monitoring information is reported to Sentry.

|

| 621 |

+

# If not set, Sentry error reporting will be disabled.

|

| 622 |

+

API_SENTRY_DSN=

|

| 623 |

+

# Sampling rate for Sentry events reported by the API service; for example, 0.01 means 1%.

|

| 624 |

+

API_SENTRY_TRACES_SAMPLE_RATE=1.0

|

| 625 |

+

# Sampling rate for Sentry profiles reported by the API service; for example, 0.01 means 1%.

|

| 626 |

+

API_SENTRY_PROFILES_SAMPLE_RATE=1.0

|

| 627 |

+

|

| 628 |

+

# Sentry DSN address for the web service. Empty by default; when empty,

|

| 629 |

+

# no monitoring information is reported to Sentry.

|

| 630 |

+

# If not set, Sentry error reporting will be disabled.

|

| 631 |

+

WEB_SENTRY_DSN=

|

| 632 |

+

|

| 633 |

+

# ------------------------------

|

| 634 |

+

# Notion Integration Configuration

|

| 635 |

+

# Variables can be obtained by applying for Notion integration: https://www.notion.so/my-integrations

|

| 636 |

+

# ------------------------------

|

| 637 |

+

|

| 638 |

+

# Configure as "public" or "internal".

|

| 639 |

+

# Since Notion's OAuth redirect URL only supports HTTPS,

|

| 640 |

+

# if deploying locally, please use Notion's internal integration.

|

| 641 |

+

NOTION_INTEGRATION_TYPE=public

|

| 642 |

+

# Notion OAuth client secret (used for public integration type)

|

| 643 |

+

NOTION_CLIENT_SECRET=

|

| 644 |

+

# Notion OAuth client id (used for public integration type)

|

| 645 |

+

NOTION_CLIENT_ID=

|

| 646 |

+

# Notion internal integration secret.

|

| 647 |

+

# If the value of NOTION_INTEGRATION_TYPE is "internal",

|

| 648 |

+

# you need to configure this variable.

|

| 649 |

+

NOTION_INTERNAL_SECRET=

|

| 650 |

+

|

| 651 |

+

# ------------------------------

|

| 652 |

+

# Mail related configuration

|

| 653 |

+

# ------------------------------

|

| 654 |

+

|

| 655 |

+

# Mail type; supported values: resend, smtp

|

| 656 |

+

MAIL_TYPE=resend

|

| 657 |

+

|

| 658 |

+

# Default sender email address, used when none is specified

|

| 659 |

+

MAIL_DEFAULT_SEND_FROM=

|

| 660 |

+

|

| 661 |

+

# API URL and API key for the Resend email provider, used when MAIL_TYPE is `resend`.

|

| 662 |

+

RESEND_API_URL=https://api.resend.com

|

| 663 |

+

RESEND_API_KEY=your-resend-api-key

|

| 664 |

+

|

| 665 |

+

|

| 666 |

+

# SMTP server configuration, used when MAIL_TYPE is `smtp`

|

| 667 |

+

SMTP_SERVER=

|

| 668 |

+

SMTP_PORT=465

|

| 669 |

+

SMTP_USERNAME=

|

| 670 |

+

SMTP_PASSWORD=

|

| 671 |

+

SMTP_USE_TLS=true

|

| 672 |

+

SMTP_OPPORTUNISTIC_TLS=false

|

| 673 |

+

|

| 674 |

+

# ------------------------------

|

| 675 |

+

# Others Configuration

|

| 676 |

+

# ------------------------------

|

| 677 |

+

|

| 678 |

+

# Maximum length of segmentation tokens for indexing

|

| 679 |

+

INDEXING_MAX_SEGMENTATION_TOKENS_LENGTH=4000

|

| 680 |

+

|

| 681 |

+

# Validity period of member invitation links, in hours.

|

| 682 |

+

# Default: 72.

|

| 683 |

+

INVITE_EXPIRY_HOURS=72

|

| 684 |

+

|

| 685 |

+

# Validity period of the password reset token, in minutes.

|

| 686 |

+

RESET_PASSWORD_TOKEN_EXPIRY_MINUTES=5

|

| 687 |

+

|

| 688 |

+

# The sandbox service endpoint.

|

| 689 |

+

CODE_EXECUTION_ENDPOINT=http://sandbox:8194

|

| 690 |

+

CODE_EXECUTION_API_KEY=dify-sandbox

|

| 691 |

+

CODE_MAX_NUMBER=9223372036854775807

|

| 692 |

+

CODE_MIN_NUMBER=-9223372036854775808

|

| 693 |

+

CODE_MAX_DEPTH=5

|

| 694 |

+

CODE_MAX_PRECISION=20

|

| 695 |

+

CODE_MAX_STRING_LENGTH=80000

|

| 696 |

+

CODE_MAX_STRING_ARRAY_LENGTH=30

|

| 697 |

+

CODE_MAX_OBJECT_ARRAY_LENGTH=30

|

| 698 |

+

CODE_MAX_NUMBER_ARRAY_LENGTH=1000

|

| 699 |

+

CODE_EXECUTION_CONNECT_TIMEOUT=10

|

| 700 |

+

CODE_EXECUTION_READ_TIMEOUT=60

|

| 701 |

+

CODE_EXECUTION_WRITE_TIMEOUT=10

|

| 702 |

+

TEMPLATE_TRANSFORM_MAX_LENGTH=80000

|

| 703 |

+

|

| 704 |

+

# Workflow runtime configuration

|

| 705 |

+

WORKFLOW_MAX_EXECUTION_STEPS=500

|

| 706 |

+

WORKFLOW_MAX_EXECUTION_TIME=1200

|

| 707 |

+

WORKFLOW_CALL_MAX_DEPTH=5

|

| 708 |

+

MAX_VARIABLE_SIZE=204800

|

| 709 |

+

WORKFLOW_PARALLEL_DEPTH_LIMIT=3

|

| 710 |

+

WORKFLOW_FILE_UPLOAD_LIMIT=10

|

| 711 |

+

|

| 712 |

+

# HTTP request node in workflow configuration

|

| 713 |

+

HTTP_REQUEST_NODE_MAX_BINARY_SIZE=10485760

|

| 714 |

+

HTTP_REQUEST_NODE_MAX_TEXT_SIZE=1048576

|

| 715 |

+

|

| 716 |

+

# SSRF Proxy server HTTP URL

|

| 717 |

+

SSRF_PROXY_HTTP_URL=http://ssrf_proxy:3128

|

| 718 |

+

# SSRF Proxy server HTTPS URL

|

| 719 |

+

SSRF_PROXY_HTTPS_URL=http://ssrf_proxy:3128

|

| 720 |

+

|

| 721 |

+

# ------------------------------

|

| 722 |

+

# Environment Variables for web Service

|

| 723 |

+

# ------------------------------

|

| 724 |

+

|

| 725 |

+

# The timeout for text generation, in milliseconds

|

| 726 |

+

TEXT_GENERATION_TIMEOUT_MS=60000

|

| 727 |

+

|

| 728 |

+

# ------------------------------

|

| 729 |

+

# Environment Variables for db Service

|

| 730 |

+

# ------------------------------

|

| 731 |

+

|

| 732 |

+

PGUSER=${DB_USERNAME}

|

| 733 |

+

# The password for the default postgres user.

|

| 734 |

+

POSTGRES_PASSWORD=${DB_PASSWORD}

|

| 735 |

+

# The name of the default postgres database.

|

| 736 |

+

POSTGRES_DB=${DB_DATABASE}

|

| 737 |

+

# postgres data directory

|

| 738 |

+

PGDATA=/var/lib/postgresql/data/pgdata

|

| 739 |

+

|

| 740 |

+

# ------------------------------

|

| 741 |

+

# Environment Variables for sandbox Service

|

| 742 |

+

# ------------------------------

|

| 743 |

+

|

| 744 |

+

# The API key for the sandbox service

|

| 745 |

+

SANDBOX_API_KEY=dify-sandbox

|

| 746 |

+

# The mode in which the Gin framework runs

|

| 747 |

+

SANDBOX_GIN_MODE=release

|

| 748 |

+

# The timeout for the worker in seconds

|

| 749 |

+

SANDBOX_WORKER_TIMEOUT=15

|

| 750 |

+

# Enable network for the sandbox service

|

| 751 |

+

SANDBOX_ENABLE_NETWORK=true

|

| 752 |

+

# HTTP proxy URL for SSRF protection

|

| 753 |

+

SANDBOX_HTTP_PROXY=http://ssrf_proxy:3128

|

| 754 |