---

license: apache-2.0

---

# MatMul-Free LM


## Model Details

[[Paper](https://arxiv.org/abs/2406.02528)] [[Code](https://github.com/ridgerchu/matmulfreellm/tree/master)]

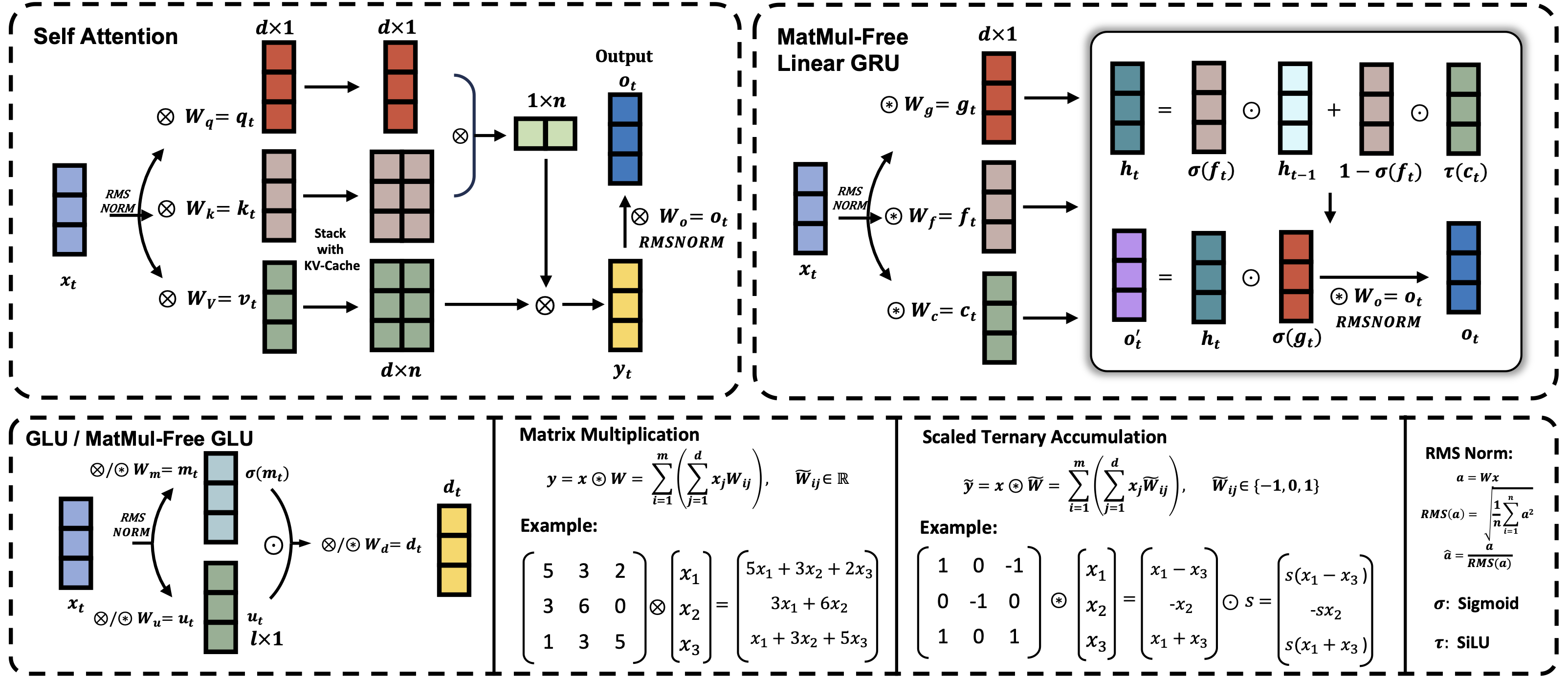

MatMul-Free LM is a language model architecture that eliminates the need for Matrix Multiplication (MatMul) operations.

This repository provides an implementation of MatMul-Free LM that is compatible with the 🤗 Transformers library.
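To make the MatMul-free idea concrete, here is a minimal sketch, assuming weights restricted to the ternary values -1, 0, and +1. With such weights, a matrix-vector product needs no multiplications: each input is added, subtracted, or skipped. The function names `ternary_matvec` and `dense_matvec` are hypothetical and written for illustration only; this is not the repository's actual kernel.

```python
def ternary_matvec(weights, x):
    """Compute W @ x for a ternary matrix W using only additions and subtractions."""
    out = []
    for row in weights:
        acc = 0.0
        for w, value in zip(row, x):
            if w == 1:
                acc += value  # +1 weight: add the input
            elif w == -1:
                acc -= value  # -1 weight: subtract the input
            elif w != 0:
                raise ValueError("weights must be -1, 0, or +1")
            # 0 weight: the input is skipped
        out.append(acc)
    return out


def dense_matvec(weights, x):
    """Reference implementation using ordinary multiply-accumulate."""
    return [sum(w * value for w, value in zip(row, x)) for row in weights]


# Toy values chosen for illustration.
W = [[1, 0, -1],
     [-1, 1, 1]]
x = [0.5, 2.0, -1.5]

print(ternary_matvec(W, x))  # [2.0, 0.0]
print(dense_matvec(W, x))    # [2.0, 0.0]
```

Both functions return the same result, but the ternary version never multiplies, which is the property a MatMul-free layer can exploit.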

## Scaling Law

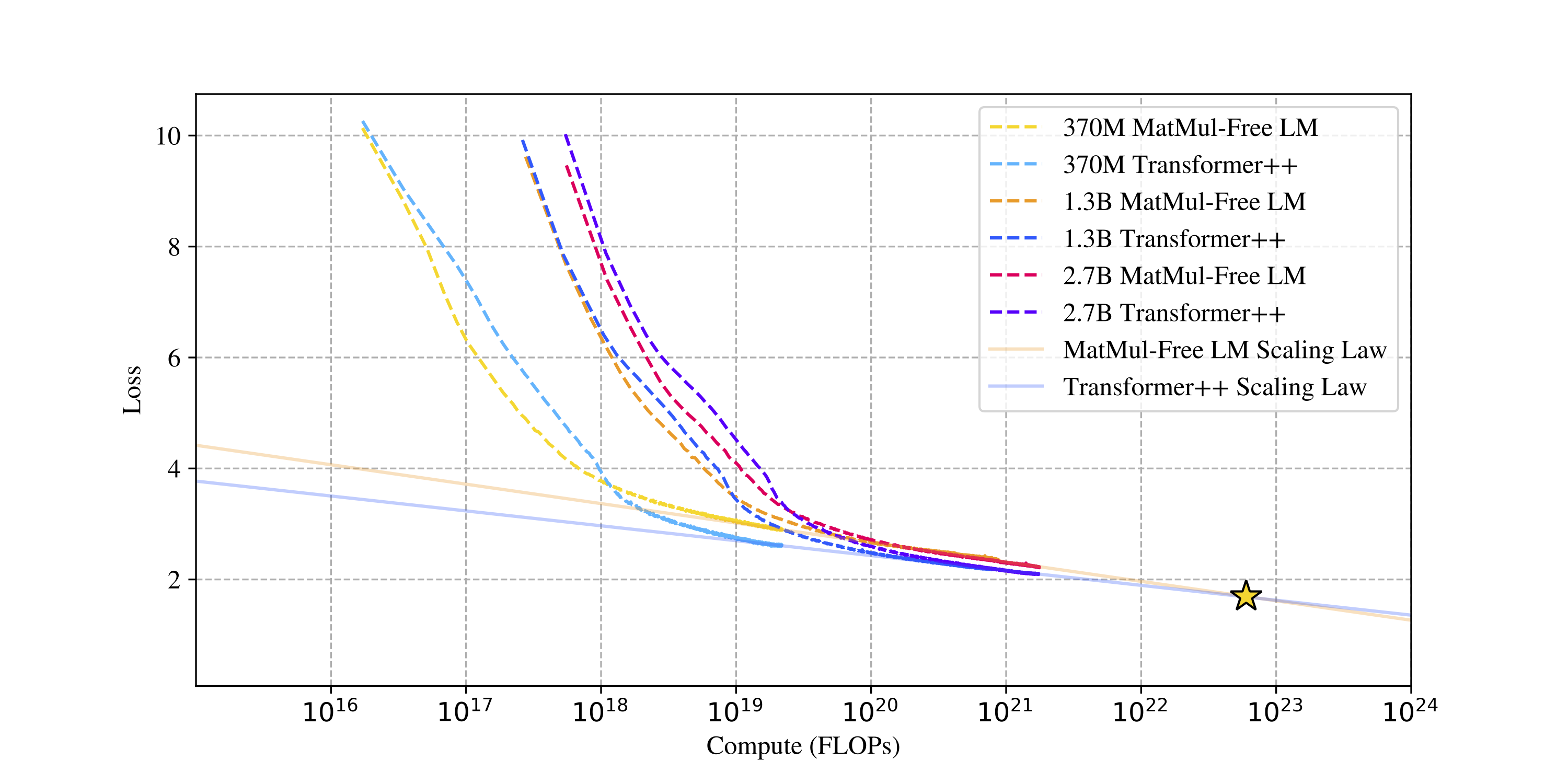

We evaluate how well the scaling law fits the 370M, 1.3B, and 2.7B parameter models for both Transformer++ and our model.

For a fair comparison, each operation is treated identically, though our model uses more efficient ternary weights in some layers.

Interestingly, the scaling projection for our model exhibits a steeper descent compared to Transformer++, suggesting our architecture is more efficient in leveraging additional compute to improve performance.
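The "steeper descent" comparison can be sketched as a slope comparison in log-log space. The example below assumes a power-law form `loss = a * compute ** slope`, which is a common choice but is not confirmed here as the exact fit used for the figure. The helper `fit_power_law` is hypothetical, and the data points are synthetic placeholders, not measurements from either model.

```python
import math


def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = intercept + slope * log(compute)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(v) for v in loss]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic placeholder data generated from known exponents (NOT real results).
compute = [1e18, 1e19, 1e20]
baseline_loss = [3.0 * (c / 1e18) ** -0.05 for c in compute]
candidate_loss = [3.1 * (c / 1e18) ** -0.07 for c in compute]

slope_baseline, _ = fit_power_law(compute, baseline_loss)
slope_candidate, _ = fit_power_law(compute, candidate_loss)

# A more negative slope means loss falls faster as compute grows.
print(slope_candidate < slope_baseline)
```

Under this assumption, the curve with the more negative slope gains more from each additional order of magnitude of compute, even if it starts from a higher loss.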

## Usage

Models can be initialized from the default configs in the `mmfreelm` package or loaded from the pre-trained checkpoints through the 🤗 Transformers `AutoModel` classes. Install the dependencies, then load a checkpoint:

```shell
pip install transformers
pip install -U git+https://github.com/ridgerchu/matmulfreellm
```

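```python
# These imports make the HGRNBit architecture available to the
# 🤗 Transformers Auto* classes before the checkpoint is loaded.
from mmfreelm.models import HGRNBitConfig
from mmfreelm.layers import hgrn_bit
from transformers import AutoModelForCausalLM

# Download and load the pre-trained 2.7B checkpoint from the Hugging Face Hub.
model = AutoModelForCausalLM.from_pretrained("ridger/MMfreeLM-2.7B")
```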
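Once a checkpoint loads, text generation should follow the usual 🤗 Transformers pattern. The sketch below wraps it in a hypothetical helper, `generate_text`. It assumes a CUDA GPU is available and that the checkpoint ships a tokenizer loadable with `AutoTokenizer`. The sampling parameters are illustrative values, not tuned recommendations.

```python
def generate_text(prompt, model_name="ridger/MMfreeLM-2.7B", max_length=32):
    """Sample a continuation of `prompt` (hypothetical helper; assumes a CUDA GPU)."""
    # Imports live inside the function so that defining it has no side effects.
    from mmfreelm.models import HGRNBitConfig  # noqa: F401  makes HGRNBit available
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    # Assumption: half precision on GPU is acceptable for inference.
    model = AutoModelForCausalLM.from_pretrained(model_name).cuda().half()

    input_ids = tokenizer(prompt, return_tensors="pt").input_ids.cuda()
    outputs = model.generate(
        input_ids,
        max_length=max_length,  # total length, prompt tokens included
        do_sample=True,
        top_p=0.4,
        temperature=0.6,
    )
    return tokenizer.batch_decode(outputs, skip_special_tokens=True)[0]
```

Calling `generate_text("Once upon a time, ")` downloads the checkpoint on first use and returns the prompt followed by the sampled continuation. Because sampling is enabled, the output varies between runs.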

## Pre-trained Model Zoo

| Model Size | Layers | Hidden dimension | Trained tokens |
| :---: | :---: | :---: | :---: |
| [370M](https://huggingface.co/ridger/MMfreeLM-370M) | 24 | 1024 | 15B |
| [1.3B](https://huggingface.co/ridger/MMfreeLM-1.3B) | 24 | 2048 | 100B |
| [2.7B](https://huggingface.co/ridger/MMfreeLM-2.7B) | 32 | 2560 | 100B |