Region-based Cluster Discrimination for Visual Representation Learning

Abstract

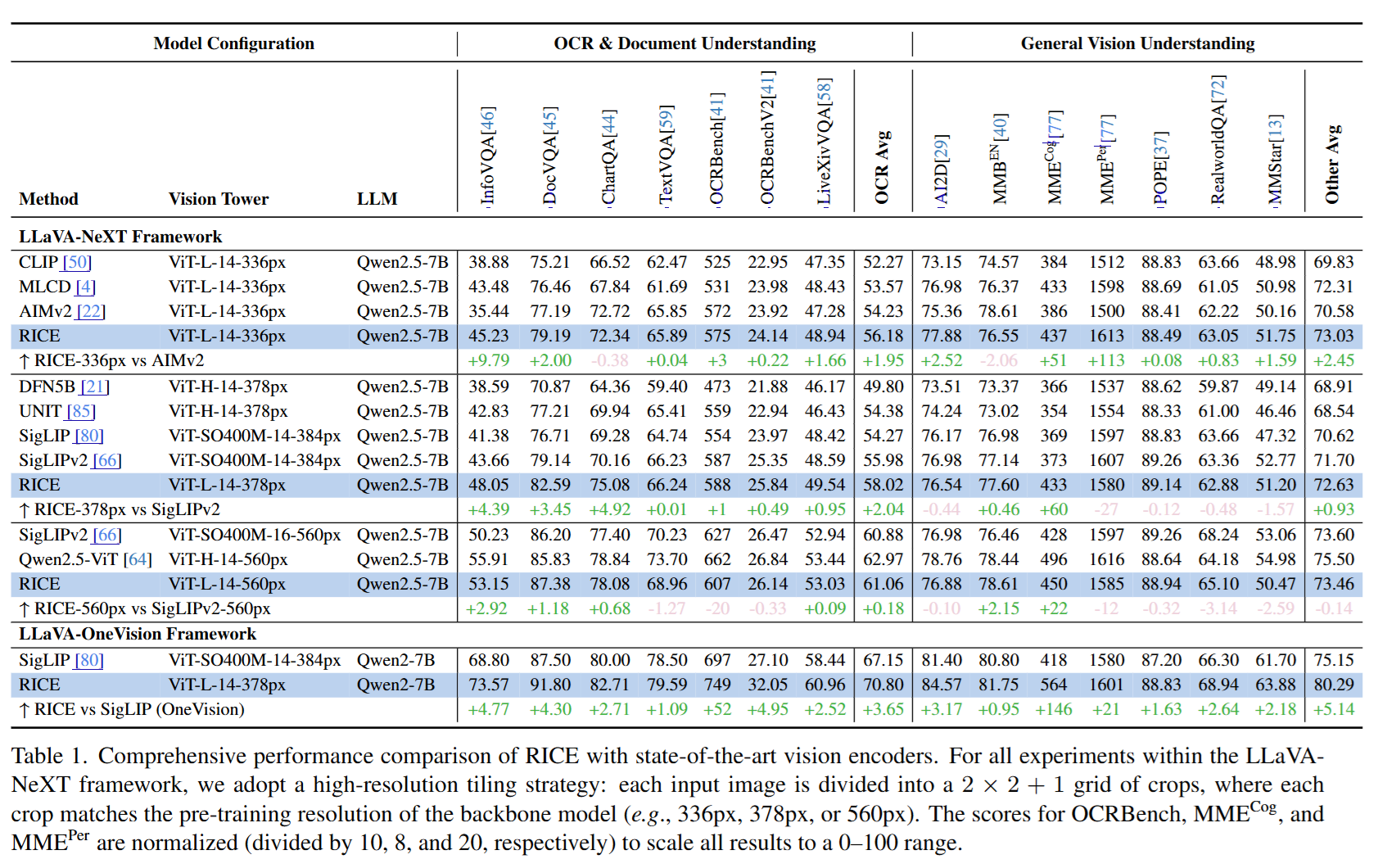

RICE, a novel method using a Region Transformer and region cluster discrimination loss, enhances region-level visual and OCR capabilities, outperforming previous methods in tasks like segmentation and dense detection.

Learning visual representations is foundational for a broad spectrum of downstream tasks. Although recent vision-language contrastive models, such as CLIP and SigLIP, have achieved impressive zero-shot performance via large-scale vision-language alignment, their reliance on global representations constrains their effectiveness for dense prediction tasks, such as grounding, OCR, and segmentation. To address this gap, we introduce Region-Aware Cluster Discrimination (RICE), a novel method that enhances region-level visual and OCR capabilities. We first construct a billion-scale candidate region dataset and propose a Region Transformer layer to extract rich regional semantics. We further design a unified region cluster discrimination loss that jointly supports object and OCR learning within a single classification framework, enabling efficient and scalable distributed training on large-scale data. Extensive experiments show that RICE consistently outperforms previous methods on tasks, including segmentation, dense detection, and visual perception for Multimodal Large Language Models (MLLMs). The pre-trained models have been released at https://github.com/deepglint/MVT.

Community

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- FOCUS: Unified Vision-Language Modeling for Interactive Editing Driven by Referential Segmentation (2025)

- Hierarchical Cross-modal Prompt Learning for Vision-Language Models (2025)

- Advancing Visual Large Language Model for Multi-granular Versatile Perception (2025)

- HierVL: Semi-Supervised Segmentation leveraging Hierarchical Vision-Language Synergy with Dynamic Text-Spatial Query Alignment (2025)

- Autoregressive Semantic Visual Reconstruction Helps VLMs Understand Better (2025)

- HRSeg: High-Resolution Visual Perception and Enhancement for Reasoning Segmentation (2025)

- Partial CLIP is Enough: Chimera-Seg for Zero-shot Semantic Segmentation (2025)

Please give a thumbs up to this comment if you found it helpful!

If you want recommendations for any Paper on Hugging Face checkout this Space

You can directly ask Librarian Bot for paper recommendations by tagging it in a comment:

@librarian-bot

recommend

Models citing this paper 1

Datasets citing this paper 0

No dataset linking this paper

Spaces citing this paper 0

No Space linking this paper