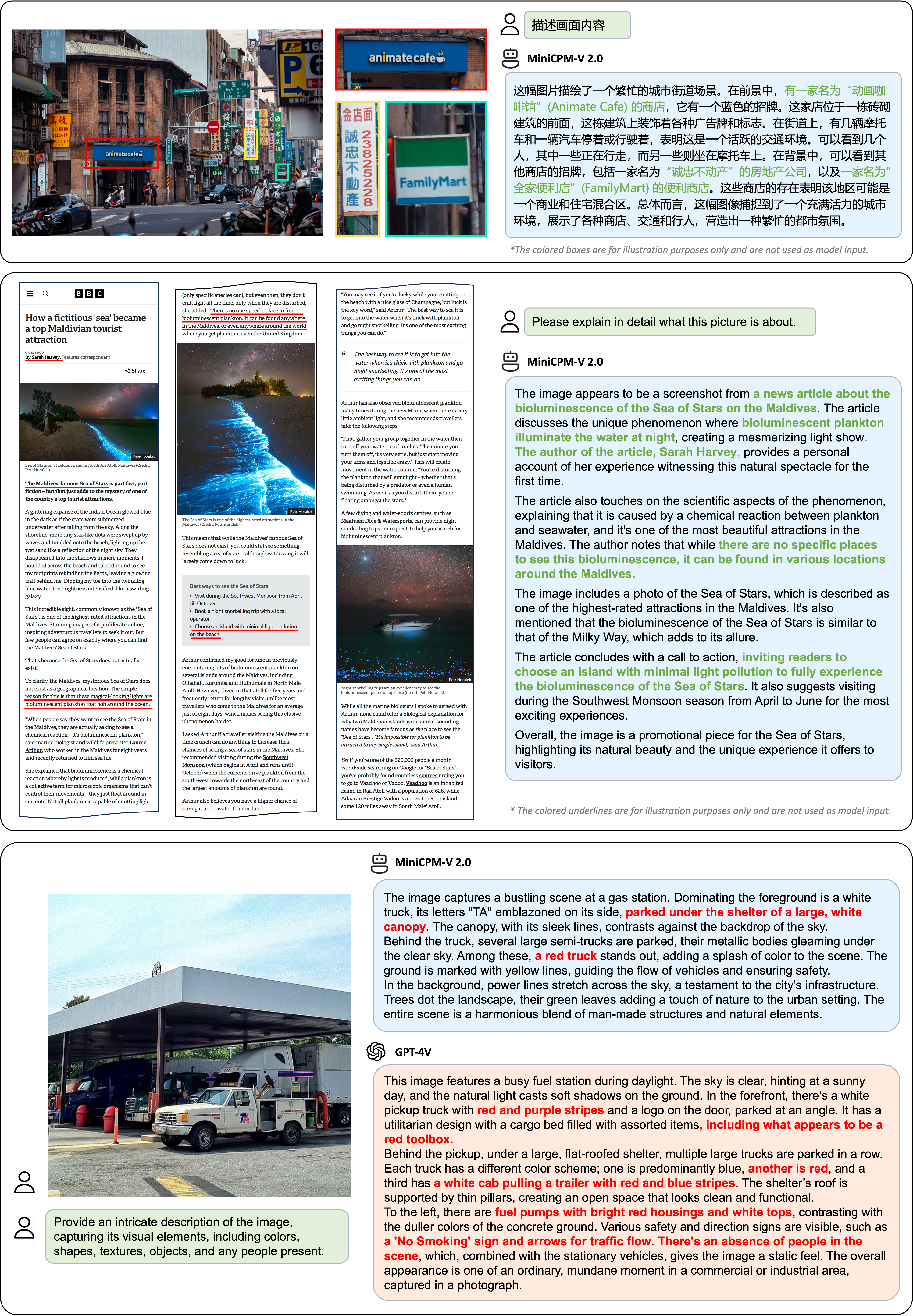

| Model | Size | TextVQA val | DocVQA test | OCRBench | OpenCompass | MME | MMB dev(en) | MMB dev(zh) | MMMU val | MathVista | LLaVA Bench | Object HalBench |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Proprietary models | | | | | | | | | | | | |
| Gemini Pro Vision | - | 74.6 | 88.1 | 680 | 63.8 | 2148.9 | 75.2 | 74.0 | 48.9 | 45.8 | 79.9 | - |
| GPT-4V | - | 78.0 | 88.4 | 516 | 63.2 | 1771.5 | 75.1 | 75.0 | 53.8 | 47.8 | 93.1 | 86.4 / 92.7 |
| Open-source models 6B~34B | | | | | | | | | | | | |
| Yi-VL-6B | 6.7B | 45.5* | 17.1* | 290 | 49.3 | 1915.1 | 68.6 | 68.3 | 40.3 | 28.8 | 51.9 | - |
| Qwen-VL-Chat | 9.6B | 61.5 | 62.6 | 488 | 52.1 | 1860.0 | 60.6 | 56.7 | 37.0 | 33.8 | 67.7 | 56.2 / 80.0 |
| Yi-VL-34B | 34B | 43.4* | 16.9* | 290 | 52.6 | 2050.2 | 71.1 | 71.4 | 45.1 | 30.7 | 62.3 | - |
| DeepSeek-VL-7B | 7.3B | 64.7* | 47.0* | 435 | 55.6 | 1765.4 | 74.1 | 72.8 | 38.3 | 36.8 | 77.8 | - |
| TextMonkey | 9.7B | 64.3 | 66.7 | 558 | - | - | - | - | - | - | - | - |
| CogVLM-Chat | 17.4B | 70.4 | 33.3* | 590 | 52.5 | 1736.6 | 63.7 | 53.8 | 37.3 | 34.7 | 73.9 | 73.6 / 87.4 |
| Open-source models 1B~3B | | | | | | | | | | | | |
| DeepSeek-VL-1.3B | 1.7B | 58.4* | 37.9* | 413 | 46.0 | 1531.6 | 64.0 | 61.2 | 33.8 | 29.4 | 51.1 | - |
| MobileVLM V2 | 3.1B | 57.5 | 19.4* | - | - | 1440.5(P) | 63.2 | - | - | - | - | - |
| Mini-Gemini | 2.2B | 56.2 | 34.2* | - | - | 1653.0 | 59.8 | - | 31.7 | - | - | - |
| MiniCPM-V | 2.8B | 60.6 | 38.2 | 366 | 47.6 | 1650.2 | 67.9 | 65.3 | 38.3 | 28.9 | 51.3 | 78.4 / 88.5 |
| MiniCPM-V 2.0 | 2.8B | 74.1 | 71.9 | 605 | 55.0 | 1808.6 | 69.6 | 68.1 | 38.2 | 38.7 | 69.2 | 85.5 / 92.2 |