Upload 24 files

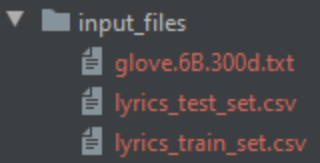

- compute_score.py +75 -0

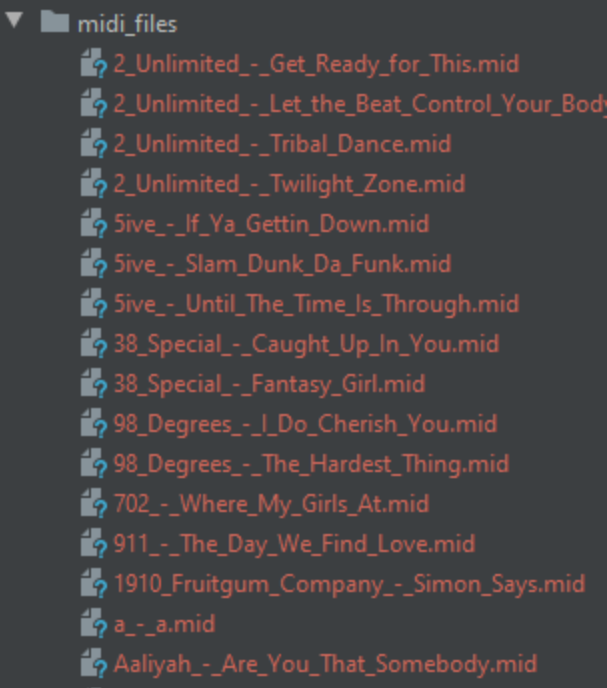

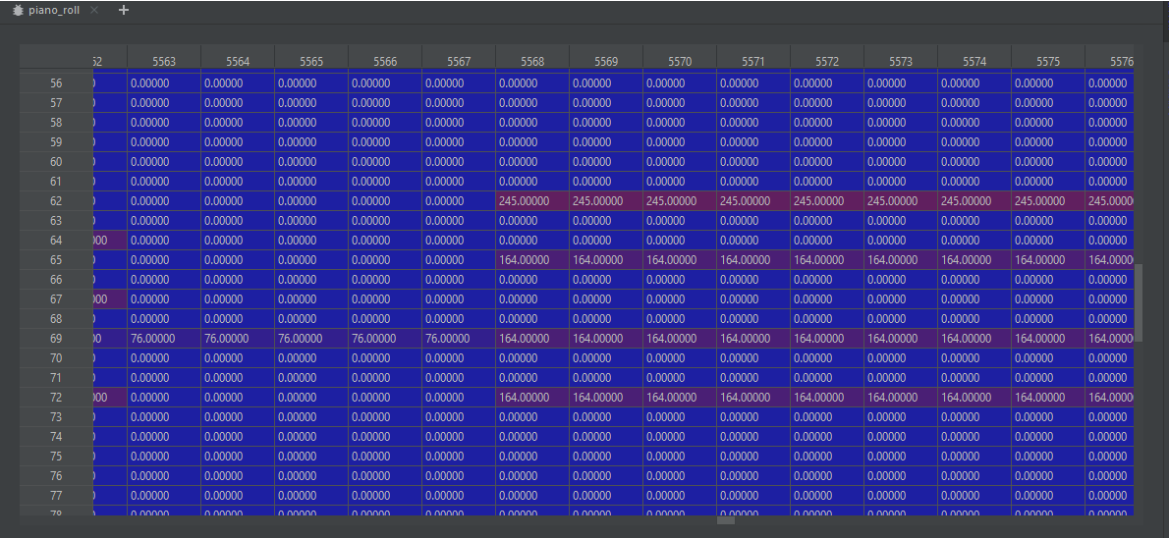

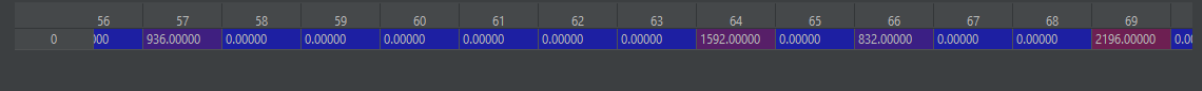

- data_loader.py +185 -0

- experiment.py +519 -0

- extract_melodies_features.py +179 -0

- figures/1.PNG +0 -0

- figures/10.PNG +0 -0

- figures/11.PNG +0 -0

- figures/12.PNG +0 -0

- figures/13.PNG +0 -0

- figures/14.PNG +0 -0

- figures/15.PNG +0 -0

- figures/2.PNG +0 -0

- figures/3.PNG +0 -0

- figures/4.PNG +0 -0

- figures/5.PNG +0 -0

- figures/6.PNG +0 -0

- figures/7.PNG +0 -0

- figures/8.PNG +0 -0

- figures/9.PNG +0 -0

- lstm_lyrics.py +76 -0

- lstm_melodies_lyrics.py +79 -0

- prepare_data.py +112 -0

- readme.md +385 -0

- rnn.py +108 -0

compute_score.py

ADDED

@@ -0,0 +1,75 @@
+"""
+This file computes the scores of the generated sentences
+"""
+import numpy as np
+from numpy import dot
+from numpy.linalg import norm
+from textblob import TextBlob
+
+
+def calculate_cosine_similarity_n_gram(all_generated_lyrics, all_original_lyrics, n, word2vec):
+    """
+    This function computes the similarity between 'n' words that are adjacent to each other.
+    :param all_generated_lyrics: list of all generated lyrics
+    :param all_original_lyrics: list of all original lyrics
+    :param n: size of grams
+    :param word2vec: a dictionary that maps a word to its embedding vector
+    :return: mean similarity between the all_generated_lyrics and all_original_lyrics
+    """
+    cos_sim_list = []
+    for song_original_lyrics, song_generated_lyrics in zip(all_original_lyrics, all_generated_lyrics):
+        if len(song_original_lyrics) != len(song_generated_lyrics):
+            raise Exception('The vectors are not equal')
+        cos_sim_song_list = []
+        for i in range(len(song_original_lyrics) - n + 1):
+            starting_index = i
+            ending_index = i + n
+            n_gram_original = song_original_lyrics[starting_index:ending_index]
+            n_gram_generated = song_generated_lyrics[starting_index:ending_index]
+            original_vector = np.mean([word2vec[word] for word in n_gram_original], axis=0)
+            generated_vector = np.mean([word2vec[word] for word in n_gram_generated], axis=0)
+            cos_sim = dot(original_vector, generated_vector) / (norm(original_vector) * norm(generated_vector))
+            cos_sim_song_list.append(cos_sim)
+        cos_sim_song = np.mean(cos_sim_song_list)
+        cos_sim_list.append(cos_sim_song)
+    return np.mean(cos_sim_list)
+
+
+def calculate_cosine_similarity(all_generated_lyrics, all_original_lyrics, word2vec):
+    # The mean similarity between the generated lyrics and the original lyrics, one vector per song.
+    cos_sim_list = []
+    for song_original_lyrics, song_generated_lyrics in zip(all_original_lyrics, all_generated_lyrics):
+        original_vector = np.mean([word2vec[word] for word in song_original_lyrics], axis=0)
+        generated_vector = np.mean([word2vec[word] for word in song_generated_lyrics], axis=0)
+        cos_sim = dot(original_vector, generated_vector) / (norm(original_vector) * norm(generated_vector))
+        cos_sim_list.append(cos_sim)
+    return np.mean(cos_sim_list)
+
+
+def get_polarity_diff(all_generated_lyrics, all_original_lyrics):
+    # The polarity score is a float within the range [-1.0, 1.0].
+    pol_diff_list = []
+    for song_original_lyrics, song_generated_lyrics in zip(all_original_lyrics, all_generated_lyrics):
+        generated_lyrics = ' '.join(song_generated_lyrics)
+        generated_blob = TextBlob(generated_lyrics)
+        original_lyrics = ' '.join(song_original_lyrics)
+        original_blob = TextBlob(original_lyrics)
+        pol_diff = abs(generated_blob.sentiment.polarity - original_blob.sentiment.polarity)
+        pol_diff_list.append(pol_diff)
+    return np.mean(pol_diff_list)
+
+
+def get_subjectivity_diff(all_generated_lyrics, all_original_lyrics):
+    # The subjectivity is a float within the range [0.0, 1.0] where 0.0 is very objective and 1.0 is very subjective.
+    subj_diff_list = []
+    for song_original_lyrics, song_generated_lyrics in zip(all_original_lyrics, all_generated_lyrics):
+        generated_lyrics = ' '.join(song_generated_lyrics)
+        generated_blob = TextBlob(generated_lyrics)
+        original_lyrics = ' '.join(song_original_lyrics)
+        original_blob = TextBlob(original_lyrics)
+        subj_diff = abs(generated_blob.sentiment.subjectivity - original_blob.sentiment.subjectivity)
+        subj_diff_list.append(subj_diff)
+    return np.mean(subj_diff_list)
+
+
+print("Loaded Successfully")
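
The n-gram metric in `compute_score.py` averages the word vectors inside each aligned window of `n` tokens and compares the two averages by cosine similarity. The sketch below works through that idea on toy data without NumPy. The helper names (`mean_vector`, `cosine`, `ngram_cosine`) and the two-dimensional embeddings are illustrative assumptions and are not part of the commit.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    count = len(vectors)
    return [sum(component) / count for component in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def ngram_cosine(original, generated, n, embeddings):
    """Mean cosine similarity over aligned n-token windows of two token lists."""
    if len(original) != len(generated):
        raise ValueError("token lists must have the same length")
    scores = []
    for start in range(len(original) - n + 1):
        orig_vec = mean_vector([embeddings[w] for w in original[start:start + n]])
        gen_vec = mean_vector([embeddings[w] for w in generated[start:start + n]])
        scores.append(cosine(orig_vec, gen_vec))
    return sum(scores) / len(scores)


# Toy 2-d embeddings (hypothetical values, for illustration only).
toy_embeddings = {"love": [1.0, 0.0], "hate": [0.0, 1.0], "you": [1.0, 1.0]}
identical = ngram_cosine(["love", "you"], ["love", "you"], 1, toy_embeddings)
different = ngram_cosine(["love", "you"], ["hate", "you"], 1, toy_embeddings)
bigram = ngram_cosine(["love", "you"], ["hate", "you"], 2, toy_embeddings)
```

With `n=1` every word is compared only with the word at the same position, so a single mismatch pulls the score down sharply. Larger windows smooth the comparison because shared neighbours dominate the averaged vector.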

data_loader.py

ADDED

@@ -0,0 +1,185 @@
+"""
+This file manages the loading of the data
+"""
+import csv
+import os
+import pickle
+import string
+
+import numpy as np
+import pretty_midi
+
+
+def get_midi_files(midi_pickle, midi_folder, artists, names):
+    """
+    This function loads the midi files
+    :param midi_pickle: path for the pickle file
+    :param midi_folder: path for the midi folder
+    :param artists: list of artists
+    :param names: list of song names
+    :return: list of pretty midi objects
+    """
+    # If the pickle file already exists, read that file
+    pretty_midi_songs = _read_pickle_if_exists(pickle_path=midi_pickle)
+    if pretty_midi_songs is None:  # The pickle file does not exist, so load the midi files from disk
+        pretty_midi_songs = []
+        lower_upper_files = get_lower_upper_dict(midi_folder)
+        if len(artists) != len(names):
+            raise Exception('Artists and Names lengths are different.')
+        for artist, song_name in zip(artists, names):
+            if song_name[0] == " ":
+                song_name = song_name[1:]
+            song_file_name = f'{artist}_-_{song_name}.mid'.replace(" ", "_")
+            if song_file_name not in lower_upper_files:
+                print(f'Song {song_file_name} does not exist, even though'
+                      f' the song is provided in the training or testing sets')
+                continue
+            original_file_name = lower_upper_files[song_file_name]
+            midi_file_path = os.path.join(midi_folder, original_file_name)
+            try:
+                pretty_midi_format = pretty_midi.PrettyMIDI(midi_file_path)
+                pretty_midi_songs.append(pretty_midi_format)
+            except Exception:
+                print(f'Exception raised from Mido using this file: {midi_file_path}')
+
+        _save_pickle(pickle_path=midi_pickle, content=pretty_midi_songs)
+    return pretty_midi_songs
+
+
+def get_lower_upper_dict(midi_folder):
+    """
+    This function maps each lower-case file name to its original (mixed-case) file name
+    :param midi_folder: midi folder path
+    :return: A dictionary from lower-case file name to original file name
+    """
+    lower_upper_files = {}
+    for file_name in os.listdir(midi_folder):
+        if file_name.endswith(".mid"):
+            lower_upper_files[file_name.lower()] = file_name
+    return lower_upper_files
+
+
+def get_input_sets(input_file, pickle_path, word2vec, midi_folder) -> dict:
+    """
+    This function loads the training or testing set that was provided by the course staff.
+    In addition, some pre-processing is applied to the lyrics here.
+    :param input_file: training or testing set path
+    :param pickle_path: training or testing pickle path
+    :param word2vec: dictionary that maps a word to a vector
+    :param midi_folder: the midi folder that we use to validate that a song exists
+    :return: a dictionary with the keys 'artists', 'names' and 'lyrics'
+    """
+    # If the pickle file already exists, read that file
+    pickle_value = _read_pickle_if_exists(pickle_path=pickle_path)
+    # We want only songs with midi file
+    lower_upper_files = get_lower_upper_dict(midi_folder)
+    if pickle_value is not None:  # The pickle exists, so convert the list into variables
+        artists, names, lyrics = pickle_value[0], pickle_value[1], pickle_value[2]
+    else:  # The pickle file does not exist, so read the input file.
+        artists, names, lyrics = [], [], []
+        with open(input_file, newline='') as f:
+            lines = csv.reader(f, delimiter=',', quotechar='|')
+            for row in lines:
+                artist_name = row[0]
+                song_name = row[1]
+                if song_name[0] == " ":
+                    song_name = song_name[1:]
+                song_file_name = f'{artist_name}_-_{song_name}.mid'.replace(" ", "_")
+                if song_file_name not in lower_upper_files:
+                    print(f'Song {song_file_name} does not exist, even though'
+                          f' the song is provided in the training or testing sets')
+                    continue
+                original_file_name = lower_upper_files[song_file_name]
+                midi_file_path = os.path.join(midi_folder, original_file_name)
+                try:
+                    pretty_midi.PrettyMIDI(midi_file_path)
+                except Exception:
+                    print(f'Exception raised from Mido using this file: {midi_file_path}')
+                    continue
+                song_lyrics = row[2]
+                song_lyrics = song_lyrics.replace('&', '')
+                song_lyrics = song_lyrics.replace(' ', ' ')
+                song_lyrics = song_lyrics.replace('\'', '')
+                song_lyrics = song_lyrics.replace('--', ' ')
+
+                tokens = song_lyrics.split()
+                table = str.maketrans('', '', string.punctuation)  # remove punctuation from each token
+                tokens = [w.translate(table) for w in tokens]
+                tokens = [word for word in tokens if
+                          word.isalpha()]  # remove remaining tokens that are not alphabetic
+                tokens = [word.lower() for word in tokens if word.lower() in word2vec]  # lower-case, keep known words
+                song_lyrics = ' '.join(tokens)
+                artists.append(artist_name)
+                names.append(song_name)
+                lyrics.append(song_lyrics)
+        _save_pickle(pickle_path=pickle_path, content=[artists, names, lyrics])
+
+    return {'artists': artists, 'names': names, 'lyrics': lyrics}
+
+
+def get_word2vec(word2vec_path, pre_trained, vector_size, encoding='utf-8') -> dict:
+    """
+    This function returns a dictionary that maps a word to a vector
+    :param word2vec_path: path for the pickle file
+    :param pre_trained: path for the pre-trained embedding file
+    :param vector_size: the vector size for each word
+    :param encoding: the encoding of the pre_trained file
+    :return: dictionary that maps a word to a vector
+    """
+    # If the pickle file already exists, read that file
+    word2vec = _read_pickle_if_exists(word2vec_path)
+    if word2vec is None:  # The pickle file does not exist.
+        with open(pre_trained, 'r', encoding=encoding) as f:  # Read the pre-trained word vectors.
+            list_of_lines = list(f)
+        word2vec = _iterate_over_glove_list(list_of_lines=list_of_lines, vector_size=vector_size)
+        _save_pickle(pickle_path=word2vec_path, content=word2vec)  # Save the pickle for the next run
+    return word2vec
+
+
+def _iterate_over_glove_list(list_of_lines, vector_size):
+    """
+    This function iterates over the glove list line by line and returns a word2vec dictionary
+    :param list_of_lines: List of glove lines
+    :param vector_size: the size of the embedding vector
+    :return: dictionary that maps a word to a vector
+    """
+    word2vec = {}
+    punctuation = string.punctuation
+    for line in list_of_lines:
+        values = line.split(' ')
+        word = values[0]
+        if word in punctuation:
+            continue
+        vec = np.asarray(values[1:], "float32")
+        if len(vec) != vector_size:
+            raise Warning(f"Vector size is different than {vector_size}")
+        else:
+            word2vec[word] = vec
+    return word2vec
+
+
+def _save_pickle(pickle_path, content):
+    """
+    This function saves a value to a pickle file
+    :param pickle_path: path for the pickle file
+    :param content: the value you want to save
+    :return: Nothing
+    """
+    with open(pickle_path, 'wb') as f:
+        pickle.dump(content, f)
+
+
+def _read_pickle_if_exists(pickle_path):
+    """
+    This function reads a pickle file
+    :param pickle_path: path for the pickle file
+    :return: the saved value in the pickle file, or None if the file does not exist
+    """
+    pickle_file = None
+    if os.path.exists(pickle_path):
+        with open(pickle_path, 'rb') as f:
+            pickle_file = pickle.load(f)
+    return pickle_file
+
+
+print('Loaded Successfully')
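
Every loader in `data_loader.py` follows the same read-or-compute pattern: return the pickled value if the file exists, otherwise build the value and pickle it for the next run. A minimal sketch of that pattern as one helper is shown below. The name `load_or_compute`, the `expensive` stand-in and the temporary path are hypothetical and do not appear in the commit.

```python
import os
import pickle
import tempfile


def load_or_compute(pickle_path, compute):
    """Return the value cached at pickle_path, or compute, cache and return it."""
    if os.path.exists(pickle_path):
        with open(pickle_path, "rb") as f:
            return pickle.load(f)
    value = compute()
    with open(pickle_path, "wb") as f:
        pickle.dump(value, f)
    return value


calls = []


def expensive():
    """Stand-in for parsing the CSV or the MIDI folder."""
    calls.append(1)  # record each real computation
    return {"artists": ["a"], "names": ["b"], "lyrics": ["c d"]}


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "train.pkl")
    first = load_or_compute(path, expensive)   # computes and writes the pickle
    second = load_or_compute(path, expensive)  # served from the pickle
```

The cache is keyed only by the file path, so a stale pickle is returned even if the input CSV or the MIDI folder has changed. Deleting the pickle forces a rebuild. Pickle files should also only be loaded when they come from a trusted source, because unpickling can execute arbitrary code.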

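
The lyric pre-processing and the GloVe parsing in `data_loader.py` work together: only tokens that survive punctuation stripping and also appear in the embedding vocabulary are kept. The sketch below illustrates both steps on toy input using plain lists instead of NumPy arrays. The function names `parse_glove_lines` and `clean_lyrics` and the sample strings are assumptions made for illustration.

```python
import string


def parse_glove_lines(lines, vector_size):
    """Parse 'word v1 v2 ...' lines into {word: [floats]}, skipping bare punctuation."""
    embeddings = {}
    for line in lines:
        word, *values = line.split()
        if word in string.punctuation:
            continue
        vector = [float(v) for v in values]
        if len(vector) != vector_size:
            raise ValueError(f"expected {vector_size} values for {word!r}, got {len(vector)}")
        embeddings[word] = vector
    return embeddings


def clean_lyrics(text, vocabulary):
    """Strip punctuation, drop non-alphabetic tokens, lower-case, keep in-vocabulary words."""
    table = str.maketrans("", "", string.punctuation)
    raw_tokens = text.replace("&", "").replace("--", " ").split()
    tokens = [token.translate(table) for token in raw_tokens]
    return [t.lower() for t in tokens if t.isalpha() and t.lower() in vocabulary]


glove = parse_glove_lines(["hello 0.1 0.2", ", 0.0 0.0", "world 0.3 0.4"], vector_size=2)
tokens = clean_lyrics("Hello & world -- it's 4ever, HELLO!", glove)
```

Filtering against the vocabulary at load time means that every remaining word can later be looked up in the embedding dictionary without a `KeyError`, which the scoring functions rely on.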

experiment.py

ADDED

@@ -0,0 +1,519 @@
+"""
+This file manages the experiments, see the main function for changing the settings
+"""
+import os
+import random
+import time
+
+import pandas as pd
+from gtts import gTTS
+from keras_preprocessing.text import Tokenizer
+
+
+def main():
+    """
+    This function runs the process of the experiments. It iterates over the parameters and outputs the results.
+    :return: Nothing
+    """
+    # Some settings for the files we will use
+    saved_file_type = 'pkl'
+    midi_pickle = os.path.join(PICKLES_FOLDER, f"midi.{saved_file_type}")
+    midi_folder = os.path.join(DATA_PATH, "midi_files")
+
+    # Read a pre-trained word2vec dictionary
+    word2vec_path = os.path.join(PICKLES_FOLDER, f"{WORD2VEC_FILENAME}.{saved_file_type}")
+    pre_trained = os.path.join(INPUT_FOLDER, f"{GLOVE_FILE_NAME}.txt")
+
+    # Get the embedding dictionary that maps a word to a vector
+    word2vec = get_word2vec(word2vec_path=word2vec_path,
+                            pre_trained=pre_trained,
+                            vector_size=VECTOR_SIZE,
+                            encoding=ENCODING)
+
+    # Load the training and testing sets that were provided by the course staff
+    train_pickle_path = os.path.join(PICKLES_FOLDER, f'{TRAIN_NAME}.{saved_file_type}')
+    input_train_path = os.path.join(INPUT_FOLDER, INPUT_TRAINING_SET)
+    training_set = get_input_sets(input_file=input_train_path,
+                                  pickle_path=train_pickle_path,
+                                  word2vec=word2vec,
+                                  midi_folder=midi_folder)
+    test_pickle_path = os.path.join(PICKLES_FOLDER, f'{TEST_NAME}.{saved_file_type}')
+    input_test_path = os.path.join(INPUT_FOLDER, INPUT_TESTING_SET)
+    testing_set = get_input_sets(input_file=input_test_path,
+                                 pickle_path=test_pickle_path,
+                                 word2vec=word2vec,
+                                 midi_folder=midi_folder)
+
+    artists = training_set['artists'] + testing_set['artists']
+    songs_names = training_set['names'] + testing_set['names']
+    lyrics = training_set['lyrics'] + testing_set['lyrics']
+
+    tokenizer = Tokenizer()
+    tokenizer.fit_on_texts(lyrics)
+    total_words = len(tokenizer.word_index) + 1
+
+    encoded_lyrics_list = tokenizer.texts_to_sequences(lyrics)
+    index2word = tokenizer.index_word
+
+    melodies = get_midi_files(midi_folder=midi_folder,
+                              midi_pickle=midi_pickle,
+                              artists=artists,
+                              names=songs_names)
+
+    train_encoded_lyrics_list = encoded_lyrics_list[:len(training_set['lyrics'])]
+    test_encoded_lyrics_list = encoded_lyrics_list[len(training_set['lyrics']):]
+    melody_pickle = os.path.join(PICKLES_FOLDER, "melody_data." + saved_file_type)
+
+    comb_dict = {'seed': [], 'seq_length': [], 'learning_rate': [], 'batch_size': [], 'epochs': [],
+                 'patience': [], 'min_delta': [], 'melody_method': [], 'model_names': [], 'cos_sim_1_gram': [],
+                 'cos_sim_2_gram': [],
+                 'cos_sim_3_gram': [], 'cos_sim_5_gram': [], 'cos_sim_max_gram': [], 'polarity_diff': [],
+                 'subjectivity_diff': [], 'loss_val': [], 'accuracy': []}
+
+    word2vec_matrix = get_word2vec_matrix(total_words=total_words,
+                                          index2word=index2word,
+                                          word2vec=word2vec,
+                                          vector_size=VECTOR_SIZE)
+
+    for seed in seeds_list:
+        for sl in seq_length_list:
+            sets_dict = create_sets(
+                train_encoded_lyrics_list=train_encoded_lyrics_list,
+                test_encoded_lyrics_list=test_encoded_lyrics_list,
+                total_words=total_words,
+                seq_length=sl,
+                validation_set_size=VALIDATION_SET_SIZE,
+                seed=seed)
+            training_sequences = sets_dict['train'][1].shape[0] + sets_dict['validation'][1].shape[0]
+            for melody_method in melody_extraction:
+                m_train, m_val, m_test = get_melody_data_sets(
+                    train_num=training_sequences,
+                    val_size=VALIDATION_SET_SIZE,
+                    melodies_list=melodies,
+                    sequence_length=sl,
+                    encoded_lyrics_matrix=encoded_lyrics_list,
+                    pkl_file_path=melody_pickle,
+                    seed=seed,
+                    feature_method=melody_method)
+                melody_feature_vector_size = m_train.shape[2]
+                for l in learning_rate_list:
+                    for bs in batch_size_list:
+                        for ep in epochs_list:
+                            for pa in patience_list:
+                                for md in min_delta_list:
+                                    for u in units_list:
+                                        for m_name in model_names_list:
+                                            run_combination(comb_dict, sl, bs, ep, index2word, l, md, pa, seed,
+                                                            testing_set['artists'], melody_method,
+                                                            testing_set['lyrics'], testing_set['names'], total_words, u,
+                                                            word2vec,
+                                                            word2vec_matrix, tokenizer, sets_dict['train'][0],
+                                                            sets_dict['validation'][0], sets_dict['test'][0], m_train,
+                                                            m_val, m_test, sets_dict['train'][1],
+                                                            sets_dict['validation'][1], sets_dict['test'][1], m_name,
+                                                            melody_feature_vector_size)
+                if m_name == 'lyrics':
+                    break
+    # Here we save all the results to a csv file
+    comb_df = pd.DataFrame.from_dict(comb_dict)
+    comb_df.to_csv(COMB_PATH, index=False)
+
+
+def run_combination(comb_dict, seq_length, batch_size, epochs, index2word, learning_rate, min_delta, patience, seed,
+                    test_artists, melody_extraction_method,
+                    test_lyrics, test_names, total_words, units, word2vec, word2vec_matrix, tokenizer, x_train,
+                    x_val, x_test, m_train, m_val, m_test, y_train, y_val, y_test, model_name, melody_num_features):
+    """
+    This function runs one combination of specific settings on the training and testing sets
+    :param melody_extraction_method: The method used to extract melody features (naive or with meta data)
+    :param comb_dict: dictionary of all the results
+    :param seq_length: the input sequence length we used for the LSTM model
+    :param batch_size: the batch size for the model
+    :param epochs: number of epochs for the model
+    :param index2word: a dictionary that maps an index to a word
+    :param learning_rate: learning rate for the model
+    :param min_delta: minimum delta for early stopping of the model
+    :param patience: patience for the early stopping of the model
+    :param seed: for the random state
+    :param test_artists: list of artists in the testing set
+    :param test_lyrics: list of lyrics in the testing set
+    :param test_names: list of song names in the testing set
+    :param total_words: total size of the vocabulary
+    :param units: number of LSTM units
+    :param word2vec: dictionary that maps a word to a vector
+    :param word2vec_matrix: a matrix of words (rows) and vectors (columns) of the word2vec
+    :param tokenizer: Tokenizer object
+    :param x_train: lyrics training set
+    :param x_val: lyrics validation set
+    :param x_test: lyrics testing set
+    :param m_train: melody training set
+    :param m_val: melody validation set
+    :param m_test: melody testing set
+    :param y_train: training output words
+    :param y_val: validation output words
+    :param y_test: testing output words
+    :param model_name: the name of the model we want to use in this function
+    :param melody_num_features: size of the melody vector
+    :return: Nothing
+    """
+    model_save_type = 'h5'  # file type
+    initialize_seed(seed)  # files paths
+    parameters_name = f'seq_lens_{seq_length}_seed_{seed}_u_{units}_lr_{learning_rate}_bs_{batch_size}_ep_{epochs}_' \
+                      f'val_{VALIDATION_SET_SIZE}_pa_{patience}_md_{min_delta}_mn_{model_name}'
+    if not model_name == 'lyrics':
+        parameters_name += f'_fm_{melody_extraction_method}'
+    # A path for the weights
+    load_weights_path = os.path.join(WEIGHTS_FOLDER, f'weights_{parameters_name}.{model_save_type}')
+    model = None
+    if model_name == 'lyrics':
+        model = LSTMLyrics(seed=seed,
+                           loss=LOSS,
+                           metrics=METRICS,
+                           optimizer=OPTIMIZER,
+                           learning_rate=learning_rate,
+                           total_words=total_words,
+                           seq_length=seq_length,
+                           vector_size=VECTOR_SIZE,
+                           word2vec_matrix=word2vec_matrix,
+                           units=units)
+    elif model_name == 'melodies_lyrics':
+        x_train = [x_train, m_train]
+        x_val = [x_val, m_val]
+        x_test = [x_test, m_test]
+        model = LSTMLyricsMelodies(seed=seed,
+                                   loss=LOSS,
+                                   metrics=METRICS,
+                                   optimizer=OPTIMIZER,
+                                   learning_rate=learning_rate,
+                                   total_words=total_words,
+                                   seq_length=seq_length,
+                                   vector_size=VECTOR_SIZE,
+                                   word2vec_matrix=word2vec_matrix,
+                                   units=units,
+                                   melody_num_features=melody_num_features)
+    model.fit(weights_file=load_weights_path,
+              batch_size=batch_size,
+              epochs=epochs,
+              patience=patience,
+              min_delta=min_delta,
+              x_train=x_train,
+              y_train=y_train,
+              x_val=x_val,
+              y_val=y_val)
+    loss_val, accuracy = model.evaluate(x_test=x_test, y_test=y_test, batch_size=batch_size)
+    print(f'Loss on Testing set: {loss_val}')
+    print(f'Accuracy on Testing set: {accuracy}')
+    all_original_lyrics, all_generated_lyrics = generate_lyrics(
+        model_name=model_name,
+        word_index=index2word,
+        seq_length=seq_length,
+        model=model,
+        tokenizer=tokenizer,
+        artists=test_artists,
+        lyrics=test_lyrics,
+        names=test_names,
+        word2vec=word2vec,
+        melodies=m_test
+    )
+    cos_sim_1_gram = calculate_cosine_similarity_n_gram(all_generated_lyrics=all_generated_lyrics,
+                                                        all_original_lyrics=all_original_lyrics,
+                                                        n=1,
+                                                        word2vec=word2vec)
+    print(f'Mean Cosine Similarity (1-gram): {cos_sim_1_gram}')
+    cos_sim_2_gram = calculate_cosine_similarity_n_gram(all_generated_lyrics=all_generated_lyrics,
+                                                        all_original_lyrics=all_original_lyrics,
+                                                        n=2,
+                                                        word2vec=word2vec)
+    print(f'Mean Cosine Similarity (2-gram): {cos_sim_2_gram}')
+    cos_sim_3_gram = calculate_cosine_similarity_n_gram(all_generated_lyrics=all_generated_lyrics,
+                                                        all_original_lyrics=all_original_lyrics,
+                                                        n=3,
+                                                        word2vec=word2vec)
+    print(f'Mean Cosine Similarity (3-gram): {cos_sim_3_gram}')
+    cos_sim_5_gram = calculate_cosine_similarity_n_gram(all_generated_lyrics=all_generated_lyrics,
+                                                        all_original_lyrics=all_original_lyrics,

|

| 235 |

+

n=5,

|

| 236 |

+

word2vec=word2vec)

|

| 237 |

+

print(f'Mean Cosine Similarity (5-gram): {cos_sim_5_gram}')

|

| 238 |

+

cos_sim = calculate_cosine_similarity(all_generated_lyrics=all_generated_lyrics,

|

| 239 |

+

all_original_lyrics=all_original_lyrics,

|

| 240 |

+

word2vec=word2vec)

|

| 241 |

+

print(f'Mean Cosine Similarity (Max-gram): {cos_sim}')

|

| 242 |

+

pol_dif = get_polarity_diff(all_generated_lyrics=all_generated_lyrics, all_original_lyrics=all_original_lyrics)

|

| 243 |

+

print(f'Mean Polarity Difference: {pol_dif}')

|

| 244 |

+

subj_dif = get_subjectivity_diff(all_generated_lyrics=all_generated_lyrics, all_original_lyrics=all_original_lyrics)

|

| 245 |

+

print(f'Mean Subjectivity Difference: {subj_dif}')

|

| 246 |

+

update_comb_dict(batch_size, comb_dict, cos_sim, cos_sim_1_gram, cos_sim_2_gram, cos_sim_3_gram, cos_sim_5_gram,

|

| 247 |

+

epochs, learning_rate, min_delta, model_name, patience, pol_dif, seed, seq_length, subj_dif,

|

| 248 |

+

melody_extraction_method, loss_val, accuracy)

|

| 249 |

+

|

| 250 |

+

|

| 251 |

+

def update_comb_dict(batch_size, comb_dict, cos_sim, cos_sim_1_gram, cos_sim_2_gram, cos_sim_3_gram, cos_sim_5_gram,

|

| 252 |

+

epochs, learning_rate, min_delta, model_name, patience, pol_dif, seed, seq_length, subj_dif,

|

| 253 |

+

melody_extraction_method, loss_val, accuracy):

|

| 254 |

+

"""

|

| 255 |

+

This function updates the combination dictionary that is later written to CSV

|

| 256 |

+

:param accuracy: accuracy on the testing set

|

| 257 |

+

:param loss_val: loss on the testing set

|

| 258 |

+

:param batch_size: the batch size for the model

|

| 259 |

+

:param comb_dict: the results dictionary

|

| 260 |

+

:param cos_sim: the similarity score between the original and the generated sentence

|

| 261 |

+

:param cos_sim_1_gram: the similarity score between each 1-gram of the original and the generated sentence

|

| 262 |

+

:param cos_sim_2_gram: the similarity score between each 2-gram of the original and the generated sentence

|

| 263 |

+

:param cos_sim_3_gram: the similarity score between each 3-gram of the original and the generated sentence

|

| 264 |

+

:param cos_sim_5_gram: the similarity score between each 5-gram of the original and the generated sentence

|

| 265 |

+

:param epochs: number of epochs for the model

|

| 266 |

+

:param learning_rate: learning rate for the model

|

| 267 |

+

:param min_delta: minimum delta for early stopping of the model

|

| 268 |

+

:param model_name: The model name we want to test

|

| 269 |

+

:param patience: patience for the early stopping of the model

|

| 270 |

+

:param pol_dif: the polarity difference between the original and the generated sentence

|

| 271 |

+

:param seed: for the random state

|

| 272 |

+

:param seq_length: length of the given sequences

|

| 273 |

+

:param subj_dif: the subjectivity difference between the original and the generated sentence

|

| 274 |

+

:param melody_extraction_method: The method used to extract melody features (naive or with meta data)

|

| 275 |

+

:return: Nothing

|

| 276 |

+

"""

|

| 277 |

+

comb_dict['seed'].append(seed)

|

| 278 |

+

comb_dict['seq_length'].append(seq_length)

|

| 279 |

+

comb_dict['learning_rate'].append(learning_rate)

|

| 280 |

+

comb_dict['batch_size'].append(batch_size)

|

| 281 |

+

comb_dict['epochs'].append(epochs)

|

| 282 |

+

comb_dict['patience'].append(patience)

|

| 283 |

+

comb_dict['min_delta'].append(min_delta)

|

| 284 |

+

comb_dict['model_names'].append(model_name)

|

| 285 |

+

comb_dict['cos_sim_1_gram'].append(cos_sim_1_gram)

|

| 286 |

+

comb_dict['cos_sim_2_gram'].append(cos_sim_2_gram)

|

| 287 |

+

comb_dict['cos_sim_3_gram'].append(cos_sim_3_gram)

|

| 288 |

+

comb_dict['cos_sim_5_gram'].append(cos_sim_5_gram)

|

| 289 |

+

comb_dict['cos_sim_max_gram'].append(cos_sim)

|

| 290 |

+

comb_dict['polarity_diff'].append(pol_dif)

|

| 291 |

+

comb_dict['subjectivity_diff'].append(subj_dif)

|

| 292 |

+

comb_dict['melody_method'].append(melody_extraction_method)

|

| 293 |

+

comb_dict['loss_val'].append(loss_val)

|

| 294 |

+

comb_dict['accuracy'].append(accuracy)

|

| 295 |

+

|
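The docstring of `update_comb_dict` says the dictionary of result columns is eventually written to CSV. The code that performs that write is not shown in this part of the file, so the following is only a minimal sketch of one way to do it with the standard library. The helper `write_columns_to_csv` and the toy values are hypothetical and are not taken from the repository.

```python
import csv
import io


def write_columns_to_csv(columns, handle):
    """Write a dict of equal-length lists as CSV, one row per experiment run."""
    fieldnames = list(columns)
    writer = csv.writer(handle)
    writer.writerow(fieldnames)
    # zip(*...) turns the per-column lists into per-run rows.
    writer.writerows(zip(*(columns[name] for name in fieldnames)))


# Hypothetical results for two runs, using a subset of the keys seen above.
toy_results = {'seed': [0, 0], 'batch_size': [32, 64], 'accuracy': [0.11, 0.12]}
buffer = io.StringIO()
write_columns_to_csv(toy_results, buffer)
csv_text = buffer.getvalue()
```

Keeping one list per column, as `comb_dict` does, means every call must append to every key; otherwise the rows would fall out of alignment when zipped.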

| 296 |

+

|

| 297 |

+

def generate_song_given_sequence(model_name, model, tokenizer, seed_words, vector_of_indices, required_length, artist,

|

| 298 |

+

name, index_value, melodies_song):

|

| 299 |

+

"""

|

| 300 |

+

This function generates a new song

|

| 301 |

+

:param model_name: model name

|

| 302 |

+

:param melodies_song: a matrix that contains the melody features of this song

|

| 303 |

+

:param model: the trained model used to predict the next word

|

| 304 |

+

:param tokenizer: the fitted tokenizer object

|

| 305 |

+

:param seed_words: the seed text that opens the new song

|

| 306 |

+

:param vector_of_indices: array of shape (1, seq_length) holding the indices of the seed words

|

| 307 |

+

:param required_length: number of words to generate

|

| 308 |

+

:param artist: artist name, used in the MP3 file name

|

| 309 |

+

:param name: song name, used in the MP3 file name

|

| 310 |

+

:param index_value: index of the seed text, used in the MP3 file name

|

| 311 |

+

:return: the generated song text (the seed words followed by the generated words)

|

| 312 |

+

"""

|

| 313 |

+

new_song_lyrics: list = [seed_words]

|

| 314 |

+

for word_i in range(required_length):

|

| 315 |

+

if model_name == 'lyrics': # Different input for lyrics alone and lyrics and melodies.

|

| 316 |

+

voc_prob = model.predict(vector_of_indices)

|

| 317 |

+

else:

|

| 318 |

+

melody_seq = np.expand_dims(a=melodies_song[word_i], axis=0)

|

| 319 |

+

voc_prob = model.predict([vector_of_indices, melody_seq])

|

| 320 |

+

voc_prob = voc_prob.T # Transpose the array

|

| 321 |

+

word_index_array = np.arange(voc_prob.size)

|

| 322 |

+

# This line selects a word based on the predicted probabilities

|

| 323 |

+

index_of_selected_word = random.choices(word_index_array, k=1, weights=voc_prob.ravel())

|

| 324 |

+

selected_word = find_word_by_index(word_index=index_of_selected_word[0], tokenizer=tokenizer)

|

| 325 |

+

index_of_selected_word_array = np.array(index_of_selected_word).reshape(1, 1)

|

| 326 |

+

vector_of_indices = np.append(vector_of_indices, index_of_selected_word_array, axis=1)

|

| 327 |

+

remove_index = 0

|

| 328 |

+

vector_of_indices = np.delete(vector_of_indices, remove_index, 1)

|

| 329 |

+

new_song_lyrics.append(selected_word)

|

| 330 |

+

final_text = ' '.join(new_song_lyrics)

|

| 331 |

+

if WRITE_TO_MP3:

|

| 332 |

+

lyrics_to_mp3 = gTTS(text=final_text, lang='en', slow=False)

|

| 333 |

+

lyrics_to_mp3.save(os.path.join(OUTPUT_FOLDER, f"{artist}_{name}_{index_value}.mp3"))

|

| 334 |

+

return final_text

|

| 335 |

+

|
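The sampling step inside `generate_song_given_sequence` can be looked at in isolation. The sketch below is a minimal, standard-library-only illustration rather than the repository's code. The helper `sample_next_index` and the toy distribution are hypothetical, and the probabilities are assumed to be a flat sequence (a 2D model output would need flattening first).

```python
import random


def sample_next_index(probabilities, rng=random):
    """Draw one vocabulary index, weighted by the predicted probabilities.

    `probabilities` is assumed to be a flat sequence of non-negative floats,
    one entry per vocabulary index (hypothetical helper, for illustration).
    """
    indices = range(len(probabilities))
    # random.choices returns a list of k items; take the single draw.
    return rng.choices(indices, weights=probabilities, k=1)[0]


# Toy distribution over a 4-word vocabulary: index 2 is the most likely.
toy_probabilities = [0.1, 0.2, 0.6, 0.1]
rng = random.Random(0)
draws = [sample_next_index(toy_probabilities, rng) for _ in range(1000)]
most_common = max(set(draws), key=draws.count)
```

Sampling in proportion to the probabilities, instead of always taking the most probable word, lets the same seed text produce different songs on different runs.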

| 336 |

+

|

| 337 |

+

def find_word_by_index(word_index, tokenizer):

|

| 338 |

+

"""

|

| 339 |

+

This function returns the word given the index

|

| 340 |

+

:param word_index: the index of the word we want to find

|

| 341 |

+

:param tokenizer: the fitted tokenizer object whose word_index maps each word to its index

|

| 342 |

+

:return: the word at that index

|

| 343 |

+

"""

|

| 344 |

+

for word, index in tokenizer.word_index.items():

|

| 345 |

+

if index == word_index:

|

| 346 |

+

return word

|

| 347 |

+

|
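`find_word_by_index` scans the whole vocabulary on every call and implicitly returns `None` when no word matches. As a hedged alternative, the mapping could be inverted once and then queried directly. The sketch below assumes only that the vocabulary is a plain word-to-index dictionary; `build_index_to_word` and the toy vocabulary are hypothetical names.

```python
def build_index_to_word(word_index):
    """Invert a word -> index mapping into an index -> word mapping."""
    return {index: word for word, index in word_index.items()}


# Hypothetical vocabulary in the same shape as tokenizer.word_index.
toy_word_index = {'love': 1, 'night': 2, 'heart': 3}
index_to_word = build_index_to_word(toy_word_index)
word = index_to_word.get(2)       # the word stored at index 2
missing = index_to_word.get(99)   # None, like the loop falling through
```

Building the inverted dictionary costs one pass over the vocabulary; after that each lookup is a single dictionary access, which matters when one word is generated per step.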

| 348 |

+

|

| 349 |

+

def generate_lyrics(model_name, word_index, seq_length, model, tokenizer, artists, lyrics, names,

|

| 350 |

+

word2vec, melodies) -> (list, list):

|

| 351 |

+

"""

|

| 352 |

+

This function creates lyrics for each song in the testing set

|

| 353 |

+

:param melodies: a 3D array that holds, for each sequence, a 2D array of melody features with shape (sequence size, melody vector size).

|

| 354 |

+

:param model_name: The model name we want to test

|

| 355 |

+

:param word_index: a dictionary that maps each index to its word

|

| 356 |

+

:param seq_length: length of the given sequences

|

| 357 |

+

:param model: the learned model

|

| 358 |

+

:param tokenizer: the tokenizer object

|

| 359 |

+

:param artists: list of artists in the testing set

|

| 360 |

+

:param lyrics: list of lyrics in the testing set

|

| 361 |

+

:param names: list of song names in the testing set

|

| 362 |

+

:param word2vec: a dictionary that maps each word to its embedding vector

|

| 363 |

+

:return: lists of the original and the generated lyrics

|

| 364 |

+

"""

|

| 365 |

+

all_original_lyrics = []

|

| 366 |

+

all_generated_lyrics = []

|

| 367 |

+

start_index_melody = 0

|

| 368 |

+

for artist, name, lyrics in zip(artists, names, lyrics):

|

| 369 |

+

print('-' * 100)

|

| 370 |

+

print(f'Original lyrics for {artist} - {name} are: "{lyrics}"')

|

| 371 |

+

relevant_words_in_song = []

|

| 372 |

+

find_relevant_words(lyrics, relevant_words_in_song, word2vec)

|

| 373 |

+

number_of_seq = len(relevant_words_in_song) - seq_length + 1

|

| 374 |

+

end_index_melody = start_index_melody + number_of_seq

|

| 375 |

+

melodies_song = melodies[start_index_melody:end_index_melody, :, :]

|

| 376 |

+

required_length = len(relevant_words_in_song) - (seq_length * TESTING_SEED_TEXT_PER_SONG)

|

| 377 |

+

for seed_index in range(TESTING_SEED_TEXT_PER_SONG):

|

| 378 |

+

# We select three different words/sentences as seeds for the new song

|

| 379 |

+

starting_index = 0 + seed_index * seq_length

|

| 380 |

+

ending_index = starting_index + seq_length

|

| 381 |

+

song_first_word_in_word2vec = relevant_words_in_song[starting_index:ending_index]

|

| 382 |

+

song_first_indices = []

|

| 383 |

+

for word in song_first_word_in_word2vec:

|

| 384 |

+

word_i = [k for k, v in word_index.items() if v == word][0]

|

| 385 |

+

song_first_indices.append(word_i)

|

| 386 |

+

encoded_test = np.asarray(song_first_indices).reshape((1, seq_length))

|

| 387 |

+

seed_text = ' '.join(song_first_word_in_word2vec)

|

| 388 |

+

generated_text = generate_song_given_sequence(model_name, model, tokenizer, seed_text, encoded_test,

|

| 389 |

+

required_length, artist, name, seed_index, melodies_song)

|

| 390 |

+

gen_list = generated_text.split(' ')

|

| 391 |

+

all_generated_lyrics.append(gen_list.copy()[seq_length:])

|

| 392 |

+

original_starting_index = starting_index + seq_length

|

| 393 |

+

original_ending_index = original_starting_index + required_length

|

| 394 |

+

original_lyrics = relevant_words_in_song[original_starting_index:original_ending_index]

|

| 395 |

+

all_original_lyrics.append(original_lyrics)

|

| 396 |

+

gen_list.insert(seq_length, '\n')

|

| 397 |

+

generated_text = ' '.join(gen_list)

|

| 398 |

+

print(f'Seed text: {generated_text}, required {required_length} words')

|

| 399 |

+

print('-' * 100)

|

| 400 |

+

start_index_melody = end_index_melody + 1

|

| 401 |

+

return all_original_lyrics, all_generated_lyrics

|

| 402 |

+

|

| 403 |

+

|

| 404 |

+

def find_relevant_words(lyrics, selected_words, word2vec):

|

| 405 |

+

"""

|

| 406 |

+

This function collects the words of the lyrics that appear in the pre-defined word2vec, keeping each word once

|

| 407 |

+

:param lyrics: the lyrics of one song as a single string

|

| 408 |

+

:param selected_words: a list that is extended in place with the relevant words

|

| 409 |

+

:param word2vec: a dictionary that maps each word to its embedding vector

|

| 410 |

+

:return: Nothing (selected_words is modified in place)

|

| 411 |

+

"""

|

| 412 |

+

for word in lyrics.split():

|

| 413 |

+

if word in word2vec and word not in selected_words:

|

| 414 |

+

selected_words.append(word)

|

| 415 |

+

|
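`find_relevant_words` keeps the first occurrence of every lyric word that has an embedding, and it checks for duplicates with a list membership test. A minimal sketch of the same filtering that returns a new list and tracks seen words in a set is shown below. `relevant_words` and the toy vocabulary are hypothetical and not part of the repository.

```python
def relevant_words(lyrics, vocabulary):
    """Return the words of `lyrics` found in `vocabulary`, first occurrence only."""
    seen = set()
    selected = []
    for word in lyrics.split():
        if word in vocabulary and word not in seen:
            seen.add(word)
            selected.append(word)
    return selected


# Hypothetical word -> vector mapping; only its keys matter here.
toy_vocabulary = {'love': [0.1], 'me': [0.2], 'do': [0.3]}
words = relevant_words('love love me do you know', toy_vocabulary)
```

The set makes each duplicate check constant time, while the separate list preserves the order in which the words first appear in the song.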

| 416 |

+

|

| 417 |

+

def initialize_seed(seed):

|

| 418 |

+

"""

|

| 419 |

+

Initialize all relevant environments with the seed.

|

| 420 |

+

"""

|

| 421 |

+

os.environ['PYTHONHASHSEED'] = str(seed)

|

| 422 |

+

random.seed(seed)

|

| 423 |

+

np.random.seed(seed)

|

| 424 |

+

|
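The effect of `initialize_seed` can be checked with a small experiment: reseeding with the same value should reproduce the same draws. The sketch below uses only the standard-library generator, and `draw_after_seeding` is a hypothetical helper. Two caveats apply. Setting `PYTHONHASHSEED` through `os.environ` does not change hashing in the interpreter that is already running, because the hash seed is read at startup. Also, if the models rely on a framework with its own random generator, that generator would presumably need to be seeded separately; this is an assumption, since the model classes are not shown here.

```python
import random


def draw_after_seeding(seed, count=5):
    """Seed the stdlib generator, then draw `count` pseudo-random floats."""
    random.seed(seed)
    return [random.random() for _ in range(count)]


first_run = draw_after_seeding(0)
second_run = draw_after_seeding(0)   # same seed: identical sequence
other_seed = draw_after_seeding(1)   # different seed: different sequence
```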

| 425 |

+

|

| 426 |

+

def folder_exists(path):

|

| 427 |

+

"""

|

| 428 |

+

This function checks whether the folder path exists and creates the folder if it does not.

|

| 429 |

+

:param path: folder path

|

| 430 |

+

"""

|

| 431 |

+

if not os.path.exists(path):

|

| 432 |

+

os.mkdir(path)

|

| 433 |

+

|
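`folder_exists` uses `os.mkdir`, which creates a single directory level and raises an error if the parent is missing. A hedged alternative is `os.makedirs` with `exist_ok=True`, which creates missing parents and does nothing when the folder is already there. The helper name `ensure_folder` is hypothetical, and the temporary directory stands in for the project's data path.

```python
import os
import tempfile


def ensure_folder(path):
    """Create `path` (and any missing parents) if it does not exist yet."""
    os.makedirs(path, exist_ok=True)
    return path


with tempfile.TemporaryDirectory() as base:
    nested = ensure_folder(os.path.join(base, 'midi', 'output_files'))
    created = os.path.isdir(nested)
    ensure_folder(nested)  # second call is a no-op rather than an error
```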

| 434 |

+

|

| 435 |

+

if __name__ == '__main__':

|

| 436 |

+

# Environment settings

|

| 437 |

+

IS_COLAB = (os.name == 'posix')

|

| 438 |

+

LOAD_DATA = not (os.name == 'posix')

|

| 439 |

+

path_separator = os.path.sep

|

| 440 |

+

|

| 441 |

+

IS_EXPERIMENT = False

|

| 442 |

+

WRITE_TO_MP3 = False

|

| 443 |

+

if IS_COLAB:

|

| 444 |

+

# the google drive folder we used

|

| 445 |

+

DATA_PATH = os.path.sep + os.path.join('content', 'drive', 'My Drive', 'datasets', 'midi')

|

| 446 |

+

IS_EXPERIMENT = True

|

| 447 |

+

else:

|

| 448 |

+

# locally

|

| 449 |

+

from data_loader import get_word2vec

|

| 450 |

+

from data_loader import get_input_sets

|

| 451 |

+

from data_loader import get_midi_files

|

| 452 |

+

from lstm_lyrics import LSTMLyrics

|

| 453 |

+

from lstm_melodies_lyrics import LSTMLyricsMelodies

|

| 454 |

+

from prepare_data import get_word2vec_matrix

|

| 455 |

+

from prepare_data import create_sets

|

| 456 |

+

from compute_score import calculate_cosine_similarity

|

| 457 |

+

from compute_score import get_polarity_diff

|

| 458 |

+

from compute_score import get_subjectivity_diff

|

| 459 |

+

from compute_score import calculate_cosine_similarity_n_gram

|

| 460 |

+

from extract_melodies_features import *

|

| 461 |

+

|

| 462 |

+

DATA_PATH = os.path.join('.\\', 'midi')

|

| 463 |

+

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

|

| 464 |

+

|

| 465 |

+

# PATHS

|

| 466 |

+

TRAIN_NAME = 'train'

|

| 467 |

+

INPUT_TRAINING_SET = f"lyrics_{TRAIN_NAME}_set.csv"

|

| 468 |

+

TEST_NAME = 'test'

|

| 469 |

+

INPUT_TESTING_SET = f"lyrics_{TEST_NAME}_set.csv"

|

| 470 |

+

OUTPUT_FOLDER = os.path.join(DATA_PATH, 'output_files')

|

| 471 |

+

folder_exists(OUTPUT_FOLDER)

|

| 472 |

+

INPUT_FOLDER = os.path.join(DATA_PATH, 'input_files')

|

| 473 |

+

folder_exists(INPUT_FOLDER)

|

| 474 |

+

PICKLES_FOLDER = os.path.join(DATA_PATH, 'pickles')

|

| 475 |

+

folder_exists(PICKLES_FOLDER)

|

| 476 |

+

WEIGHTS_FOLDER = os.path.join(DATA_PATH, 'weights')

|

| 477 |

+

folder_exists(WEIGHTS_FOLDER)

|

| 478 |

+

WORD2VEC_FILENAME = 'word2vec'

|

| 479 |

+

RESULTS_FILE_NAME = 'results.csv'

|

| 480 |

+

COMB_PATH = os.path.join(OUTPUT_FOLDER, RESULTS_FILE_NAME)

|

| 481 |

+

GLOVE_FILE_NAME = 'glove.6B.300d'

|

| 482 |

+

ENCODING = 'utf-8'

|

| 483 |

+

|

| 484 |

+

LOSS = 'categorical_crossentropy'

|

| 485 |

+

METRICS = ['accuracy']

|

| 486 |

+

VECTOR_SIZE = 300

|

| 487 |

+

VALIDATION_SET_SIZE = 0.2

|

| 488 |

+

TESTING_SEED_TEXT_PER_SONG = 3

|

| 489 |

+

OPTIMIZER = 'adam'

|

| 490 |

+

|

| 491 |

+

if IS_EXPERIMENT: # Experiments settings

|

| 492 |

+

seeds_list = [0]

|

| 493 |

+

learning_rate_list = [0.01]

|

| 494 |

+

batch_size_list = [32, 64]

|

| 495 |

+

epochs_list = [10]

|

| 496 |

+

patience_list = [0]

|

| 497 |

+

min_delta_list = [0.1]

|

| 498 |

+

units_list = [256]

|

| 499 |

+

seq_length_list = [1, 5, 20]

|

| 500 |

+

model_names_list = ['melodies_lyrics', 'lyrics']

|

| 501 |

+

melody_extraction = ['naive']

|

| 502 |