# Training customization

TRL is designed with modularity in mind so that users can efficiently customize the training loop for their needs. Below are some examples of how you can apply and test different techniques.

## Train on multiple GPUs / nodes

The trainers in TRL use 🤗 Accelerate to enable distributed training across multiple GPUs or nodes. To do so, first create an 🤗 Accelerate config file by running

```bash
accelerate config
```

and answering the questions according to your multi-GPU / multi-node setup. You can then launch distributed training by running:

```bash
accelerate launch your_script.py
```

We also provide config files in the [examples folder](https://github.com/huggingface/trl/tree/main/examples/accelerate_configs) that can be used as templates. To use these templates, simply pass the path to the config file when launching a job, e.g.:

```shell
accelerate launch --config_file=examples/accelerate_configs/multi_gpu.yaml --num_processes {NUM_GPUS} path_to_script.py --all_arguments_of_the_script
```

Refer to the [examples page](https://github.com/huggingface/trl/tree/main/examples) for more details.

### Distributed training with DeepSpeed

All of the trainers in TRL can be run on multiple GPUs together with DeepSpeed ZeRO-{1,2,3} for efficient sharding of the optimizer states, gradients, and model weights. To do so, run:

```shell
accelerate launch --config_file=examples/accelerate_configs/deepspeed_zero{1,2,3}.yaml --num_processes {NUM_GPUS} path_to_your_script.py --all_arguments_of_the_script
```

Note that for ZeRO-3, a small tweak is needed to initialize your reward model on the correct device via the `zero3_init_context_manager()` context manager. In particular, this is needed to avoid DeepSpeed hanging after a fixed number of training steps. Here is a snippet of what is involved from the [`sentiment_tuning`](https://github.com/huggingface/trl/blob/main/examples/scripts/ppo.py) example:

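```python
# Assumes `pipeline` was imported with `from transformers import pipeline`,
# and that `ppo_trainer` and `device` are already defined in the script.
ds_plugin = ppo_trainer.accelerator.state.deepspeed_plugin
if ds_plugin is not None and ds_plugin.is_zero3_init_enabled():
    # Disable ZeRO-3 initialization while loading the reward model
    with ds_plugin.zero3_init_context_manager(enable=False):
        sentiment_pipe = pipeline("sentiment-analysis", model="lvwerra/distilbert-imdb", device=device)
else:
    sentiment_pipe = pipeline("sentiment-analysis", model="lvwerra/distilbert-imdb", device=device)
```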

Consult the 🤗 Accelerate [documentation](https://huggingface.co/docs/accelerate/usage_guides/deepspeed) for more information about the DeepSpeed plugin.

## Use different optimizers

By default, the `PPOTrainer` creates a `torch.optim.Adam` optimizer. You can create and define a different optimizer and pass it to `PPOTrainer`:

```python
import torch
from transformers import GPT2Tokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead

# 1. load a pretrained model
model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
ref_model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# 2. define config
ppo_config = {'batch_size': 1, 'learning_rate': 1e-5}
config = PPOConfig(**ppo_config)

# 3. create optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=config.learning_rate)

# 4. initialize trainer
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer, optimizer=optimizer)
```

For memory-efficient fine-tuning, you can also pass the `Adam8bit` optimizer from `bitsandbytes`:

```python
import torch
import bitsandbytes as bnb
from transformers import GPT2Tokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead

# 1. load a pretrained model
model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
ref_model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# 2. define config
ppo_config = {'batch_size': 1, 'learning_rate': 1e-5}
config = PPOConfig(**ppo_config)

# 3. create optimizer
optimizer = bnb.optim.Adam8bit(model.parameters(), lr=config.learning_rate)

# 4. initialize trainer
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer, optimizer=optimizer)
```
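To get a feel for why an 8-bit optimizer saves memory, here is a rough back-of-the-envelope sketch. It assumes that Adam keeps two state values per parameter and that the parameter count is about 124M (roughly GPT-2 small); the helper name `adam_state_bytes` is hypothetical, and the estimate ignores any extra quantization metadata that `bitsandbytes` stores.

```python
def adam_state_bytes(num_params: int, bits_per_state: int) -> int:
    """Bytes needed for Adam's two per-parameter states (first and second moment)."""
    states_per_param = 2
    return num_params * states_per_param * bits_per_state // 8


# Assumption: about 124M parameters, roughly the size of GPT-2 small.
num_params = 124_000_000

full_precision = adam_state_bytes(num_params, bits_per_state=32)
eight_bit = adam_state_bytes(num_params, bits_per_state=8)

print(f"32-bit Adam states: {full_precision / 1e6:.0f} MB")
print(f"8-bit Adam states:  {eight_bit / 1e6:.0f} MB")
```

Under these assumptions the optimizer state shrinks by about a factor of four; model weights, gradients, and activations are not affected.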

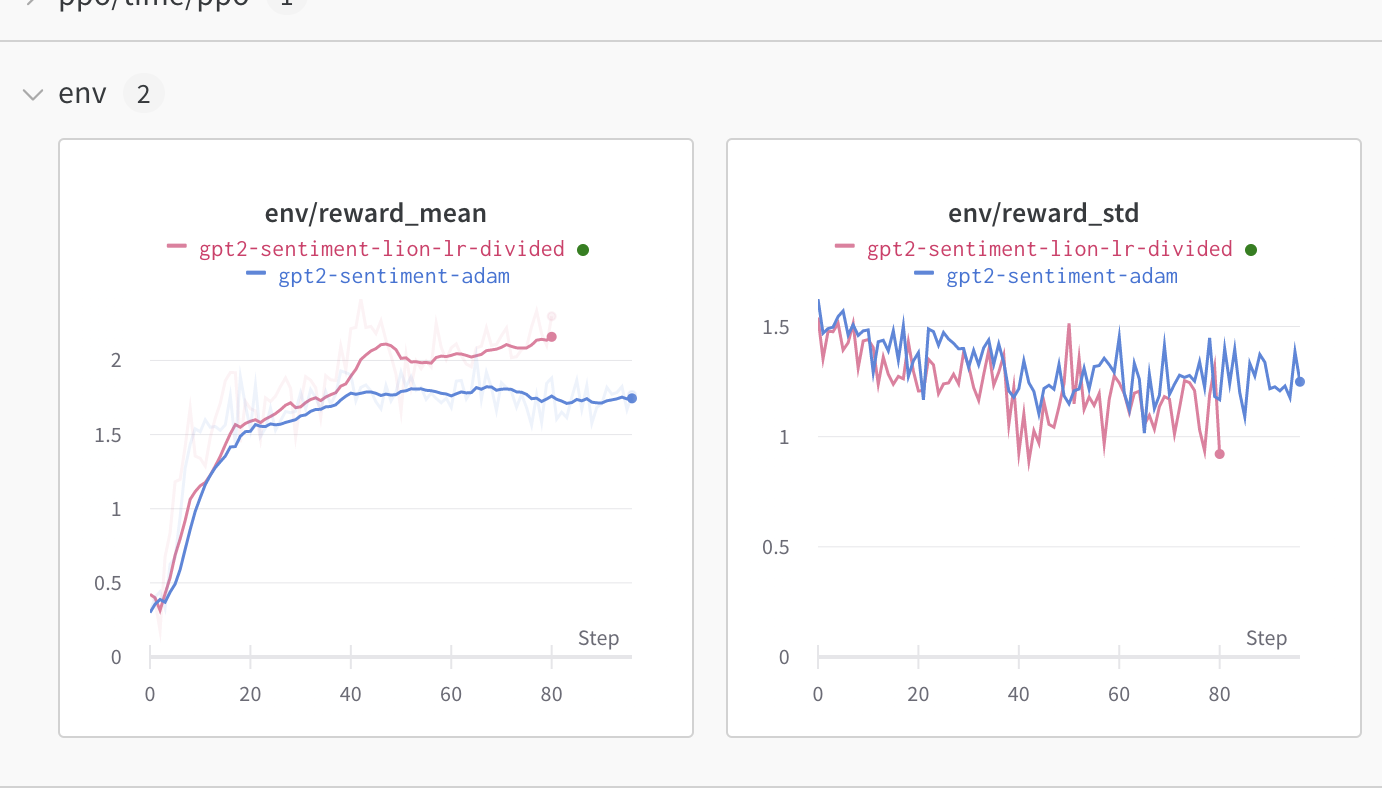

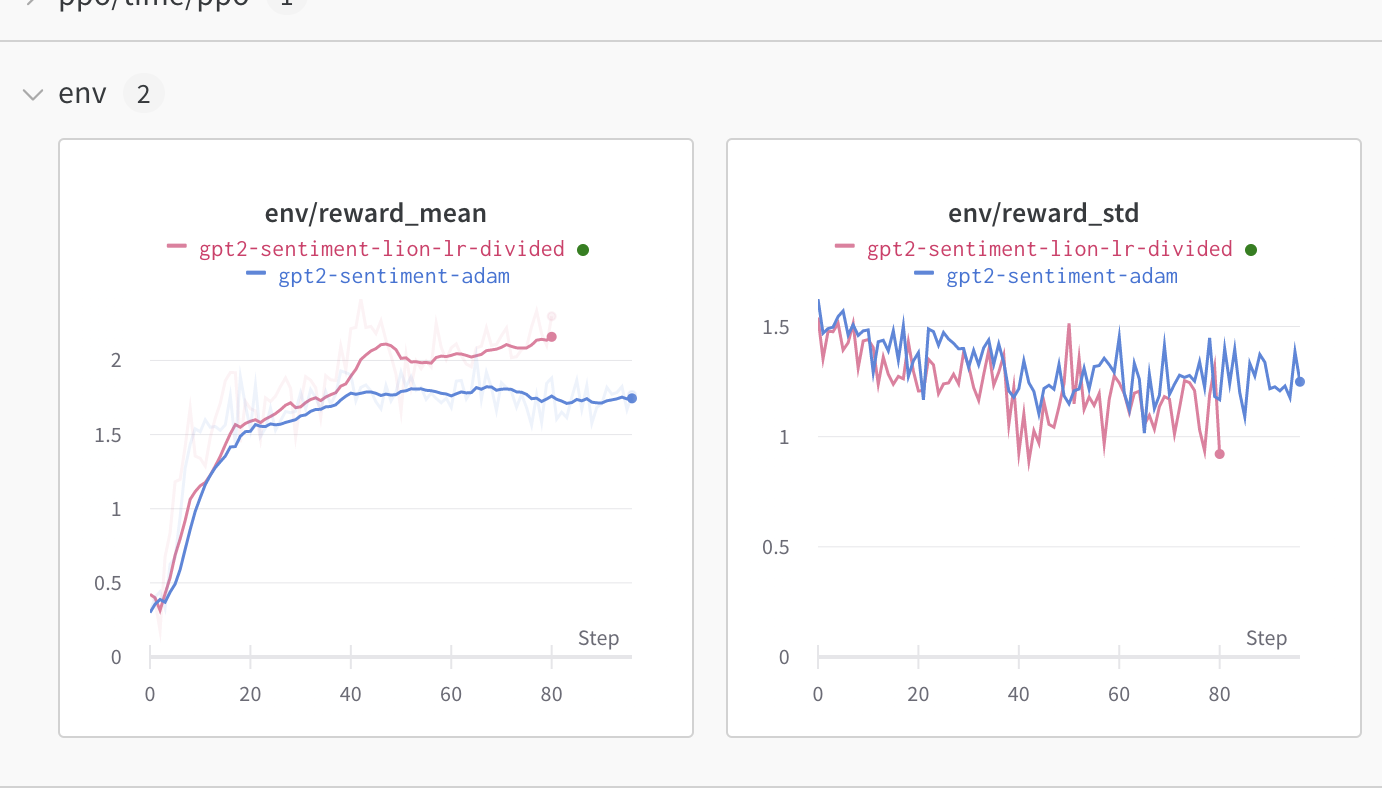

### Use LION optimizer

You can also use the new [LION optimizer from Google](https://huggingface.co/papers/2302.06675). First, take the source code of the optimizer definition from [here](https://github.com/lucidrains/lion-pytorch/blob/main/lion_pytorch/lion_pytorch.py) and copy it so that you can import the optimizer. Make sure to initialize the optimizer with the trainable parameters only, for more memory-efficient training:

```python
# `Lion` is the optimizer class copied from the source linked above
optimizer = Lion(filter(lambda p: p.requires_grad, model.parameters()), lr=config.learning_rate)
...
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer, optimizer=optimizer)
```

We advise you to use the learning rate that you would use for `Adam` divided by 3, as pointed out [here](https://github.com/lucidrains/lion-pytorch#lion---pytorch). We observed an improvement when using this optimizer compared to classic Adam (check the full logs [here](https://wandb.ai/distill-bloom/trl/runs/lj4bheke?workspace=user-younesbelkada)).
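As a small illustration of the rule of thumb above, the following sketch derives a LION learning rate from an Adam one. The helper name `lion_learning_rate` and the default divisor are hypothetical and only encode the advice in this section; treat the result as a starting point to tune, not a guaranteed optimum.

```python
def lion_learning_rate(adam_lr: float, divisor: float = 3.0) -> float:
    """Scale down a learning rate tuned for Adam for use with LION."""
    if divisor <= 0:
        raise ValueError("divisor must be positive")
    return adam_lr / divisor


adam_lr = 3e-5  # assumed value that worked well with Adam
lion_lr = lion_learning_rate(adam_lr)
print(f"Adam lr: {adam_lr:.1e} -> LION lr: {lion_lr:.1e}")
```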

## Add a learning rate scheduler

You can also experiment with learning rate schedulers by passing one to `PPOTrainer` alongside the optimizer:

```python
import torch
from transformers import GPT2Tokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead

# 1. load a pretrained model
model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
ref_model = AutoModelForCausalLMWithValueHead.from_pretrained('gpt2')
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')

# 2. define config
ppo_config = {'batch_size': 1, 'learning_rate': 1e-5}
config = PPOConfig(**ppo_config)

# 3. create optimizer and learning rate scheduler
optimizer = torch.optim.SGD(model.parameters(), lr=config.learning_rate)
lr_scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)

# 4. initialize trainer
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer, optimizer=optimizer, lr_scheduler=lr_scheduler)
```
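To see what an exponential schedule with `gamma=0.9` does to the learning rate, here is a dependency-free sketch of the decay rule (the learning rate is multiplied by `gamma` each time the scheduler is stepped). The function name `exponential_lr_schedule` is hypothetical and this is not PyTorch's implementation, only the formula it follows.

```python
def exponential_lr_schedule(initial_lr: float, gamma: float, num_steps: int) -> list:
    """Learning rate after 0, 1, ..., num_steps - 1 scheduler steps."""
    return [initial_lr * gamma ** step for step in range(num_steps)]


for step, lr in enumerate(exponential_lr_schedule(1e-5, gamma=0.9, num_steps=5)):
    print(f"after {step} scheduler steps: lr = {lr:.3e}")
```

With these values the learning rate drops by 10% per scheduler step, so a smaller `gamma` decays faster and a `gamma` closer to 1 decays more gently.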

## Memory efficient fine-tuning by sharing layers

Another way to make fine-tuning more memory-efficient is to share layers between the reference model and the model you want to train:

```python
import torch
from transformers import AutoTokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead, create_reference_model

# 1. load a pretrained model
model = AutoModelForCausalLMWithValueHead.from_pretrained('bigscience/bloom-560m')
ref_model = create_reference_model(model, num_shared_layers=6)
tokenizer = AutoTokenizer.from_pretrained('bigscience/bloom-560m')

# 2. initialize trainer
ppo_config = {'batch_size': 1}
config = PPOConfig(**ppo_config)
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer)
```
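The idea behind layer sharing can be illustrated with a toy sketch in plain Python. It is not TRL's `create_reference_model`; the `ToyLayer` class and `toy_reference_model` function are hypothetical, and the sketch assumes that shared layers are frozen and stored once, while the remaining layers are copied for the reference model.

```python
import copy
from dataclasses import dataclass


@dataclass
class ToyLayer:
    weights: list
    trainable: bool = True


def toy_reference_model(layers: list, num_shared_layers: int) -> list:
    """Build a reference stack that reuses the first `num_shared_layers` layers."""
    shared = layers[:num_shared_layers]
    for layer in shared:
        # Shared layers are frozen so the two models cannot drift apart there.
        layer.trainable = False
    copied = [copy.deepcopy(layer) for layer in layers[num_shared_layers:]]
    for layer in copied:
        # The reference model itself is never trained.
        layer.trainable = False
    return shared + copied


model_layers = [ToyLayer(weights=[0.0] * 4) for _ in range(8)]
ref_layers = toy_reference_model(model_layers, num_shared_layers=6)

num_shared = sum(a is b for a, b in zip(model_layers, ref_layers))
print(f"{num_shared} of {len(model_layers)} layers are stored only once")
```

In this toy setup only the last two layers exist twice in memory, and only those two remain trainable in the model being fine-tuned.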

## Pass 8-bit reference models

Since `trl` supports all keyword arguments when loading a model from `transformers` using `from_pretrained`, you can also leverage `load_in_8bit` from `transformers` for more memory-efficient fine-tuning.

Read more about 8-bit model loading in `transformers` [here](https://huggingface.co/docs/transformers/perf_infer_gpu_one#bitsandbytes-integration-for-int8-mixedprecision-matrix-decomposition).

```python
# 0. imports
# pip install bitsandbytes
import torch
from transformers import AutoTokenizer
from trl import PPOTrainer, PPOConfig, AutoModelForCausalLMWithValueHead

# 1. load a pretrained model
model = AutoModelForCausalLMWithValueHead.from_pretrained('bigscience/bloom-560m')
ref_model = AutoModelForCausalLMWithValueHead.from_pretrained('bigscience/bloom-560m', device_map="auto", load_in_8bit=True)
tokenizer = AutoTokenizer.from_pretrained('bigscience/bloom-560m')

# 2. initialize trainer
ppo_config = {'batch_size': 1}
config = PPOConfig(**ppo_config)
ppo_trainer = PPOTrainer(config, model, ref_model, tokenizer)
```

## Use the CUDA cache optimizer

When training large models, it helps to manage the CUDA cache by clearing it periodically. To do so, simply pass `optimize_cuda_cache=True` to `PPOConfig`:

```python
config = PPOConfig(..., optimize_cuda_cache=True)
```
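For intuition, the following is a minimal sketch of the kind of cleanup that periodic cache clearing involves. It is not TRL's internal code: the `clear_cuda_cache` context manager is a hypothetical helper, and it assumes that clearing means running Python's garbage collector and then calling `torch.cuda.empty_cache()` when a GPU is available.

```python
import gc
from contextlib import contextmanager

try:
    import torch
except ImportError:  # keep the sketch usable without PyTorch installed
    torch = None


@contextmanager
def clear_cuda_cache(enabled: bool = True):
    """Free unreferenced objects and cached CUDA blocks after the wrapped code runs."""
    try:
        yield
    finally:
        if enabled:
            gc.collect()
            if torch is not None and torch.cuda.is_available():
                torch.cuda.empty_cache()


# Stand-in for a memory-hungry step such as generation or a forward pass.
with clear_cuda_cache():
    activations = [float(i) for i in range(1000)]
    total = sum(activations)
    del activations
```

Clearing the cache does not reduce the memory a step needs at its peak; it mainly returns cached but unused blocks between steps, at the cost of some extra allocation work.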

## Use score scaling/normalization/clipping

As suggested by [Secrets of RLHF in Large Language Models Part I: PPO](https://huggingface.co/papers/2307.04964), we support score (aka reward) scaling/normalization/clipping to improve training stability via `PPOConfig`:

```python
from trl import PPOConfig

ppo_config = {
    "use_score_scaling": True,
    "use_score_norm": True,
    "score_clip": 0.5,
}
config = PPOConfig(**ppo_config)
```

To run `ppo.py`, you can use the following command:

```shell
python examples/scripts/ppo.py --log_with wandb --use_score_scaling --use_score_norm --score_clip 0.5
```
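To make the three options more concrete, here is a simplified, dependency-free sketch of what scaling, normalization, and clipping do to a batch of scores. It is an illustration under stated assumptions, not TRL's implementation: the function `process_scores` is hypothetical, it uses the statistics of the current batch rather than running statistics, and it assumes that normalization only applies when scaling is enabled.

```python
import statistics


def process_scores(scores, use_score_scaling=False, use_score_norm=False,
                   score_clip=None, eps=1e-8):
    """Scale, optionally center, and optionally clip a list of reward scores."""
    processed = list(scores)
    if use_score_scaling:
        # Divide by the standard deviation; eps avoids division by zero.
        std = statistics.pstdev(processed) + eps
        # Assumption: centering is only applied together with scaling.
        mean = statistics.fmean(processed) if use_score_norm else 0.0
        processed = [(s - mean) / std for s in processed]
    if score_clip is not None:
        # Clamp every score into [-score_clip, score_clip].
        processed = [max(-score_clip, min(score_clip, s)) for s in processed]
    return processed


raw_scores = [0.1, 2.0, -3.5, 0.7]
print(process_scores(raw_scores, use_score_scaling=True,
                     use_score_norm=True, score_clip=0.5))
```

Scaling and normalization keep the reward magnitude comparable across batches, while clipping bounds the influence of outlier scores on a single update.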