Librarian Bot: Add language metadata for dataset

This pull request aims to enrich the metadata of your dataset by adding language metadata to the `YAML` block of your dataset card `README.md`.
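The edit itself is small: a top-level `language` list is added to the card's front matter. The following is a minimal sketch of that edit using plain string handling. It assumes the card opens with a `---` line and does not yet declare `language`. `add_language_metadata` is a hypothetical helper, not the bot's code; a real tool would more likely go through a YAML parser or `huggingface_hub.metadata_update(..., create_pr=True)`.

```python
def add_language_metadata(readme: str, languages: list[str]) -> str:
    """Insert a top-level `language:` list into a README's YAML front matter.

    Hypothetical helper: assumes the file starts with a '---' line and that the
    front matter does not already declare a top-level `language` key.
    """
    lines = readme.splitlines(keepends=True)
    if not lines or lines[0].strip() != "---":
        raise ValueError("README has no YAML front matter")
    # Scan the front matter only; unindented keys are top-level keys.
    for line in lines[1:]:
        if line.strip() == "---":
            break
        if line.startswith("language:"):
            raise ValueError("front matter already declares a language key")
    block = ["language:\n"] + [f"- {lang}\n" for lang in languages]
    # Put the new key directly after the opening delimiter.
    return "".join([lines[0]] + block + lines[1:])


card = "---\ndataset_info:\n  features: []\n---\n# DARE\n"
print(add_language_metadata(card, ["en"]))
```

Placing the key right after the opening `---` matches where this PR's diff adds it. Refusing to touch a card that already has a `language` key avoids silently overwriting metadata the author set by hand.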

How did we find this information?

- The librarian-bot downloaded a sample of rows from your dataset using the [dataset-server](https://huggingface.co/docs/datasets-server/) API.

- The librarian-bot used a language detection model to predict the likely language of your dataset. This was done on columns likely to contain text data.

- Predictions for individual rows are aggregated by language, and a filter removes languages that are predicted very infrequently.

- A confidence threshold is then applied to remove languages that are not predicted with high confidence.
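The aggregation and filtering steps above can be sketched as follows. This assumes each sampled row has already been labelled by some language-identification model as a `(language_code, probability)` pair. The function name and both thresholds (`min_fraction`, `min_mean_prob`) are hypothetical placeholders, not the bot's actual values.

```python
from collections import defaultdict


def aggregate_languages(
    predictions: list[tuple[str, float]],
    min_fraction: float = 0.2,
    min_mean_prob: float = 0.8,
) -> dict[str, float]:
    """Aggregate per-row language predictions into mean probabilities.

    `predictions` holds one (language_code, probability) pair per sampled row.
    The threshold defaults are hypothetical, chosen only for illustration.
    """
    if not predictions:
        return {}
    # Group the per-row probabilities by predicted language.
    probs_by_lang: dict[str, list[float]] = defaultdict(list)
    for lang, prob in predictions:
        probs_by_lang[lang].append(prob)

    total = len(predictions)
    kept: dict[str, float] = {}
    for lang, probs in probs_by_lang.items():
        # Frequency filter: drop languages predicted for too few rows.
        if len(probs) / total < min_fraction:
            continue
        mean_prob = sum(probs) / len(probs)
        # Confidence filter: drop languages whose mean probability is low.
        if mean_prob >= min_mean_prob:
            kept[lang] = mean_prob
    return kept


rows = [("en", 1.0), ("en", 1.0), ("en", 1.0), ("fr", 0.55)]
print(aggregate_languages(rows))  # {'en': 1.0}
```

Here `fr` survives the frequency filter (1 of 4 rows) but is dropped by the confidence threshold, so only `en` is reported, together with its mean probability.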

The following languages were detected, with these mean probabilities:

- English (en): 100.00%

If this PR is merged, the language metadata will be added to your dataset card. This will allow users to filter datasets by language on the [Hub](https://huggingface.co/datasets).

If the language metadata is incorrect, please feel free to close this PR.

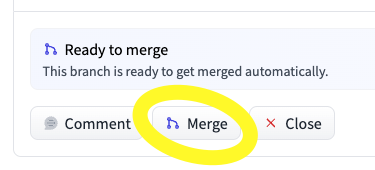

To merge this PR, use the merge button below it.

This PR comes courtesy of [Librarian Bot](https://huggingface.co/librarian-bots). If you have feedback or questions, or need assistance, please don't hesitate to reach out to @davanstrien.

Changes to `README.md` (`@@ -1,46 +1,48 @@`). After the change, the card header reads:

```yaml
---
language:
- en
dataset_info:
  features:
  - name: id
    dtype: string
  - name: instance_id
    dtype: int64
  - name: question
    dtype: string
  - name: answer
    list:
      dtype: string
  - name: A
    dtype: string
  - name: B
    dtype: string
  - name: C
    dtype: string
  - name: D
    dtype: string
  - name: category
    dtype: string
  - name: img
    dtype: image
configs:
- config_name: 1_correct
  data_files:
  - split: validation
    path: 1_correct/validation/0000.parquet
  - split: test
    path: 1_correct/test/0000.parquet
- config_name: 1_correct_var
  data_files:
  - split: validation
    path: 1_correct_var/validation/0000.parquet
  - split: test
    path: 1_correct_var/test/0000.parquet
- config_name: n_correct
  data_files:
  - split: validation
    path: n_correct/validation/0000.parquet
  - split: test
    path: n_correct/test/0000.parquet
---
# DARE
```
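The `configs` section of the card header maps each configuration (`1_correct`, `1_correct_var`, `n_correct`) and split (`validation`, `test`) to a single Parquet file. The sketch below mirrors that mapping as a plain Python dict to show how a config/split pair resolves to a file. `CONFIGS` and `data_file_for` are hypothetical names used only for illustration. With the `datasets` library installed, the usual entry point is `load_dataset(repo_id, "1_correct", split="test")`, where `repo_id` is this dataset's Hub ID (not shown on this page).

```python
# Hypothetical mirror of the `configs` section of the dataset card.
CONFIGS: dict[str, dict[str, str]] = {
    "1_correct": {
        "validation": "1_correct/validation/0000.parquet",
        "test": "1_correct/test/0000.parquet",
    },
    "1_correct_var": {
        "validation": "1_correct_var/validation/0000.parquet",
        "test": "1_correct_var/test/0000.parquet",
    },
    "n_correct": {
        "validation": "n_correct/validation/0000.parquet",
        "test": "n_correct/test/0000.parquet",
    },
}


def data_file_for(config_name: str, split: str) -> str:
    """Return the Parquet path declared for a config/split pair (hypothetical helper)."""
    try:
        return CONFIGS[config_name][split]
    except KeyError:
        raise KeyError(f"no data file declared for {config_name!r}/{split!r}") from None


print(data_file_for("n_correct", "test"))
```

Because no `train` split is declared for any configuration, asking for one raises a `KeyError` instead of silently falling back to another split.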