---

license: mit

---

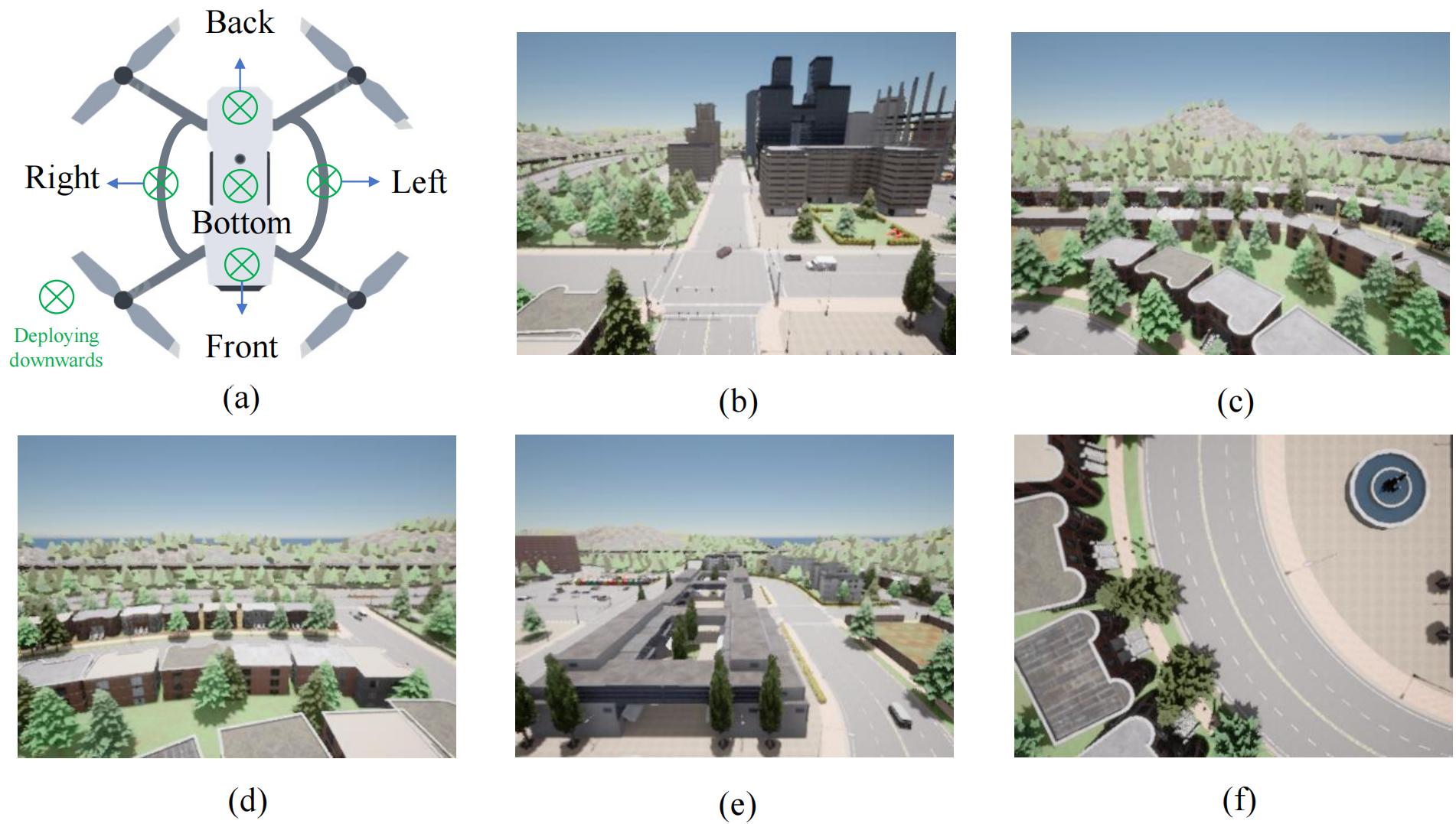

# Multi-View UAV Dataset

A comprehensive multi-view UAV dataset for visual navigation research in GPS-denied urban environments, collected using the CARLA simulator.

[License: MIT](https://opensource.org/licenses/MIT)

## Dataset Overview

This dataset supports research on visual navigation for unmanned aerial vehicles (UAVs) in GPS-denied urban environments. It provides multi-directional camera views collected from simulated UAV flights across eight urban maps, which makes it suitable for developing localization and navigation algorithms that rely on visual cues rather than GPS signals.

## Key Features

- **Multi-View Perspective**: 5 cameras (Front, Back, Left, Right, Down) providing panoramic visual information

- **Multiple Data Types**: RGB images, semantic segmentation, and depth maps for comprehensive scene understanding

- **Precise Labels**: Accurate position coordinates and rotation angles for each frame

- **Diverse Environments**: 8 different urban maps with varying architectural styles and layouts

- **Large Scale**: 357,690 multi-view frames enabling robust algorithm training and evaluation

## Dataset Structure

```
Multi-View-UAV-Dataset/town{XX}_YYYYMMDD_HHMMSS/
├── calibration/
│   └── camera_calibration.json  # Parameters for all 5 UAV onboard cameras
├── depth/                       # Depth images from all cameras
│   ├── Back/
│   │   ├── NNNNNN.npy           # Depth data in NumPy format
│   │   ├── NNNNNN.png           # Visualization of depth data
│   │   └── ...
│   ├── Down/
│   ├── Front/
│   ├── Left/
│   └── Right/
├── metadata/                    # UAV position, rotation angles and timestamps
│   ├── NNNNNN.json
│   ├── NNNNNN.json
│   └── ...
├── rgb/                         # RGB images from all cameras (PNG format)
│   ├── Back/
│   ├── Down/
│   ├── Front/
│   ├── Left/
│   └── Right/
└── semantic/                    # Semantic segmentation images (PNG format)
    ├── Back/
    ├── Down/
    ├── Front/
    ├── Left/
    └── Right/
```
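The layout above maps directly onto file paths. As a minimal sketch (the helper name `frame_paths` and the `CAMERAS` tuple are illustrative and not part of any official tooling), the files that belong to one frame of one sequence can be collected like this:

```python
from pathlib import Path

# Camera folder names as they appear in the directory tree
CAMERAS = ("Front", "Back", "Left", "Right", "Down")


def frame_paths(sequence_dir, frame_id):
    """Return the expected file paths for one frame (hypothetical helper).

    Paths are only constructed here, not checked for existence.
    """
    root = Path(sequence_dir)
    paths = {
        "metadata": root / "metadata" / f"{frame_id}.json",
        "calibration": root / "calibration" / "camera_calibration.json",
    }
    for cam in CAMERAS:
        paths[cam] = {
            "rgb": root / "rgb" / cam / f"{frame_id}.png",
            "semantic": root / "semantic" / cam / f"{frame_id}.png",
            "depth_npy": root / "depth" / cam / f"{frame_id}.npy",
            "depth_png": root / "depth" / cam / f"{frame_id}.png",
        }
    return paths


paths = frame_paths("path/to/dataset/town05_20241218_092919", "000000")
print(paths["Down"]["depth_npy"])
```

Calling `Path.exists()` on each returned entry is a quick way to spot incomplete frames after a partial download.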

## Data Format Details

### Image Data

- **RGB Images**: 400×300 pixel resolution in PNG format

- **Semantic Segmentation**: Class-labeled pixels in PNG format

- **Depth Maps**:

  - PNG format for visualization

  - NumPy (.npy) format for precise depth values
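Because the `.npy` arrays hold the raw depth values, a custom visualization can be derived from them instead of relying on the provided PNGs. The sketch below rests on several assumptions: the helper `depth_to_uint8` is hypothetical, the depth unit and exact array shape are not documented in this card (a 300-row by 400-column array is assumed for the demo), and the percentile clip is just one way to keep a few very distant pixels from compressing the rest of the range.

```python
import numpy as np


def depth_to_uint8(depth, clip_percentile=95.0):
    """Scale a raw depth array to 0..255 for display (hypothetical helper).

    Values above the given percentile are clipped so that a few very
    distant pixels do not dominate the intensity range.
    """
    depth = np.asarray(depth, dtype=np.float64)
    near = float(depth.min())
    far = float(np.percentile(depth, clip_percentile))
    if far <= near:
        # Constant depth: there is no range to stretch.
        return np.zeros(depth.shape, dtype=np.uint8)
    scaled = (np.clip(depth, near, far) - near) / (far - near)
    return np.round(scaled * 255.0).astype(np.uint8)


# Synthetic stand-in for np.load("<sequence>/depth/Front/NNNNNN.npy");
# the (300, 400) shape is an assumption based on the RGB resolution.
demo = np.linspace(1.0, 1000.0, 300 * 400).reshape(300, 400)
image = depth_to_uint8(demo)
print(image.shape, image.dtype)
```

The returned array can be passed to `matplotlib.pyplot.imshow` or `PIL.Image.fromarray` directly.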

### Metadata

Each frame includes a corresponding JSON file containing:

- Precise UAV position coordinates (x, y, z)

- Rotation angles (roll, pitch, yaw)

- Timestamp information
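To work with a whole trajectory rather than a single frame, the per-frame JSON files can be read into a list of poses. This is a sketch under stated assumptions: the key names `position` (`x`, `y`, `z`) and `rotation` (`roll`, `pitch`, `yaw`) are the ones used in the usage example of this card, the timestamp key is not documented here and is therefore left out, and `Pose`, `load_poses`, and `path_length` are illustrative names. Units follow whatever the metadata files use.

```python
import json
import math
from pathlib import Path
from typing import NamedTuple


class Pose(NamedTuple):
    frame_id: str
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float


def load_poses(metadata_dir):
    """Read every NNNNNN.json in a metadata folder, sorted by frame id."""
    poses = []
    for path in sorted(Path(metadata_dir).glob("*.json")):
        with open(path, "r") as f:
            meta = json.load(f)
        pos, rot = meta["position"], meta["rotation"]
        poses.append(Pose(path.stem, pos["x"], pos["y"], pos["z"],
                          rot["roll"], rot["pitch"], rot["yaw"]))
    return poses


def path_length(poses):
    """Sum of straight-line distances between consecutive positions."""
    return sum(math.dist(a[1:4], b[1:4]) for a, b in zip(poses, poses[1:]))
```

For example, `path_length(load_poses("<sequence>/metadata"))` gives the total distance flown in one sequence, in the same unit as the stored coordinates.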

### Camera Calibration

- Single JSON file with intrinsic and extrinsic parameters for all five cameras
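The schema of `camera_calibration.json` is not described in this card, so the values stored in that file should always take precedence. As a sanity check, the intrinsic matrix of an ideal pinhole camera can be computed from the image size and the horizontal field of view. In the sketch below, `pinhole_intrinsics` is a hypothetical helper, 400×300 is the documented RGB resolution, and the 90° field of view is an assumption rather than a documented value.

```python
import math


def pinhole_intrinsics(width, height, fov_deg):
    """3x3 intrinsic matrix of an ideal pinhole camera with square pixels.

    fov_deg is the horizontal field of view in degrees.
    """
    focal = width / (2.0 * math.tan(math.radians(fov_deg) / 2.0))
    cx, cy = width / 2.0, height / 2.0
    return [[focal, 0.0, cx],
            [0.0, focal, cy],
            [0.0, 0.0, 1.0]]


# 400x300 is the documented RGB resolution; 90 degrees is an ASSUMED field of view
K = pinhole_intrinsics(400, 300, fov_deg=90.0)
for row in K:
    print(row)
```

If the matrix in the calibration file differs noticeably from this estimate, the cameras were probably configured with a different field of view.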

## Collection Methodology

The dataset was collected using:

- **Simulator**: CARLA open urban driving simulator

- **Flight Pattern**: Constant-height UAV flight following road-aligned waypoints with random direction changes

- **Hardware**: 4×RTX 5000 Ada GPUs for simulation and data collection

- **Environments**: 8 urban maps (Town01, Town02, Town03, Town04, Town05, Town06, Town07, Town10HD)
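Since each sequence folder is named `town{XX}_YYYYMMDD_HHMMSS`, recordings can be grouped by map from the folder names alone. The following is a sketch, not official tooling: `parse_sequence_name` and `group_by_town` are illustrative names, and the pattern assumes that a Town10HD folder would be spelled with trailing letters after the digits (for example `town10HD_...`), which this card does not confirm.

```python
import re
from collections import defaultdict
from datetime import datetime

# Pattern for "town{XX}_YYYYMMDD_HHMMSS"; allowing letters after the digits
# (e.g. "10HD") is an assumption about how Town10HD folders are named.
SEQ_RE = re.compile(r"^town(\d+[A-Za-z]*)_(\d{8})_(\d{6})$")


def parse_sequence_name(name):
    """Split a sequence folder name into (town, timestamp from the name)."""
    match = SEQ_RE.match(name)
    if match is None:
        raise ValueError(f"unexpected sequence folder name: {name!r}")
    town, date, time = match.groups()
    return f"Town{town}", datetime.strptime(date + time, "%Y%m%d%H%M%S")


def group_by_town(names):
    """Map each town to its sequence folder names, in sorted order."""
    groups = defaultdict(list)
    for name in sorted(names):
        town, _ = parse_sequence_name(name)
        groups[town].append(name)
    return dict(groups)


town, stamp = parse_sequence_name("town05_20241218_092919")
print(town, stamp.isoformat())
```

Grouping by town makes it straightforward to keep all recordings of one map together when partitioning the data.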

## Visual Examples

### RGB Camera Views

### Semantic Segmentation Views

### Depth Map Views

## Research Applications

This dataset enables research in multiple areas:

- Vision-based UAV localization in GPS-denied environments

- Multi-view feature extraction and fusion

- Communication-efficient UAV-edge collaboration

- Task-oriented information bottleneck approaches

- Deep learning for aerial navigation

The dataset was specifically designed for the research presented in [Task-Oriented Communications for Visual Navigation with Edge-Aerial Collaboration in Low Altitude Economy](https://www.researchgate.net/publication/391159895_Task-Oriented_Communications_for_Visual_Navigation_with_Edge-Aerial_Collaboration_in_Low_Altitude_Economy).

## Usage Example

```python
# Basic example to load and visualize data
import os
import json

import numpy as np
import matplotlib.pyplot as plt
from PIL import Image

# Set paths
dataset_path = "path/to/dataset/town05_20241218_092919/town05_20241218_092919"
frame_id = "000000"

# Load metadata
with open(os.path.join(dataset_path, "metadata", f"{frame_id}.json"), "r") as f:
    metadata = json.load(f)

# Print UAV position and rotation
print(f"UAV Position: X={metadata['position']['x']}, Y={metadata['position']['y']}, Z={metadata['position']['z']}")
print(f"UAV Rotation: Roll={metadata['rotation']['roll']}, Pitch={metadata['rotation']['pitch']}, Yaw={metadata['rotation']['yaw']}")

# Load RGB image (Front camera)
rgb_path = os.path.join(dataset_path, "rgb", "Front", f"{frame_id}.png")
rgb_image = Image.open(rgb_path)

# Load semantic segmentation image (Front camera)
semantic_path = os.path.join(dataset_path, "semantic", "Front", f"{frame_id}.png")
semantic_image = Image.open(semantic_path)

# Load raw depth data (Front camera)
depth_path = os.path.join(dataset_path, "depth", "Front", f"{frame_id}.npy")
depth_data = np.load(depth_path)

# Display the three modalities side by side
fig, axes = plt.subplots(1, 3, figsize=(15, 5))
axes[0].imshow(rgb_image)
axes[0].set_title("RGB Image")
axes[1].imshow(semantic_image)
axes[1].set_title("Semantic Segmentation")
axes[2].imshow(depth_data, cmap='plasma')
axes[2].set_title("Depth Map")
plt.tight_layout()
plt.show()
```

## Citation

If you use this dataset in your research, please cite our paper:

```bibtex
@misc{fang2025taskorientedcommunicationsvisualnavigation,
      title={Task-Oriented Communications for Visual Navigation with Edge-Aerial Collaboration in Low Altitude Economy},
      author={Zhengru Fang and Zhenghao Liu and Jingjing Wang and Senkang Hu and Yu Guo and Yiqin Deng and Yuguang Fang},
      year={2025},
      eprint={2504.18317},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2504.18317},
}
```

## License

This dataset is released under the MIT License.

## Acknowledgments

This work was supported in part by the Hong Kong SAR Government under the Global STEM Professorship and Research Talent Hub, the Hong Kong Jockey Club under the Hong Kong JC STEM Lab of Smart City (Ref.: 2023-0108), the National Natural Science Foundation of China under Grant No. 62222101 and No. U24A20213, the Beijing Natural Science Foundation under Grant No. L232043 and No. L222039, the Natural Science Foundation of Zhejiang Province under Grant No. LMS25F010007, and the Hong Kong Innovation and Technology Commission under InnoHK Project CIMDA.

## Contact

For questions, issues, or collaboration opportunities, please contact:

- Email: zhefang4-c [AT] my [DOT] cityu [DOT] edu [DOT] hk

- GitHub: [TOC-Edge-Aerial](https://github.com/fangzr/TOC-Edge-Aerial)