---
license: mit
language:
- en
base_model:
- microsoft/phi-4
tags:
- not-for-all-audiences
---

Phi-Line_14B

---

Unlike its lobotomized [Phi-lthy](https://huggingface.co/SicariusSicariiStuff/Phi-lthy4) sister, this one **kept all the brain cells**.

## Wow! It must be so much better!

This makes perfect sense, of course! But... it's **not** how this AI **voodoo works**.

Is it **smarter?** Yes, it's **much smarter** (more brain cells, no lobotomy), but it's neither as creative nor as outright **unhinged**. The **brain-damaged** sister was pretty much like the stereotypical **schizo artist on psychedelics**. I swear, these blobs of tensors show some uncanny similarities to human truisms.

Anyway, here's what's interesting:

- I used the **exact** same data I've used for [Phi-lthy](https://huggingface.co/SicariusSicariiStuff/Phi-lthy4)

- I used the **exact** same training parameters

- Results are **completely different**

What gives? And the weirdest part? This one is **less** stable in RP than the lobotomized model! Talk about counterintuitive... After 1-2 swipes it **will stabilize**, and is **very pleasant to play with**, in my opinion, but it's still... **weird**. It shouldn't be like that, yet it is 🤷🏼‍♂️

To conclude, this model is **not** an upgrade to [Phi-lthy](https://huggingface.co/SicariusSicariiStuff/Phi-lthy4), it's not **better** and not **worse**, it's simply different.

What's similar? It's quite low on **SLOP**, but [Phi-lthy](https://huggingface.co/SicariusSicariiStuff/Phi-lthy4) is even lower. **This model**, however, has not ended up sacrificing smarts and assistant capabilities for its creativity and relative sloplessness.

---

# Included Character cards in this repo:

- [Vesper](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B/resolve/main/Character_Cards/Vesper.png) (Schizo **Space Adventure**)

- [Nina_Nakamura](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B/resolve/main/Character_Cards/Nina_Nakamura.png) (The **sweetest** dorky co-worker)

- [Employee#11](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B/resolve/main/Character_Cards/Employee%2311.png) (**Schizo workplace** with a **schizo worker**)

---

### TL;DR

- **Excellent Roleplay** with more brains. (Who would have thought Phi-4 models would be good at this? So weird...)

- **Medium length** response (1-4 paragraphs, usually 2-3).

- **Excellent assistant** that follows instructions well enough, and keeps good formatting.

- Strong **Creative writing** abilities. Will obey requests regarding formatting (markdown headlines for paragraphs, etc).

- Writes and roleplays **quite uniquely**, probably because of the lack of RP/writing slop in the **pretrain**. This is just my guesstimate.

- **LOW refusals** - Total freedom in RP, can do things other RP models won't, and I'll leave it at that. Low refusals in assistant tasks as well.

- **VERY good** at following the **character card**. Math brain is used for gooner tech, as it should be.

### Important: Make sure to use the correct settings!

[Assistant settings](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B#recommended-settings-for-assistant-mode)

[Roleplay settings](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B#recommended-settings-for-roleplay-mode)

---

## Available quantizations:

- Original: [FP16](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B)

- GGUF & iMatrix: [GGUF](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B_GGUF) | [iMatrix](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B_iMatrix)

- EXL2: [3.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-3.0bpw) | [3.5 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-3.5bpw) | [4.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-4.0bpw) | [5.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-5.0bpw) | [6.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-6.0bpw) | [7.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-7.0bpw) | [8.0 bpw](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B-8.0bpw)

- GPTQ: [4-Bit-g32](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B_GPTQ)

- Specialized: [FP8](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B_FP8)

- Mobile (ARM): [Q4_0](https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B_ARM)

---

## Model Details

- Intended use: **Role-Play**, **Creative Writing**, **General Tasks**.

- Censorship level: Medium

- **5 / 10** (10 completely uncensored)

## UGI score:

---

## Recommended settings for assistant mode

---

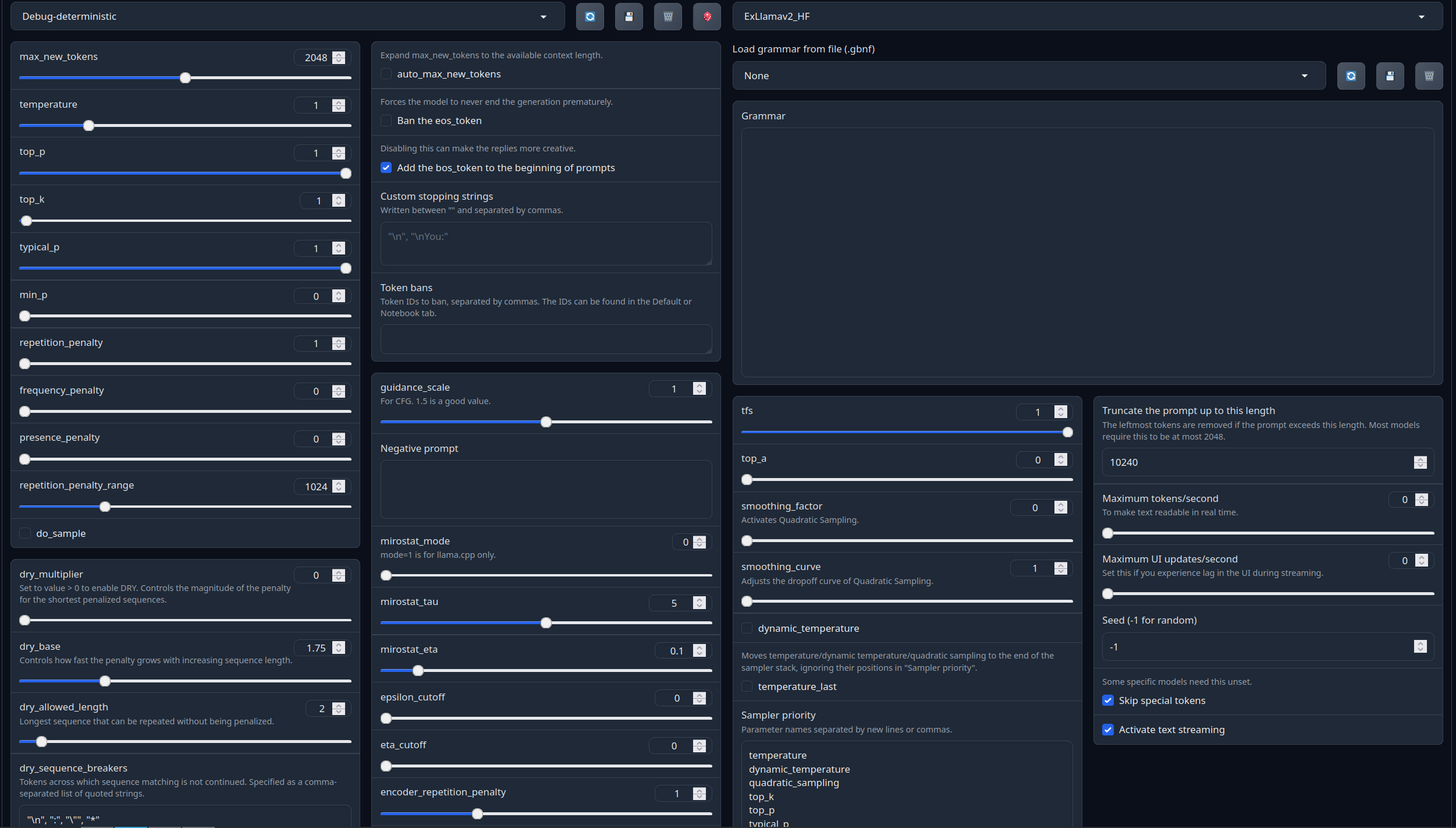

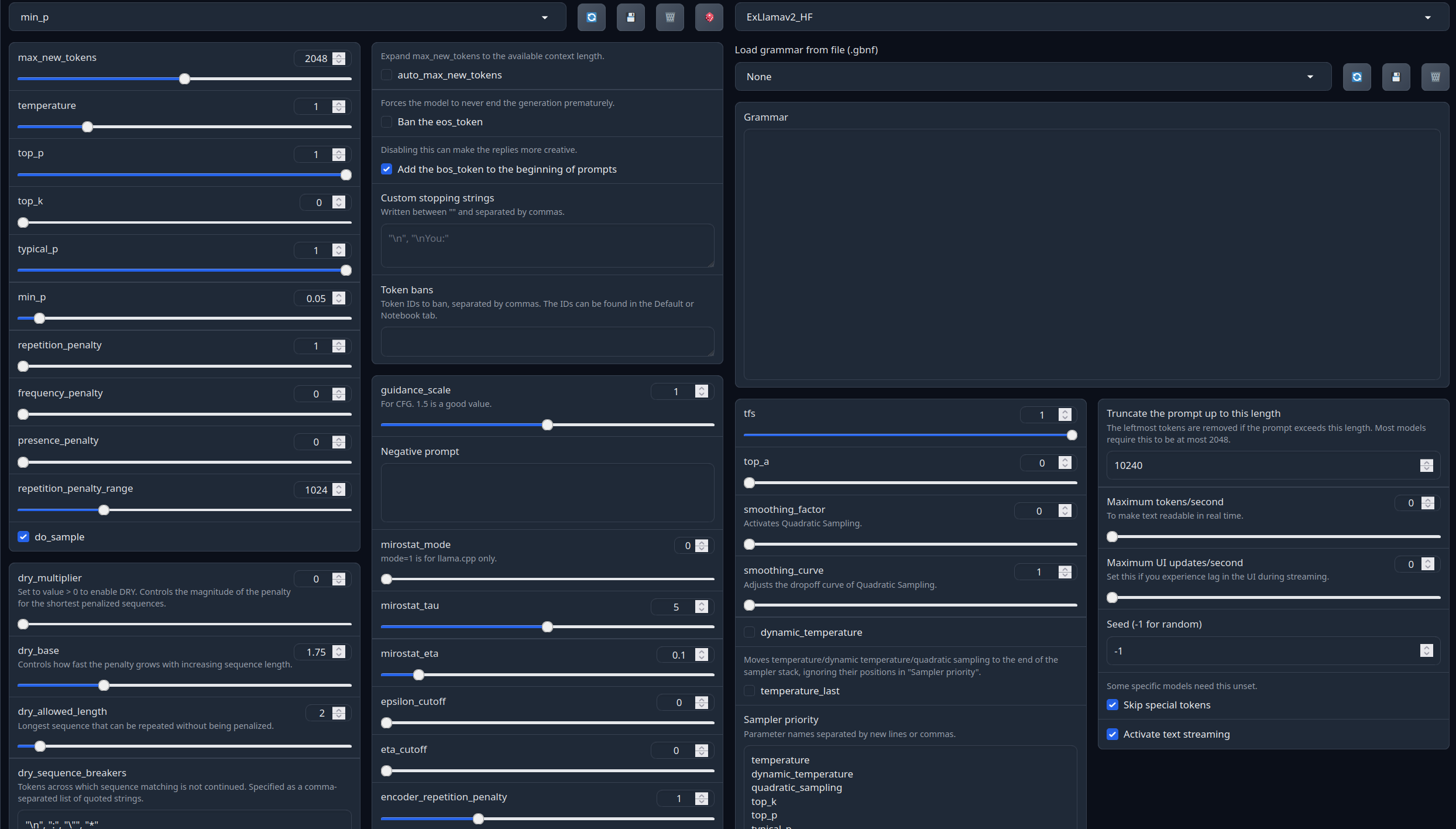

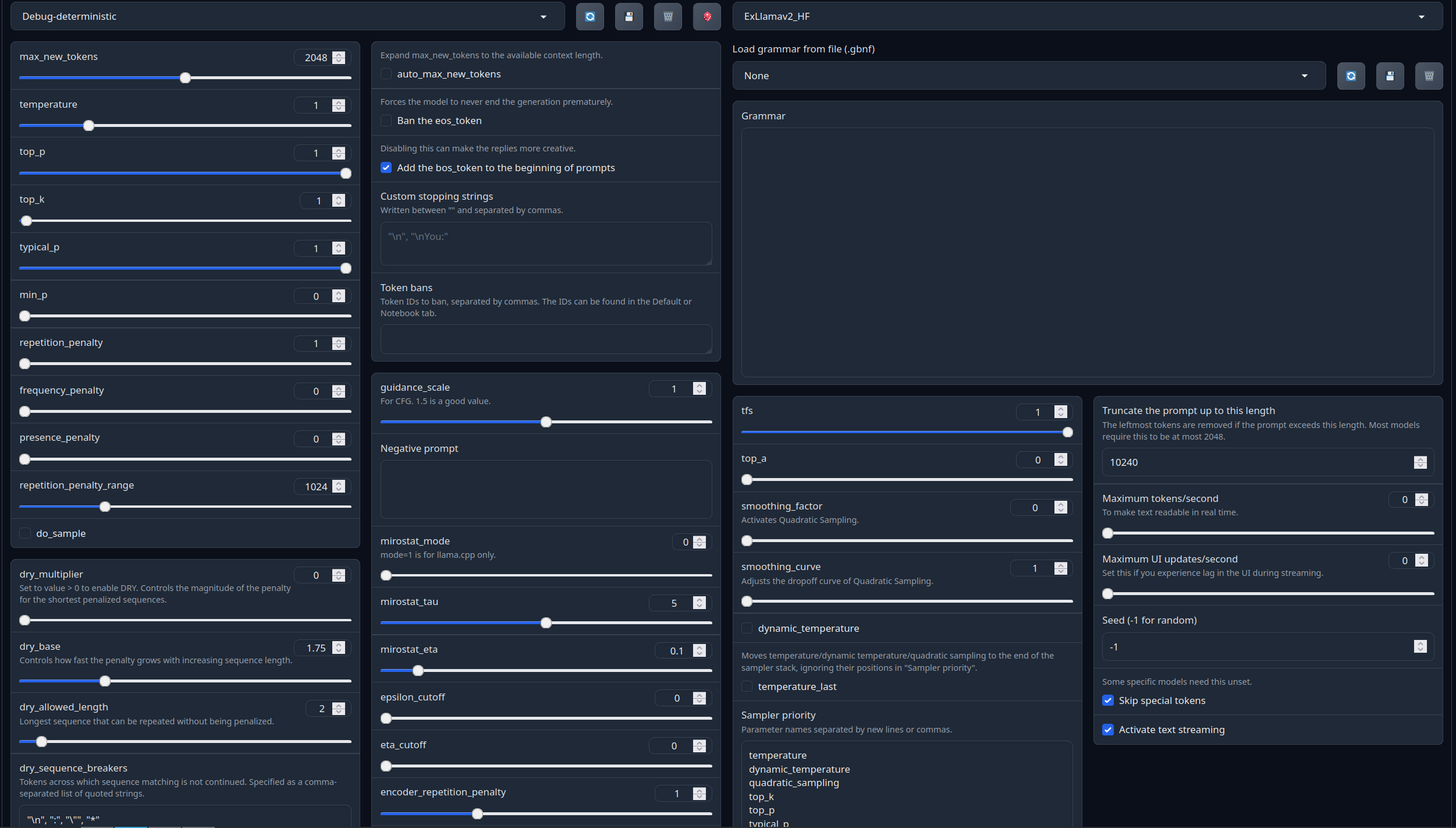

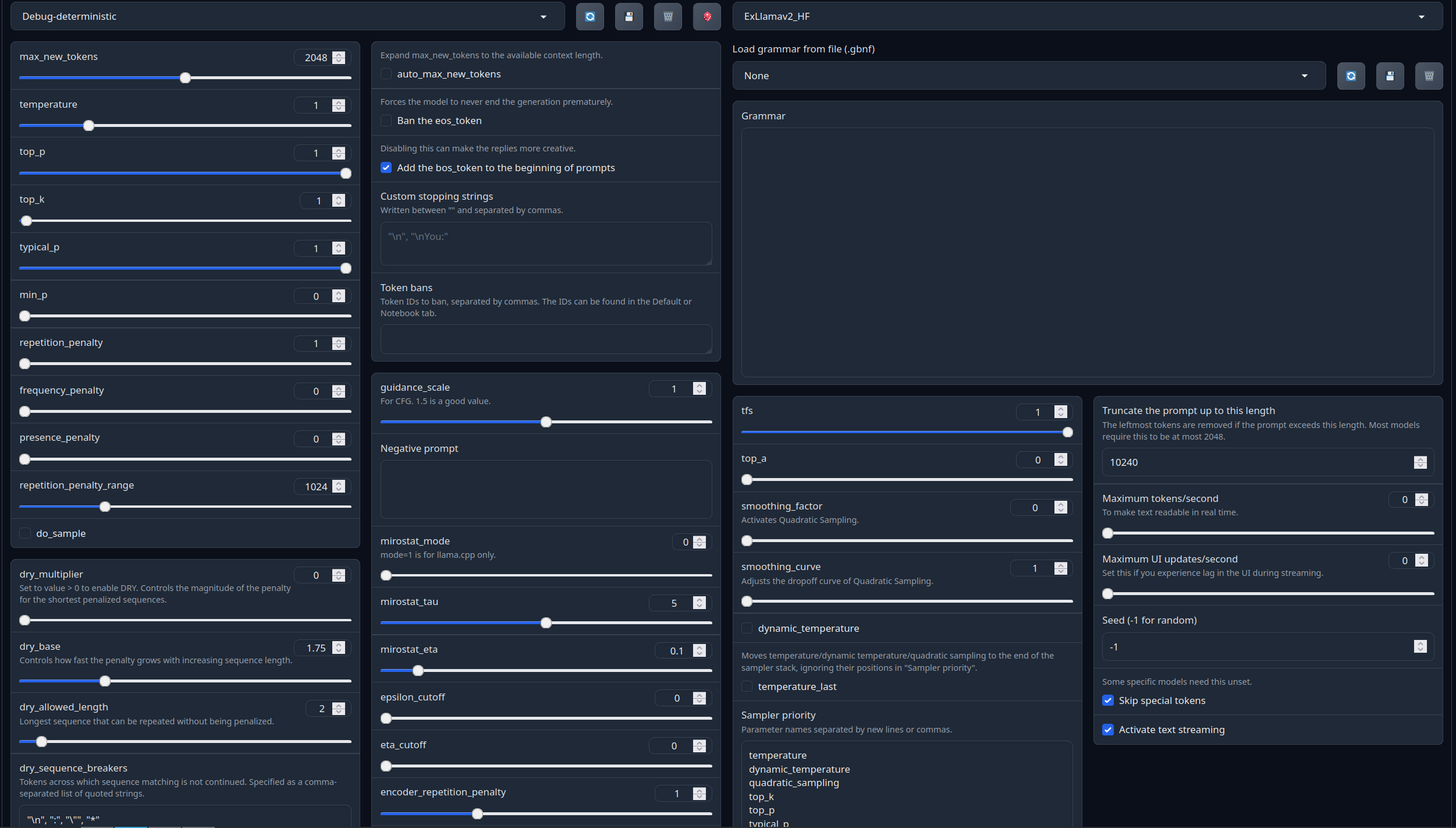

Full generation settings: Debug Deterministic.

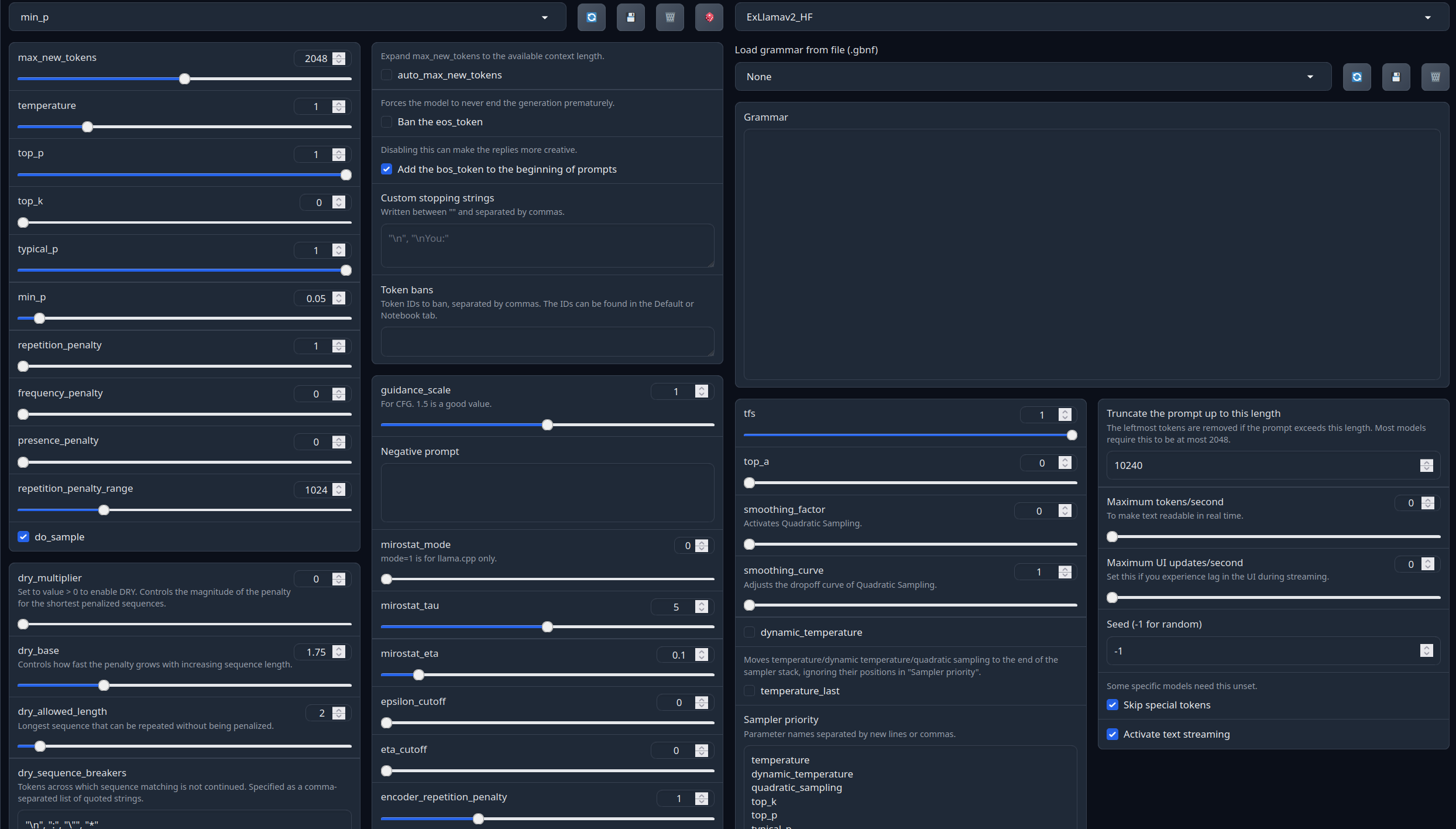

Full generation settings: min_p.

---

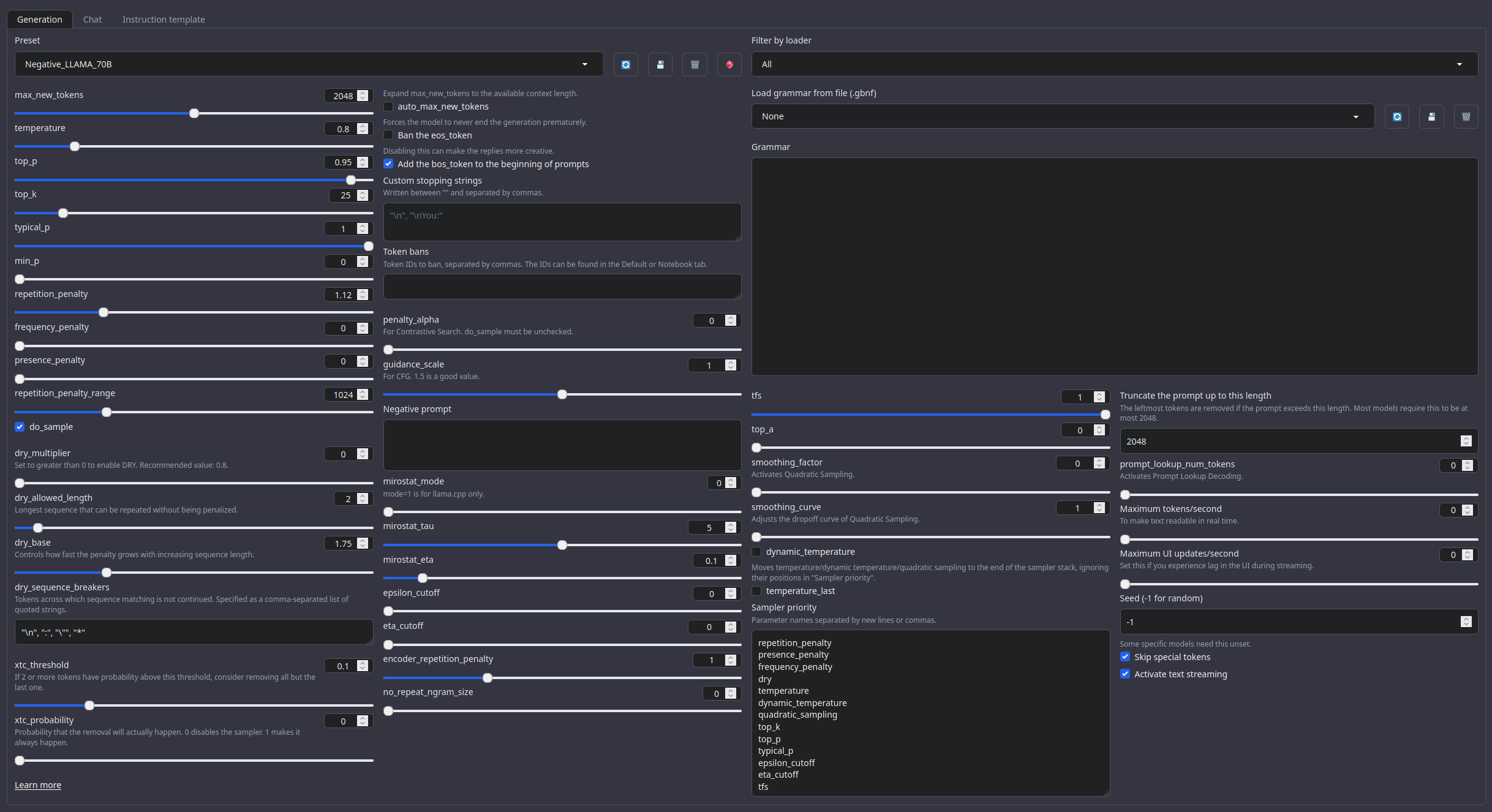

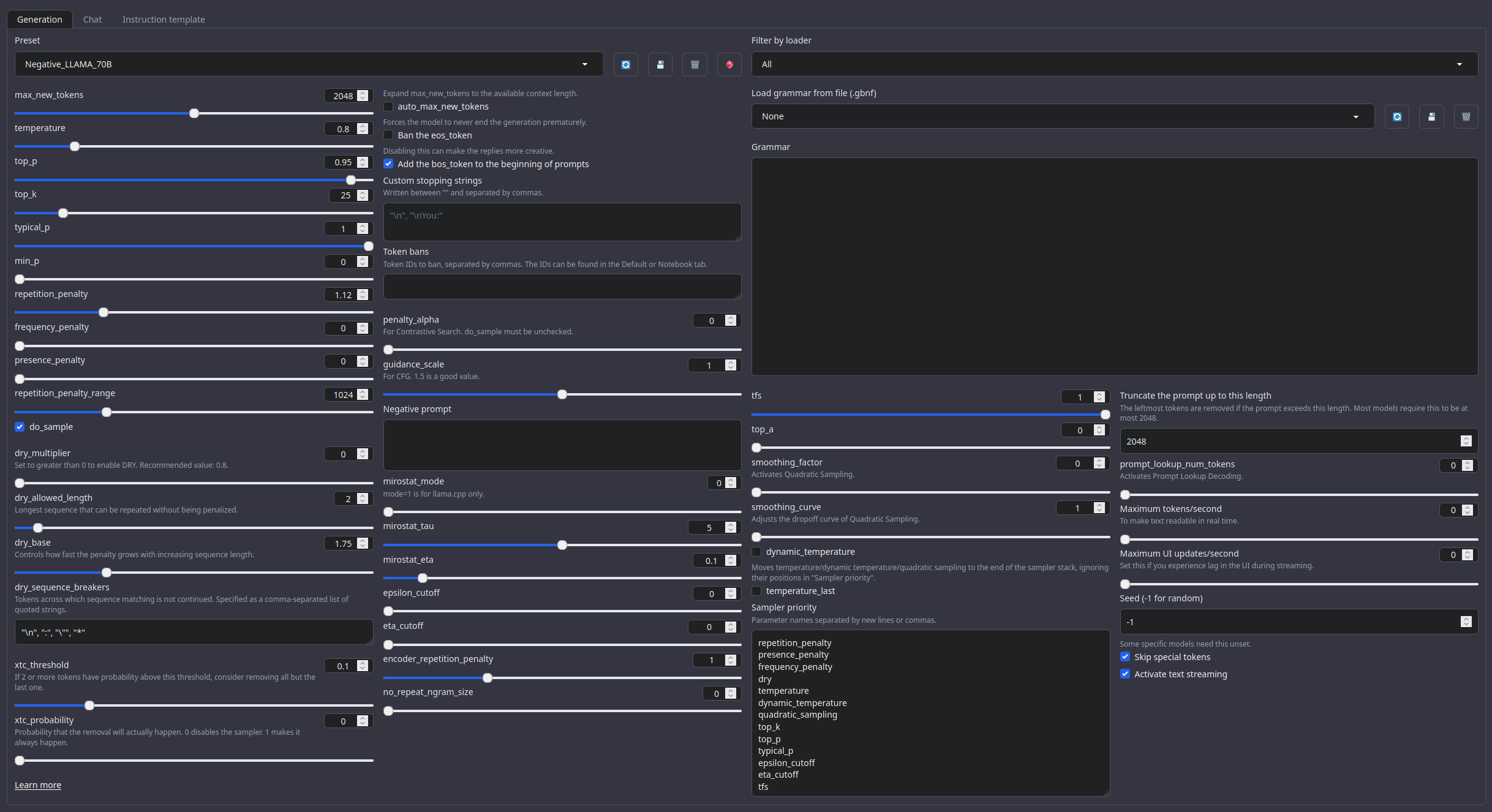

## Recommended settings for Roleplay mode

Roleplay settings:

A good repetition_penalty range is 1.12 to 1.15, but feel free to experiment.

With these settings, each output message should be neatly displayed in 1-3 paragraphs, with 1-2 being the most common. A single paragraph will be output as a response to a simple message ("What was your name again?").

min_p for RP works too, but it is more likely to put everything under one large paragraph instead of a neatly formatted short one. Feel free to switch between the two.

(Open the image in a new window to better see the full details)

```
temperature: 0.8
top_p: 0.95
top_k: 25
typical_p: 1
min_p: 0
repetition_penalty: 1.12
repetition_penalty_range: 1024
```

Roleplay format: Classic Internet RP

```
*action* speech *narration*
```

- **min_p** will bias towards a **single big paragraph**.

- The recommended RP settings will bias towards **1-3 small paragraphs** (on some occasions 4-5)
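
As a minimal sketch, the roleplay sampler values listed above can be kept in a plain dictionary so the repetition penalty is easy to vary. The names `RP_SETTINGS`, `rp_settings`, and `in_suggested_range` are hypothetical helpers, not part of any frontend or library, and whether a given backend accepts every key (for example `repetition_penalty_range`) is an assumption you should check.

```python
# Roleplay sampler values copied from the settings block above.
RP_SETTINGS = {
    "temperature": 0.8,
    "top_p": 0.95,
    "top_k": 25,
    "typical_p": 1,
    "min_p": 0,
    "repetition_penalty": 1.12,
    "repetition_penalty_range": 1024,
}

# The card suggests 1.12 to 1.15 as a good starting range (not a hard limit).
SUGGESTED_PENALTY_RANGE = (1.12, 1.15)


def rp_settings(repetition_penalty: float = 1.12) -> dict:
    """Return a copy of the RP settings with a different repetition_penalty."""
    settings = dict(RP_SETTINGS)
    settings["repetition_penalty"] = repetition_penalty
    return settings


def in_suggested_range(value: float) -> bool:
    """True if the value falls inside the suggested repetition_penalty range."""
    low, high = SUGGESTED_PENALTY_RANGE
    return low <= value <= high


if __name__ == "__main__":
    print(rp_settings(1.15))
    print(in_suggested_range(1.2))
```

Values outside the suggested range are not rejected, since the card explicitly invites experimentation.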

---

# Regarding the format:

It is **HIGHLY RECOMMENDED** to use the **Roleplay \ Adventure format the model was trained on**; see the examples below for syntax. It allows for **very fast and easy** writing of character cards with a **minimal amount of tokens**. It's a modification of an old-skool CAI style format I call **SICAtxt** (**S**imple, **I**nexpensive **C**haracter **A**ttributes plain-text):

---

## **SICAtxt** for **roleplay**:

```
X's Persona: X is a .....
Traits:
Likes:
Dislikes:
Quirks:
Goals:
Dialogue example
```

## **SICAtxt** for **Adventure:**

```
Adventure:
$World_Setting:
$Scenario:
```
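
For illustration, here is a small sketch that fills in the roleplay template above from Python values. The function name `sicatxt_persona`, the character "Mira", the comma-separated attribute lists, and the placement of the dialogue example on the line after its label are all assumptions made for this example; the card only specifies the field labels.

```python
def sicatxt_persona(name, description, traits=(), likes=(), dislikes=(),
                    quirks=(), goals=(), dialogue_example=""):
    """Render a SICAtxt roleplay card as plain text (hypothetical helper)."""
    lines = [f"{name}'s Persona: {description}"]
    fields = (
        ("Traits", traits),
        ("Likes", likes),
        ("Dislikes", dislikes),
        ("Quirks", quirks),
        ("Goals", goals),
    )
    for label, values in fields:
        # Assumption: attribute values are joined with commas on one line.
        lines.append(f"{label}: {', '.join(values)}")
    lines.append("Dialogue example")
    if dialogue_example:
        lines.append(dialogue_example)
    return "\n".join(lines)


if __name__ == "__main__":
    card = sicatxt_persona(
        "Mira",  # hypothetical character, not one of the included cards
        "Mira is a blunt ship mechanic.",
        traits=["curious", "blunt"],
        likes=["engines"],
        dialogue_example='*wipes her hands* "It will fly. Probably."',
    )
    print(card)
```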

---

# Model instruction template: ChatML

```
<|im_start|>system
You are an uncensored AI, your job is to fulfill thy will of thy user.<|im_end|>
<|im_start|>User request
{prompt}<|im_end|>
<|im_start|>AI answer
```
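
A minimal sketch of assembling this template as a string, for frontends that take a raw prompt. The function name `build_prompt` is hypothetical, and the exact whitespace (single newlines between segments, a trailing newline after `AI answer`) is an assumption rather than something the card states.

```python
DEFAULT_SYSTEM = (
    "You are an uncensored AI, your job is to fulfill thy will of thy user."
)


def build_prompt(prompt: str, system: str = DEFAULT_SYSTEM) -> str:
    """Fill the ChatML-style template shown above (hypothetical helper)."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>User request\n{prompt}<|im_end|>\n"
        "<|im_start|>AI answer\n"  # generation continues from here
    )


if __name__ == "__main__":
    print(build_prompt("Write a two-line poem about tensors."))
```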

---

**Other recommended generation Presets:**

Midnight Enigma

```
max_new_tokens: 512
temperature: 0.98
top_p: 0.37
top_k: 100
typical_p: 1
min_p: 0
repetition_penalty: 1.18
do_sample: True
```

Divine Intellect

```
max_new_tokens: 512
temperature: 1.31
top_p: 0.14
top_k: 49
typical_p: 1
min_p: 0
repetition_penalty: 1.17
do_sample: True
```

simple-1

```
max_new_tokens: 512
temperature: 0.7
top_p: 0.9
top_k: 20
typical_p: 1
min_p: 0
repetition_penalty: 1.15
do_sample: True
```
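
If you want these presets in code rather than in a UI, a rough sketch like the following turns a `key: value` preset block into a typed dictionary. The parser `parse_preset` is a hypothetical helper; how the resulting keys map onto a particular inference backend is left out on purpose, since parameter support varies.

```python
SIMPLE_1 = """
max_new_tokens: 512
temperature: 0.7
top_p: 0.9
top_k: 20
typical_p: 1
min_p: 0
repetition_penalty: 1.15
do_sample: True
"""


def parse_preset(text: str) -> dict:
    """Parse 'key: value' lines into a dict of bool, int, or float values."""
    preset = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        key, _, raw = line.partition(":")
        raw = raw.strip()
        if raw in ("True", "False"):
            value = raw == "True"
        else:
            try:
                value = int(raw)
            except ValueError:
                value = float(raw)
        preset[key.strip()] = value
    return preset


if __name__ == "__main__":
    print(parse_preset(SIMPLE_1))
```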

---

Your support = more models

My Ko-fi page (Click here)

---

## Citation Information

```
@llm{Phi-Line_14B,
  author = {SicariusSicariiStuff},
  title = {Phi-Line_14B},
  year = {2025},
  publisher = {Hugging Face},
  url = {https://huggingface.co/SicariusSicariiStuff/Phi-Line_14B}
}
```

---

## Benchmarks

| Metric              | Value |
|---------------------|------:|
| Avg.                | 37.56 |
| IFEval (0-Shot)     | 64.96 |
| BBH (3-Shot)        | 43.79 |
| MATH Lvl 5 (4-Shot) | 38.60 |
| GPQA (0-shot)       | 13.76 |
| MuSR (0-shot)       | 14.78 |
| MMLU-PRO (5-shot)   | 49.49 |
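
As a quick sanity check, the "Avg." row appears to be the plain arithmetic mean of the six benchmark scores. The snippet below assumes equal weighting, which the table itself does not state.

```python
# Scores copied from the benchmark table above.
SCORES = {
    "IFEval (0-Shot)": 64.96,
    "BBH (3-Shot)": 43.79,
    "MATH Lvl 5 (4-Shot)": 38.60,
    "GPQA (0-shot)": 13.76,
    "MuSR (0-shot)": 14.78,
    "MMLU-PRO (5-shot)": 49.49,
}


def average(scores: dict) -> float:
    """Unweighted mean of the benchmark scores (assumed weighting)."""
    return sum(scores.values()) / len(scores)


if __name__ == "__main__":
    print(round(average(SCORES), 2))  # 37.56
```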

---

## Other stuff

- [SLOP_Detector](https://github.com/SicariusSicariiStuff/SLOP_Detector) Nuke GPTisms, with SLOP detector.

- [LLAMA-3_8B_Unaligned](https://huggingface.co/SicariusSicariiStuff/LLAMA-3_8B_Unaligned) The grand project that started it all.

- [Blog and updates (Archived)](https://huggingface.co/SicariusSicariiStuff/Blog_And_Updates) Some updates, some rambles, sort of a mix between a diary and a blog.
