🔥 Official Implementation

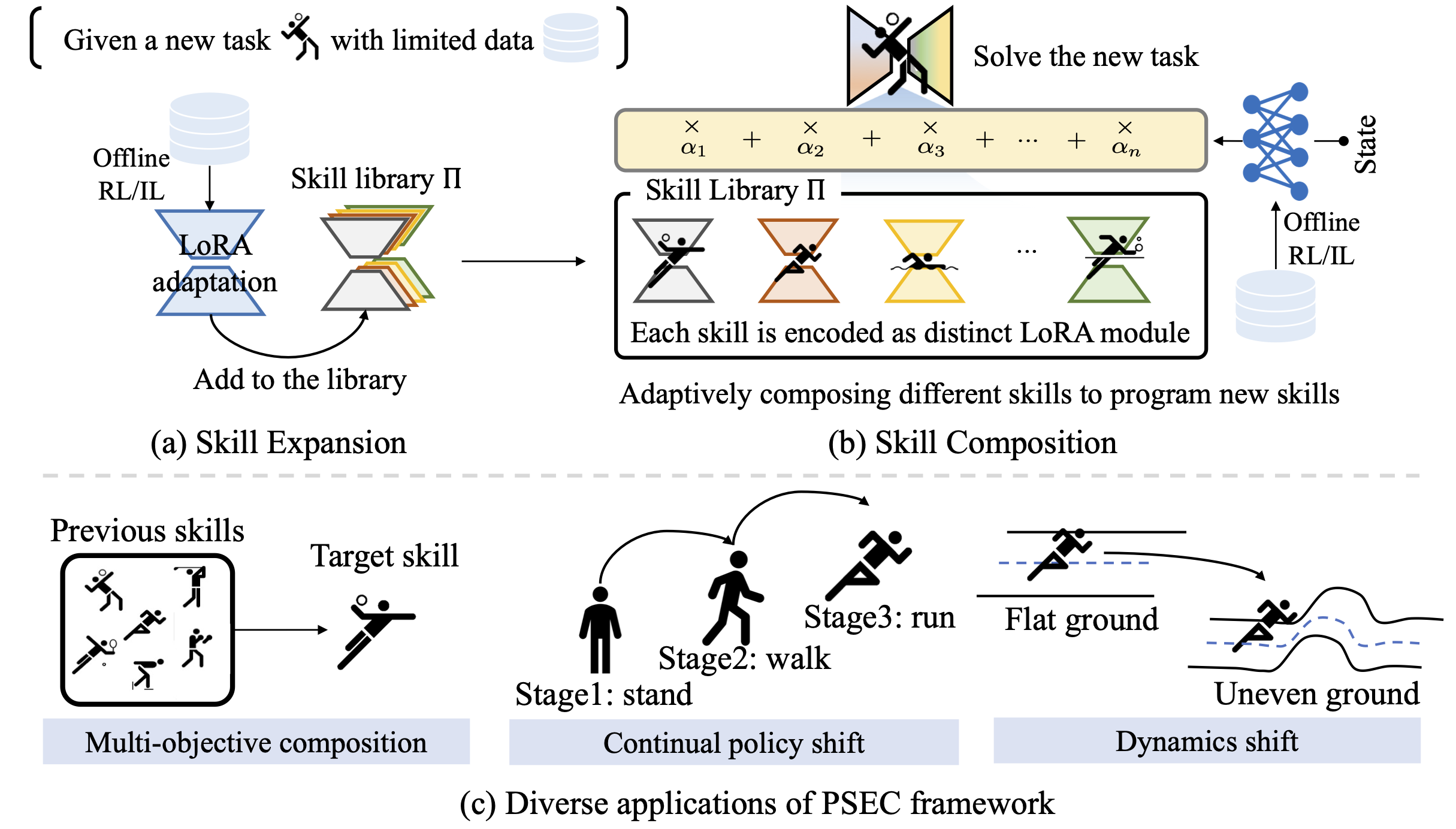

PSEC is a novel framework designed to:

🚀 Facilitate efficient and flexible skill expansion and composition

🔄 Iteratively evolve agents' capabilities

⚡ Efficiently address new challenges