Commit · a4182c5

1 Parent(s): 34efa15

add some more info to the model card

README.md CHANGED

@@ -40,19 +40,24 @@ model-index:

|

|

| 40 |

name: WER

|

| 41 |

---

|

| 42 |

|

| 43 |

-

<!--

|

| 44 |

-

|

| 45 |

|

| 46 |

# Whisper Small Uzbek

|

| 47 |

|

| 48 |

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) trained on the mozilla-foundation/common_voice_11_0 uz and google/fleurs uz_uz datasets, and evaluated on the mozilla-foundation/common_voice_11_0 uz dataset.

|

| 49 |

-

It achieves the following results on the evaluation set:

|

| 50 |

- Loss: 0.3872

|

| 51 |

- Wer: 23.6509

|

|

|

|

|

|

|

| 52 |

|

| 53 |

## Model description

|

| 54 |

|

| 55 |

-

|

|

|

|

|

|

|

|

|

|

| 56 |

|

| 57 |

## Intended uses & limitations

|

| 58 |

|

|

@@ -60,10 +65,14 @@ More information needed

|

|

| 60 |

|

| 61 |

## Training and evaluation data

|

| 62 |

|

| 63 |

-

|

|

|

|

| 64 |

|

| 65 |

## Training procedure

|

| 66 |

|

|

|

|

|

|

|

|

|

|

| 67 |

### Training hyperparameters

|

| 68 |

|

| 69 |

The following hyperparameters were used during training:

|

|

|

|

| 40 |

name: WER

|

| 41 |

---

|

| 42 |

|

| 43 |

+

<!-- Disclaimer: I've never written a model card before. I'm probably not correctly following standard practices on how they should be written.

|

| 44 |

+

I'm new to this. I'm sorry -->

|

| 45 |

|

| 46 |

# Whisper Small Uzbek

|

| 47 |

|

| 48 |

This model is a fine-tuned version of [openai/whisper-small](https://huggingface.co/openai/whisper-small) trained on the mozilla-foundation/common_voice_11_0 uz and google/fleurs uz_uz datasets, and evaluated on the mozilla-foundation/common_voice_11_0 uz dataset.

|

| 49 |

+

It achieves the following results on the common_voice_11_0 evaluation set:

|

| 50 |

- Loss: 0.3872

|

| 51 |

- Wer: 23.6509

|

| 52 |

+

It achieves the following results on the FLEURS evaluation set:

|

| 53 |

+

- Wer: 47.15

|

| 54 |

|

| 55 |

## Model description

|

| 56 |

|

| 57 |

+

This model was created as part of the Whisper fine-tune sprint event.

|

| 58 |

+

Based on eval, this model achieves a WER of 23.6509 against the Common Voice 11 dataset and 47.15 against the FLEURS dataset.

|

| 59 |

+

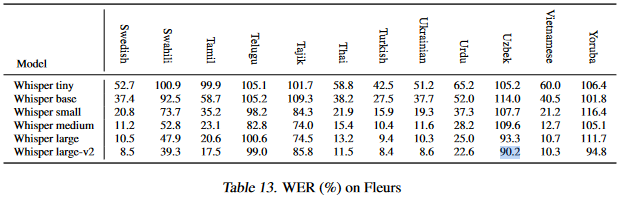

This is a significant improvement over the reported WER of 90.2 recorded on the [Whisper article](https://cdn.openai.com/papers/whisper.pdf):

|

| 60 |

+

|

| 61 |

|

| 62 |

## Intended uses & limitations

|

| 63 |

|

|

|

|

| 65 |

|

| 66 |

## Training and evaluation data

|

| 67 |

|

| 68 |

+

Training was performed using the train and evaluation splits from [Mozilla's Common Voice 11](https://huggingface.co/mozilla-foundation/common_voice_11_0) and [Google's FLEURS](https://huggingface.co/google/fleurs) datasets.

|

| 69 |

+

Testing was performed using the test splits from the same datasets.

|

| 70 |

|

| 71 |

## Training procedure

|

| 72 |

|

| 73 |

+

Training and CV11 testing was performed using a modified version of the [run_speech_recognition_seq2seq_streaming.py](https://github.com/kamfonas/whisper-fine-tuning-event/blob/e0377f55004667f18b37215d11bf0e54f5bda463/run_speech_recognition_seq2seq_streaming.py) script by farsipal, which enabled training on multiple datasets in a convenient way.

|

| 74 |

+

FLEURS testing was performed using the standard [run_eval_whisper_streaming.py](https://github.com/huggingface/community-events/blob/main/whisper-fine-tuning-event/run_eval_whisper_streaming.py) script.

|

| 75 |

+

|

| 76 |

### Training hyperparameters

|

| 77 |

|

| 78 |

The following hyperparameters were used during training:

|
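The WER figures quoted in this diff (23.6509, 47.15, and the reported 90.2) are word error rates in percent. As a rough illustration only, the sketch below computes WER as word-level edit distance divided by reference length, and adds a helper for the relative reduction between two WER values. The function names `word_error_rate` and `relative_reduction` are hypothetical and are not taken from the scripts linked in the commit; those scripts may also normalize text before scoring, which this sketch does not do.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER in percent: word-level edit distance / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference words processed so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # reference word missing from hypothesis (deletion)
                curr[j - 1] + 1,     # extra word in hypothesis (insertion)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return 100.0 * prev[-1] / len(ref)


def relative_reduction(baseline_wer: float, new_wer: float) -> float:
    """Return the relative WER reduction in percent (hypothetical helper)."""
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    # One substitution and one deletion against a four-word reference.
    print(word_error_rate("a b c d", "a x c"))
    # Relative reduction from the reported 90.2 to the FLEURS result in the diff.
    print(relative_reduction(90.2, 47.15))
```

Under these assumptions, going from 90.2 to 47.15 is a relative reduction of a little under 48%. Comparing across datasets (for example 90.2 against the Common Voice figure) is only meaningful if both numbers were measured on the same test set with the same text normalization.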