init

- .gitattributes +1 -0

- README.md +50 -0

- comparison.png +0 -0

- ggml-model-Q4_K_M.gguf +3 -0

- ggml-model-f16.gguf +3 -0

- icon.png +0 -0

- images/example_1.png +0 -0

- images/example_2.png +0 -0

- mmproj-model-f16.gguf +3 -0

- ollama-Q4_K_M +15 -0

- ollama-f16 +15 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*.gguf filter=lfs diff=lfs merge=lfs -text

|
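The added `*.gguf` rule routes every GGUF file in the repository through Git LFS. As a rough illustration, the sketch below uses Python's `fnmatch` to approximate how the simple glob patterns in this hunk select files. Real gitattributes matching has further rules (directory patterns such as `saved_model/**/*`, precedence between lines), so the helper `is_lfs_tracked` and the shortened pattern list are illustrative assumptions, not Git's implementation.

```python
from fnmatch import fnmatch

# Patterns copied from the .gitattributes lines visible in this hunk
# (the full file contains more rules that are not shown here).
LFS_PATTERNS = ["*.zip", "*.zst", "*tfevents*", "*.gguf"]


def is_lfs_tracked(path, patterns=LFS_PATTERNS):
    """Approximate check: does the file's base name match any LFS pattern?"""
    name = path.rsplit("/", 1)[-1]
    return any(fnmatch(name, pattern) for pattern in patterns)


for path in ["ggml-model-f16.gguf", "mmproj-model-f16.gguf", "README.md", "ollama-f16"]:
    print(path, is_lfs_tracked(path))
```

Under these patterns the three `.gguf` files are stored as LFS pointers, while the README and the two Modelfiles stay as ordinary text.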

README.md

ADDED

|

@@ -0,0 +1,50 @@

| 1 |

+

---

|

| 2 |

+

inference: false

|

| 3 |

+

license: apache-2.0

|

| 4 |

+

---

|

| 5 |

+

|

| 6 |

+

# Model Card

|

| 7 |

+

|

| 8 |

+

<p align="center">

|

| 9 |

+

<img src="./icon.png" alt="Logo" width="350">

|

| 10 |

+

</p>

|

| 11 |

+

|

| 12 |

+

📖 [Technical report](https://arxiv.org/abs/2402.11530) | 🏠 [Code](https://github.com/BAAI-DCAI/Bunny) | 🐰 [3B Demo](https://wisemodel.cn/spaces/baai/Bunny) | 🐰 [8B Demo](https://2e09fec5116a0ba343.gradio.live)

|

| 13 |

+

|

| 14 |

+

This is the **GGUF** format of [Bunny-Llama-3-8B-V](https://huggingface.co/BAAI/Bunny-Llama-3-8B-V).

|

| 15 |

+

|

| 16 |

+

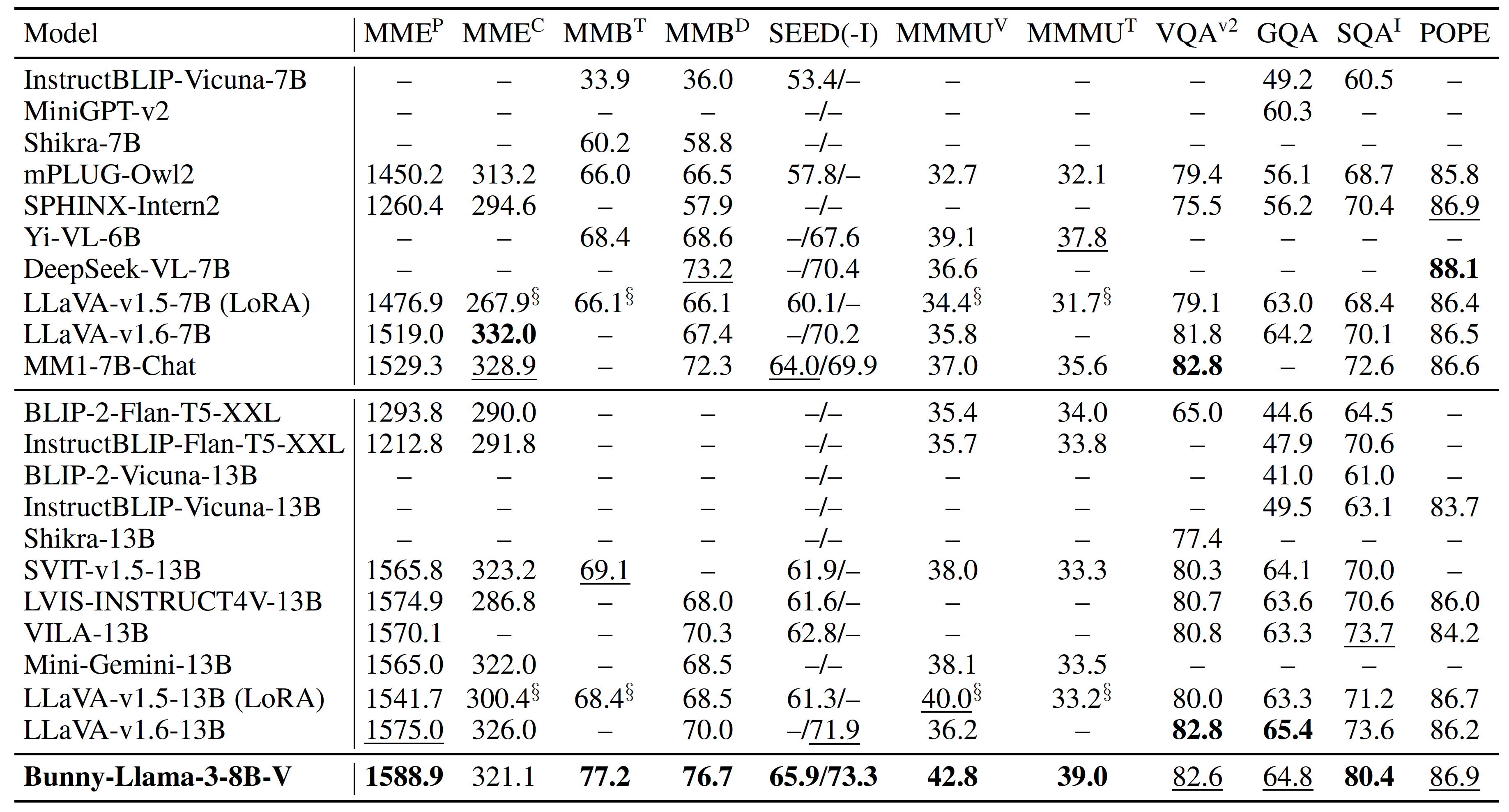

Bunny is a family of lightweight but powerful multimodal models. It offers multiple plug-and-play vision encoders, like EVA-CLIP, SigLIP and language backbones, including Llama-3-8B, Phi-1.5, StableLM-2, Qwen1.5, MiniCPM and Phi-2. To compensate for the decrease in model size, we construct more informative training data by curated selection from a broader data source.

|

| 17 |

+

|

| 18 |

+

We provide Bunny-Llama-3-8B-V, which is built upon [SigLIP](https://huggingface.co/google/siglip-so400m-patch14-384) and [Llama-3-8B-Instruct](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct). More details about this model can be found on [GitHub](https://github.com/BAAI-DCAI/Bunny).

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

# Quickstart

|

| 24 |

+

|

| 25 |

+

## Chat by [`llama.cpp`](https://github.com/ggerganov/llama.cpp)

|

| 26 |

+

|

| 27 |

+

```shell

|

| 28 |

+

# sample images are in the images folder; adjust the --image path to match your working directory

|

| 29 |

+

|

| 30 |

+

# fp16

|

| 31 |

+

./llava-cli -m ggml-model-f16.gguf --mmproj mmproj-model-f16.gguf --image example_2.png -c 4096 -p "Why is the image funny?" --temp 0.0

|

| 32 |

+

|

| 33 |

+

# int4

|

| 34 |

+

./llava-cli -m ggml-model-Q4_K_M.gguf --mmproj mmproj-model-f16.gguf --image example_2.png -c 4096 -p "Why is the image funny?" --temp 0.0

|

| 35 |

+

```

|

| 36 |

+

|

| 37 |

+

## Chat by [ollama](https://ollama.com/)

|

| 38 |

+

|

| 39 |

+

```shell

|

| 40 |

+

# sample images are in the images folder; adjust the image path in the prompt to match your working directory

|

| 41 |

+

|

| 42 |

+

# fp16

|

| 43 |

+

ollama create Bunny-Llama-3-8B-V-fp16 -f ./ollama-f16

|

| 44 |

+

ollama run Bunny-Llama-3-8B-V-fp16 "example_2.png Why is the image funny?"

|

| 45 |

+

|

| 46 |

+

# int4

|

| 47 |

+

ollama create Bunny-Llama-3-8B-V-int4 -f ./ollama-Q4_K_M

|

| 48 |

+

ollama run Bunny-Llama-3-8B-V-int4 "example_2.png Why is the image funny?"

|

| 49 |

+

```

|

| 50 |

+

|
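The two `llava-cli` commands in the README differ only in the `-m` weights file. A minimal sketch of building that invocation from Python is shown below, using only the flags that appear in the README. The helper name `llava_cli_args` and the `images/example_2.png` path are assumptions for illustration (the README passes `example_2.png` directly), and the sketch only prints the command rather than running it.

```python
import shlex
from typing import List


def llava_cli_args(model: str, image: str, prompt: str) -> List[str]:
    """Build the argv list for the llava-cli call shown in the README."""
    return [
        "./llava-cli",
        "-m", model,                          # language-model weights (f16 or Q4_K_M)
        "--mmproj", "mmproj-model-f16.gguf",  # projector file, same for both variants
        "--image", image,
        "-c", "4096",                         # context size, as in the README
        "-p", prompt,
        "--temp", "0.0",
    ]


args = llava_cli_args(
    "ggml-model-Q4_K_M.gguf",
    "images/example_2.png",  # assumed path; adjust to where the image lives
    "Why is the image funny?",
)
print(shlex.join(args))
# To execute (requires a built llava-cli in the working directory):
#   import subprocess; subprocess.run(args, check=True)
```

Passing the arguments as a list avoids shell-quoting problems with the prompt text.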

comparison.png

ADDED

|

ggml-model-Q4_K_M.gguf

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:88f0a61f947dbf129943328be7262ae82e3a582a0c75e53544b07f70355a7c30

|

| 3 |

+

size 4921246976

|
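The three lines added for this file are a Git LFS pointer, not the model itself: a spec version, a SHA-256 object ID, and the byte size of the real file. A small sketch of reading those fields follows; `parse_lfs_pointer` is a hypothetical helper, and it assumes the simple `key value` layout seen in this diff.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the 'key value' lines of a Git LFS pointer file."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algorithm, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


# Pointer content copied from the ggml-model-Q4_K_M.gguf hunk above.
POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:88f0a61f947dbf129943328be7262ae82e3a582a0c75e53544b07f70355a7c30
size 4921246976
"""

info = parse_lfs_pointer(POINTER)
print(info["algorithm"], info["size"])
```

After downloading the real file, its SHA-256 digest and size can be compared against these values to confirm the download is intact.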

ggml-model-f16.gguf

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e9ff2d9f6e36a2c117fce8311d97e50db6abc11fcac591c08203db11cba0df0b

|

| 3 |

+

size 16069403872

|

icon.png

ADDED

|

|

images/example_1.png

ADDED

|

images/example_2.png

ADDED

|

mmproj-model-f16.gguf

ADDED

|

@@ -0,0 +1,3 @@

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:96d033387a91e56cf97fa5d60e02c0128ce07c8fa83aaaefb74ec40541615ea5

|

| 3 |

+

size 877771936

|
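The `size` fields of the three LFS pointers give a quick view of what each variant costs to download. The sketch below converts them to GiB and compares the quantized and fp16 weights. The byte counts are copied from the pointers in this commit; the helper `download_bytes` is an illustrative name, and it assumes each variant is used together with the one projector file, as the README commands and Modelfiles do.

```python
GIB = 1024 ** 3

# Byte sizes copied from the Git LFS pointers in this commit.
SIZES = {
    "ggml-model-Q4_K_M.gguf": 4921246976,
    "ggml-model-f16.gguf": 16069403872,
    "mmproj-model-f16.gguf": 877771936,
}


def download_bytes(weights: str) -> int:
    """Bytes for one language-model file plus the shared projector file."""
    return SIZES[weights] + SIZES["mmproj-model-f16.gguf"]


for name, size in SIZES.items():
    print(f"{name}: {size / GIB:.2f} GiB")

ratio = SIZES["ggml-model-Q4_K_M.gguf"] / SIZES["ggml-model-f16.gguf"]
print(f"Q4_K_M / f16 size ratio: {ratio:.3f}")
```

The Q4_K_M weights come to roughly 4.6 GiB against about 15.0 GiB for fp16, so the quantized file is a little under a third of the fp16 size.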

ollama-Q4_K_M

ADDED

|

@@ -0,0 +1,15 @@

| 1 |

+

FROM ./ggml-model-Q4_K_M.gguf

|

| 2 |

+

FROM ./mmproj-model-f16.gguf

|

| 3 |

+

|

| 4 |

+

SYSTEM """

|

| 5 |

+

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

TEMPLATE """

|

| 9 |

+

{{ .System }}

|

| 10 |

+

USER: {{ .Prompt }}

|

| 11 |

+

ASSISTANT:

|

| 12 |

+

"""

|

| 13 |

+

|

| 14 |

+

PARAMETER temperature 0.0

|

| 15 |

+

PARAMETER num_ctx 4096

|
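The `TEMPLATE` block in this Modelfile defines how the system text and the user prompt are laid out before generation. ollama evaluates it as a Go template; the sketch below only imitates that with plain string replacement for the two placeholders used here, and it ignores how ollama treats the newlines just inside the triple quotes. The function name `render` is an assumption for illustration.

```python
SYSTEM = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)

# Same layout as the TEMPLATE block in the Modelfile.
TEMPLATE = "{{ .System }}\nUSER: {{ .Prompt }}\nASSISTANT:\n"


def render(prompt: str, system: str = SYSTEM, template: str = TEMPLATE) -> str:
    """Substitute the two placeholders used by the Modelfile template."""
    return template.replace("{{ .System }}", system).replace("{{ .Prompt }}", prompt)


print(render("Why is the image funny?"))
```

The rendered text ends with `ASSISTANT:` so that the model continues from the assistant's turn.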

ollama-f16

ADDED

|

@@ -0,0 +1,15 @@

| 1 |

+

FROM ./ggml-model-f16.gguf

|

| 2 |

+

FROM ./mmproj-model-f16.gguf

|

| 3 |

+

|

| 4 |

+

SYSTEM """

|

| 5 |

+

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

TEMPLATE """

|

| 9 |

+

{{ .System }}

|

| 10 |

+

USER: {{ .Prompt }}

|

| 11 |

+

ASSISTANT:

|

| 12 |

+

"""

|

| 13 |

+

|

| 14 |

+

PARAMETER temperature 0.0

|

| 15 |

+

PARAMETER num_ctx 4096

|
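The `ollama-f16` and `ollama-Q4_K_M` files are identical apart from the first `FROM` line. One way to keep them in sync is to generate both from a single template, sketched below with Python's `string.Template`. The names `MODELFILE` and `modelfile` are illustrative assumptions, and the text is copied from the Modelfiles in this commit.

```python
from string import Template

# $weights is the only part that differs between the two Modelfiles.
MODELFILE = Template('''\
FROM ./$weights
FROM ./mmproj-model-f16.gguf

SYSTEM """
A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.
"""

TEMPLATE """
{{ .System }}
USER: {{ .Prompt }}
ASSISTANT:
"""

PARAMETER temperature 0.0
PARAMETER num_ctx 4096
''')


def modelfile(weights: str) -> str:
    """Return the Modelfile text for the given language-model weights file."""
    return MODELFILE.substitute(weights=weights)


f16 = modelfile("ggml-model-f16.gguf")
q4 = modelfile("ggml-model-Q4_K_M.gguf")

# List the lines on which the two generated files differ.
differing = [(a, b) for a, b in zip(f16.splitlines(), q4.splitlines()) if a != b]
print(differing)
```

Writing each result to a file and passing it to `ollama create ... -f <file>` would mirror the two commands in the README.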